Computational Philosophy

Computational philosophy is the use of mechanized computational techniques to instantiate, extend, and amplify philosophical research. Computational philosophy is not philosophy of computers or computational techniques; it is rather philosophy using computers and computational techniques. The idea is simply to apply advances in computer technology and techniques to advance discovery, exploration and argument within any philosophical area.

After touching on historical precursors, this article discusses contemporary computational philosophy across a variety of fields: epistemology, metaphysics, philosophy of science, ethics and social philosophy, philosophy of language and philosophy of mind, often with examples of operating software. Far short of any attempt at an exhaustive treatment, the intention is to introduce the spirit of each application by using some representative examples.

- 1. Introduction

- 2. Anticipations in Leibniz

- 3. Computational Philosophy by Example

- 3.1 Social Epistemology and Agent-Based Modeling

- 3.2 Computational Philosophy of Science

- 3.3 Ethics and Social-Political Philosophy

- 3.4 Computational Philosophy of Language

- 3.5 From Theorem-Provers to Ethical Reasoning, Metaphysics, and Philosophy of Religion

- 3.6 Artificial Intelligence and Philosophy of Mind

- 4. Evaluating Computational Philosophy

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. Introduction

Computational philosophy is not an area or subdiscipline of philosophy but a set of computational techniques applicable across many philosophical areas. The idea is simply to apply computational modeling and techniques to advance philosophical discovery, exploration and argument. One should not therefore expect a sharp break between computational and non-computational philosophy, nor a sharp break between computational philosophy and other computational disciplines.

The past half-century has seen impressive advances in raw computer power as well as theoretical advances in automated theorem proving, agent-based modeling, causal and system dynamics, neural networks, machine learning and data mining. What might contemporary computational technologies and techniques have to offer in advancing our understanding of issues in epistemology, ethics, social and political philosophy, philosophy of language, philosophy of mind, philosophy of science, or philosophy of religion?[1] Suggested by Leibniz and with important precursors in the history of formal logic, the idea is to apply new computational advances within long-standing areas of philosophical interest.

Computational philosophy is not the philosophy of computation, an area that asks about the nature of computation itself. Although applicable and informative regarding artificial intelligence, computational philosophy is not the philosophy of artificial intelligence. Nor is it an umbrella term for the questions about the social impact of computer use explored for example in philosophy of information, philosophy of technology, and computer ethics. More generally, there is no “of” that computational philosophy can be said to be the philosophy of. Computational philosophy represents not an isolated topic area but the widespread application of whatever computer techniques are available across the full range of philosophical topics. Techniques employed in computational philosophy may draw from standard computer programming and software engineering, including aspects of artificial intelligence, neural networks, systems science, complex adaptive systems, and a variety of computer modeling methods. As a growing set of methodologies, it includes the prospect of computational textual analysis, big data analysis, and other techniques as well. Its field of application is equally broad, unrestricted within the traditional discipline and domain of philosophy.

This article is an introduction to computational philosophy rather than anything like a complete survey. The goal is to offer a handful of suggestive examples across computational techniques and fields of philosophical application.

2. Anticipations in Leibniz

The only way to rectify our reasonings is to make them as tangible as those of the Mathematicians, so that we can find our error at a glance, and when there are disputes among persons, we can simply say: Let us calculate, without further ado, to see who is right. —Leibniz, The Art of Discovery (1685 [1951: 51])

Formalization of philosophical argument has a history as old as logic.[2] Logic is the historical source and foundation of contemporary computing.[3] Our topic here is more specific: the application of contemporary computing to a range of philosophical questions. But that too has a history, evident in Leibniz’s vision of the power of computation.

Leibniz is known for both the development of formal techniques in philosophy and the design and production of actual computational machinery. In 1642, the philosopher Blaise Pascal had invented the Pascaline, designed to add with carry and subtract. Between 1673 and 1720 Leibniz designed a series of calculating machines intended to instantiate multiplication and division as well: the stepped reckoner, employing what is still known as the Leibniz wheel (Martin 1925). The sole surviving Leibniz stepped reckoner was discovered in 1879 as workmen were fixing a leaking roof at the University of Göttingen. In correspondence, Leibniz alluded to a cryptographic encoder and decoder using the same mechanical principles. On the basis of those descriptions, Nicholas Rescher has produced a working conjectural reconstruction (Rescher 2012).

But Leibniz had visions for the power of computation far beyond mere arithmetic and cryptography. Leibniz’s 1666 Dissertatio De Arte Combinatoria trumpets the “art of combinations” as a method of producing novel ideas and inventions as well as analyzing complex ideas into simpler elements (Leibniz 1666 [1923]). Leibniz describes it as the “mother of inventions” that would lead to the “discovery of all things”, with applications in logic, law, medicine, and physics. The vision was of a set of formal methods applied within a perfect language of pure concepts which would make possible the general mechanization of reason (Gray 2016).[4]

The specifics of Leibniz’s combinatorial vision can be traced back to the mystical mechanisms of Raymond Llull circa 1308, combinatorial mechanisms lampooned in Jonathan Swift’s Gulliver’s Travels of 1726 as allowing one to

write books in philosophy, poetry, politics, mathematics, and theology, without the least assistance from genius or study. (Swift 1726: 174, Lem 1964 [2013: 359])

Combinatorial specifics aside, however, Leibniz’s vision of an application of computational methods to substantive questions remains. It is the vision of computational physics, computational biology, computational social science, and—in application to perennial questions within philosophy—of computational philosophy.

3. Computational Philosophy by Example

Despite Leibniz’s hopes for a single computational method that would serve as a universal key to discovery, computational philosophy today is characterized by a number of distinct computational approaches to a variety of philosophical questions. Particular questions and particular areas have simply seemed ripe for various models, methodologies, or techniques. Both attempts and results are therefore scattered across a range of different areas. In what follows we offer a survey of various explorations in computational philosophy.

3.1 Social Epistemology and Agent-Based Modeling

Computational philosophy is perhaps most easily introduced by focusing on applications of agent-based modeling to questions in social epistemology, social and political philosophy, philosophy of science, and philosophy of language. Sections 3.1 through 3.3 are therefore structured around examples of agent-based modeling in these areas. Other important computational approaches and other areas are discussed in 3.4 through 3.6.

Traditional epistemology—the epistemology of Plato, Hume, Descartes, and Kant—treats the acquisition and validation of knowledge on the individual level. The question for traditional epistemology was always how I as an individual can acquire knowledge of the objective world, when all I have to work with is my subjective experience. Perennial questions of individual epistemology remain, but the last few decades have seen the rise of a very different form of epistemology as well. Anticipated in early work by Alvin I. Goldman, Helen Longino, Philip Kitcher, and Miriam Solomon, social epistemology is now evident both within dedicated journals and across philosophy quite generally (Goldman 1987; Longino 1990; Kitcher 1993; Solomon 1994a, 1994b; Goldman & Whitcomb 2011; Goldman & O’Connor 2001 [2019]; Longino 2019). I acquire my knowledge of the world as a member of a social group: a group that includes those inquirers that constitute the scientific enterprise, for example. In order to understand the acquisition and validation of knowledge we have to go beyond the level of individual epistemology: we need to understand the social structure, dynamics, and process of scientific investigation. It is within this social turn in epistemology that the tools of computational modelling—agent-based modeling in particular—become particularly useful (Klein, Marx and Fischbach 2018).

The following two sections use computational work on belief change as an introduction to agent-based modeling in social epistemology. Closely related questions regarding scientific communication are left to sections 3.2.2 and 3.2.3.

3.1.1 Belief change and opinion polarization

How should we expect beliefs and opinions to change within a social group? How might they rationally change? The computational approach to these kinds of questions attempts to understand basic dynamics of the target phenomenon by building, running, and analyzing simulations. Simulations may start with a model of interactive dynamics and initial conditions, which might include, for example, the initial beliefs of individual agents and how prone those agents are to share information and listen to others. The computer calculates successive states of the model (“steps”) as a function (typically stochastic) of preceding stages. Researchers collect and analyze simulation outputs, which might include, for example, the distribution of beliefs in the simulated society after a certain number of rounds of communication. Because simulations typically involve many stochastic elements (which agents talk with which agents at what point in the simulation, what specific beliefs specific agents start with, etc.), data is usually collected and analyzed across a large number of simulation runs.

One model of belief change and opinion polarization that has been of wide interest is that of Hegselmann and Krause (2002, 2005, 2006), which offers a clear and simple example of the application of agent-based techniques.

Opinions in the Hegselmann-Krause model are mapped as numbers in the [0, 1] interval, with initial opinions spread uniformly at random in an artificial population. Individuals update their beliefs by taking an average of the opinions that are “close enough” to an agent’s own. As agents’ beliefs change, a different set of agents or a different set of values can be expected to influence further updating. A crucial parameter in the model is the threshold of what counts as “close enough” for actual influence.[5]
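The update rule just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than Hegselmann and Krause's own code: updating is taken to be synchronous, every agent shares the same threshold `eps`, and the function names, population size, stopping tolerance, and the cluster-counting helper are all choices made here for illustration.

```python
import random

def hk_step(opinions, eps):
    """One synchronous update: each agent adopts the mean of all
    opinions (its own included) that lie within eps of its own."""
    updated = []
    for x in opinions:
        close = [y for y in opinions if abs(x - y) <= eps]
        updated.append(sum(close) / len(close))
    return updated

def run_hk(n_agents=50, eps=0.15, max_steps=1000, tol=1e-9, seed=0):
    """Start from uniformly random opinions in [0, 1) and iterate
    until no opinion moves by more than tol (assumed stopping rule)."""
    rng = random.Random(seed)
    opinions = [rng.random() for _ in range(n_agents)]
    for _ in range(max_steps):
        updated = hk_step(opinions, eps)
        if max(abs(a - b) for a, b in zip(opinions, updated)) < tol:
            break
        opinions = updated
    return opinions

def count_clusters(opinions, gap=1e-3):
    """Count groups of final opinions separated by more than gap."""
    xs = sorted(opinions)
    return 1 + sum(1 for a, b in zip(xs, xs[1:]) if b - a > gap)

if __name__ == "__main__":
    for eps in (0.01, 0.15, 0.25):
        print(eps, count_clusters(run_hk(eps=eps)))
```

Because initial opinions are random, the number of final groups for a given threshold varies with the seed; any pattern should be read off many runs, not one.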

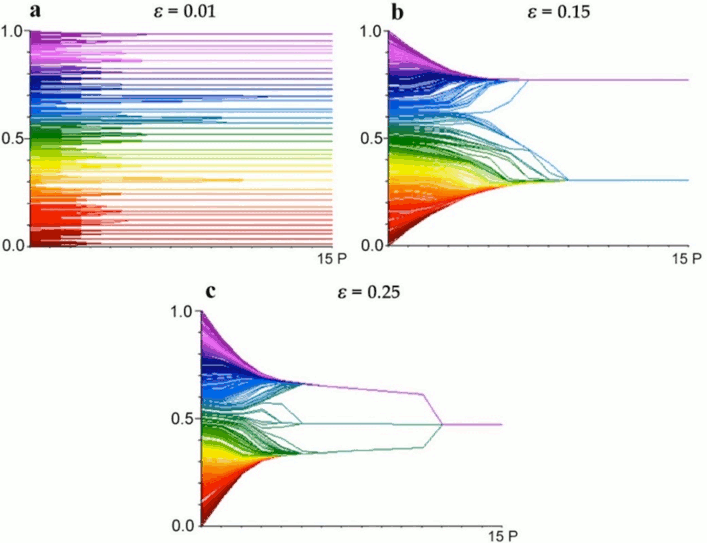

Figure 1 shows the changes in agent opinions over time in single runs with thresholds ε set at 0.01, 0.15, and 0.25 respectively. With a threshold of 0.01, individuals remain isolated in a large number of small local groups. With a threshold of 0.15, the agents form two permanent groups. With a threshold of 0.25, the groups fuse into a single consensus opinion. These are representative cases, and individual runs vary slightly. As might be expected, all results depend on both the number of individual agents and their initial random locations across the opinion space. See the interactive simulation of the Hegselmann and Krause bounded confidence model in the Other Internet Resources section below.

Figure 1: Example changes in opinion across time from single runs with different threshold values \(\varepsilon \in \{0.01, 0.15, 0.25\}\) in the Hegselmann and Krause (2002) model. [An extended description of figure 1 is in the supplement.]

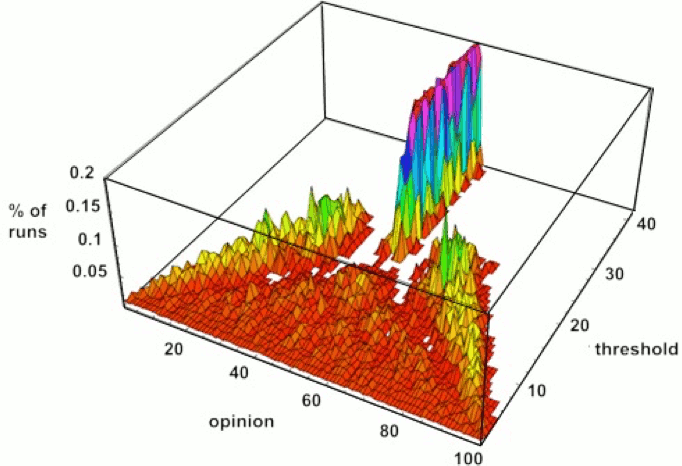

An illustration of average outcomes for different threshold values appears as figure 2. What is represented here is not change over time but rather the final opinion positions given different threshold values. As the threshold value climbs from 0 to roughly 0.20, there is an increasing number of results with concentrations of agents at the outer edges of the distribution, which themselves are moving inward. Between 0.22 and 0.26 there is a quick transition from results with two final groups to results with a single final group. For values still higher, the two sides are sufficiently within reach that they coalesce on a central consensus, although the exact location of that final monolithic group changes from run to run creating the fat central spike shown. Hegselmann and Krause describe the progression of outcomes with an increasing threshold as going through three phases: “from fragmentation (plurality) over polarisation (polarity) to consensus (conformity).” (2002: 11, authors’ italics)

Figure 2: Frequency of equilibrium opinion positions for different threshold values in the Hegselmann and Krause model scaled to [0, 100] (as original with axes relabeled; Hegselmann and Krause 2002). [An extended description of figure 2 is in the supplement.]

A number of models further refine the “bounded confidence” mechanisms of the Hegselmann-Krause model. Deffuant et al., for example, replace the sharp cutoff of influence in Hegselmann-Krause with continuous influence values (Deffuant et al. 2002; Deffuant 2006; Meadows & Cliff 2012). Agents are again assigned both opinion values and threshold (“uncertainty”) ranges, but the extent to which the opinion of agent i is influential on agent j is proportional to the ratio of the overlap of their ranges (opinion plus or minus threshold) over i’s range. Opinion centers and threshold ranges are updated accordingly, resulting in the possibility of individuals with narrower and wider ranges. Given the updating algorithm, influence may also be asymmetric: individuals with a narrower range of tolerance, which Deffuant et al. interpret as higher confidence or lower uncertainty, will be more influential on individuals with a wider range than vice versa. The influence on polarization of “stubborn” individuals who do not change, and of agents on extremes, has also been studied, showing a clear impact on the dynamics of belief change in the group.[6]
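The asymmetry of influence can be made concrete with a short sketch. The rule below follows one common statement of the “relative agreement” update and should be read as an assumption, not as a transcription of Deffuant et al.'s code: the overlap `h` of the two ranges is compared with the speaker's uncertainty `u_i`, and the listener moves in proportion to `h / u_i - 1`. The function names and the rate parameter `mu` are illustrative.

```python
def overlap(x_i, u_i, x_j, u_j):
    """Length of the overlap between [x_i - u_i, x_i + u_i] and
    [x_j - u_j, x_j + u_j]; negative if the ranges are disjoint."""
    return min(x_i + u_i, x_j + u_j) - max(x_i - u_i, x_j - u_j)

def influence(x_i, u_i, x_j, u_j, mu=0.5):
    """Return listener j's (opinion, uncertainty) after hearing speaker i.
    Assumed rule: no effect unless the overlap exceeds i's uncertainty."""
    h = overlap(x_i, u_i, x_j, u_j)
    if h <= u_i:
        return x_j, u_j
    agreement = h / u_i - 1  # relative agreement, between 0 and 1
    new_x = x_j + mu * agreement * (x_i - x_j)
    new_u = u_j + mu * agreement * (u_i - u_j)
    return new_x, new_u

if __name__ == "__main__":
    confident = (0.5, 0.1)  # narrow range: low uncertainty
    uncertain = (0.6, 0.4)  # wide range: high uncertainty
    print(influence(*confident, *uncertain))  # uncertain agent moves
    print(influence(*uncertain, *confident))  # confident agent does not
```

With these illustrative values the confident agent pulls the uncertain one toward itself and narrows its range, while the reverse interaction leaves the confident agent unchanged.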

Eric Olsson and Sofi Angere have developed a sophisticated program in which the interaction of agents is modelled within a Bayesian network of both information and trust (Olsson 2011). Their program, Laputa, has a wide range of applications, one of which is a model of polarization interpreted in terms of the Persuasive Argument Theory in psychology and which replicates an effect seen in empirical studies: the increasing divergence of polarized groups (Lord, Ross, & Lepper 1979; Isenberg 1986; Olsson 2013). Olsson raises the question of whether polarization may be epistemically rational, offering a positive answer. O’Connor and Weatherall (2018) and Singer et al. (2019) also argue that polarization can be rational, using different models and perhaps different senses of polarization (Bramson et al. 2017). Kevin Dorst uses simulation as part of an argument that polarization can be a predictable result if fully rational agents, while aiming for accuracy, selectively find flaws in evidence opposed to their current view. Initial divergences, he argues, can be the result of iterated Bayesian updating on ambiguous evidence (Dorst 2023).

The topic of polarization is anticipated in an earlier tradition of cellular automata models initiated by Robert Axelrod. The basic premise of Axelrod (1997) is that people tend to interact more with those like themselves and tend to become more like those with whom they interact. But if people come to share one another’s beliefs (or other cultural features) over time, why do we not observe complete cultural convergence? At the core of Axelrod’s model is a spatially instantiated imitative mechanism that produces cultural convergence within local groups but also results in progressive differentiation and cultural isolation between groups.

100 agents are arranged on a \(10 \times 10\) lattice such as that illustrated in Figure 3. Each agent is connected to four others: top, bottom, left, and right. The exceptions are those at the edges or corners of the array, connected to only three and two neighbors, respectively. Agents in the model have multiple cultural “features”, each of which carries one of multiple possible “traits”. One can think of the features as categorical variables and the traits as options or values within each category. For example, the first feature might represent culinary tradition, the second one the style of dress, the third music, and so on. In the base configuration an agent’s “culture” is defined by five features \((F = 5)\) each having one of 10 traits \((q =10),\) numbered 0 through 9. Agent x might have \(\langle 8, 7, 2, 5, 4\rangle\) as a cultural signature while agent y is characterized by \(\langle 1, 4, 4, 8, 4\rangle\). Agents are fixed in their lattice location and hence their interaction partners. Agent interaction and imitation rates are determined by neighbor similarity, where similarity is measured as the percentage of feature positions that carry identical traits. With five features, if a pair of agents share exactly one such element they are 20% similar; if two elements match then they are 40% similar, and so forth. In the example just given, agents x and y have a similarity of 20% because they carry the same trait on only one feature, the fifth.

| 41846 | 09617 | 06227 | 73975 | 78196 | 98865 | 67856 | 39579 | 46292 | 39070 |
| 95667 | 34557 | 85463 | 49129 | 83446 | 31042 | 78640 | 70518 | 61745 | 96211 |
| 47298 | 86948 | 54261 | 75923 | 02665 | 97330 | 67790 | 69719 | 45520 | 37354 |
| 09575 | 72785 | 94991 | 70805 | 04952 | 52299 | 99741 | 12929 | 18932 | 81593 |
| 02029 | 94602 | 14852 | 94392 | 83121 | 84309 | 33260 | 44121 | 19166 | 73581 |
| 84484 | 93579 | 09052 | 12567 | 72371 | 08352 | 25212 | 39743 | 45785 | 55341 |
| 69263 | 94414 | 25246 | 68061 | 12208 | 44813 | 02717 | 90699 | 94938 | 05728 |
| 98129 | 44971 | 86427 | 26499 | 05885 | 45788 | 40317 | 08520 | 35527 | 73303 |
| 18261 | 18215 | 70977 | 15211 | 92822 | 74561 | 60786 | 34255 | 07420 | 42317 |
| 30487 | 23057 | 24656 | 03204 | 60418 | 56359 | 57759 | 01783 | 21967 | 84773 |

Figure 3: Typical initial set of “cultures” for a basic Axelrod-style model consisting of 100 agents on a \(10 \times 10\) lattice with five features and 10 possible traits per agent. The marked site shares two of five traits with the site above it, giving it a cultural similarity score of 40% (Axelrod 1997).

For each iteration, the model picks at random an agent to be active and one of its neighbors. With probability equal to their cultural similarity, the two sites interact and the active agent changes one of its dissimilar elements to that of its neighbor. If agent \(i = \langle 8, 7, 2, 5, 4\rangle\) is chosen to be active and it is paired with its neighbor agent \(j = \langle 8, 4, 9, 5, 1\rangle,\) for example, the two will interact with a 40% probability because they have two elements in common. If the interaction does happen, agent i changes one of its mismatched elements to match that of j, becoming perhaps \(\langle 8, 7, 2, 5, 1\rangle.\) This change creates a similarity score of 60%, yielding an increased probability of future interaction between the two.
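The similarity measure and the single-iteration rule can be sketched as follows. This is an illustrative reading of the description above, not Axelrod's original implementation; the function names, the list-of-lists lattice representation, and the seeded random generator are assumptions made here.

```python
import random

def similarity(a, b):
    """Fraction of feature positions on which two cultures agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

def neighbors(r, c, size):
    """Top, bottom, left, right neighbors on a bounded lattice
    (edge sites have three neighbors, corner sites two)."""
    cand = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
    return [(i, j) for i, j in cand if 0 <= i < size and 0 <= j < size]

def make_grid(size=10, features=5, traits=10, seed=0):
    """Random initial cultures: one list of trait values per site."""
    rng = random.Random(seed)
    return [[[rng.randrange(traits) for _ in range(features)]
             for _ in range(size)] for _ in range(size)]

def step(grid, rng):
    """Pick a random active site and one of its neighbors; with
    probability equal to their similarity, the active site copies
    one trait on which the two currently differ."""
    size = len(grid)
    r, c = rng.randrange(size), rng.randrange(size)
    nr, nc = rng.choice(neighbors(r, c, size))
    active, other = grid[r][c], grid[nr][nc]
    differing = [k for k in range(len(active)) if active[k] != other[k]]
    if differing and rng.random() < similarity(active, other):
        k = rng.choice(differing)
        active[k] = other[k]

if __name__ == "__main__":
    i, j = [8, 7, 2, 5, 4], [8, 4, 9, 5, 1]
    print(similarity(i, j))                # two of five positions match
    print(similarity([8, 7, 2, 5, 1], j))  # three match after one copy
```

Sites with no traits in common never interact, and identical sites have nothing left to copy, which is why the process can settle into mutually isolated regions.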

In the course of approximately 80,000 iterations, Axelrod’s model produces large areas in which cultural features are identical: local convergence. It is also true, however, that arrays such as that illustrated do not typically move to full convergence. They instead tend to produce a small number of culturally isolated stable regions—groups of identical agents none of whom share features in common with adjacent groups and so cannot further interact. As an array develops, agents interact with increasing frequency with those with whom they become increasingly similar, interacting less frequently with the dissimilar agents. With only a mechanism of local convergence, small pockets of similar agents emerge that move toward their own homogeneity and away from that of other groups. With the parameters described above, Axelrod reports a median of three stable regions at equilibrium. It is this phenomenon of global separation that Axelrod refers to as “polarization”. See the interactive simulation of the Axelrod polarization model in the Other Internet Resources section below.

Axelrod notes a number of intriguing results from the model, many of which have been further explored in later work. Results are very sensitive to the number of features F and traits q used as parameters, for example. Changing numbers of features and traits changes the final number of stable regions in opposite directions: the number of stable regions correlates negatively with the number of features F but positively with the number of traits q (Klemm et al. 2003). In Axelrod’s base case with \(F = 5\) and \(q = 10\) on a \(10 \times 10\) lattice, the result is a median of three stable regions. When q is increased from 10 to 15, the number of final regions increases from three to 20; increasing the number of traits increases the number of stable groups dramatically. If the number of features F is increased to 15, in contrast, the average number of stable regions drops to only 1.2 (Axelrod 1997). Further explorations of parameters of population size, configuration, and dynamics, with measures of relative size of resultant groups, appear in Klemm et al. (2003a, b, c, 2005) and in Centola et al. (2007).

One result that computational modeling promises regarding a phenomenon such as opinion polarization is an understanding of the phenomenon itself: how real opinion polarization might happen, and how it might be avoided. Another and very different outcome, however, is created by the fact that computational modeling both offers and demands precision about concepts and measures that may otherwise be lacking in theory. Bramson et al. (2017), for example, argue that “polarization” has a range of possible meanings across the literature in which it appears, different aspects of which are captured by different computational models with different measures.

3.1.2 The social dynamics of argument

In general, the social dynamics of belief change reviewed above treats beliefs as items that spread by contact, much on the model of infection dynamics (Grim, Singer, Reade, & Fisher 2015, though Riegler & Douven 2009 can be seen as an exception). Other attempts have been made to model belief change in greater detail, motivated by reasons or arguments.

With gestures toward earlier work by Phan Minh Dung (1995), Gregor Betz constructs a model of belief change based on “dialectical structures” of linked arguments (Betz 2013). Sentences and their negations are represented as positive and negative numbers, and arguments as ordered sets of sentences. There are two forms of links between arguments: an attack relation, in which the conclusion of one argument contradicts a premise of another, and a support relation, in which the conclusion of one argument is equivalent to a premise of another (Figure 4). A “position” on a dialectical structure, complete or partial, consists of an assignment of truth values T or F to the elements of the set of sentences involved. Consistent positions relative to a structure are those in which contradictory sentences are assigned opposite truth values and every argument in which all premises are assigned T has a conclusion which is assigned T as well. Betz then maps the space of coherent positions for a given dialectical structure as an undirected network, with links between positions that differ in the truth-value of just one sentence of the set.

Figure 4: A dialectical structure of propositions and their negations as positive and negative numbers, with two complete positions indicated by values of T and F. The left assignment is consistent; the right assignment is not (after Betz 2013). [An extended description of figure 4 is in the supplement.]
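The consistency test on complete positions lends itself to a compact sketch. The representation below is an assumption made for illustration, not Betz's code: a sentence is a positive integer `n`, its negation is `-n`, an argument is a pair `(premises, conclusion)`, and a position is a dictionary from sentences to truth values. The small structure in `ARGUMENTS` is hypothetical, and `closest_consistent` resolves ties by enumeration order where the model described here chooses at random.

```python
from itertools import product

def truth(position, literal):
    """Truth value of a literal: n is a sentence, -n its negation."""
    value = position[abs(literal)]
    return value if literal > 0 else not value

def is_consistent(position, arguments):
    """No argument may have all premises true and a false conclusion.
    Opposite values for n and -n are guaranteed by the encoding."""
    return all(
        truth(position, conclusion)
        or not all(truth(position, p) for p in premises)
        for premises, conclusion in arguments
    )

def consistent_positions(n_sentences, arguments):
    """Enumerate every complete consistent position (brute force)."""
    for values in product([True, False], repeat=n_sentences):
        position = dict(zip(range(1, n_sentences + 1), values))
        if is_consistent(position, arguments):
            yield position

def hamming(p, q):
    """Number of sentences on which two positions differ."""
    return sum(p[s] != q[s] for s in p)

def closest_consistent(position, n_sentences, arguments):
    """Nearest consistent position; ties go to the first one found."""
    return min(consistent_positions(n_sentences, arguments),
               key=lambda q: hamming(position, q))

# Hypothetical structure: A = (1, 2 therefore 3); B = (4 therefore not-1).
# B attacks A because its conclusion contradicts a premise of A.
ARGUMENTS = [((1, 2), 3), ((4,), -1)]
```

Brute-force enumeration is exponential in the number of sentences, so it is workable only for small structures like the one used here.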

In the simplest form of the model, two agents start with random assignments to a set of 20 sentences with consistent assignments to their negations. Arguments are added randomly, starting from a blank slate, and agents move to the coherent position closest to their previous position, with a random choice in the case of a draw. In variations on the basic structure, Betz considers (a) cases in which an initial background agreement is assumed, (b) cases of “controversial” argumentation, in which arguments are introduced which support a proponent’s position or attack an opponent’s, and (c) cases in which up to six agents are involved. In two series of simulations, he tracks both the consensus-conduciveness of different parameters and, with an assumption of a specific assignment as the “truth”, the truth-conduciveness of different parameters.

In individual runs, depending on initial positions and arguments introduced, Betz finds that argumentation of the sort modeled can either increase or decrease agreement, and can track the truth or lead astray. Averaging across many debates, however, Betz finds that controversial argumentation in particular is both consensus-conducive and better tracks the truth.[7]

3.2 Computational Philosophy of Science

Computational models have been used in philosophy of science in two very different respects: (a) as models of scientific theory, and (b) as models of the social interaction characteristic of collective scientific research. The next sections review some examples of each.

3.2.1 Network models of scientific theory

“Computational philosophy of science” is enshrined as a book title as early as Paul Thagard’s 1988. A central core of his work is a connectionist ECHO program, which constructs network structures of scientific explanation (Thagard 1992, 2012). From inputs of “explain”, “contradict”, “data”, and “analogous” for the status and relation of nodes, ECHO uses a set of principles of explanatory coherence to construct a network of undirected excitatory and inhibitory links between nodes which “cohere” and those which “incohere”, respectively. If p1 through pm explain q, for example, all of p1 through pm cohere with q and with each other, though the weight of coherence is divided by the number of p1 through pm. If p1 contradicts p2 or p1 and p2 are parts of competing explanations for the same phenomenon, they “incohere”.

Starting with initial node activations close to zero, the nodes of the coherence network are synchronously updated in terms of their old activation and weighted input from linked nodes, with “data” nodes set as a constant input of 1. Once the network settles down to equilibrium, an explanatory hypothesis p1 is taken to defeat another p2 if its activation value is higher—at least generally, positive as opposed to negative (Figure 5).

Figure 5: An ECHO network for hypotheses P1 and P2 and evidence units Q1 and Q2. Solid lines represent excitatory links, the dotted line an inhibitory link. Because Q1 and Q2 are evidence nodes, they take a constant excitatory value of 1 from E. Started from values of .01 and following Thagard’s updating, P1 dominates P2 once the network has settled down: a hypothesis that explains more dominates its alternative. Adapted from Thagard 1992.
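A network of this kind can be settled with a short loop. The sketch below is an assumption-laden illustration, not Thagard's program: the topology (P1 explains Q1 and Q2, P2 explains only Q2, P1 and P2 contradict), the weight and decay constants, and the update equation are choices made here in the style of standard connectionist settling, and the division of weights among co-hypotheses is omitted.

```python
# Illustrative constants (assumed, not taken from the text above).
EXCIT, INHIB, DATA_EXCIT, DECAY = 0.04, -0.06, 0.05, 0.05
MIN_A, MAX_A = -1.0, 1.0

UNITS = ["P1", "P2", "Q1", "Q2"]
LINKS = {
    ("P1", "Q1"): EXCIT,      # P1 explains Q1
    ("P1", "Q2"): EXCIT,      # P1 explains Q2
    ("P2", "Q2"): EXCIT,      # P2 explains only Q2
    ("P1", "P2"): INHIB,      # competing hypotheses incohere
    ("E", "Q1"): DATA_EXCIT,  # evidence unit E feeds data nodes
    ("E", "Q2"): DATA_EXCIT,
}

def settle(links, units, steps=300, start=0.01):
    """Synchronously update activations until (approximately) stable."""
    w = {}
    for (a, b), value in links.items():  # links are undirected
        w[(a, b)] = value
        w[(b, a)] = value
    act = {u: start for u in units}
    act["E"] = 1.0                       # E is clamped at 1 throughout
    for _ in range(steps):
        new = dict(act)
        for u in units:
            net = sum(v * act[b] for (a, b), v in w.items() if a == u)
            if net > 0:
                delta = net * (MAX_A - act[u])
            else:
                delta = net * (act[u] - MIN_A)
            value = act[u] * (1 - DECAY) + delta
            new[u] = max(MIN_A, min(MAX_A, value))
        act = new
    return act

if __name__ == "__main__":
    for unit, value in settle(LINKS, UNITS).items():
        print(unit, round(value, 3))
```

Under these assumed settings the hypothesis with more explanatory links ends with the higher activation, which is the qualitative pattern the figure describes.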

Thagard is able to show that such an algorithm effectively echoes a range of familiar observations regarding theory selection. Hypotheses that explain more defeat those that explain less, for example, and simpler hypotheses are to be preferred. In contrast to simple Popperian refutation, ECHO abandons a hypothesis only when a dominating hypothesis is available. Thagard uses the basic approach of explanatory coherence, instantiated in ECHO, in an analysis of a number of historical cases in the history of science, including the abandonment of phlogiston theory in favor of oxygen theory, the Darwinian revolution, and the eventual triumph of Wegener’s plate tectonics and continental drift.

The influence of Bayesian networks has been far more widespread, both across disciplines and in technological application—application made possible only with computers. Grounded in the work of Judea Pearl (1988, 2000; Pearl & Mackenzie 2018), Bayesian networks are directed acyclic graphs in which nodes represent variables that can be read as either probabilities or degrees of belief and directed edges as conditional probabilities from “parent” to “child”. By the Markov convention, the value of a node is independent of all other nodes that are not its descendants, conditional on its parents. A standard textbook example is shown in Figure 6.

Figure 6: A standard example of a simple Bayesian net. [An extended description of figure 6 is in the supplement.]

Changes of values at the nodes of a Bayesian network (in response to evidence, for example) are updated through belief propagation algorithms applied at every node. A child’s response to input from a parent uses the conditional probabilities of the link. A parent’s response to input from a child uses the related likelihood ratio (see also the supplement on Bayesian networks in Bringsjord & Govindarajulu 2018 [2019]). Reading some variables as hypotheses and others as pieces of evidence, simple instances of core scientific concepts can easily be read off such a structure. Simple explanation amounts to showing how the value of a variable “downstream” depends on the pattern “upstream”. Simple confirmation amounts to an increase in the probability or degree of belief of a node h upstream given a piece of evidence e downstream. Evaluating competing hypotheses consists in calculating the comparative probability of different patterns upstream (Climenhaga 2020, 2023, Grim et al. 2022a). In tracing the dynamics of credence changes across Bayesian networks subjected to an ‘evidence barrage,’ it has been argued that a Kuhnian pattern of normal science punctuated with occasional radical shifts follows from Bayesian updating in networks alone (Grim et al. 2022b).
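Simple confirmation can be checked by hand on a very small network. The sketch below assumes a hypothetical three-node chain H → E → F with made-up probabilities, and it computes conditional probabilities by brute-force enumeration of the joint distribution, not by belief propagation; the names and numbers are illustrative only.

```python
from itertools import product

# Hypothetical chain H -> E -> F with illustrative probabilities.
P_H = 0.3
P_E_GIVEN_H = {True: 0.9, False: 0.2}
P_F_GIVEN_E = {True: 0.7, False: 0.1}

def joint(h, e, f):
    """Joint probability as the product of each node's probability
    conditional on its parent."""
    ph = P_H if h else 1 - P_H
    pe = P_E_GIVEN_H[h] if e else 1 - P_E_GIVEN_H[h]
    pf = P_F_GIVEN_E[e] if f else 1 - P_F_GIVEN_E[e]
    return ph * pe * pf

def prob(query, evidence=None):
    """P(query | evidence), summing over all eight joint outcomes."""
    evidence = evidence or {}
    num = den = 0.0
    for h, e, f in product([True, False], repeat=3):
        world = {"H": h, "E": e, "F": f}
        if all(world[k] == v for k, v in evidence.items()):
            p = joint(h, e, f)
            den += p
            if all(world[k] == v for k, v in query.items()):
                num += p
    return num / den

if __name__ == "__main__":
    print(prob({"H": True}))                  # prior on H
    print(prob({"H": True}, {"E": True}))     # raised by evidence E
    print(prob({"H": True}, {"F": True}))     # raised less by remote F
    # F is independent of its non-descendant H once its parent E is fixed.
    print(prob({"F": True}, {"E": True}))
    print(prob({"F": True}, {"E": True, "H": True}))
```

Enumeration is feasible here only because the network has three binary variables; the table doubles in size with each added variable.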

As Pearl notes, a Bayesian network is nothing more than a graphical representation of a huge table of joint probabilities for the variables involved (Pearl & Mackenzie 2018: 129). Given any sizable number of variables, however, calculation becomes humanly unmanageable—hence the crucial use of computers. The fact that Bayesian networks are so computationally intensive is in fact a point that Thagard makes against using them as models of human cognitive processing (Thagard 1992: 201). But that is not an objection against other philosophical interpretations. One clear reading of networks is as causal graphs. Application to philosophical questions of causality in philosophy of science is detailed in Spirtes, Glymour, and Scheines (1993) and Sprenger and Hartmann (2019). Bayesian networks are now something of a standard in artificial intelligence, ubiquitous in applications, and powerful algorithms have been developed to extract causal networks from the massive amounts of data available.

3.2.2 Network models of scientific communication

It should be no surprise that the computational studies of belief change and opinion dynamics noted above blend smoothly into a range of computational studies in philosophy of science. Here a central motivating question has been one of optimal investigatory structure: what pattern of scientific communication and cooperation, between what kinds of investigators, is best positioned to advance science? There are two strands of computational philosophy of science that attempt to work toward an answer to this question. The first strand models the effect of communicative networks within groups. The second strand, left to the next section, models the effects of cognitive diversity within groups. This section outlines what makes modeling of both sorts promising, and also notes limitations and some failures.

One might think that access to more data by more investigators would inevitably optimize the truth-seeking goals of communities of investigators. On that intuition, faster and more complete communication (the contemporary science of the internet) would allow faster, more accurate, and more extensive exploration of nature. Surprisingly, however, this first strand of modeling offers robust arguments for the potential benefits of limited communication.

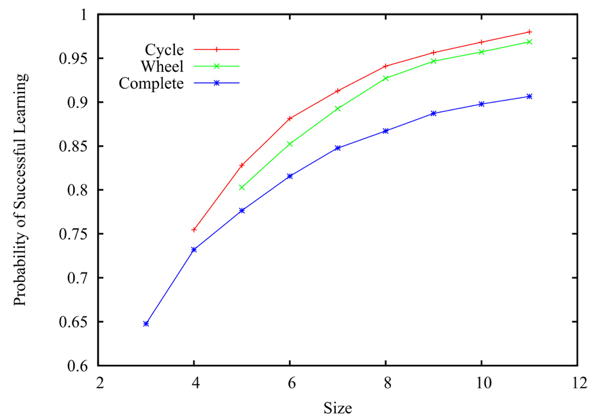

In the spirit of rational choice theory, much of this work was inspired by analytical work in economics on infinite populations by Venkatesh Bala and Sanjeev Goyal (1998), computationally implemented for small populations in a finite context and with an eye to philosophical implications by Kevin Zollman (2007, 2010a, 2010b). In Zollman’s model, Bayesian agents choose between a current method \(\phi_1\) and what is set as a better method \(\phi_2,\) starting with random beliefs and allowing agents to pursue the investigatory action with the highest subjective utility. Agents update their beliefs based on the results of their own tests (drawn from a distribution for that action) together with results from the other agents to which they are communicatively connected. A community is taken to have successfully learned when all agents converge on the better \(\phi_2.\)
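The dynamics just described can be sketched in a few lines. This is a simplified illustration under stated assumptions rather than Zollman's own code: the success rate of \(\phi_1\) is taken as known, beliefs about \(\phi_2\) are Beta distributions with randomly drawn parameters, and all names and default values (`community_learns`, `p_new`, `trials`, `rounds`) are hypothetical.

```python
import random


def ring(n):
    """Each agent sees only its two immediate neighbors."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def complete(n):
    """Each agent sees every other agent."""
    return {i: set(range(n)) - {i} for i in range(n)}


def community_learns(network, p_old=0.5, p_new=0.55, trials=10,
                     rounds=1000, rng=random):
    """Return True if all agents end up preferring the better method phi_2."""
    n = len(network)
    # Beta(a, b) belief about phi_2's success rate; random starting beliefs.
    beliefs = [[rng.uniform(0.1, 4.0), rng.uniform(0.1, 4.0)] for _ in range(n)]
    for _ in range(rounds):
        # Only agents who currently expect phi_2 to beat phi_1 test it.
        results = {}
        for i, (a, b) in enumerate(beliefs):
            if a / (a + b) > p_old:
                results[i] = sum(rng.random() < p_new for _ in range(trials))
        if not results:
            return False  # nobody tests phi_2 any more, so beliefs are frozen
        # Agents update on their own results and on those of their neighbors.
        for i in range(n):
            for j in network[i] | {i}:
                if j in results:
                    beliefs[i][0] += results[j]
                    beliefs[i][1] += trials - results[j]
    return all(a / (a + b) > p_old for a, b in beliefs)
```

Repeating `community_learns(ring(10))` and `community_learns(complete(10))` over many runs and comparing success frequencies is one way to explore the comparison between network structures; the sketch makes no claim to reproduce the published figures.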

Zollman’s results are shown in Figure 8 for the three simple networks shown in Figure 7. The communication network which performs the best is not the fully connected network in which all investigators have access to all results from all others, but the maximally distributed network represented by the ring. As Zollman also shows, this is also the configuration which takes the longest time to achieve convergence. See an interactive simulation of a simplified version of Zollman’s model in the Other Internet Resources section below.

Figure 7: A 10 person ring, wheel, and complete graph. After Zollman (2010a).

Figure 8: Learning results of computer simulations: ring, wheel, and complete networks of Bayesian agents. Adapted from Zollman (2010a). [An extended description of figure 8 is in the supplement.]

Olsson and Angere’s Bayesian network Laputa (mentioned above) has also been applied to the question of optimal networks for scientific communication. Their results essentially confirm Zollman’s result, though sampled over a larger range of networks (Angere & Olsson 2017). Distributed networks with low connectivity are those that most reliably fix on the truth, though they are bound to do so more slowly.

In Zollman’s original version, all agents are envisaged as scientists who follow the same set of updating rules. The model has been extended to include both scientists who communicate all results and industry propagandists who selectively communicate only results favoring their side, modelling the impact on policy makers who receive input from both. Not surprisingly, the activity of the propagandist (and selective publication in general) can affect whether policy makers can find the truth in order to act on it (Holman and Bruner 2017; Weatherall, Owen, O’Connor and Bruner 2018; O’Connor and Weatherall 2019).

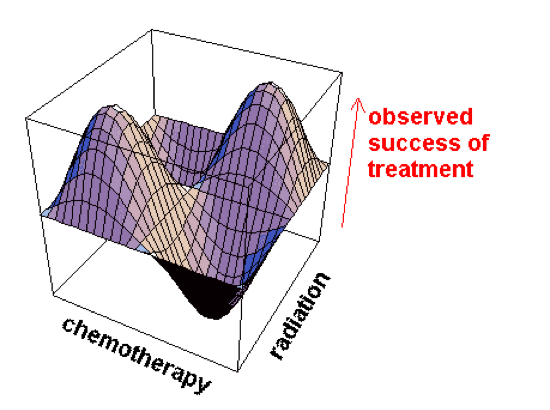

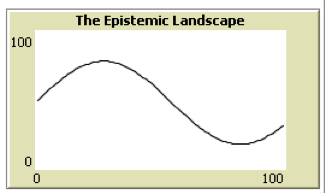

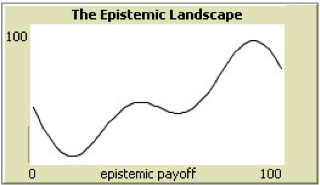

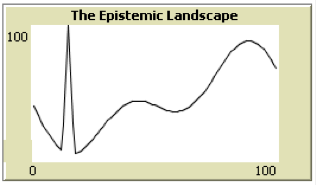

The concept of an epistemic landscape has also emerged as of central importance in this strand of research. Analogous to a fitness landscape in biology (Wright 1932), an epistemic landscape offers an abstract representation of ideal data that might in principle be obtained in testing a range of hypotheses (Grim 2009; Weisberg & Muldoon 2009; Hong & Page 2004, Page 2007). Figure 9 uses the example of data that might be obtained by testing alternative medical treatments. In such a graph points in the chemotherapy-radiation plane represent particular hypotheses about the most effective combination of radiation and chemotherapy. Graph height at each location represents some measure of success: the percentage of patients with 5-year survival on that treatment, for example.

Figure 9: A three-dimensional epistemic landscape. Points on the xz plane represent hypotheses regarding optimal combination of radiation and chemotherapy; graph height on the y axis represents some measure of success. [An extended description of figure 9 is in the supplement.]

An epistemic landscape is intended to be an abstract representation of the real-world phenomenon being explored. The key word, of course, is “abstract”: few would argue that such a model is fully realistic either in terms of the simplicity of limited dimensions or the precision in which one hypothesis has a distinctly higher value than a close neighbor. As in all modeling, the goal is to represent as simply as possible those aspects of a situation relevant to answering a specific question: in this case, the question of optimal scientific organization. Epistemic landscapes, even those this simple, have been assumed to offer a promising start. As outlined below, however, one of the deeper conclusions that has emerged is how sensitive results can be to the specific topography of the epistemic landscape.

Is there a form of scientific communication which optimizes its truth-seeking goals in exploration of a landscape? In a series of agent-based models, agents are communicatively linked explorers situated at specific points on an epistemic landscape (Grim, Singer et al. 2013). In such a design, simulation can be used to explore the effect of network structure, the topography of the epistemic landscape, and the interaction of the two.

The simplest form of the results echoes the pattern seen in different forms in Bala and Goyal (1998) and in Zollman (2010a, 2010b), here played out on epistemic landscapes. Agents start with random hypotheses as points on the x-axis of a two-dimensional landscape. They compare their results (the height of the y axis at that point) with those of the other agents to which they are networked. If a networked neighbor has a higher result, the agent moves toward an approximation of that point (in the interval of a “shaking hand”) with an inertia factor (generally 50%, or a move halfway). The process is repeated by all agents, progressively exploring the landscape in attempting to move toward more successful results.
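One round of this process can be sketched as follows. The sketch is an illustration only: reading the “shaking hand” interval as a symmetric window centered on the neighbor's point, the synchronous updating, and the names `step`, `shake` and `inertia` are all assumptions rather than details of the published model.

```python
import random


def step(positions, neighbors, height, shake=8, inertia=0.5, rng=random):
    """One synchronous round of exploration; returns the new positions.

    positions: current x-coordinate (an index into `height`) of each agent
    neighbors: dict mapping an agent to the agents whose results it can see
    height:    list giving the landscape value at each x-coordinate
    """
    size = len(height)
    half = shake // 2
    new_positions = []
    for i, x in enumerate(positions):
        # Find the networked neighbor with the best current result.
        best = max(neighbors[i], key=lambda j: height[positions[j]], default=i)
        if height[positions[best]] > height[x]:
            # Aim at a noisy approximation of the neighbor's point ...
            target = positions[best] + rng.randint(-half, half)
            target = min(max(target, 0), size - 1)
            # ... and move only part of the way there.
            x = round(x + (1 - inertia) * (target - x))
        new_positions.append(x)
    return new_positions
```

With `inertia=0.5` an agent moves halfway toward its target, so a cluster of agents closes in on a reported high point over several rounds rather than jumping to it at once.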

On “smooth” landscapes of the form of the first two graphs in Figure 10, agents in any of the networks shown in Figure 11 succeed in finding the highest point on the landscape. Results become much more interesting for epistemic landscapes that contain a “needle in a haystack” as in the third graph in Figure 10.

Figure 10: Two-dimensional epistemic landscapes.

Figure 11: Sample networks. Panels: ring radius 1, small world, wheel, hub, random, complete.

In a ring with radius 1, each agent is connected with just its immediate neighbors on each side. Using an inertia of 50% and a “shaking hand” interval of 8 on a 100-point landscape, 50 agents in that configuration converge on the global maximum in the “needle in the haystack” landscape in 66% of simulation runs. If agents are connected to the two closest neighbors on each side, results drop immediately to 50% of runs in which agents find the global maximum. A small world network can be envisaged as a ring in which agents have a certain probability of “rewiring”: breaking an existing link and establishing another one to some other agent at random (Watts & Strogatz 1998). If each of 50 agents has a 9% probability of rewiring, the success rate of small worlds drops to 55%. Wheels and hubs have a 42% and 37% success rate, respectively. Random networks with a 10% probability of connection between any two nodes score at 47%. The worst performing communication network on a “needle in a haystack” landscape is the “internet of science” of a complete network in which everyone instantly sees everyone else’s result.
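Several of the network types mentioned here are easy to construct. The sketch below is a minimal illustration with hypothetical function names; it reads “a 9% probability of rewiring” as each agent rewiring the link to its right-hand neighbor with that probability, which is an assumption. Wheels and hubs are omitted because the text does not specify their construction.

```python
import random


def ring_lattice(n, radius=1):
    """Each agent is linked to its `radius` nearest neighbors on each side."""
    return {i: {(i + d) % n for d in range(-radius, radius + 1) if d != 0}
            for i in range(n)}


def small_world(n, p_rewire=0.09, rng=random):
    """Radius-1 ring in which links are randomly rewired (Watts-Strogatz style)."""
    net = ring_lattice(n, 1)
    for i in range(n):
        j = (i + 1) % n
        if j in net[i] and rng.random() < p_rewire:
            candidates = [k for k in range(n) if k != i and k not in net[i]]
            if candidates:
                k = rng.choice(candidates)
                # Break the link i-j and establish a new link i-k.
                net[i].discard(j)
                net[j].discard(i)
                net[i].add(k)
                net[k].add(i)
    return net


def random_network(n, p_link=0.10, rng=random):
    """Each pair of agents is linked independently with probability p_link."""
    net = {i: set() for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p_link:
                net[i].add(j)
                net[j].add(i)
    return net


def complete_network(n):
    """Everyone sees everyone else's results."""
    return {i: set(range(n)) - {i} for i in range(n)}
```

Each function returns a dictionary from an agent to the set of agents it is linked to, so any of these networks can be passed to a simulation that only needs to look up an agent's neighbors.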

Extensions of these results appear in Grim, Singer et al. (2013). There a small sample of landscapes is replaced with a quantified “fiendishness index”, roughly representing the extent to which a landscape embodies a “needle in a haystack”. Higher fiendishness quantifies a lower probability that hill-climbing from a randomly chosen point “finds” the landscape’s global maximum. Landscapes, though still two-dimensional, are “looped” so as to avoid edge-effects also noted in Hegselmann and Krause (2006). Here again results emphasize the epistemic advantages of ring-like or distributed networks over fully connected networks in the exploration of intuitively difficult epistemic landscapes. Distributed single rings achieve the highest percentage of cases in which the highest point on the landscape is found, followed by all other network configurations. Total or completely connected networks show the worst results overall. Times to convergence are shown to be roughly though not precisely the inverse of these relationships. See the interactive simulation of Grim, Singer et al.’s model in the Other Internet Resources section below.
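The quantity underlying such an index, the probability that simple hill-climbing from a random start reaches the global maximum, can be computed directly for a small looped landscape. The following is a sketch of that underlying probability only, with hypothetical function names; it is not the published index itself, whose exact definition is not given here.

```python
def hill_climb(height, start):
    """Climb to a local maximum on a looped landscape, one step at a time."""
    n = len(height)
    x = start
    while True:
        left, right = (x - 1) % n, (x + 1) % n
        best = max((left, right), key=lambda j: height[j])
        if height[best] <= height[x]:
            return x  # no strictly higher neighbor: a local maximum
        x = best


def success_probability(height):
    """Fraction of starting points from which hill-climbing finds the top."""
    top = max(height)
    hits = sum(height[hill_climb(height, s)] == top for s in range(len(height)))
    return hits / len(height)


smooth = [0, 1, 2, 3, 2, 1]
needle = [0, 1, 2, 3, 2, 1, 0, 0, 9, 0]
```

On `smooth` every start leads to the single peak, while on `needle` only the starting points adjacent to the spike at index 8 (and the spike itself) reach it; the lower that fraction, the more “fiendish” the landscape in the sense described.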

What all these models suggest is that it is distributed networks of communication between investigators, rather than full and immediate communication between all, that will—or at least can—give us more accurate scientific outcomes. In the seventeenth century, scientific results were exchanged slowly, from person to person, in the form of individual correspondence. In today’s science results are instantly available to everyone. What these models suggest is that the communication mechanisms of seventeenth century science may be more reliable than the highly connected communications of today. Zollman draws the corollary conclusion that loosely connected communities made up of less informed scientists might be more reliable in seeking the truth than communities of more informed scientists that are better connected (Zollman 2010b).

The explanation is not far to seek. In all the models noted, more connected networks produce inferior results because agents move too quickly to salient but sub-optimal positions: to local rather than global maxima. In the landscape models surveyed, connected networks result in all investigators moving toward the same point, currently announced to everyone as highest, skipping over large areas in the process—precisely where the “needle in the haystack” might be hidden. In more distributed networks, local action results in a far more even and effective exploration of widespread areas of the landscape; exploration rather than exploitation (Holland 1975).

How should we structure funding and communication in our scientific communities? It is clear both from these results in their current form, and in further work along these general lines, that the answer may well be “landscape”-relative: what form scientific communication ought to take may well depend on what kind of question is at issue. It may also depend on what desiderata are at issue. The models surveyed emphasize accuracy of results, abstractly modeled. All those surveyed concede that there is a clear trade-off between accuracy of results and the speed of community consensus (Zollman 2007; Zollman 2010b; Grim, Singer et al. 2013). But for many purposes, and reasons both ethical and practical, it may often be far better to work with a result that is only roughly accurate but available today than to wait 10 years for a result that is many times more accurate but arrives far too late.

3.2.3 Division of labor, diversity, and exploration

A second tradition of work in computational philosophy of science also uses epistemic landscapes, but attempts to model the effect not of network structure but of the division of labor and diversity within scientific groups. An influential but ultimately flawed precursor in this tradition is the work of Weisberg and Muldoon (2009).

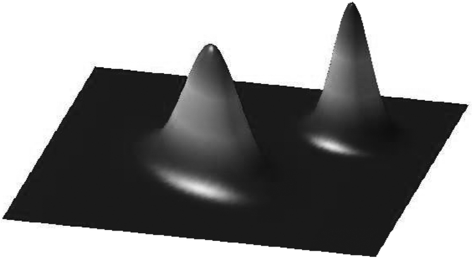

Two views of Weisberg and Muldoon’s landscape appear in Figure 12. In their treatment, points on the base plane of the landscape represent “approaches”—abstract representations of the background theories, methods, instruments and techniques used to investigate a particular research question. Heights at those points are taken to represent scientific significance (following Kitcher 1993).

Figure 12: Two views of Weisberg and Muldoon’s landscape of scientific significance (height) at different approaches to a research topic.

The agents that traverse this landscape are not networked, as in the earlier studies noted, except to the extent that they are influenced by agents with “approaches” near theirs on the landscape. What is significant about the Weisberg & Muldoon model, however, is that their agents are not homogeneous. Two types of agents play a primary role.

“Followers” take previous investigation of the territory by others into account in order to follow successful trends. If any previously investigated points in their immediate neighborhood have a higher significance than the point they stand on, they move to that point (randomly breaking any tie).[8] Only if no neighboring investigated point has higher significance and uninvestigated points remain do followers move to one of those.

“Mavericks” avoid previously investigated points much as followers prioritize them. Mavericks choose unexplored points in their neighborhoods, testing significance. If higher than their current spot, they move to that point.

Weisberg and Muldoon measure both the percentages of runs in which groups of agents find the highest peak and the speed at which peaks are found. They report that the epistemic success of a population of followers is increased when mavericks are included, and that the explanation for that effect lies in the fact that mavericks can provide pathways for followers: “[m]avericks help many of the followers to get unstuck, and to explore more fruitful areas of the epistemic landscape” (for details see Weisberg & Muldoon 2009: 247 ff). Against that background they argue for broad claims regarding the value for an epistemic community of combining different research strategies. The optimal division of labor that their model suggests is “a healthy number of followers with a small number of mavericks”.

Critics of Weisberg and Muldoon’s model argue that it is flawed by simple implementation errors in which >= was used in place of >, with the result that their software agents do not in fact operate in accord with their outlined strategies (Alexander, Himmelreich & Thomson 2015). As implemented, their followers tend to get trapped into oscillating between two equivalent spaces (often of value 0). According to the critics, when followers are properly implemented, it turns out that mavericks help the success of a community solely in terms of discovery by the mavericks themselves, not by getting followers “unstuck” who shouldn’t have been stuck in the first place (see also Thoma 2015). If the critics are right, the Weisberg-Muldoon model as originally implemented proves inadequate as philosophical support for the claim that division of labor and strategic diversity are important epistemic drivers. There’s an interactive simulation of the Weisberg and Muldoon model, which includes a switch to change the >= to >, in the Other Internet Resources section below.
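The difference a single comparison operator can make is easy to see in a toy version of the follower rule. The sketch below is a hypothetical one-dimensional illustration, not the original software or the critics' reimplementation; the flat landscape, the starting state and the function names are all assumptions.

```python
import operator
import random


def follower_step(x, significance, visited, better=operator.gt, rng=random):
    """Move a follower once on a line of points and return its new position."""
    nbrs = [j for j in (x - 1, x + 1) if 0 <= j < len(significance)]
    # Previously investigated neighbors that count as "better" than here.
    seen = [j for j in nbrs
            if j in visited and better(significance[j], significance[x])]
    if seen:
        top = max(significance[j] for j in seen)
        x = rng.choice([j for j in seen if significance[j] == top])
    else:
        # Otherwise fall back on an uninvestigated neighbor, if one remains.
        fresh = [j for j in nbrs if j not in visited]
        if fresh:
            x = rng.choice(fresh)
    visited.add(x)
    return x


def walk(better, steps=4):
    """Follow one agent on a flat landscape of significance 0."""
    significance = [0, 0, 0, 0, 0]
    visited, x, path = {1, 2}, 2, []
    for _ in range(steps):
        x = follower_step(x, significance, visited, better)
        path.append(x)
    return path


strict_path = walk(operator.gt)  # ">"  : the rule as described in the text
loose_path = walk(operator.ge)   # ">=" : the reported implementation error
```

With the strict comparison the follower moves on to uninvestigated points; with `>=` it shuttles back and forth between two equally valued investigated points, which is the kind of trapping the critics describe.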

Critics of the model don’t deny the general conclusion that Weisberg and Muldoon draw: that cognitive diversity or division of cognitive labor can favor social epistemic outcomes.[9] What they deny is that the Weisberg and Muldoon model adequately supports that conclusion. A particularly intriguing model that does support that conclusion, built on a very different model of diversity, is that of Hong and Page (2004). But it also supports a point that Alexander et al. emphasize: that the advantages of cognitive diversity can very much depend on the epistemic landscape being explored.

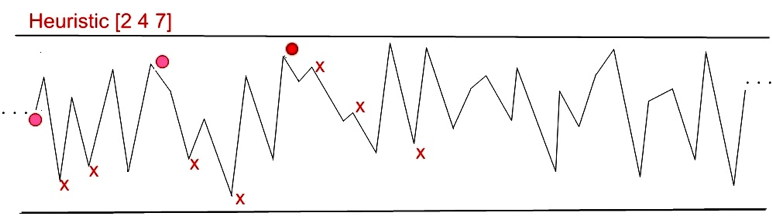

Lu Hong and Scott Page work with a two-dimensional landscape of 2000 points, wrapped around as a loop. Each point is assigned a random value between 1 and 100. Their epistemic individuals explore that landscape using heuristics composed of three ordered numbers between, say, 1 and 12. An example helps. Consider an individual with heuristic \(\langle 2, 4, 7\rangle\) at point 112 on the landscape. He first uses his heuristic 2 to see if a point two to the right—at 114—has a higher value than his current position. If so, he moves to that point. If not, he stays put. From that point, whichever it is, he uses his heuristic 4 in order to see if a point 4 steps to the right has a higher peak, and so forth. An agent circles through his heuristic numbers repeatedly until he reaches a point from which none within reach of his heuristic offers a higher value. The basic dynamic is illustrated in Figure 13.
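A single individual's search can be sketched as follows. This is a minimal illustration of the procedure as described above, with a hypothetical function name and a small made-up landscape in place of the 2000-point one.

```python
def climb(landscape, start, heuristic):
    """Return the point at which an individual's heuristic search stops.

    The individual cycles through the step sizes in `heuristic`, moving that
    many points to the right (wrapping around the loop) whenever the point
    reached has a strictly higher value, and stops once a full cycle of step
    sizes has brought no improvement.
    """
    n = len(landscape)
    pos = start
    idle = 0  # consecutive step sizes tried without improvement
    k = 0
    while idle < len(heuristic):
        candidate = (pos + heuristic[k % len(heuristic)]) % n
        if landscape[candidate] > landscape[pos]:
            pos, idle = candidate, 0
        else:
            idle += 1
        k += 1
    return pos


# Made-up ten-point landscape for illustration.
toy_landscape = [5, 1, 7, 2, 2, 2, 9, 3, 3, 8]
stop = climb(toy_landscape, 0, (2, 4, 7))
```

Starting at point 0, the individual moves two steps to point 2, then four steps to point 6, and then finds no improvement with any of its three step sizes, so the search ends there.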

Figure 13: An example of exploration of a landscape by an individual using heuristics as in Hong and Page (2004). Explored points can be read left to right. [An extended description of figure 13 is in the supplement.]

Hong and Page score individuals on a given landscape in terms of the average height they reach starting from each of the 2000 points. But their real target is the value of diversity in groups. With that in mind, they compare the performance of (a) groups composed of the 9 individuals with highest-scoring heuristics on a given landscape with (b) groups composed of 9 individuals with random heuristics on that landscape. In each case groups function together in what has been termed a “relay”. For each point on the 2000-point landscape, the first individual of the group finds his highest reachable value. The next individual of the group starts from there, and so forth, circling through the individuals until a point is reached at which none can achieve a higher value. The score for the group as a whole is the average of values achieved in such a way across all of the 2000 points.
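The relay and the scoring just described can be sketched in the same style. Function names are hypothetical, and the individual search is restated so that the block is self-contained; this is an illustration of the described procedure, not the original code.

```python
import random


def climb(landscape, start, heuristic):
    """Individual search: cycle through step sizes until none improves."""
    n, pos, idle, k = len(landscape), start, 0, 0
    while idle < len(heuristic):
        candidate = (pos + heuristic[k % len(heuristic)]) % n
        if landscape[candidate] > landscape[pos]:
            pos, idle = candidate, 0
        else:
            idle += 1
        k += 1
    return pos


def relay(landscape, start, group):
    """Pass the current point from member to member until nobody improves."""
    pos = start
    while True:
        before = pos
        for heuristic in group:
            pos = climb(landscape, pos, heuristic)
        if pos == before:
            return pos


def individual_score(landscape, heuristic):
    """Average value reached by one individual over all starting points."""
    n = len(landscape)
    return sum(landscape[climb(landscape, s, heuristic)] for s in range(n)) / n


def group_score(landscape, group):
    """Average value reached by the relay over all starting points."""
    n = len(landscape)
    return sum(landscape[relay(landscape, s, group)] for s in range(n)) / n
```

A comparison in the spirit of the text would rank all heuristics by `individual_score` on a random landscape, take the top 9 as the “best” group, draw 9 heuristics at random as the “diverse” group, and compare their `group_score` values.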

What Hong and Page demonstrate in simulation is that groups with random heuristics routinely outperform groups composed entirely of the “best” individual performers. They christen their findings the “Diversity Trumps Ability” result. In a replication of their study, the average maximum on the 2000-point terrain for the group of the 9 best individuals comes in at 92.53, with a median of 92.67. The average for a group of 9 random individuals comes in at 94.82, with a median of 94.83. Across 1000 runs in that replication, a higher score was achieved by groups of random agents in 97.6% of all cases (Grim et al. 2019). See an interactive simulation of Hong and Page’s group deliberation model in the Other Internet Resources section below. Hong and Page also offer a mathematical theorem as a partial explanation of such a result (Hong & Page 2004). That component of their work has been attacked as trivial or irrelevant (Thompson 2014), though the attack itself has come under criticism as well (Kuehn 2017, Singer 2019).

The Hong-Page model solidly demonstrates a general claim attempted in the disputed Weisberg-Muldoon model: cognitive diversity can indeed be a social epistemic advantage. In application, however, the Hong-Page result has sometimes been appealed to as support for much broader claims: that diversity is always or quite generally of epistemic advantage (Anderson 2006, Landemore 2013, Gunn 2014, Weymark 2015). The result itself is limited in ways that have not always been acknowledged. In particular, it proves sensitive to the precise character of the epistemic landscape employed.

Hong and Page’s landscape is one in which each of 2000 points is given a random value between 1 and 100: a purely random landscape. One consequence of that fact is that the group of 9 best heuristics on different random Hong-Page landscapes have essentially no correlation: a high-performing individual on one landscape need have no carry-over to another. Grim et al. (2019) expands the Hong-Page model to incorporate other landscapes as well, in ways which challenge the general conclusions regarding diversity that have been drawn from the model but which also suggest the potential for further interesting applications.

An easy way to “smooth” the Hong-Page landscapes is to assign random values not to every point on the 2000-point loop but to every second point, for example, with intermediate points taking an average between those on each side. Where a random landscape has a “smoothness” factor of 0, this variation will have a smoothness factor of 1. A still “smoother” landscape of degree 2 would be one in which slopes are drawn between random values assigned to every third point. Each degree of smoothness increases the average value correlation between a point and its neighbors.
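The smoothing procedure can be sketched as linear interpolation between randomly valued anchor points on the loop. The function name, the use of real-valued uniform draws, and the handling of a final shorter segment when the loop length is not a multiple of the spacing are assumptions made for illustration.

```python
import random


def smoothed_landscape(n=2000, smoothness=0, low=1, high=100, rng=random):
    """Looped landscape with random values at every (smoothness + 1)-th point.

    Points between two anchors lie on the straight slope joining them, so
    smoothness 0 gives a purely random landscape and smoothness 1 gives
    intermediate points equal to the average of their two neighbors.
    """
    step = smoothness + 1
    anchors = list(range(0, n, step))
    values = {a: rng.uniform(low, high) for a in anchors}
    landscape = [0.0] * n
    for idx, a in enumerate(anchors):
        b = anchors[(idx + 1) % len(anchors)]
        span = (b - a) % n or n  # distance to the next anchor around the loop
        for offset in range(span):
            t = offset / span
            landscape[(a + offset) % n] = (1 - t) * values[a] + t * values[b]
    return landscape
```

Calling `smoothed_landscape(smoothness=s)` for increasing `s` yields a family of landscapes on which the individual and group searches described above can be rerun.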

Using Hong and Page’s parameters in other respects, it turns out that the “Diversity Trumps Ability” result holds only for landscapes with a smoothness factor less than 4. Beyond that point, it is “ability”—the performance of groups of the 9 best-performing individuals—that trumps “diversity”—the performance of groups of random heuristics.

The Hong-Page result is therefore very sensitive to the “smoothness” of the epistemic landscape modeled. As hinted in section 3.2.2, this is an indication from within the modeling tradition itself of the danger of restricted and over-simple abstractions regarding epistemic landscapes. Moreover, the model’s sensitivity is not limited to landscape smoothness: social epistemic success depends on the pool of numbers from which heuristics are drawn as well, with “diversity” showing strength on smoother landscapes if the pool of heuristics is expanded. Results also depend on whether social interaction is modeled using Hong and Page’s “relay” or an alternative dynamics in which individuals collectively (rather than sequentially) announce their results, with all moving to the highest point announced by any. Different landscape smoothnesses, different heuristic pool sizes, and different interactive dynamics will favor the epistemic advantages of different compositions of groups, with different proportions of random and best-performing individuals (Grim et al. 2019).

3.3 Ethics and Social-Political Philosophy

What, then, is the conduct that ought to be adopted, the reasonable course of conduct, for this egoistic, naturally unsocial being, living side by side with similar beings? —Henry Sidgwick, Outlines of the History of Ethics (1886: 162)

Hobbes’ Leviathan can be read as asking, with Sidgwick, how cooperation can emerge in a society of egoists (Hobbes 1651). Cooperation is thus a central theme in both ethics and social-political philosophy.

3.3.1 Game theory and the evolution of cooperation

Game theory has been a major tool in many of the philosophical considerations of cooperation, extended with computational methodologies. Here the primary example is the Prisoner’s Dilemma, a strategic interaction between two agents with a payoff matrix in which joint cooperation gets a higher payoff than joint defection, but the highest payoff goes to a player who defects when the other player cooperates (see esp. Kuhn 1997 [2019]). Formally, the Prisoner’s Dilemma requires the value DC for defection against cooperation to be higher than CC for joint cooperation, with CC higher than the payoff DD for joint defection, and DD in turn higher than the payoff CD for cooperation against defection. In order to avoid an advantage to alternating trade-offs, CC should also be higher than \((\textrm{CD} + \textrm{DC}) / 2.\) A simple set of values that fits those requirements is shown in the matrix in Figure 14.

| | Player A: Cooperate | Player A: Defect |
| --- | --- | --- |
| Player B: Cooperate | 3,3 | 0,5 |
| Player B: Defect | 5,0 | 1,1 |

Figure 14: A Prisoner’s Dilemma payoff matrix
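The formal requirements can be checked mechanically. The short sketch below uses hypothetical function names; it includes the standard condition that joint defection pays more than cooperating against a defector, which is what makes defection dominant.

```python
def is_prisoners_dilemma(dc, cc, dd, cd):
    """Check the payoff ordering DC > CC > DD > CD and CC > (CD + DC) / 2."""
    return dc > cc > dd > cd and cc > (cd + dc) / 2


def defection_strictly_dominates(dc, cc, dd, cd):
    """Defecting pays more whatever the other player does."""
    return dc > cc and dd > cd


figure_14 = dict(dc=5, cc=3, dd=1, cd=0)
```

For the values of Figure 14 both checks succeed. Lowering CC to 2 would fail the first check, since two players alternating between exploiting and being exploited would then average 2.5 each.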

It is clear in the “one-shot” Prisoner’s Dilemma that defection is strictly dominant: whether the other player cooperates or defects, one gains more points by defecting. But if defection always gives a higher payoff, what sense does it make to cooperate? In a Hobbesian population of egoists, with payoffs as in the Prisoner’s Dilemma, it would seem that we should expect mutual defection as both a matter of course and the rational outcome—Hobbes’ “war of all against all”. How could a population of egoists come to cooperate? How could the ethical desideratum of cooperation arise and persist?

A number of mechanisms have been shown to support the emergence of cooperation: kin selection (Fisher 1930; Haldane 1932), green beards (Hamilton 1964a,b; Dawkins 1976), secret handshakes (Robson 1990; Wiseman & Yilankaya 2001), iterated games, spatialized and structured interactions (Grim 1995; Skyrms 1996, 2004; Grim, Mar, & St. Denis 1998; Alexander 2007), and noisy signals (Nowak & Sigmund 1992). This section offers examples of the last three of these.

In the iterated Prisoner’s Dilemma, players repeat their interactions, either in a fixed number of rounds or in an infinite or indefinite repetition. Robert Axelrod’s tournaments in the early 1980s are the classic studies in the iterated Prisoner’s Dilemma, and early examples of the application of computational techniques. Strategies for playing the Prisoner’s Dilemma were solicited from experts in various fields, pitted against all others (and themselves) in round-robin competition over 200 rounds. Famously, the strategy that triumphed was Tit for Tat, a simple strategy which responds to cooperation from the other player on the previous round with cooperation, responding to defection on the previous round with defection. Even more surprisingly, Tit for Tat again came out in front in a second tournament, despite the fact that those submitting strategies knew that Tit for Tat was the strategy to beat. When those same strategies were explored with replicator dynamics in place of round-robin competition, Tit for Tat again was the winner (Axelrod and Hamilton 1981). Further work has tempered Tit for Tat’s reputation somewhat, emphasizing the constraints of Axelrod’s tournaments both in terms of structure and the strategies submitted (Kendall, Yao, & Chang 2007; Kuhn 1997 [2019]).

A simple set of eight “reactive” strategies, in which a player acts solely on the basis of the opponent’s previous move, is shown in Figure 15. Coded with “1” for cooperate and “0” for defect and three places representing first move i, response to cooperation on the other side c, and response to defection on the other side d, these give us 8 strategies that include all defect, all cooperate, tit for tat as well as several other variations.

| i | c | d | reactive strategy |
| --- | --- | --- | --- |
| 0 | 0 | 0 | All Defect |
| 0 | 0 | 1 | |
| 0 | 1 | 0 | Suspicious Tit for Tat |
| 0 | 1 | 1 | Suspicious All Cooperate |
| 1 | 0 | 0 | Deceptive All Defect |
| 1 | 0 | 1 | |
| 1 | 1 | 0 | Tit for Tat |
| 1 | 1 | 1 | All Cooperate |

Figure 15: 8 reactive strategies in the Prisoner’s Dilemma

If these strategies are played against each other and themselves, in the manner of Axelrod’s tournaments, it is All Defect that is the clear winner. If agents imitate the most successful strategy, a population will thus immediately go to All Defect: a game-theoretic image of Hobbes’ war of all against all, perhaps.
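A round-robin of this kind is small enough to sketch in full. The code below assumes the payoffs of Figure 14 and 200 rounds per pairing, as in Axelrod's tournaments; the function and variable names are hypothetical.

```python
from itertools import product

# Payoff to a player given (own move, other's move); 1 = cooperate, 0 = defect.
PAYOFF = {(1, 1): 3, (1, 0): 0, (0, 1): 5, (0, 0): 1}

# The eight reactive strategies (i, c, d) of Figure 15.
STRATEGIES = list(product((0, 1), repeat=3))


def play(a, b, rounds=200):
    """Play two reactive strategies against each other; return both totals."""
    move_a, move_b = a[0], b[0]
    score_a = score_b = 0
    for _ in range(rounds):
        score_a += PAYOFF[move_a, move_b]
        score_b += PAYOFF[move_b, move_a]
        # Each player responds to the other's move in the round just played.
        move_a, move_b = (a[1] if move_b else a[2]), (b[1] if move_a else b[2])
    return score_a, score_b


def tournament(rounds=200):
    """Total score of each strategy against all eight, itself included."""
    totals = {s: 0 for s in STRATEGIES}
    for a in STRATEGIES:
        for b in STRATEGIES:
            totals[a] += play(a, b, rounds)[0]
    return totals


totals = tournament()
winner = max(totals, key=totals.get)
```

Inspecting `totals` shows why All Defect does so well in this pool: several of the eight strategies cooperate unconditionally or nearly so, and All Defect collects the highest payoff against each of them in almost every round.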

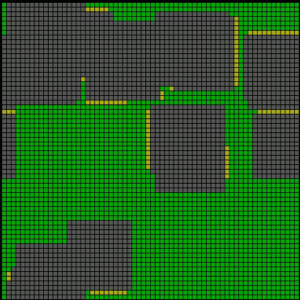

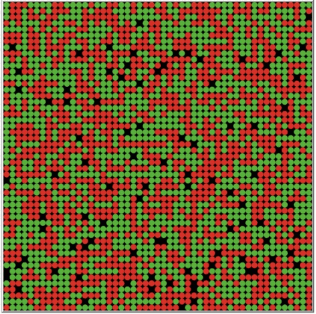

Consider, however, a spatialized Prisoner’s Dilemma in the form of cellular automata, easily run and analyzed on a computer. Cells are assigned one of these eight strategies at random, play an iterated game locally with their eight immediate neighbors in the array, and then adopt the strategy of that neighbor (if any) that achieves a higher total score. In this case, with the same 8 strategies, occupation of the array starts with a dominance by All Defect, but clusters of Tit for Tat grow to dominate the space (Figure 16). An interactive simulation in which one can choose which competing reactive strategies play in a spatialized array is available in the Other Internet Resources section below.
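One generation of such a cellular automaton can be sketched as follows. The wrap-around (toroidal) edges, the synchronous updating, the 200-round local games and all names are assumptions made for illustration; the published models may differ in these details.

```python
import random
from itertools import product

PAYOFF = {(1, 1): 3, (1, 0): 0, (0, 1): 5, (0, 0): 1}
STRATEGIES = list(product((0, 1), repeat=3))  # reactive strategies (i, c, d)


def score(a, b, rounds=200):
    """Total payoff to reactive strategy a against b over `rounds` rounds."""
    move_a, move_b, total = a[0], b[0], 0
    for _ in range(rounds):
        total += PAYOFF[move_a, move_b]
        move_a, move_b = (a[1] if move_b else a[2]), (b[1] if move_a else b[2])
    return total


# Pairwise scores are fixed, so compute them once.
TABLE = {(a, b): score(a, b) for a in STRATEGIES for b in STRATEGIES}


def neighbors(r, c, n):
    """The eight cells surrounding (r, c) on an n-by-n wrap-around array."""
    return [((r + dr) % n, (c + dc) % n)
            for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def generation(grid):
    """Play locally, then let each cell imitate a more successful neighbor."""
    n = len(grid)
    totals = [[sum(TABLE[grid[r][c], grid[nr][nc]]
                   for nr, nc in neighbors(r, c, n))
               for c in range(n)] for r in range(n)]
    new = [row[:] for row in grid]
    for r in range(n):
        for c in range(n):
            br, bc = max(neighbors(r, c, n), key=lambda rc: totals[rc[0]][rc[1]])
            if totals[br][bc] > totals[r][c]:
                new[r][c] = grid[br][bc]
    return new


def random_grid(n, rng=random):
    """Assign one of the eight strategies to each cell at random."""
    return [[rng.choice(STRATEGIES) for _ in range(n)] for _ in range(n)]
```

Applying `generation` repeatedly to a `random_grid` and counting the strategies present after each step is one way to watch the population dynamics unfold.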

Figure 16: Conquest by Tit for Tat in the Spatialized Prisoner’s Dilemma. All defect is shown in green, Tit for Tat in gray.

In this case, there are two aspects to the emergence of cooperation in the form of Tit for Tat. One is the fact that play is local: strategies total points over just local interactions, rather than play with all other cells. The other is that imitation is local as well: strategies imitate their most successful neighbor, rather than that strategy in the array that gained the most points. The fact that both conditions play out in the local structure of the lattice allows clusters of Tit for Tat to form and grow. In Axelrod’s tournaments it is particularly important that Tit for Tat does well in play against itself; the same is true here. If either game interaction or strategy updating is made global rather than local, dominance goes to All Defect instead. One way in which cooperation can emerge, then, is through structured interactions (Grim 1995; Skyrms 1996, 2004; Grim, Mar, & St. Denis 1998). J. McKenzie Alexander (2007) offers a particularly thorough investigation of different interaction structures and different games.

Martin Nowak and Karl Sigmund offer a further variation that results in an even more surprising level of cooperation in the Prisoner’s Dilemma (Nowak & Sigmund 1992). The reactive strategies outlined above are communicatively perfect strategies. There is no noise in “hearing” a move as cooperation or defection on the other side, and no “shaking hand” in response. In Tit for Tat a cooperation on the other side is flawlessly perceived as such, for example, and is perfectly responded to with cooperation. If signals are noisy or responses are less than flawless, however, Tit for Tat loses its advantage in play against itself. In that case a chancy defection will set up a chain of mutual defections until a chancy cooperation reverses the trend. A “noisy” Tit for Tat played against itself in an infinite game does no better than a random strategy.

Nowak and Sigmund replace the “perfect” strategies of Figure 15 with uniformly stochastic ones, reflecting a world of noisy signals and actions. The closest to All Defect will now be a strategy .01, .01, .01, indicating a strategy that has only a 99% chance of defecting initially and in response to either cooperation or defection. The closest to Tit for Tat will be a strategy .99, .99, .01, indicating merely a high probability of starting with cooperation and responding to cooperation with cooperation, defection with defection. Using the mathematical fiction of an infinite game, Nowak and Sigmund are able to ignore the initial value.
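For stochastic reactive strategies in an infinite game, long-run payoffs can be computed in closed form rather than simulated. The sketch below uses the standard stationary analysis for reactive strategies, supplied here as background rather than taken from the text: if a strategy cooperates with probability p after cooperation and q after defection, its long-run cooperation rate c satisfies c = q + (p - q)c', where c' is the other player's rate. Function names are hypothetical and payoffs are those of Figure 14.

```python
def cooperation_levels(s1, s2):
    """Long-run cooperation rates of two reactive strategies (p, q).

    p is the probability of cooperating after the other's cooperation and
    q the probability of cooperating after the other's defection. Solves
    c1 = q1 + r1 * c2 and c2 = q2 + r2 * c1, with r = p - q.
    """
    (p1, q1), (p2, q2) = s1, s2
    r1, r2 = p1 - q1, p2 - q2
    c1 = (q1 + r1 * q2) / (1 - r1 * r2)
    c2 = (q2 + r2 * q1) / (1 - r1 * r2)
    return c1, c2


def payoff(s1, s2, T=5, R=3, P=1, S=0):
    """Expected per-round payoff to s1 against s2 in the infinite game."""
    c1, c2 = cooperation_levels(s1, s2)
    return (R * c1 * c2 + S * c1 * (1 - c2)
            + T * (1 - c1) * c2 + P * (1 - c1) * (1 - c2))


noisy_tft = (0.99, 0.01)
coin_flip = (0.5, 0.5)
generous = (0.99, 1 / 3)
```

On this analysis `payoff(noisy_tft, noisy_tft)` equals `payoff(coin_flip, coin_flip)`, matching the observation that a noisy Tit for Tat against itself does no better than a random strategy, while `payoff(generous, generous)` is considerably higher.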

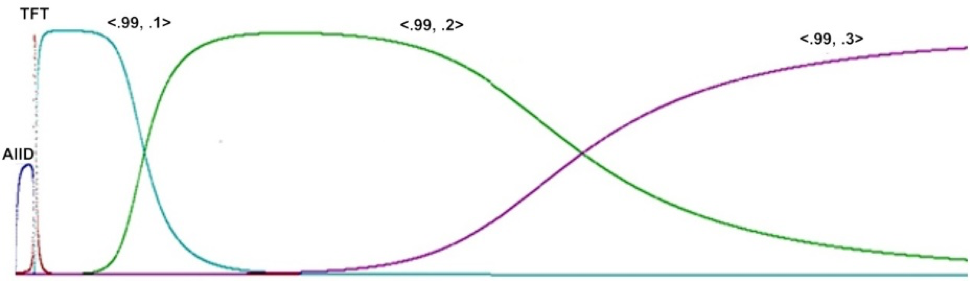

Pitting a full range of stochastic strategies of this type against each other in a computerized tournament, using replicator dynamics in the manner of Axelrod and Hamilton (1981), Nowak and Sigmund trace a progressive evolution of strategies. Computer simulation shows an imperfect All Defect to be an early winner, followed by an imperfect Tit for Tat. But at that point dominance in the population goes to a still more cooperative strategy which cooperates with cooperation 99% of the time but cooperates even against defection 10% of the time. That strategy is eventually dominated by one that cooperates against defection 20% of the time, and then by one that cooperates against defection 30% of the time. A replication of the Nowak and Sigmund result is shown in Figure 17. Nowak and Sigmund show analytically that the most successful strategy in a world of noisy information will be “Generous Tit for Tat”, with probabilities of \(1 - \varepsilon\) and 1/3 for cooperation against cooperation and defection respectively.
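The value 1/3 can be recovered from the payoffs of Figure 14. As the result is usually stated, with T, R, P and S standing for the payoffs DC, CC, DD and CD respectively (notation introduced here for convenience), the probability of cooperating after a defection in Generous Tit for Tat is

\[
q^{*} = \min\left\{\, 1 - \frac{T - R}{R - S},\ \frac{R - P}{T - P} \,\right\}
      = \min\left\{\, 1 - \frac{5 - 3}{3 - 0},\ \frac{3 - 1}{5 - 1} \,\right\}
      = \min\left\{\, \tfrac{1}{3},\ \tfrac{1}{2} \,\right\}
      = \tfrac{1}{3}.
\]

A different payoff matrix would therefore give a different level of optimal generosity.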

Figure 17: Evolution toward Nowak and Sigmund’s “Generous Tit for Tat” in a world of imperfect information (Nowak & Sigmund 1992). Population proportions are shown vertically for labelled strategies shown over 12,000 generations for an initial pool of 121 stochastic strategies \(\langle c,d\rangle\) at .1 intervals, full value of 0 and 1 replaced with 0.01 and 0.99. [An extended description of figure 17 is in the supplement.]

How can cooperation emerge in a society of self-serving egoists? In the game-theoretic context of the Prisoner’s Dilemma, these results indicate that iterated interaction, spatialization and structured interaction, and noisy information can all facilitate cooperation, at least in the form of strategies such as Tit for Tat. When all three effects are combined, the result appears to be a level of cooperation even greater than that indicated in Nowak and Sigmund. Within a spatialized Prisoner’s Dilemma using stochastic strategies, it is strategies in the region of probabilities \(1 - \varepsilon\) and 2/3 that emerge as optimal in the sense of having the highest scores in play against themselves without being open to invasion from small clusters of other strategies (Grim 1996).

This outline has focused on some basic background regarding the Prisoner’s Dilemma and emergence of cooperation. More recently a generation of richer game-theoretic models has appeared, using a wider variety of games of conflict and coordination and more closely tied to historical precedents in social and political philosophy. Newer game-theoretic analyses of state of nature scenarios in Hobbes appear in Vanderschraaf (2006) and Chung (2015), extended with simulation to include Locke and Nozick in Bruner (2020).

There is also a new body of work that extends game-theoretic modeling and simulation to questions of social inequity. Bruner (2017) shows that the mere fact that one group is a minority in a population, and thus interacts more frequently with majority than with minority members, can result in its being disadvantaged where exchanges are characterized by bargaining in a Nash demand game (Young 1993). Termed the “cultural Red King”, the effect has been further explored through simulation, with links to experiment, and with extensions to questions of “intersectional disadvantage”, in which overlapping minority categories are in play (O’Connor 2017; Mohseni, O’Connor, & Rubin 2019 [Other Internet Resources]; O’Connor, Bright, & Bruner 2019). The relevance of this to the focus of the previous section is made clear in Rubin and O’Connor (2018) and O’Connor and Bruner (2019), modeling minority disadvantage in scientific communities.

3.3.2 Modeling democracy

In computational simulations, game-theoretic cooperation has been appealed to as a model for aspects of both ethics in the sense of Sidgwick and social-political philosophy on the model of Hobbes. That model is tied to game-theoretic assumptions in general, however, and often to the structure of the Prisoner’s Dilemma in particular (though Skyrms 2003 and Alexander 2007 are notable exceptions). With regard to a wide range of questions in social and political philosophy in particular, the limitations of game theory may seem unhelpfully abstract and artificial.

While still abstract, there are other attempts to model questions in social and political philosophy computationally. Here the studies mentioned earlier regarding polarization are relevant. There have also been recent attempts to address questions regarding epistemic democracy: the idea that among its other virtues, democratic decision-making is more likely to track the truth.

There is a contrast, however, between open democratic decision-making, in which a full population takes part, and representative democracy, in which decision-making is passed up through a hierarchy of representation. There is also a contrast between democracy seen as purely a matter of voting and as a deliberative process that in some way involves a population in wider discussion (Habermas 1992 [1996]; Anderson 2006; Landemore 2013).

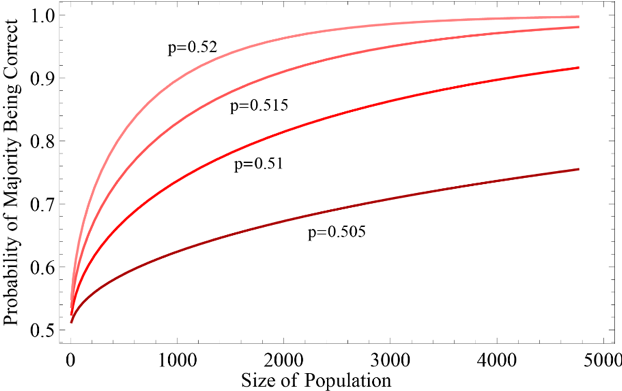

Figure 18: The Condorcet result: probability of a majority of different odd-numbered sizes being correct on a binary question with different homogeneous probabilities of individual members being correct. [An extended description of figure 18 is in the supplement.]

The classic result for an open democracy and simple voting is the Condorcet jury theorem (Condorcet 1785). As long as each voter has a uniform and independent probability greater than 0.5 of getting an answer right, the probability of a correct answer from a majority vote is significantly higher than that of any individual, and it quickly increases with the size of the population (Figure 18).
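The Condorcet probability is a binomial tail sum and can be computed directly. The following is a minimal sketch assuming, as in the theorem's simplest form, an odd number of voters who share one independent probability `p` of being correct; the function name `majority_correct` is hypothetical.

```python
from math import comb

def majority_correct(n: int, p: float) -> float:
    """Probability that a strict majority of n independent voters is correct.

    Assumes n is odd (so there are no ties) and that every voter is
    correct with the same probability p.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd")
    need = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(need, n + 1))
```

With `p = 0.6`, for instance, three voters give `3(0.6)^2(0.4) + (0.6)^3 = 0.648`, already above the individual value of 0.6, and the value continues to rise as `n` grows.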

It can be shown analytically that the basic thrust of the Condorcet result remains when assumptions regarding uniform and independent probabilities are relaxed (Boland, Proschan, & Tong 1989; Dietrich & Spiekermann 2013). The Condorcet result is significantly weakened, however, when applied in hierarchical representation, in which smaller groups first reach a majority verdict which is then carried to a second level of representatives who use a majority vote on that level (Boland 1989). More complicated questions regarding deliberative dynamics and representation require simulation using computers.
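The weakening under hierarchical representation can be illustrated with a small calculation. This sketch assumes equal-sized groups, odd group sizes and group counts, and a single uniform competence `p`; the names `majority_correct` and `two_level_correct` are hypothetical, and the code illustrates the effect only, not the general analytic result.

```python
from math import comb

def majority_correct(n: int, p: float) -> float:
    """Probability that a strict majority of n independent voters (n odd) is correct."""
    need = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(need, n + 1))

def two_level_correct(groups: int, group_size: int, p: float) -> float:
    """Majority of group verdicts, each verdict itself a within-group majority."""
    group_p = majority_correct(group_size, p)   # chance a single group is right
    return majority_correct(groups, group_p)    # chance most groups are right

if __name__ == "__main__":
    p = 0.6
    print("direct vote, 9 voters:   ", majority_correct(9, p))
    print("three groups of three:   ", two_level_correct(3, 3, p))
```

For nine voters with `p = 0.6`, a direct majority is correct with probability of about 0.733, while three groups of three reporting to a second-level majority reach about 0.716.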

The Hong-Page structure of group deliberation, outlined in the context of computational philosophy of science above, can also be taken as a model of “deliberative democracy” beyond a simple vote. The success of deliberation in a group can be measured as the average value, or height, of the points found. In a representative instantiation of this kind of deliberation, smaller groups of individuals first use their individual heuristics to explore a landscape collectively, then hand their collective “best” for each point on the landscape to a representative. In a second round of deliberation, the representatives start from their constituents’ results and explore further.

Unlike in the case of pure voting and the Condorcet result, computational simulations show that the use of a representative structure does not dull the effect of deliberation on this model: average scores for three groups of three in a representative structure are if anything slightly higher than average scores from an open deliberation involving 9 agents (Grim et al. 2020). Results like these show how computational models might help expand the political philosophical arguments for representative democracy.

Social and political philosophy appears to be a particularly promising area for big data and computational philosophy employing the data mining tools of computational social science, but as of this writing that development remains largely a promise for the future.

3.3.3 Social outcomes as complex systems

The guiding idea of the interdisciplinary theme known as “complex systems” is that phenomena on a higher level can “emerge” from complex interactions on a lower level (Waldrop 1992, Kauffman 1995, Mitchell 2011, Krakauer 2019). The emergence of social outcomes from the interaction of individual choices is a natural target, and agent-based modeling is a natural tool.

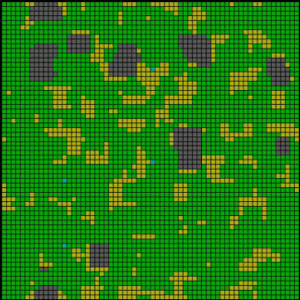

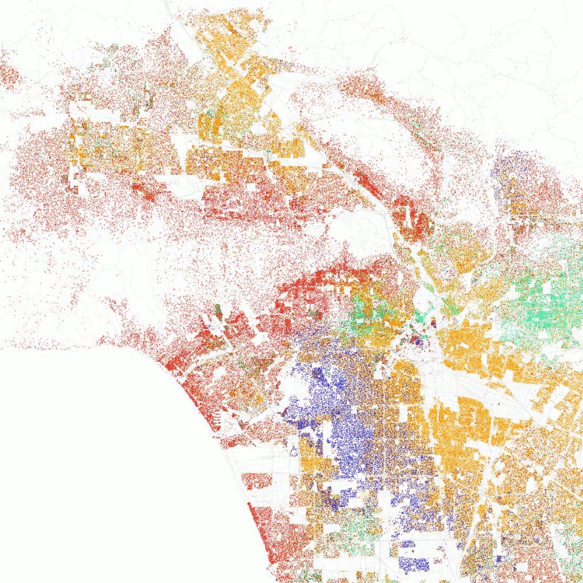

Opinion polarization and the evolution of cooperation, outlined above, both fit this pattern. A further classic example is the work of Thomas C. Schelling on residential segregation. A glance at demographic maps of American cities makes the fact of residential segregation obvious: ethnic and racial groups appear as clearly distinguished patches (Figure 19). Is this an open and shut indication of rampant racism in American life?

Figure 19: A demographic map of Los Angeles. White households are shown in red, African-American in purple, Asian-American in green, and Hispanic in orange. (Fischer 2010 in Other Internet Resources)

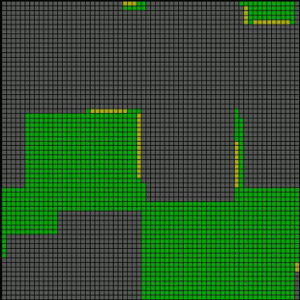

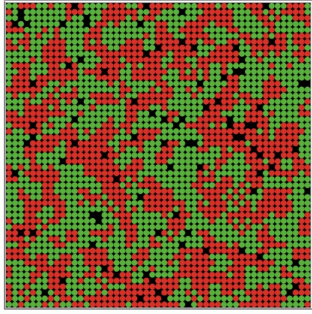

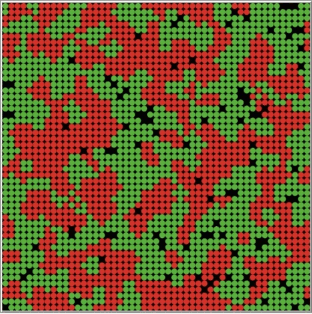

Schelling attempted an answer to this question with an agent-based model that originally consisted of pennies and dimes on a checkerboard array (Schelling 1971, 1978), but which has been studied computationally in a number of variations. Two types of agents (Schelling’s pennies and dimes) are distributed at random across a cellular automata lattice, with given preferences regarding their neighbors. In its original form, each agent has a threshold regarding neighbors of “their own kind”. At that threshold level and above, agents remain in place. Should they not have that number of like neighbors, they move to another spot (in some variations, a move at random, in others a move to the closest spot that satisfies their threshold).

What Schelling found was that residential segregation occurs even without a strong racist demand that all of one’s neighbors, or even most, be “of one’s kind”. Even when the preference is only that a third of one’s neighbors be “of one’s kind”, clear patches of residential segregation appear. The iterated evolution of such an array is shown in Figure 20. See the interactive simulation of this residential segregation model in the Other Internet Resources section below.

Figure 20: Emergence of residential segregation in the Schelling model with preference threshold set at 33%
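A compact version of such a model can be sketched as follows. The choices here are assumptions made for illustration rather than details of Schelling's own setup: a wrap-around square lattice, eight-cell neighborhoods, 20% vacant cells, and dissatisfied agents moving to a randomly chosen vacant cell (the "move at random" variation). All function names are hypothetical.

```python
import random

def like_fraction(grid, r, c):
    """Fraction of the occupied neighbors of (r, c) that share its agent's type."""
    n = len(grid)
    same = total = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            other = grid[(r + dr) % n][(c + dc) % n]  # edges wrap (assumption)
            if other is not None:
                total += 1
                same += other == grid[r][c]
    return same / total if total else 1.0  # an isolated agent counts as content

def mean_like_fraction(grid):
    """Average like-neighbor fraction over all agents: a crude segregation index."""
    n = len(grid)
    vals = [like_fraction(grid, r, c)
            for r in range(n) for c in range(n) if grid[r][c] is not None]
    return sum(vals) / len(vals)

def run_schelling(size=20, vacancy=0.2, threshold=1/3, sweeps=50, seed=0):
    """Return the segregation index before and after the agents relocate."""
    rng = random.Random(seed)
    n_empty = int(size * size * vacancy)
    n_agents = size * size - n_empty
    flat = [0] * (n_agents // 2) + [1] * (n_agents - n_agents // 2) + [None] * n_empty
    rng.shuffle(flat)
    grid = [flat[i * size:(i + 1) * size] for i in range(size)]
    before = mean_like_fraction(grid)
    for _ in range(sweeps):
        moved = False
        coords = [(r, c) for r in range(size) for c in range(size)]
        rng.shuffle(coords)
        for r, c in coords:
            # Agents at or above the threshold stay in place.
            if grid[r][c] is None or like_fraction(grid, r, c) >= threshold:
                continue
            empties = [(i, j) for i in range(size) for j in range(size)
                       if grid[i][j] is None]
            i, j = rng.choice(empties)
            grid[i][j], grid[r][c] = grid[r][c], None  # relocate the agent
            moved = True
        if not moved:
            break  # everyone is content; the array has settled
    return before, mean_like_fraction(grid)
```

Comparing the two values returned by `run_schelling()` gives a rough numerical counterpart to the visual patches in Figure 20. The index used here is only one of several possible measures of segregation.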

The conclusion that Schelling is careful to draw from such a model is simply that a low level of preference can be sufficient for residential segregation. It does not follow that more egregious social and economic factors aren’t operative or even dominant in the residential segregation we actually observe.

In this case basic modeling assumptions have been challenged on empirical grounds. Elizabeth Bruch and Robert Mare use sociological data on racial preferences, challenging the sharp cut-off employed in the Schelling model (Bruch & Mare 2006). They claim on the basis of simulation that the Schelling effect disappears when more realistically smooth preference functions are used instead. Their simulations and the latter claim turn out to be in error (van de Rijt, Siegel, & Macy 2009), but the example of testing the robustness of simple models with an eye to real data remains a valuable one.

3.4 Computational Philosophy of Language

Computational modeling has been applied in philosophy of language along two main lines. First, there are investigations of analogy and metaphor using models of semantic webs that share a developmental history with some of the models of scientific theory outlined above. Second, there are investigations of the emergence of signaling, which have often used a game-theoretic base akin to some approaches to the emergence of cooperation discussed above.

3.4.1 Semantic webs, analogy and metaphor

WordNet is a computerized lexical database for English built by George Miller in 1985 with a hierarchical structure of semantic categories intended to reflect empirical observations regarding human processing. A category “bird” includes a sub-category “songbirds” with “canary” as a particular, for example, intended to explain the fact that subjects could more quickly process “canaries sing”—which involves traversing just one categorical step—than they could process “canaries fly” (Miller, Beckwith, Fellbaum, Gross, & Miller 1990).

There is a long tradition, across psychology, linguistics, and philosophy, in which analogy and metaphor are seen as an important key to abstract reasoning and creativity (Black 1962; Hesse 1963 [1966]; Lakoff & Johnson 1980; Gentner 1982; Lakoff & Turner 1989). Beginning in the 1980s several notable attempts have been made to apply computational tools in order to both understand and generate analogies. Douglas Hofstadter and Melanie Mitchell’s Copycat, developed as a model of high-level cognition, has “codelets” compete within a network in order to answer simple questions of analogy: “abc is to abd as ijk is to what?” (Hofstadter 2008). Holyoak and Thagard envisage metaphors as analogies in which the source and target domain are semantically distinct, calling for relational comparison between two semantic nets (Holyoak & Thagard 1989, 1995; see also Falkenhainer, Forbus, & Gentner 1989). In the Holyoak and Thagard model those comparisons are constrained in a number of different ways that call for coherence; their computational modeling for coherence in the case of metaphor was in fact a direct ancestor to Thagard’s coherence modeling of scientific theory change discussed above (Thagard 1988, 1992).

Eric Steinhart and Eva Kittay’s NETMET (see Other Internet Resources) offers an illustration of the relational approach to analogy and metaphor. They use one semantic and inferential subnet related to birth and another related to the theory of ideas in the Theaetetus. Each subnet is categorized in terms of relations of containment, production, discarding, helping, passing, expressing and opposition. On that basis NETMET generates metaphors including “Socrates is a midwife”, “the mind is an intellectual womb”, “an idea is a child of the mind”, “some ideas are stillborn”, and the like (Steinhart 1994; Steinhart & Kittay 1994). NETMET can be applied to large linguistic databases such as WordNet.

3.4.2 Signaling games and the emergence of communication

Suppose we start without pre-existing meaning. Is it possible that under favorable conditions, unsophisticated learning dynamics can spontaneously generate meaningful signaling? The answer is affirmative. —Brian Skyrms, Signals (2010: 19)

David Lewis’ sender-receiver game is a cooperative game in which a sender observes a state of nature and chooses a signal, a receiver observes that signal and chooses an act, with both sender and receiver benefiting from an appropriate coordination between state of nature and act (Lewis 1969). A number of researchers have explored both analytic and computational models of signaling games with an eye to ways in which initially arbitrary signals can come to function in ways that start to look like meaning.
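One simple computational model of this kind pairs the sender-receiver game with urn-style reinforcement learning. The sketch below is an illustrative assumption, not the specific model of any study cited here: states are equiprobable, there are as many signals and acts as states, each urn starts with weight 1 per option, and a successful round adds 1 to the options just used. The function name `simulate_signaling` is hypothetical.

```python
import random

def simulate_signaling(rounds=5000, n=2, seed=0):
    """Lewis sender-receiver game with simple reinforcement of successful choices.

    Returns the success rate over the final 1000 rounds (or fewer, if
    rounds < 1000).
    """
    rng = random.Random(seed)
    sender = [[1.0] * n for _ in range(n)]    # sender[state][signal] weights
    receiver = [[1.0] * n for _ in range(n)]  # receiver[signal][act] weights
    outcomes = []
    for _ in range(rounds):
        state = rng.randrange(n)  # nature picks a state at random
        signal = rng.choices(range(n), weights=sender[state])[0]
        act = rng.choices(range(n), weights=receiver[signal])[0]
        success = act == state    # coordination: the act matches the state
        if success:
            # Both players reinforce the choices that just paid off.
            sender[state][signal] += 1.0
            receiver[signal][act] += 1.0
        outcomes.append(success)
    tail = outcomes[-1000:]
    return sum(tail) / len(tail)
```

Neither signal has any built-in meaning at the start, so initial play succeeds only at the chance rate of `1/n`. A late-round success rate well above chance indicates that signals have come to carry information about the state.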