Experiment in Biology

Like the philosophy of science in general, the philosophy of biology has traditionally been concerned mostly with theories. For a variety of reasons, the theory that has attracted by far the most interest is evolutionary theory. There was a period when the philosophy of biology was almost identical with the philosophy of evolutionary theory, especially if debates on the nature of species are included in this area. From the 1960s almost until the 1990s, basically the only non-evolutionary topic discussed was the reduction (or non-reduction) of classical genetics to molecular genetics. In the 1990s, practitioners of the field finally started to move into other areas of biology, such as cell biology, molecular biology, immunology and neuroscience, and to address philosophical issues other than theory reduction (e.g., Darden 1991; Burian 1992, 1997; Schaffner 1993; Bechtel and Richardson 1993; Rheinberger 1997). As these are profoundly experimental disciplines, increased attention to experiment in biology was inevitable. Thus, what is generally known as the “New Experimentalism” in general philosophy of science arose more or less independently in the philosophy of biology, even though this fact has not been advertised much. Perhaps the increased focus on experimentation owed more to the influence of historians of biology and their practical turn than to the “New Experimentalists” in general philosophy of science such as Hacking (1983), Franklin (1986) or Mayo (1996). At any rate, today there is a wealth of historical as well as philosophical scholarship that closely studies what goes on in biological laboratories. Some of this literature relates to classical issues in philosophy of science, but it has also explored new philosophical ground.

There are issues in the philosophy of biological experimentation that also arise in other experimental sciences, for example, issues that have to do with causal inference, experimental testing, the reliability of data, the problem of experimental artifacts, and the rationality of scientific practice. Experimental biological knowledge is sufficiently different from other kinds of scientific knowledge to merit a separate philosophical treatment of these issues. In addition, there are issues that arise exclusively in biology, such as the role of model organisms. In what follows, both kinds of issues will be considered.

- 1. Experimentation and Causal Reasoning

- 2. Crucial Experimental Evidence

- 3. Experimental Systems and Model Organisms

- 4. Experimentation, Rationality and Social Epistemology

- 5. Experimental Artifacts and Data Reliability

- Bibliography

1. Experimentation and Causal Reasoning

1.1 Mill’s Methods in Experimental Biology

Causal reasoning approaches try to reconstruct and sometimes justify the rules that allow scientists to infer causal relationships from data, including experimental data. One of the oldest such attempts is due to John Stuart Mill (1996 [1843]), who presented a systematic account of causal inference that consisted of five different so-called “methods”: the Method of Agreement, the Method of Difference, the Joint Method of Agreement and of Difference, the Method of Residues, and the Method of Concomitant Variation. While some of these “methods” pertain more to observation, the Method of Difference in particular is widely seen as encapsulating an important principle of scientific reasoning based on experiment. Mill himself characterized it thus: “If an instance in which the phenomenon under investigation occurs, and an instance in which it does not occur, have every circumstance in common save one, that one occurring only in the former; the circumstance in which alone the two instances differ, is the effect, or the cause, or an indispensable part of the cause, of the phenomenon” (Mill 1996 [1843], Ch. 8, §2). Thus, Mill’s method of difference asks us to look at two situations: one in which the phenomenon under investigation occurs, and one in which it does not occur. If a factor can be identified that is the only other difference between the two situations, then this factor must be causally relevant.

As Mill noted, the method of difference is particularly germane to experimental inquiry because such a difference as is required by this method can often be produced by an experimental intervention. Indeed, according to a position known as interventionism about causality there is a tight connection between the concept of cause and experimental interventions (Woodward 2003).

Mill’s method of difference captures an important kind of reasoning that is used frequently in biological experiments. Let’s suppose we want to find out whether a newly discovered compound is an antibiotic, i.e., inhibits the growth of certain bacteria. We start by dividing a bacterial cell culture into several aliquots (samples of the same size derived from a homogeneous solution). Then, we add to one group of aliquots the suspected antibiotic dissolved in phosphate buffer (“treatment”). To the other group, we add only the phosphate buffer (“control”). Then we record bacterial growth in all the samples (e.g., by measuring the increase in optical density as the culture medium clouds up due to the bacteria). This experimental setup makes sure that the treatment and control samples differ only in the presence or absence of the antibiotic, thus ruling out that any observed difference in growth between the treatment and control aliquots is caused not by the suspected antibiotic but by the buffer solution. Let us denote the antibiotic as “A” and the growth inhibition as “W”. Biologists would thus infer from this experiment that A is an antibiotic if W is observed in the samples containing A but not in the samples lacking A.
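The difference test just described can be sketched as a small function. This is a minimal illustration, assuming that each test situation can be represented as a set of recorded circumstances; the function name `method_of_difference` and the circumstance labels are hypothetical, and the sketch inherits the method's presupposition that every relevant circumstance has actually been recorded.

```python
from typing import FrozenSet, Optional


def method_of_difference(
    positive: FrozenSet[str], negative: FrozenSet[str]
) -> Optional[str]:
    """Mill's Method of Difference over recorded circumstances.

    `positive` is the instance in which the phenomenon occurs, `negative` the
    one in which it does not. Returns the single circumstance present only in
    the positive instance, or None if the instances do not differ in exactly
    that way (in which case the method licenses no conclusion).
    """
    only_positive = positive - negative
    only_negative = negative - positive
    if len(only_positive) == 1 and not only_negative:
        return next(iter(only_positive))
    return None


# Hypothetical description of the antibiotic test from the text.
treatment = frozenset({"bacteria", "medium", "phosphate buffer", "A"})  # W observed
control = frozenset({"bacteria", "medium", "phosphate buffer"})  # W not observed

print(method_of_difference(treatment, control))  # A
```

If the control had been prepared without buffer, the two instances would differ in two circumstances and the function would return None, which mirrors why the control receives buffer alone.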

Mill construed this “method” in terms of a principle of inductive inference that can be justified pragmatically. However, it is interesting to note that the principle can also be viewed as instantiating a form of deductive inference.

To this end, of course, the Method of Difference must be strengthened with additional premises. Here is one way in which this can be done (adapted from Hofmann and Baumgartner 2011):

(1) S1 and S2 are two homogeneous test situations (assumption)

(2) Two factors A and W both occur in S1 and both do not occur in S2 (exp. result)

(3) W is an effect in a deterministic causal structure (assumption)

(4) In S1 there exists a cause of the occurrence of W (from 2, 3)

(5) In S2 there exists no cause of the occurrence of W (from 2, 3)

(6) S2 contains no confounder of W (from 5)

(7) S1 contains no confounder of W (from 1, 6)

(8) The cause of W belongs to the set {A, W} (from 4, 7)

(9) W does not cause itself (assumption)

(10) A is the cause or a part of the cause existing in S1 (from 8, 9)

Some of the terms used in this deduction require explication. Two test situations are homogeneous on the condition that, if a causally relevant factor other than A and W is present in test situation S1, then it is also present in test situation S2, and vice versa. A confounder would be a causally relevant factor that does not belong to the set {A, W}. The presence of such a confounder is excluded by the assumption of causal homogeneity (1). In practical situations, a confounder might be an unknown or uncontrolled (i.e., not measurable or not measured) factor that is present in only one of the test situations. In our antibiotics example, this could be some chemical that was put into just one aliquot inadvertently or without the experimenter’s knowledge. In fact, the risk of a confounder is precisely why our biological experimenter will divide up the mother culture into aliquots just before putting in the substance to be tested. This makes it unlikely that one aliquot contains an uncontrolled chemical that the other does not contain. Furthermore, a skilled experimenter will make sure that the cultures are well stirred, thus preventing a physical-chemical inhomogeneity of the culture (e.g., some chemical or temperature gradient). Thus, there are typical laboratory manipulations and procedures that reduce the risk of confounders.
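The work done by the homogeneity premise can be made concrete by brute force. The sketch below is a toy model, assuming that W is a deterministic Boolean function of A and a single hypothetical background factor C, so that determinism holds and W does not cause itself by construction. It enumerates every such structure compatible with the experimental result (A and W present in S1, both absent in S2) and asks whether A makes a difference. The names `consistent_models` and `a_makes_difference` are illustrative only.

```python
from itertools import product

# Toy model: W is a deterministic Boolean function f(A, C) of the tested
# factor A and one hypothetical background factor C. A two-input Boolean
# function is a truth table over the four (a, c) pairs: 16 functions in all.
INPUTS = list(product([0, 1], repeat=2))
FUNCTIONS = [dict(zip(INPUTS, outputs)) for outputs in product([0, 1], repeat=4)]


def a_makes_difference(f, c):
    """A is causally relevant in background c if toggling A alone changes W."""
    return f[(1, c)] != f[(0, c)]


def consistent_models(homogeneous):
    """Yield (f, c1, c2) compatible with the difference test:
    S1 has A present and W present; S2 has A absent and W absent.
    c1 and c2 are the values of the background factor C in S1 and S2."""
    for f in FUNCTIONS:
        for c1, c2 in product([0, 1], repeat=2):
            if homogeneous and c1 != c2:
                continue  # homogeneity: background factors agree in S1 and S2
            if f[(1, c1)] == 1 and f[(0, c2)] == 0:
                yield f, c1, c2


with_hom = list(consistent_models(homogeneous=True))
without_hom = list(consistent_models(homogeneous=False))

# Under homogeneity, every consistent structure makes A a difference-maker.
print(all(a_makes_difference(f, c1) for f, c1, _ in with_hom))  # True
# Without it, some consistent structures leave A idle (C confounds).
print(any(not a_makes_difference(f, c1) for f, c1, _ in without_hom))  # True
```

Once C may differ between S1 and S2, a structure such as W = C fits the observations equally well, with C acting as exactly the kind of confounder that dividing a well-stirred mother culture is meant to exclude.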

In spite of these control procedures, it is clear that the derivation of a causal factor from a Millean difference test presupposes strong assumptions. In particular, it must be assumed that we are dealing with a deterministic causal structure (3) and that nothing happens uncaused (4). In our simple example, this amounts to assuming that the bacteria don’t exhibit any kind of spontaneity, in other words, their growth behavior is assumed to be determined by their genetic constitution and their environment (although most biologists are convinced that their experimental organisms have good and bad days just like themselves!).

If we thus construe Millean causal reasoning as deductive reasoning, all the inferential risks are shifted from the induction rules into the premises such as causal homogeneity, determinism and the principle of universal causality. It is a characteristic feature of inductive inference that this is always possible (Norton 2003). A justification of these premises, of course, does not exist. They may be viewed as being part and parcel of a certain kind of experimental practice that is vindicated as a whole by its fruitfulness for research (see Section 5).

1.2 Generalizations of Mill’s Methods

Mill’s methods can be formalized and generalized into rich and sophisticated methodologies of causal reasoning (e.g., Ragin 1987, Baumgartner 2009, Graßhoff 2011, Beirlaen, Leuridan and Van De Putte 2018). Such accounts have been successfully used in the reconstruction of historical episodes such as the discovery of the urea cycle (Graßhoff, Casties and Nickelsen 2000; Grasshoff and May 1995).

Mill’s methods and their generalized versions may even be viewed as providing a kind of logic of discovery (see also Schaffner 1974), the existence of which has long been controversial (Nickles 1980). However, it should be noted that the methods of causal reasoning do not generate causal knowledge from scratch, as it were. They already take causal hypotheses as inputs and refine them in an iterative way (Grasshoff 2011). While some formulations (including Mill’s own, quoted above) may suggest that the inputs to Mill’s methods are mere associations or regularities, it should be clear by now that the method is only reliable if some causal knowledge is already at hand. In our example above, the causal assumption is that of causal homogeneity, which obviously has causal content. This vindicates the slogan “no causes in, no causes out” (Cartwright 1989, Ch. 2).

This discussion has focused on deterministic causal reasoning, which is widespread in experimental biology. It should be mentioned that there are, of course, also statistical inference methods in use, such as those formalized in Spirtes, Glymour and Scheines (2000), and in particular regression analysis and analysis of variance, which are frequently used in biological field experiments. Wet lab experiments, by contrast, rarely require such techniques.

Some enthusiasts of causal inference methods believe that a sophisticated version of Mill’s methods (and their statistical counterparts) is basically all that is needed in order to account for experimental practice (e.g., Graßhoff 2011). An attraction of this view is that it can be construed as requiring essentially only deduction and no cumbersome inductive methods.

1.3 Mechanistic Constitution and Interlevel Experiments

Experimental methodology has traditionally been mainly concerned with inferring causal dependencies. However, recent research suggests that we need to broaden its scope. A considerable body of scholarship documents that much biological research is best described in terms of the search for mechanisms, which may be understood as collections of entities and activities that produce a phenomenon that biologists want to understand (e.g., Wimsatt 1974, Machamer, Darden and Craver 2000, Glennan 2005, Bechtel 2006, Craver 2007a). Mechanisms are both what biological science is aiming at and a means to this end, for sketches or schemes of mechanisms can guide scientists in the discovery of missing parts (Darden and Craver 2002, Scholl and Nickelsen 2015).

According to Craver (2007b), we should distinguish between two kinds of relations that make up mechanisms: (1) causal and (2) constitutive relations. The former may hold between different parts of a mechanism. For example, in the basic mechanism of synaptic transmission at the terminal part of a neuron, the influx of calcium causes the release of neurotransmitter into the space between the synaptic terminal and the postsynaptic cell membrane. This causal link can be understood pretty much as discussed in the last two sections. The other kind of relation, mechanistic constitutive relevance (or just mechanistic constitution), holds between the parts of a mechanism and the phenomenon the mechanism is for. For example, the influx of calcium into an axon terminal, together with other events, constitutes the phenomenon of synaptic transmission. Craver (2007b) contends that this is not a causal relation because the relata cannot be viewed as distinct and non-overlapping.

But what defines constitutive relevance? Inspired by interventionism about causality, Craver argued that it is best defined by the kinds of interventions that biologists use in order to find out whether some entity and an associated activity are part of a mechanism, that is, by certain kinds of experiments. In particular, there are two kinds of so-called interlevel experiments, the combination of which establishes (and defines) constitutive relevance. In the first kind, an intervention is performed on some part and an ensuing change is observed in the phenomenon under study. To return to our synaptic example, a calcium antagonist may be used to show that preventing the binding of calcium ions to their receptors prevents the release of neurotransmitter. This is a bottom-up experiment. The second kind of interlevel experiment intervenes on the phenomenon as a whole in order to see some change in the parts. For example, stimulating synaptic transmission by increasing the rate at which action potentials arrive at the terminal will lead to a measurable increase in calcium influx at this terminal. This can be done, for example, by asking a subject to perform a cognitive task (such as trying to memorize something) and by observing changes in calcium concentration by functional magnetic resonance imaging (fMRI). Thus, mechanistic constitution is defined by the mutual manipulability of the parts of mechanisms and the mechanism as a whole.
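The logical shape of the two interlevel tests can be illustrated with a deliberately crude toy model. Everything in the sketch is hypothetical: the functions `calcium_influx` and `transmitter_release`, their linear form and the numbers are placeholders chosen only to show how a bottom-up and a top-down test combine, not a model of real synapses.

```python
# Toy, hypothetical model of a synaptic terminal; numbers are illustrative.

def calcium_influx(ap_rate, antagonist=False):
    """Part-level variable: calcium influx grows with the action-potential
    rate and is abolished when a calcium antagonist blocks entry."""
    return 0.0 if antagonist else 2.0 * ap_rate


def transmitter_release(influx):
    """Phenomenon-level variable: transmitter release depends on influx."""
    return 0.5 * influx


def bottom_up(ap_rate):
    """Intervene on the part (block calcium); observe the phenomenon."""
    normal = transmitter_release(calcium_influx(ap_rate))
    blocked = transmitter_release(calcium_influx(ap_rate, antagonist=True))
    return normal != blocked


def top_down(low_rate, high_rate):
    """Intervene on the phenomenon as a whole (stimulate the terminal at a
    higher rate); observe the part."""
    return calcium_influx(high_rate) != calcium_influx(low_rate)


mutually_manipulable = bottom_up(ap_rate=10.0) and top_down(low_rate=10.0, high_rate=40.0)
print(mutually_manipulable)  # True
```

Note that the top-down test here works by changing the stimulation rate, a variable upstream of both the part and the phenomenon, so a single intervention shifts values at both levels at once.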

Recent debate has challenged the mutual manipulability account (Leuridan 2012, Harinen 2018, Romero 2015). One issue is that inter-level experiments are necessarily “fat-handed” (Baumgartner and Gebharter 2016) because they change the values of at least two different variables residing at different levels (e.g., calcium binding and synaptic transmission, where the former is a part of the latter). But this threatens to undermine the inferences to mechanistic constitution. A possible solution might consist in inferring constituents abductively, by positing constitutive relations as the best explanation for the presence of common causes that unbreakably correlate phenomena and their constituents (Baumgartner and Casini 2017).

Thus, the discovery of mechanisms in biology may require a set of experimental reasoning principles that must supplement Mill’s methods, even if there are considerable similarities between these principles and the better-known principles of causal inference (Harbecke 2015).

2. Crucial Experimental Evidence

2.1 Duhem’s Predicament and the Oxidative Phosphorylation Controversy

Biological textbooks are replete with famous experiments that are presented as having provided crucial experimental evidence for some hypothesis. To name just a few examples: Oswald Avery, Colin MacLeod and Maclyn McCarty (1944) are reputed to have established DNA as the carrier of hereditary information by a set of ingenious transformation experiments using Pneumococcus, a bacterium that causes lung infection. Matthew Meselson and Frank Stahl (1958) conducted an experiment that is widely thought to have provided evidence that DNA replication is semi-conservative rather than conservative or dispersive (meaning that a newly formed DNA double helix contains one intact strand from the parent molecule and one newly synthesized strand). Ephraim Racker and Walter Stoeckenius (1974) are thought to have provided experimental evidence that settled the long-standing controversy (known as the “oxidative phosphorylation” or “ox-phos” controversy) as to whether respiration and the generation of the energy-rich compound ATP in mitochondria are coupled through a chemical intermediate or a proton gradient.

Many philosophers of science have been skeptical about the possibility of such “crucial experiments”. Most famous are the arguments provided by Pierre Duhem (1905), who claimed that crucial experiments are impossible (in physics) because, even if they succeed in eliminating a false hypothesis, this does not prove that the remaining hypothesis is true. Scientists, unlike mathematicians, are never in possession of a complete set of hypotheses one of which must be true. It is always possible that the true hypothesis has not yet been conceived; thus, eliminating all but one hypothesis by crucial experiments will not necessarily lead to the truth.

Based on such considerations, it could be argued that the function of the alleged crucial experiments in biological textbooks is more pedagogical than evidential. In order to assess this claim, I will consider two examples in somewhat greater detail, namely the ox-phos controversy and the Meselson-Stahl case.

The ox-phos controversy was about the mechanism by which respiration (i.e., the oxidation of energy-rich compounds) is coupled to the phosphorylation of ADP (adenosine diphosphate) to ATP (adenosine triphosphate). The reverse reaction, hydrolysis of ATP to ADP and inorganic phosphate, is used by cells to power numerous biochemical reactions such as the synthesis of proteins as well as small molecules, the replication of DNA, locomotion, and many more (the energy for muscle contraction is also provided by ATP hydrolysis). Thus, ATP functions like a universal biochemical battery that is used to power all kinds of cellular processes. So how can the cell use the chemical energy contained in food to charge these batteries? The first pathway generating ATP from ADP to be described was glycolysis, the degradation of sugar. This pathway does not require oxygen. The way it works is that the breakdown of sugar is used by the cell to make an activated phosphate compound – a so-called high-energy intermediate – that subsequently transfers its phosphate group to ADP to make ATP.

It was clear already in the 1940s that this could not be the only process that makes ATP, as it is anaerobic (doesn’t require oxygen) and not very efficient. The complete breakdown of food molecules requires oxygen. Hans Krebs showed that this occurs in a cyclic reaction pathway today known as the Krebs cycle. The Krebs cycle generates NADH, the reduced form of nicotinamide adenine dinucleotide. The big question in biochemistry from the 1940s onward was how this compound was oxidized and how this oxidation was coupled to the phosphorylation of ADP. Naturally, biochemists searched for a high-energy intermediate like the one used in glycolysis. However, this intermediate proved to be extremely elusive.

In 1961, the British biochemist Peter Mitchell (1961) suggested a mechanism for oxidative phosphorylation (as this process became known) that did not require a high-energy intermediate. According to Mitchell’s scheme, NADH is oxidized step-wise on the inner membranes of mitochondria (membrane-bounded intracellular organelles that are canonically described as the cell’s power stations). This process occurs asymmetrically with respect to the membrane such that a net transport of protons across the membrane occurs (later it was shown that the protons are actually transported across proteinaceous pores in the membrane). Thus, a concentration gradient of protons builds up. As protons are electrically charged, this gradient also creates a voltage difference across the membrane. How is this energy used? According to Mitchell, the membrane contains another enzyme that uses the energy of the proton gradient to directly phosphorylate ADP. Thus, it is the proton gradient that couples the oxidation of NADH to the phosphorylation of ADP, not a chemical high-energy intermediate. This mechanism became known as the “chemi-osmotic mechanism”.

Mitchell’s hypothesis was met with considerable skepticism, in spite of the fact that Mitchell and a co-worker were quickly able to produce experimental evidence in its favor. Specifically, they were able to demonstrate that isolated respiring mitochondria indeed expel protons (thus leading to a detectable acidification of the surrounding solution), as his hypothesis predicted. However, most biochemists at that time dismissed this evidence as inconclusive. For it was difficult to rule out at that time that the proton expulsion by respiring mitochondria was a mere side-effect of respiration, while the energy coupling was still mediated by a chemical intermediate.

This was the beginning of one of the most epic controversies in the history of 20th-century biochemistry. It took the larger part of a decade to resolve this issue, during which time several laboratories claimed success in finding the elusive chemical intermediate. But none of these findings survived critical scrutiny. On the other side, all the evidence adduced by Mitchell and his few supporters was considered inconclusive for the reasons mentioned above. Weber (2002) shows that the two competing theories of oxidative phosphorylation can be viewed as incommensurable in the sense of T.S. Kuhn (1970), without thereby being incomparable (as Kuhn is often misread).

Nonetheless, the controversy was eventually closed in the mid-1970s in Mitchell’s favor, earning him the 1978 Nobel Prize for Chemistry. The cause(s) for this closure are subject to interpretation. Most biochemistry textbooks as well as Weber (2002, 2005) cite a famous experiment by Ephraim Racker and Walter Stoeckenius (1974) as crucial for establishing Mitchell’s mechanism. These biochemists worked with synthetic membrane vesicles into which they had inserted purified enzymes, one of which was the mitochondrial ATPase (the enzyme directly responsible for phosphorylation of ADP). The other enzyme was not derived from mitochondria at all, but from a photosynthetic bacterium. This enzyme had been shown to function as a light-driven proton pump. When this enzyme was inserted into artificial membrane vesicles together with the mitochondrial ATPase, the vesicles phosphorylated ADP upon illumination.

This experiment was viewed as crucial evidence for the chemi-osmotic mechanism because it was the first demonstration that the ATPase can be powered by a chemiosmotic gradient alone. The involvement of a chemical intermediate could be ruled out because there were no respiratory enzymes present to deliver an unknown intermediate to the ATPase.

The Racker-Stoeckenius experiment is perhaps best viewed as a straightforward case of an eliminative induction. On this view, the experiment decided between two causal graphs:

(1) respiration → proton gradient → phosphorylation

The alternative causal graph was:

(2) proton gradient ← respiration → chemical high-energy intermediate → phosphorylation

The experiment showed a direct causal link from the proton gradient to phosphorylation, which was consistent with (1) but not with (2); thus (2) was eliminated. However, it is clear that Duhem-style skeptical doubts could be raised about this case. From a strictly logical point of view, even if the experiment ruled out the chemical hypothesis, it didn’t prove Mitchell’s hypothesis.
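Read as eliminative induction, the experiment compares each candidate graph's prediction with the observation. A minimal sketch, assuming that the Racker-Stoeckenius setup can be modelled as removing respiration from the system, imposing a proton gradient directly, and asking whether each graph allows that gradient to produce phosphorylation; the graph encoding and the function names `reachable` and `survivors` are illustrative only.

```python
from collections import deque

# Candidate causal graphs as adjacency lists (edges point from cause to effect).
GRAPHS = {
    "(1) chemiosmotic": {
        "respiration": ["proton gradient"],
        "proton gradient": ["phosphorylation"],
    },
    "(2) chemical intermediate": {
        "respiration": ["proton gradient", "chemical intermediate"],
        "chemical intermediate": ["phosphorylation"],
    },
}


def reachable(graph, source, target, removed=frozenset()):
    """Breadth-first search for a directed path source -> target that avoids
    the nodes in `removed` (variables absent from the experimental system)."""
    queue, seen = deque([source]), {source}
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt not in removed:
                seen.add(nxt)
                queue.append(nxt)
    return False


def survivors(graphs, observed_effect):
    """Keep the graphs whose prediction matches the observation: with no
    respiratory enzymes present and a proton gradient imposed by a
    light-driven pump, does phosphorylation occur?"""
    return [
        name
        for name, g in graphs.items()
        if reachable(g, "proton gradient", "phosphorylation",
                     removed=frozenset({"respiration"})) == observed_effect
    ]


print(survivors(GRAPHS, observed_effect=True))  # ['(1) chemiosmotic']
```

The sketch can only eliminate among the graphs it is handed; it says nothing about hypotheses that nobody has written down, which is precisely where Duhem's worry bites.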

So why have biochemists emphasized this experiment so much? Perhaps the reason is not merely the beauty of the experiment or its pedagogical value. From a methodological point of view, the experiment stands out from other experiments in the amount of control that biochemists had over what was going on in their test tubes. Because it was an artificially created system assembled from purified components, the biochemists knew exactly what they had put in there, making it less likely that they were deceived by traces from the enormously complex system from which these biochemical agents were derived. On such an interpretation, the experiment was not crucial in Duhem’s sense of ruling out all alternative hypotheses. Rather, what distinguishes it is that, within the body of evidence that supported Mitchell’s hypothesis, this one reached an unprecedented standard of experimental rigor, making it more difficult for opponents of Mitchell’s hypothesis to challenge it (see Weber 2005, Chapter 4).

2.2 DNA Replication and Inference to the Best Explanation

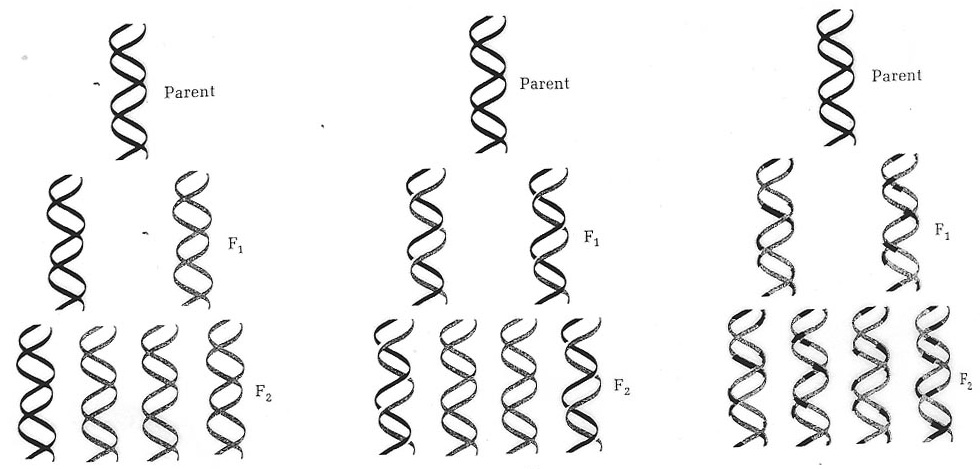

As another example, consider the models for DNA replication that were proposed in the 1950s after the publication of the double helix structure of DNA by J.D. Watson and F.R.H. Crick (Lehninger 1975):

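Figure 1. Three models of DNA replication: conservative (left), semi-conservative (middle) and dispersive (right).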

In purely causal terms, what all these models show is that the sequence of nucleotides in a newly synthesized DNA molecule depends on the sequence of a pre-existing molecule. But the models also contain structural information, such as information about the topology of the DNA double helix and the movements undergone by a replicating DNA molecule (still hypothetical at that time). According to the model on the left, the so-called “conservative” model, the two strands of the DNA double helix do not separate prior to replication; they stick together and the daughter molecule grows along an intact double helix that acts as a template as a whole. According to the model in the middle, called the “semi-conservative” mechanism (believed to be correct today), the two strands do separate in the process. The newly synthesized strands grow along the separated pre-existing strands, each of which acts as a template. On the model on the right, the “dispersive” model, the two strands not only separate in the process; they are also cut into pieces that are then rejoined. While pure causal graphs (i.e., causal graphs that contain only information about which variables depend causally on which other variables) may be used to depict the causes of the separation and cutting events in the semi-conservative and dispersive models, a full account also requires a description of the structure of the DNA molecule, including the topology and relative movements of the strands at different stages of the replication process. This is genuine mechanistic information that must supplement the information contained in the causal graphs in order to provide a normatively adequate mechanistic explanation.

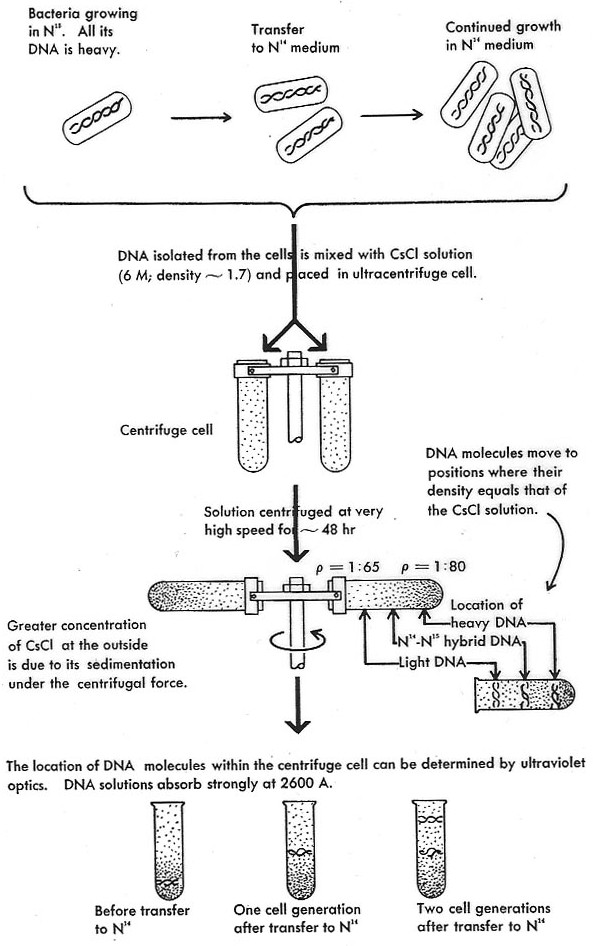

How can such a mechanism be tested? To see this, it is illuminating to consider the famous experiment that is said to have enabled a choice between the three DNA replication mechanisms in 1957. Also known as “the most beautiful experiment in biology” (Holmes 2001), this experiment was carried out by Matthew Meselson and Frank Stahl using an analytic ultracentrifuge (see also the discussion of the Meselson-Stahl experiment in the entry experiment in physics).

Meselson and Stahl grew bacteria in a growth medium that contained a heavy nitrogen isotope, 15N. These bacteria were then transferred to a medium with normal light nitrogen, 14N. After one generation, the bacteria were harvested and their DNA was extracted and loaded onto a caesium chloride solution in the ultracentrifuge chamber (Fig. 2). Caesium atoms are so heavy that they form a concentration gradient when subjected to high centrifugal forces. In such a gradient, DNA forms a band at the position where its buoyant density equals that of the caesium chloride solution. Thus, the analytic ultracentrifuge is an extremely sensitive measuring device for molecular density. In fact, it is sensitive enough to detect the difference between DNA that contains heavy nitrogen and DNA that contains light nitrogen. It can also distinguish these two DNA species from a hybrid made of one strand containing heavy nitrogen and one strand containing light nitrogen. Indeed, after one round of replication, the bacterial DNA in Meselson’s and Stahl’s experiment was of intermediate density. After another round, a second band appeared alongside the intermediate one, with the same density as DNA in its natural (light) state.

Figure 2. The Meselson-Stahl experiment. The depiction of the centrifuge is misleading; Meselson and Stahl were using a sophisticated analytic ultracentrifuge, not a swinging-bucket preparative centrifuge as this picture suggests. Furthermore, this representation of the experiment already contains its theoretical interpretation (Watson 1965).[1]

The outcome was exactly what one would expect on the basis of the semi-conservative model: After one round of replication, the DNA is exactly of intermediate density because it is a hybrid of a heavy and a light strand (containing heavy or light nitrogen, respectively). After another round, a light molecule appears which contains no heavy nitrogen. With the exception of the heavy/light hybrid, there are no other molecules of intermediate density (as one would expect on the dispersive scheme).
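The predictions of the three replication schemes can be computed by tracking the 15N content of individual strands. This is an idealized sketch, assuming complete labelling at the start, perfectly synchronous replication in light medium and, for the dispersive scheme, an even spread of parental material over both daughter molecules; the function names are hypothetical, and the 15N fraction of a duplex stands in for its buoyant density.

```python
from collections import Counter
from fractions import Fraction

# 15N content of a single strand: 1 = fully heavy, 0 = fully light.
HEAVY, LIGHT = Fraction(1), Fraction(0)


def semi_conservative(duplex):
    """Each parental strand pairs with a newly made light strand."""
    s1, s2 = duplex
    return [(s1, LIGHT), (s2, LIGHT)]


def conservative(duplex):
    """The parental duplex stays intact; the daughter duplex is entirely new."""
    return [duplex, (LIGHT, LIGHT)]


def dispersive(duplex):
    """Idealization: the parent's 15N is spread evenly over the four strands
    of the two daughter duplexes."""
    per_strand = (duplex[0] + duplex[1]) / 4
    return [(per_strand, per_strand), (per_strand, per_strand)]


def bands(model, generations):
    """Share of all duplexes at each 15N fraction (a proxy for buoyant density)
    after the given number of rounds of replication in light medium."""
    population = [(HEAVY, HEAVY)]  # fully labelled before transfer to 14N
    for _ in range(generations):
        population = [child for parent in population for child in model(parent)]
    counts = Counter((s1 + s2) / 2 for s1, s2 in population)
    return {density: Fraction(n, len(population))
            for density, n in sorted(counts.items(), reverse=True)}


for model in (conservative, semi_conservative, dispersive):
    for generation in (1, 2):
        summary = ", ".join(f"{share!s} at {density!s}"
                            for density, share in bands(model, generation).items())
        print(f"{model.__name__}, generation {generation}: {summary}")
```

Run for two generations, it predicts all-hybrid DNA followed by equal amounts of hybrid and light DNA for the semi-conservative scheme, heavy and light bands with no hybrid for the conservative scheme, and a single band that shifts from 1/2 to 1/4 for the dispersive scheme.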

Even though this really looked like a spectacular confirmation of the semi-conservative scheme, Meselson and Stahl (1958) were quite cautious in stating their conclusions. Avoiding theoretical interpretation, all the experiment showed was that base nitrogen distributes evenly during replication. While this is inconsistent with the dispersive mechanism, it did not rule out the conservative mechanism. For it was possible that the material of intermediate density that the ultracentrifuge’s optical devices picked up did not consist of hybrid heavy/light DNA molecules at all, but of some kind of complex of heavy and light DNA. For example, Meselson and Stahl speculated that end-to-end covalent associations of old and newly synthesized DNA that was produced by the conservative mechanism would also have intermediate density and therefore produce the same band in their experiment. For this reason, they could not completely rule out an interpretation of the results in terms of the conservative mechanism.

Meselson’s and Stahl’s caution was well justified, as we know now. For they did not know exactly what molecular species made up the bands they saw in their CsCl gradients. More recent considerations suggest that their DNA must have been fragmented, due to Meselson’s and Stahl’s use of a hypodermic needle to load the DNA onto their gradients. Such a treatment shears a whole E. coli genome into small pieces. This fragmentation of the DNA would have ruled out the alternative interpretation with end-to-end linked molecules, had the scientists been aware of it at the time. In fact, without the shearing of the DNA before the density measurement the whole experiment wouldn’t have worked in the first place, due to the circular structure of the E. coli chromosome, which affects its density (Hanawalt 2004). What this shows is that there were relevant facts about the experimental system that Meselson and Stahl did not know, which makes it difficult to view this experiment as crucial.

Nonetheless, it is possible to view the experiment as performed in 1957 as strong evidence for the semi-conservative mechanism. Weber (2009) argues that this mechanism is part of the best explanation of Meselson’s and Stahl’s data. By “data”, Weber means the actual bands observed. Why is this explanation better than the one involving the conservative scheme and end-to-end associations of heavy and light DNA? To be consistent with some data is not the same as being a good explanation of the data. The explanation using the semi-conservative scheme was better because it showed directly how the nitrogen is distributed evenly. By contrast, the alternative explanation requires the additional assumption that the parental and filial DNA somehow remain covalently attached together after replication. This assumption wasn’t part of the mechanism, therefore the latter explanation was incomplete. A complete explanation is always better than an incomplete one; no principle of parsimony need be invoked here.

The case can thus be reconstructed as an inference to the best explanation (Lipton 2004), or perhaps preferably as an inference to the only explanation (Bird 2007). Inference to the best explanation (IBE), also known as abduction, is a controversial inductive inference scheme that selects, from a set of candidate hypotheses, the one that best explains the phenomena.

A variant of IBE is also used by Cresto (2008) to reconstruct the experiment by Avery and his colleagues that showed that the transforming principle in pneumococcus is DNA rather than protein. Her conclusion, however, is that in this case IBE is inconclusive. What it does show is that there was rational disagreement at the time on whether DNA was the transforming principle. Novick and Scholl (forthcoming) argue that Avery et al. were not relying on IBE; they actually had a stronger case conforming to the traditional vera causa ideal (see Section 5.2).

To sum up, we have seen that a methodological analysis of some of biology’s most famous experiments reveals many intricacies and lacunae in their evidential force, which makes it difficult to see them as “crucial experiments”. However, a rationale other than their pedagogical value can often be given for why the textbooks accord them a special epistemic status.

3. Experimental Systems and Model Organisms

The last two sections have treated experiments primarily as ways of testing theoretical hypotheses and causal claims, which is where traditional philosophy of science saw their principal role. However, there exists a considerable body of scholarship in the history and philosophy of biology showing that this does not exhaust the role of experiments in biology. Experimentation, to echo Ian Hacking’s famous slogan, “has a life of its own” (Hacking 1983). Much that goes on in a biological laboratory does not have the goal of testing a preconceived theory. For example, many experiments play an exploratory role; that is, they assist scientists in discovering new phenomena for which they may not yet have any theoretical account, or even any clear ideas. Exploratory experimentation has been investigated in the history and philosophy of physics (Steinle 1997) as well as biology (Burian 1997, 2007; O’Malley 2007; Waters 2007). These studies show that the development of any discipline in experimental biology cannot be understood by focusing on theories and attempts to confirm or refute these theories. Experimental practice is simply not organized around theories, particularly not in biology. If this is so, we must ask in what other terms this practice can be explained or reconstructed. Recent scholarship has focused in particular on two kinds of entities: model organisms and experimental systems.

3.1 Model Organisms

What would modern biology be without its model organisms? To give just a few examples: Classical genetics was developed mainly by experimenting with fruit flies of the species Drosophila melanogaster. The same organism has recently also been at the center of much exciting work in developmental biology, together with the nematode Caenorhabditis elegans and the zebrafish Danio rerio. Molecular biologists initially worked mostly with the bacterium Escherichia coli and bacteriophage (viruses that infect bacteria). Neuroscientists owe much to the squid with its giant axons. For immunology, the mouse Mus musculus has proven invaluable. Plant molecular biology’s favorite is Arabidopsis thaliana. Cell biology has found in baker’s yeast Saccharomyces cerevisiae an organism that is as easy to breed as E. coli, but whose cells are more similar to animal and human cells. This list could go on, but not forever. Compared to field biology, the number of species studied in the experimental life sciences is quite small; so small, in fact, that complete genomic DNA sequences are available today for most of these model organisms.

It may seem somewhat paradoxical that precisely those experimental disciplines of biology that restrict their research to the smallest part of life’s diversity aspire to the greatest degree of universality. For the point of studying model organisms is often to gain knowledge that is valid not only for one species but for many species, sometimes including humans. Before discussing this epistemological question, it is pertinent to review some results of historical and philosophical analyses of research on model organisms.

An important study is Robert Kohler’s Lords of the Fly (Kohler 1994), a history of Drosophila genetics originating from Thomas Hunt Morgan’s laboratory at Columbia University. Kohler attributes the success of this way of doing science to the enormous output of the Drosophila system in terms of newly described and mapped mutations. The fruit fly breeds relatively fast and is easy to keep in laboratories in large numbers, which increases the chance of detecting new mutations. Kohler refers to Drosophila as a “breeder-reactor” to capture this kind of fecundity; his study is thus not unlike an ecology of the laboratory fruit fly. The elaborate techniques of genetic mapping developed in Morgan’s laboratory, which assigned each mutation a position on one of the fly’s four chromosomes, can also be viewed as a way of organizing laboratory work and keeping track of the countless mutants that were continuously cropping up. A genetic map is a systematic catalogue of mutants and thus as much a research tool as it is a theoretical model of the fly’s genetic system (cf. Weber 1998). Thus, most of what went on in Morgan’s laboratory is best described as breeding, maintaining, characterizing and cataloguing (by genetic mapping) fly strains. Very little of it had anything to do with testing genetic theories, even though the theoretical knowledge about the regularities of gene transmission canonically known as “Mendel’s laws” was, of course, also considerably refined in the process (Darden 1991). However, classical genetic theory was also a means of discovering mutants and genes for further study, not an end in itself (Waters 2004). This is also evident in the way in which Drosophila was adapted to the age of molecular biology (Weber 2005; Falk 2009).

Model organisms were often chosen for a particular field of study, but turned out to be invaluable for other fields as well. Drosophila is a case in point; Morgan was mainly interested in embryology when he chose Drosophila as a model system. This paid off enormously, but the fly was just as important for genetics itself, including evolutionary genetics (especially the work of one of the “architects” of the evolutionary synthesis, T. Dobzhansky; see Dobzhansky 1937), behavioral genetics (e.g., S. Benzer’s studies of the circadian clock mechanism, see Weiner 1999), biochemical genetics, as well as neuroscience.

There is always an element of contingency in the choice of model organisms; no biological problem determines the choice of the best organism to study it. Even though considerations of convenience, easy breeding and maintenance, as well as suitability for the study of specific phenomena play a role (Clarke and Fujimura 1992; Burian 1992, 1993; Lederman and Burian 1993; Clause 1993; Creager 2002; Geison and Creager 1999; Lederman and Tolin 1993), there are always many different organisms that would satisfy these criteria. Thus, which model organism is chosen is sometimes a matter of chance.

Is it possible that our biological knowledge would be altogether different, had contingent choices been different in the past? As this question requires us to indulge in historical counterfactuals, which are controversial (Radick 2005), it is difficult to answer. However, it may not matter much one way or the other if the knowledge produced with model organisms can be shown to be of general validity, or at least to transcend the particular organism in which it was produced. This is an instance of the more general epistemological problem of extrapolating knowledge from one domain to another, and it can be fruitfully discussed as such (Burian 1992).

Let us first consider a special case, the universality of the genetic code. In molecular biology, unlike in popular science articles, the term “genetic code” does not refer to an organism’s genomic DNA sequence but to the assignment of amino acids to base triplets on messenger RNA. There are four such bases: A, U, G and C. Three bases together form a “codon” that specifies an amino acid; for example, the triplet AUG specifies the amino acid methionine (it is also a start codon, i.e., it initiates an open reading frame for making a protein). It was remarkable to find that the genetic code is completely identical in almost every organism that has been studied so far. Only a few exceptions are known, for example, the genetic code of mitochondria and chloroplasts or that of trypanosomes (parasites, some of which cause sleeping sickness in humans). The code is nevertheless called “universal” because the exceptions are so few. But how do biologists know that the code is universal, given that it has been determined in only a small number of species?

In this case, a simple Bayesian argument can be used to show that the current belief in the universality of the genetic code is well justified. To see this, it must first be noted that the code is arbitrary; there is no necessity in the specific mapping of amino acids to codons in the current standard genetic code. A second premise is that there is an enormously large number of possible genetic codes. Now, we may ask how probable it is that exactly the same genetic code evolved independently in as many different species as have been investigated at the molecular level to date. Surely, this probability is vanishingly small. The probability that the genetic codes coincide is much larger on the alternative hypothesis that the code did not arise independently in the different species. On this hypothesis, the code arose only once, and the coincidence is due to common descent. Common descent explains the coincidence only if the code has remained stable since it arose, and there is good reason to think it has: once a specific genetic code is in place, it is very difficult to change, because any mutational change in the assignment of amino acids to codons tends to affect a large number of different proteins and thus threatens to kill the organism. It is for this reason that Jacques Monod (1974) has described the code as a “frozen accident”. In other words, the code itself changes only very rarely. These considerations together support the hypothesis of universality in the case of the genetic code.
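The structure of this Bayesian argument can be made explicit with a toy calculation. The sketch below is only an illustration under strongly simplifying assumptions, and every number in it is a hypothetical placeholder rather than an estimate from the literature: an independently evolved code is treated as a uniform draw from N possible codes; under common descent, each of the k − 1 further lineages keeps the inherited code with probability 1 − ε, and lineages are treated as independent. The likelihood ratio for common descent versus independent origins is then ((1 − ε)N)^(k−1). The function names are invented for this example.

```python
import math


def log10_likelihood_ratio(n_codes: float, n_species: int, p_change: float) -> float:
    """log10 of P(shared code | common descent) / P(shared code | independent origins).

    Toy assumptions (hypothetical, for illustration only):
    - independent origins: each species' code is a uniform draw from n_codes
      possible codes, so the other n_species - 1 codes all match the first
      with probability (1 / n_codes) ** (n_species - 1);
    - common descent: each of those n_species - 1 lineages keeps the inherited
      code unchanged with probability 1 - p_change (lineages treated as independent).
    Logarithms are used because the raw probabilities underflow to 0.0.
    """
    if not (n_codes >= 1 and n_species >= 1 and 0.0 <= p_change < 1.0):
        raise ValueError("need n_codes >= 1, n_species >= 1, 0 <= p_change < 1")
    k = n_species - 1
    log_common = k * math.log10(1.0 - p_change)
    log_independent = -k * math.log10(n_codes)
    return log_common - log_independent


def posterior_common_descent(prior: float, log10_lr: float) -> float:
    """Bayes' theorem in odds form: posterior odds = prior odds * likelihood ratio."""
    log10_post_odds = math.log10(prior / (1.0 - prior)) + log10_lr
    if log10_post_odds > 300:  # odds too large for a float; probability is 1.0 anyway
        return 1.0
    odds = 10.0 ** log10_post_odds
    return odds / (1.0 + odds)


# Hypothetical inputs: 1e20 possible codes, 10 species sharing one code,
# a 1% chance per lineage that the code changed, and a skeptical prior of 0.001.
lr = log10_likelihood_ratio(n_codes=1e20, n_species=10, p_change=0.01)
print(f"log10 likelihood ratio: {lr:.1f}")
print(f"posterior for common descent: {posterior_common_descent(0.001, lr)}")
```

Because the exponent grows with the number of species examined, even a very skeptical prior is swamped. The sketch deliberately ignores phylogenetic dependence between lineages and the real number of possible codes; it only shows why the combination of many possible codes and a rarely changing code makes the inference to universality strong.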

Such an argument may not always be available. In fact, in the general case extrapolation from a model organism is intrinsically problematic. The main problem is what Daniel Steel (2008) has termed the “extrapolator’s circle”. The problem is this. We want to infer that some system S has a mechanism M because another system T is already known to have M. For this inference to be good, we must assume that S and T are relevantly similar. We can’t know that the systems are relevantly similar unless we already know that they both have M. But if we knew that, there would not be a need for extrapolating in the first place.

Note that such a circle does not arise when we extrapolate genetic codes. In this case, we do know that the systems are relevantly similar because all organisms share a common ancestor and because the code is hard to change. What can we do if no such argument is available?

Steel (2008) has proposed a form of reasoning that he calls “comparative process tracing”. Steel’s proposed solution is that even in the absence of strong evidence as to what mechanism(s) operate in a target system compared to a model system, scientists can compare the two systems with respect to certain crucial nodes in their respective causal structures, namely those where the two systems are most likely to differ. This can break the circle by providing evidence that the two systems are similar enough to warrant extrapolation, without requiring an understanding of the target system that already matches the knowledge about the model system.

While this reasoning strategy seems plausible, it may not be so pertinent to extrapolation from model organisms. Steel’s strategy pertains to cases where we want to infer from one system, in which we know a certain mechanism operates, to a different kind of system about which we do not (yet) know whether the same mechanism operates. In biology, however, there is often evidence that very similar mechanisms do in fact operate in the model and in the target system. A particularly important kind of evidence is the existence of so-called sequence homologies between different organisms. These are usually DNA sequences that show a more or less strong similarity, in some cases even identity. As it is unlikely (as in the case of the genetic code discussed above) that such sequence similarity is due to chance, it is normally attributed to common ancestry (hence the term sequence “homology”; in its modern biological usage, this term indicates relations of shared ancestry). Sequence homology has proven to be a reliable indicator of similarity of mechanisms. To give an example, a highly conserved sequence element called the “homeobox”, which was first described in Drosophila, is today believed to play a similar role in development in an extremely broad range of organisms (Gehring 1998). When there are reasons to think that the model and the target share similar causal mechanisms due to common descent, the extrapolator’s circle does not arise.

However, the extent to which extrapolations from model organisms to other organisms can serve as evidence in the context of justification, or merely as a heuristic in the context of discovery, remains controversial. Baetu (2016) argues for a middle ground according to which extrapolations can be lines of evidence, but only in the context of a larger body of evidence in which certain assumptions made in extrapolations are subjected to empirical scrutiny.

There has also been some debate about the extent to which there is a strong parallel between model organisms and theoretical models in science, for example, the Lotka-Volterra predator-prey model in population ecology. A strong parallel is suggested by the widespread agreement in the literature on scientific modeling (see entry Models in Science) that scientific models need not be abstract (i.e., consist of mathematical equations or something of this sort); they can also be concrete and physical. Watson’s and Crick’s 1953 DNA model made of cardboard and wire qualifies as a model in this sense. Could a fruit fly bred in a laboratory for genetic experiments also be considered a scientific model in the standard sense? Ankeny and Leonelli (2011) answer this question in the affirmative, on the grounds that (1) model organisms are used to represent a whole range of other organisms and (2) they are used to represent specific phenomena.

Levy and Currie (2015) have criticized this idea. On their view, scientific models such as Lotka’s and Volterra’s (and all the other models discussed in the burgeoning modeling literature) play a completely different role in science than model organisms do. In particular, the two differ in the way they are used in scientific inferences. With a model such as the mathematical predator-prey model, scientists draw analogical inferences from model to target, inferences that are underwritten by theoretical assumptions. By contrast, model organisms serve as a basis for extrapolations from individual members to a whole class (often but not necessarily on the basis of shared ancestry). Thus, Levy and Currie want to distinguish what they call “empirical extrapolation” (a form of induction), which they take to be characteristic of model organism-target inferences, from the kind of theoretical inferences that are used to gain knowledge about a target via scientific models in general.

However, this does not mean that living organisms or populations of organisms cannot be used as models. Levy and Currie accept that certain model systems in experimental ecology and experimental evolution are scientific models akin to the Lotka-Volterra model. Thomas Park’s (1948) famous competition experiments using the flour beetle Tribolium are a case in point. What characterizes such experimental models, in contrast to model organisms, is that they can be used to represent generalized biological phenomena (e.g., interspecific competition in Park’s case) that can be applied to radically different and taxonomically unrelated target systems (see also Weber 2014).

No matter whether model organisms are considered scientific models or something else, it is widely agreed that they serve many different purposes, only some of which are representational. The development of model organisms such as Drosophila (fruit fly), Arabidopsis (thale cress) and Caenorhabditis (nematode), together with associated databases such as FlyBase, WormBase, etc., has had a profound impact on the way in which biological research is organized (e.g., Geison and Creager 1999, Clarke and Fujimura 1992, Creager 2002, Leonelli and Ankeny 2012). Furthermore, model organisms are also important sources of research materials such as genetically well-defined laboratory strains or genetically modified organisms, without which cutting-edge biological experimentation is no longer possible today. Weber (2005) has introduced the notion of preparative experimentation to capture this role of model organisms.

3.2 Experimental Systems

As we have seen, model organisms have greatly shaped the development of experimental biology, and continue to do so. Another important kind of entity is the so-called experimental system. Experimental systems are not to be confused with model organisms: the latter are biological species that are bred in the laboratory for experimental work. An experimental system may involve one or several model organisms, and most model organisms are used in several experimental systems. An experimental system typically consists of certain research materials (which may be obtained from a model organism), preparatory procedures, measurement instruments, and data analysis procedures that are mutually adapted to each other.

Experimental systems are the core of Hans-Jörg Rheinberger’s (1997) account of mid-20th-century research on protein synthesis. Rheinberger characterizes experimental systems as “systems of manipulation designed to give unknown answers to questions that the experimenters themselves are not yet able clearly to ask” and also as “the smallest integral working units of research” (Rheinberger 1997, 28). He argues that research in experimental biology always “begins with the choice of a system rather than with the choice of a theoretical framework” (p. 25). He then follows a particular experimental system through time and shows how it exerted a strong effect on the development of biology. The system in question is a so-called in-vitro system for protein synthesis that was developed in the laboratory of Paul Zamecnik at Massachusetts General Hospital in the 1950s. “In vitro” means that the system does not contain any living cells. It is instead based on a cell extract, originally from bacteria, later also from other organisms including rabbits and yeast. The cell extract is prepared in a way that preserves the functionality of the protein synthesis machinery. Instead of the RNAs that naturally occur in the source organism, the in-vitro system may be “programmed” with exogenous or even artificial RNA. Furthermore, the experimental system contains measurement procedures (sometimes called “assays” in experimental biology) for protein synthesis activity. One such method is to measure the incorporation of radioactivity introduced by amino acids containing a radioisotope such as sulfur-35, but there were other methods as well. Probably the most famous experiment done with such a system is the experiment by Marshall Nirenberg and Heinrich Matthaei (1961). These researchers added an artificial RNA, namely poly-U (U, or uracil, is one of the four bases of RNA), to the in-vitro system and showed that it produced a polypeptide (i.e., a protein chain) containing only the amino acid phenylalanine. Thus, they “cracked” the first “word” of the genetic code, namely UUU for phenylalanine.
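How a codon table turns an RNA string into an amino acid sequence, and why poly-U yields only phenylalanine, can be illustrated with a short sketch. The table is deliberately partial: it lists only a handful of assignments of the standard genetic code (UUU and UUC for phenylalanine, AUG for methionine, AAA for lysine, CCC for proline, GGG for glycine, and the three stop codons). The names `PARTIAL_CODE` and `translate` are hypothetical, and the function simply reads the string in non-overlapping triplets from the first base; it does not model initiation at start codons or any other feature of the real translation machinery.

```python
# Partial standard genetic code: RNA codon -> three-letter amino acid name.
# Only a few entries are included; unknown codons raise KeyError.
PARTIAL_CODE = {
    "UUU": "Phe", "UUC": "Phe",
    "AAA": "Lys", "CCC": "Pro", "GGG": "Gly",
    "AUG": "Met",
    "UAA": "Stop", "UAG": "Stop", "UGA": "Stop",
}


def translate(rna: str, code: dict[str, str] = PARTIAL_CODE) -> list[str]:
    """Read rna in non-overlapping triplets from the first base.

    Stops at the first stop codon; an incomplete trailing triplet is ignored.
    """
    peptide = []
    for i in range(0, len(rna) - 2, 3):
        residue = code[rna[i:i + 3]]
        if residue == "Stop":
            break
        peptide.append(residue)
    return peptide


print(translate("U" * 12))        # poly-U: a chain of phenylalanine only
print(translate("AUGUUUUAAGGG"))  # Met, Phe, then a stop codon ends the chain
```

Run on a poly-U string, the sketch returns only phenylalanine; Nirenberg and Matthaei reasoned in the opposite direction, from a product consisting only of phenylalanine to the assignment of UUU to that amino acid.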

One of the central points of Rheinberger’s analysis is that the in-vitro system was never designed to do this task. Its original purpose was to study protein synthesis in cancer cells. In addition to unraveling the genetic code and the mechanisms of protein synthesis, it was later used for various other purposes in biochemistry and cell biology (for example, for studying the transport of proteins across the membranes of the endoplasmic reticulum and of mitochondria). The system was thus not even confined to a single discipline; in fact, it led to some extent to a reorganization of scientific disciplines. Furthermore, the system was also fused with other experimental systems, or it bifurcated into different systems. Experimental systems, like model organisms, are at least as important as theories as focal points that organize research.

While Rheinberger’s account of experimental research in biology is right to put its emphasis on experimental systems rather than on theories, it may be criticized for the ontology of experimental systems that it contains. On this account, experimental systems are construed as very inclusive entities. They contain not only the specific materials and apparatus necessary to do research, but also the preparatory procedures, lab protocols, storage devices, measurement techniques, and so on. Thus, it is clear that such systems are not only composed of material entities; at least some of the constituents of experimental systems are conceptual in nature. For example, a lab protocol that describes how to prepare a cell extract that will be able to carry out protein synthesis in vitro is really a conceptual entity. A researcher who is following such a protocol is not merely manipulating materials, lab equipment or symbols. Rather, she is acting under the guidance of concepts. (Action is always behavior guided by concepts.) Among these concepts there may be what used to be known as “observation concepts”, such as “supernatant”, “pellet”, “band”, and so on. Furthermore, the protocol will contain terms such as “microsomal fraction”, which is supposed to designate a certain sediment produced by centrifugation that contains microsomes (vesicles of endoplasmic reticulum membrane). Thus, the protocol also contains theoretical terms.

Rheinberger refers to theoretical entities as “epistemic things”. This concept signifies the “material entities or processes—physical structures, chemical reactions, biological functions—that constitute the objects of inquiry” (Rheinberger 1997, 28). Epistemic things can unexpectedly appear and disappear, or be re-constituted in new guises as experimental systems are developed. Sufficiently stabilized epistemic things can “turn into the technical repertoire of the experimental arrangement” (1997, 29) and thus become “technical objects.” One of Rheinberger’s examples of an epistemic thing is the ribosome (a comparatively large molecular complex of protein and RNA that catalyzes some of the crucial steps in protein synthesis). Here, it can be argued that whether or not some experimental setup contained ribosomes is a theoretical judgment. Thus, an experimental system contains materials and apparatus as well as observation concepts and theoretical concepts; in other words, experimental systems are ontologically rather heterogeneous.

If such ontologically mixed entities are considered undesirable, it is also possible to construe experimental systems as purely conceptual entities. They are ways of acting in a laboratory, and action is always guided by concepts. Material things, insofar as they appear in experimental practice, are parts of an experimental system only to the extent that they are subject to purposeful experimental action. They can be subject to such action only to the extent that they are recognized by an experimenter, for example, as a microsomal fraction or an antiserum with a certain antigen specificity. A biological sample without its label, and without a researcher who knows what this label means, is of no value whatsoever; in fact, it is not even a biological sample.

It can thus be argued that it’s really thought and concepts that give an experimental system its identity and persistence conditions; without concepts it’s really just a loose assemblage of plastic, glass, metal, and dead stuff coming out of a blender. On such a view, experimental systems are not “out there” as mind-independent parts of a material reality; they exist only within some concept-guided practice.

4. Experimentation, Rationality and Social Epistemology

As the preceding sections should have made clear, there is ample evidence that biological research does not fit a Popperian image of science according to which “The theoretician puts certain definite questions to the experimenter, and the latter, by his experiments, tries to elicit a decisive answer to these questions, and to no others. All other questions he tries hard to exclude” (Popper 1959, 107). According to Rheinberger, much experimental research in biology does not aim at testing pre-conceived theories. However, theories or theoretical frameworks are sometimes, in fact, adopted by the scientific community, while others are abandoned. Even if it is true that most research is not aimed at testing theories, research can still undermine some theoretical ideas and support others, to the point that one framework is chosen while another is rejected. How are such choices made? What principles guide them? And do the choices actually exhibit some kind of epistemic rationality, as most philosophers of science think, or do they merely reflect social interests or larger cultural changes in society, as many sociologists and historians of science think?

Questions such as these are notoriously difficult to answer. Those who prefer a rational view of scientific change must demonstrate that their preferred epistemic norms actually inform the choices made by scientists. This has proven to be difficult. If we reconsider the oxidative phosphorylation controversy discussed earlier, there exists a sociological account (Gilbert and Mulkay 1984) as well as different philosophical accounts that do not even agree on how to explain the scientific change in question (Allchin 1992, 1994, 1996; Weber 2005, Ch. 4–5).

By the same token, those who think that scientific change is historically contingent must, in principle, be able to justify historical counterfactuals of the form “had the social/cultural context at some given time been different, scientists would have adopted other theories (or other experimental systems, model organisms, etc.)”. It is controversial whether such claims are justifiable (see Radick 2005 for a recent attempt).

Perhaps there is a way of reconciling the two perspectives. In recent years, a new kind of epistemology has emerged that sees no contradiction in viewing science both as a profoundly social activity and at the same time as rational. This, of course, is social epistemology (e.g., Goldman 1999; Longino 2002; Solomon 2001). Social epistemologists try to show that social interactions in scientific groups or communities can give rise to a practice that is rational, although perhaps not in exactly the same way as epistemological individualists have imagined it.

Could there be a social account of scientific change for experimental biology? Of course, such an account should not fall back into a theory-first view of science but rather view theories as embedded in some practice. To see how this is possible, it’s best to consider the case of classical genetics.

Classical genetics is said to derive from Mendel’s experiments with pea plants; however, the story is more complex than this. At the beginning of the 20th century, there existed several schools in genetics on both sides of the Atlantic. In England, the biometric school of Karl Pearson and Raphael Weldon was developing a quantitative approach that took Francis Galton’s “Law of ancestral heredity” as its point of departure. The Mendelian William Bateson was leading a rival movement. In the United States, Thomas Hunt Morgan and his associates were constructing the first genetic maps of the fruit fly. For a while, William Castle defended a different approach to genetic mapping, as well as a different theory of the gene. Various approaches were also developed in continental Europe, for example, in the Netherlands (Hugo de Vries), Germany (Carl Correns) and Austria (Erich Tschermak). The American school of Thomas Hunt Morgan largely carried the day. Its approach to mapping genes as well as its (ever changing) theory of the gene became widely accepted in the 1930s and was officially incorporated into the Evolutionary Synthesis of the 1930s and 40s (Mayr 1982; Provine 1971).

A traditional way for a philosopher of science to look at this case would be to consider the salient theories and to ask: What evidence compelled scientists to adopt Morgan’s theory? However, this may not be the right question to ask, for it makes the tacit assumption that theories and experimental evidence can be separated from each other in genetics. But it can be argued that the two depend too strongly on each other to be considered separate entities. Classical genetics, in all its forms, was first and foremost a way of doing crossing experiments with various model organisms and of arranging the results of these experiments in a certain way, for example, in linear genetic maps. To be sure, there were also certain ideas about the nature of the gene, some of them highly speculative, others well established by experiments (for example, the linear arrangement of genes on the chromosome, see Weber 1998b). But what is sometimes referred to as “the laws of transmission genetics” or simply “Mendel’s laws” was part and parcel of a certain way of doing genetics. Thus, theory is not separable from experimental practice (Waters 2008). This, of course, cuts both ways: Experimental data in genetics are as “theory-laden” as they are in other sciences; in other words, the analysis and interpretation of experimental data require theories.

For our current issue of scientific change, what this means is that theory and experimental evidence were selected together, not the former on the basis of the latter (as a naive empiricist account would have it).

On what grounds was this selection made? And who made it? A simple but perhaps incomplete explanation is that the Morgan approach to genetics was selected because it was the most fruitful. A skilled geneticist who adopted the Morgan way was almost guaranteed to come up with publishable results, mostly in the form of genetic maps, and perhaps also some interesting observations such as mutations that behaved oddly in genetic crosses. By contrast, the alternative approaches that were still around in the early 20th century did not prove to be as fruitful. That is, they did not produce a comparable number of results that could be deemed a success according to these sciences’ own standards. What is more, the alternative genetic approaches were not adaptable to other scientific disciplines such as evolutionary biology. Classical genetics thus out-competed the other approaches by its sheer productivity, which was manifested by published research papers, successful grant applications, successful students, and so on. It was therefore the scientific community as a whole that selected the new genetics due to its fruitfulness. At no point was there any weighing of evidence for or against the theoretical framework in question on the part of individual scientists, or at least this played no role (of course, there was weighing of evidence for more specific claims made within the same framework). Theories in experimental biology are thus selected as parts of experimental practices (or experimental systems, see §3.2), and always at the community level. This is what a social epistemological account of scientific change in experimental biology might look like (Weber 2011).

The image of the rise of classical genetics just drawn is reminiscent of Thomas Kuhn’s philosophy of science (Kuhn 1970), or of David Hull’s account of the rise of cladistic taxonomy (Hull 1988). It is controversial whether such an image is compatible with a broadly rational construal of scientific practice. Kuhn thought that there is no external standard of rationality to which we could appeal in order to answer this question, but he also held that science is the very epitome of rationality (Kuhn 1971, 143f.). According to Kuhn, there is an emergent social rationality inherent in modern science that cannot be construed as the exercise of individual rational faculties. Hull (1988) seems to take a similar view.

5. Experimental Artifacts and Data Reliability

5.1 Robustness

Under what conditions should scientists trust experimental data that support some theoretical conclusion, for example, that DNA has a helical structure or that mitochondria are bounded by two membranes? This is known as the problem of “data reliability”. Unreliable data are commonly referred to as “experimental artifacts” in biology, indicating their status as being “made” by the experimental procedure. This is somewhat misleading, as all data, whether reliable or artifactual, are made by the experimental procedure. The difference is that reliable data are correct representations of an underlying reality, whereas so-called artifacts are incorrect representations. This characterization assumes that data have some sort of representational content: they represent an object as instantiating some property or properties (see Van Fraassen 2008, Ch. 6–7). The question of what it means for data to represent their object correctly has not been much discussed in this context; so far, the focus has been mostly on various strategies for checking data for reliability, which will be discussed below. Scientists themselves tend to place this problem under the rubric of replicability. While replicability is also an important topic, the fact that some data are replicable is not sufficient for their reliability. There are many well-known examples of perfectly replicable data that turned out to be unreliable, e.g., the case of the mesosome discussed below.

One of the more influential ideas in philosophy of science is that of robustness. Originally, the term was introduced to denote a certain property of theoretical models, namely insensitivity to modeling assumptions. A robust result in theoretical modeling is a result that is invariant with respect to variations in modeling assumptions (Levins 1966; Wimsatt 1981; Weisberg 2006). In experimental science, the term means something else, namely that a result is invariant with respect to the use of different experimental methods. Robust experimental results are results that agree even though they have been produced by independent methods. The independence of methods can mean at least two things. First, the methods may use different physical processes. For example, a light microscope and a (transmission) electron microscope are independent methods in this sense: the former uses visible light, the latter an electron beam to gather information about biological structures. The second sense of independence concerns the theoretical assumptions used to analyze and interpret the data (most data are theory-dependent). If these assumptions differ between the two methods, the methods are also referred to as independent, or as independently theory-dependent (Culp 1995). These two senses of “independent” usually coincide, although there could be exceptions.

A much-discussed example of a robust experimental result from the physical sciences is the numerical value obtained for Avogadro’s number (6.022 × 10²³) at the beginning of the 20th century by no fewer than thirteen independent methods, according to the physicist Jean Perrin (Nye 1972). Salmon (1984) has argued that this provided a strong justification for belief in the existence of atoms by way of a common-cause argument: on Salmon’s view, the existence of atoms can be inferred as the common cause of Perrin’s thirteen different determinations of Avogadro’s number. Others have construed robustness arguments more along the lines of Putnam’s “no miracle” argument for scientific realism, according to which the assumption that some theoretical entities are real is the best explanation for the predictive success of theories or, in the case of robustness, for the agreement of experimental results (Weber 2005, Ch. 8; Stegenga 2009).

Various authors have tried to show that robustness-based reasoning plays an important role in judgments about data reliability in experimental biology. For example, Weber (1998b) argues that the coincidence of genetic maps of Drosophila produced by different mapping techniques provided an important argument for the fidelity of these maps in the 1930s. Culp (1995) argued that robustness provides a way out of a methodological conundrum known as “data-technique circles”, a more apt designation for Collins’ “experimenter’s regress”. According to Collins (1985), scientists must trust data on the grounds that they were produced by a reliable instrument, but they can only judge an instrument to be reliable on the basis of its producing the right kind of data. On Culp’s view, if the data obtained by independent methods agree, then this supports both the reliability of these data and that of the instruments that produced them. She attempts to demonstrate this using the example of different methods of DNA sequencing.

Stegenga (2009) criticizes the robustness approach. On his view, what he calls “multi-modal evidence”, i.e., evidence obtained by different techniques, is often discordant, and when it is concordant, it is difficult to judge whether the different methods are really independent. However, Stegenga (2009) does not discuss Culp’s (1995) example of DNA sequencing, which seems to be a case where evidence obtained by different sequencing techniques is often concordant. Furthermore, Culp shows that these techniques are independent in the sense that they are based on both different biochemical processes and different theoretical assumptions.

A historical case that has been extensively discussed is that of the hapless mesosome. For more than 20 years (ca. 1960–1983), this was thought to be a membrane-bound structure inside bacterial cells, not unlike the nucleus or the mitochondria of the cells of higher organisms. However, it could only be seen in electron microscopy (bacterial cells being too small for light microscopy). Because electron microscopy requires a vacuum, biological samples require a lot of preparation. Cells must be chemically fixed, which at the time was done mostly using osmium tetroxide. Furthermore, the samples must be protected from the effects of freezing water. For this purpose, cryoprotectants such as glycol must be used. Finally, the material must be cut into extremely thin slices, as an electron beam does not have much penetrating power. Electron microscopists used two different techniques: the first involved embedding the cells in a resin before cutting them with very sharp knives (so-called microtomes). The other technique used fast freezing followed by fracturing the cells along their membranes. At first, mesosomes were seen in electron micrographs under a variety of conditions, but then there were increasing numbers of findings where they failed to show up. Thus, suspicion arose that mesosomes were really a microscopic artifact. Ultimately, this is what microbiologists and electron microscopists concluded by the mid-1980s, after more than two decades of research on these structures.

There are different interpretations of this episode in the literature. One of the oldest is that of Rasmussen (1993), who basically offered a sociological explanation for the rise and fall of the mesosome. This account has been widely criticized (see Rasmussen 2001 for a more recent defense). Others have tried to show that this case exhibits some methodological standard in action. On Culp’s (1994) account, the case demonstrates the relevance of the standard of robustness in actual scientific practice. According to Culp, the mesosome was accepted as a real entity as long as a robust (i.e., diverse and independently theory-dependent) body of data provided signs of its existence. When the tide turned and the evidence against the mesosome became more robust than the evidence in its favor, biologists dropped it.

As this presentation should make clear, Culp’s account requires that robustness come in degrees and, therefore, that there be some way of comparing degrees of robustness. However, no proponent of robustness has yet been able to come up with such a measure. Ultimately, what the case of the mesosome shows is that, in realistic cases, the evidence for some theoretical entity is discordant (Stegenga 2009), meaning that some findings supported the existence of mesosomes whereas others did not. But this means that robustness as a criterion cannot give unequivocal results unless there is some way of saying which body of data is more robust: the body that supported the theoretical entity or the body that did not. Thus, when the evidence is discordant, the criterion of robustness fails to solve the problem of data reliability.

An alternative account of the mesosome story has been offered by Hudson (1999). Hudson argues that considerations of robustness did not play a role in the scientists’ decisions concerning the reality of the mesosome. Had they, in fact, used such reasoning, they would never have believed in mesosomes. Instead, they used a kind of reasoning that Hudson calls “reliable process reasoning”. By this, he basically means that scientists trust experimental data to the extent that they have reasons to believe that the processes that generated these data provide faithful representations of reality. In the case of the mesosome, the evidence from electron microscopy was at first judged reliable. Later, however, it was shown empirically that mesosomes were actually produced by the preparation procedure: various experiments showed that the chemical fixatives used directly damage biological membranes. The mesosomes were thus shown to be artifacts by empirical methods, and considerations of robustness played no role in the electron microscopists’ data reliability judgments.

Like Hudson, Weber (2005, Ch. 9) argues that the demonstration that mesosomes were experimental artifacts was nothing but an ordinary experimental demonstration of a causal relationship, namely between mesosome appearances and the fixation agents. Thus, data reliability judgments may not involve any special kind of reasoning beyond ordinary causal reasoning (see §2.1).

An aspect that has so far been largely neglected in this debate is a different kind of robustness, namely physical robustness. If we compare the case of the mesosome with that of other intracellular organelles, for example, the eukaryotic nucleus or mitochondria, it is notable that mesosomes could never be isolated, whereas the latter organelles can be recovered in more or less intact form from cell extracts, normally by centrifugation. Although it is, of course, entirely possible that the structures so recovered are artifacts of the preparation procedure, there may be additional evidence for their reality. For example, isolated organelles may exhibit telltale enzyme activities that correspond to the organelle’s function. It might be possible to subsume this kind of reasoning under Hacking’s (1983) strategy of taking the ability to intervene on theoretical entities as grounds for believing that they really exist.

5.2 The Vera Causa Ideal

Recent scholarship has defended the traditional ideal of vera causa both as a normatively adequate methodological standard for experimental biology and as a standard to which biologists are actually committed. The ideal requires that scientific explanations of a phenomenon cite true causes, or verae causae. To qualify as a true cause, an entity must satisfy the following criteria (e.g., Hodge 1992). First, it must have been shown to exist. Second, it must be causally competent to produce the phenomenon in question. Third, it must be causally responsible for this phenomenon. Importantly, the evidence for existence must be independent of the evidence for causal competence. Thus, the mere fact that an entity’s purported causal capacities are able to explain a phenomenon does not by itself constitute sufficient evidence for its existence. What this means is that an inference to the best explanation (IBE) of a phenomenon cannot by itself justify acceptance of a postulated entity; it must always be accompanied by independent evidence for existence.

Proponents of the vera causa ideal thus oppose the idea that IBE might serve as a common methodological standard for metaphysics and for science. If metaphysicians rely on IBE to justify the entities they postulate, for example, tropes or substances (as argued by Paul 2012), they do not hold these beliefs to the same methodological standard as scientists do (Novick forthcoming). For scientists are not content with IBE-type arguments; they require something more before they accept theoretical entities.