Supplement to Temporal Consciousness

Husserl, the Brain and Cognitive Science

- 1. Varela and van Gelder: retention without representation

- 2. Lloyd: connectionist retentions

- 3. Grush: a trajectory estimation model

Husserl’s tripartite conception of the (Retentionalist) specious present has been influential, and in recent years there have been a number of attempts to incorporate his insights into neurobiology and cognitive science.

1. Varela and van Gelder: retention without representation

Seeking to build bridges between the cerebral and the phenomenal, Varela has proposed that there may be important analogies between the dynamical behaviour of neural cell-assemblies and Husserl’s tripartite conception of the phenomenal present. More specifically, he suggests that these neuronal ensembles can become synchronized for periods lasting around 1 second, and that these transient periods of synchrony are the neural correlates of present-time consciousness (1999: 119). Husserl himself did not suggest that the 1-second time-scale is privileged in any way, and it is not immediately apparent what Varela’s neuronal ensembles might have in common with time-consciousness as described by Husserl. Matters become clearer with Varela’s claim that retentions may be ‘dynamical trajectories’, although this claim takes a little unpacking. (See Thompson 2007, chapter 11, for a sympathetic exposition.)

Neural networks are often assumed to be instances of the sort of chaotic (or non-linear) systems whose behaviour has come under increasing scrutiny in recent decades. Although such systems can react in difficult-to-predict ways to the smallest of stimuli, they often have a certain number of favoured states – states they are more likely to enter – and these correspond to the ‘attractors’ in the abstract phase spaces used to describe their behaviour. The behaviour of a dynamical system from one moment to the next depends on several factors: the system’s current global state, its tendency to move along certain preferred trajectories through its phase space from any given location, and external influences. So at any given moment, a system’s behavioural dispositions are determined by its precise location in its phase space, and that location is itself a function of its previous locations. For this reason a system’s current phase-space trajectory can be viewed as reflecting its past: ‘in its current point in time a dynamical system has no “representation” of its past. But the past acts into the present … The present state wouldn’t be what it is except for its past, but the past is not actually present … and is not represented’ (Varela 1999: 137). Husserl’s contention that the present contains remnants or retentions of the past can seem puzzling, even paradoxical: how is it possible for experienced succession to be generated by contents that exist only in the momentary present? But if a system’s present state can reflect the recent past without actually or explicitly representing it, the problem of how presently occurring representations can perform the functions Husserl ascribes to them is solved, or at least that is what Varela maintains.[37]
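The idea that ‘the past acts into the present’ without being represented can be illustrated with a toy dynamical system. The sketch below assumes a one-dimensional bistable system, dx/dt = x − x³ + u(t), which has attractors at x = +1 and x = −1; the equation, step size and input values are our own illustrative choices, not Varela’s. After a brief input has come and gone, the system’s current location still reflects which input it received, although nothing in the state is a record of that input.

```python
def simulate(inputs, x0=0.0, dt=0.05):
    """Euler-integrate the toy bistable system dx/dt = x - x**3 + u."""
    x = x0
    for u in inputs:
        x += dt * (x - x**3 + u)
    return x


# Two histories: a brief push up or a brief push down, then a long silence.
push_up = [1.0] * 20 + [0.0] * 400
push_down = [-1.0] * 20 + [0.0] * 400

x_up = simulate(push_up)      # settles near the attractor at +1
x_down = simulate(push_down)  # settles near the attractor at -1
print(round(x_up, 3), round(x_down, 3))
```

The final 400 inputs are identical in both runs, so the only thing distinguishing the two end states is where the earlier history left the system in its phase space.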

Van Gelder is another advocate of this geometricization of retention. Commenting on how an artificial neural net can be taught to recognize sounds, he writes:

How is the past built in? By virtue of the fact that the current position of the system is the culmination of a trajectory which is determined by the particular auditory pattern (type) as it was presented up to that point. In other words, retention is a geometric property of dynamical systems: the particular location the system occupies in the space of possible states when in the process of recognizing the temporal object. It is that location, in its difference with other locations, which “stores” in the system the exact way in which the auditory pattern unfolded in the past. It is how the system “remembers” where it came from. (1997: §38)
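Van Gelder’s claim that the system’s location ‘stores’ how the pattern unfolded can be sketched, under simplifying assumptions of our own, with a two-unit linear leaky integrator. The input encoding (two channels), the decay factor and the three ‘sounds’ A, B and C are hypothetical. Two sequences that contain the same sounds and end with the same sample, but differ in order, leave the system at different points in its state space.

```python
import math


def run(sequence, decay=0.5):
    """Drive a 2-unit leaky integrator with a sequence of inputs; return its final state."""
    state = [0.0, 0.0]
    for sample in sequence:
        # Each unit keeps a decayed trace of its previous value and adds the new input.
        state = [decay * s + x for s, x in zip(state, sample)]
    return state


A, B, C = (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)
s1 = run([A, B, C])  # pattern A-B-C -> [1.25, 1.5]
s2 = run([B, A, C])  # same sounds, different order, same last sample -> [1.5, 1.25]
print(s1, s2, math.dist(s1, s2))
```

Neither final state lists the inputs that produced it; the difference between the two histories survives only as a difference in position, which is the sense of ‘geometric’ retention in the passage above.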

Van Gelder goes on to outline a number of other respects in which the workings of dynamical (in this case, connectionist) systems correspond to what Husserl had to say about the structure of temporal awareness. While these analogies are certainly noteworthy, there is a significant difficulty with the central claim. Dispensing with anything resembling an explicit representation of a system’s prior states in its present state may make life easier, but for Husserl the whole mystery or ‘wonder’ of time-consciousness consists in the way the past lives on in the present. Van Gelder recognizes that there is nothing resembling a ‘perception’ of the past in his model, but he presents this as a virtue: he suggests that Husserl’s talk of perceptions of the past is confused and unnecessary, and that Husserl only used such formulations because ‘he had no better model of how something could “directly intend” the past’ (1997: §40). Whether Husserl would have agreed with this verdict is debatable.

2. Lloyd: connectionist retentions

Lloyd is sympathetic to the overall approach taken by van Gelder and Varela, but he finds something lacking:

Husserl’s challenge to us is not to show how a difference in the real past of a system can influence its present state – the actual past is excluded along with the rest of the objective world at the phenomenological get-go. Rather, Husserlian retention is the presence (now) of an apparent past experience, the prior Now in all its richness. So if a distributed pattern of activity is the network Now, then retention is achieved only if that pattern is inflected by its own prior Now-state, and not just some aspect of the prior now, but all of it. (2004: 342)

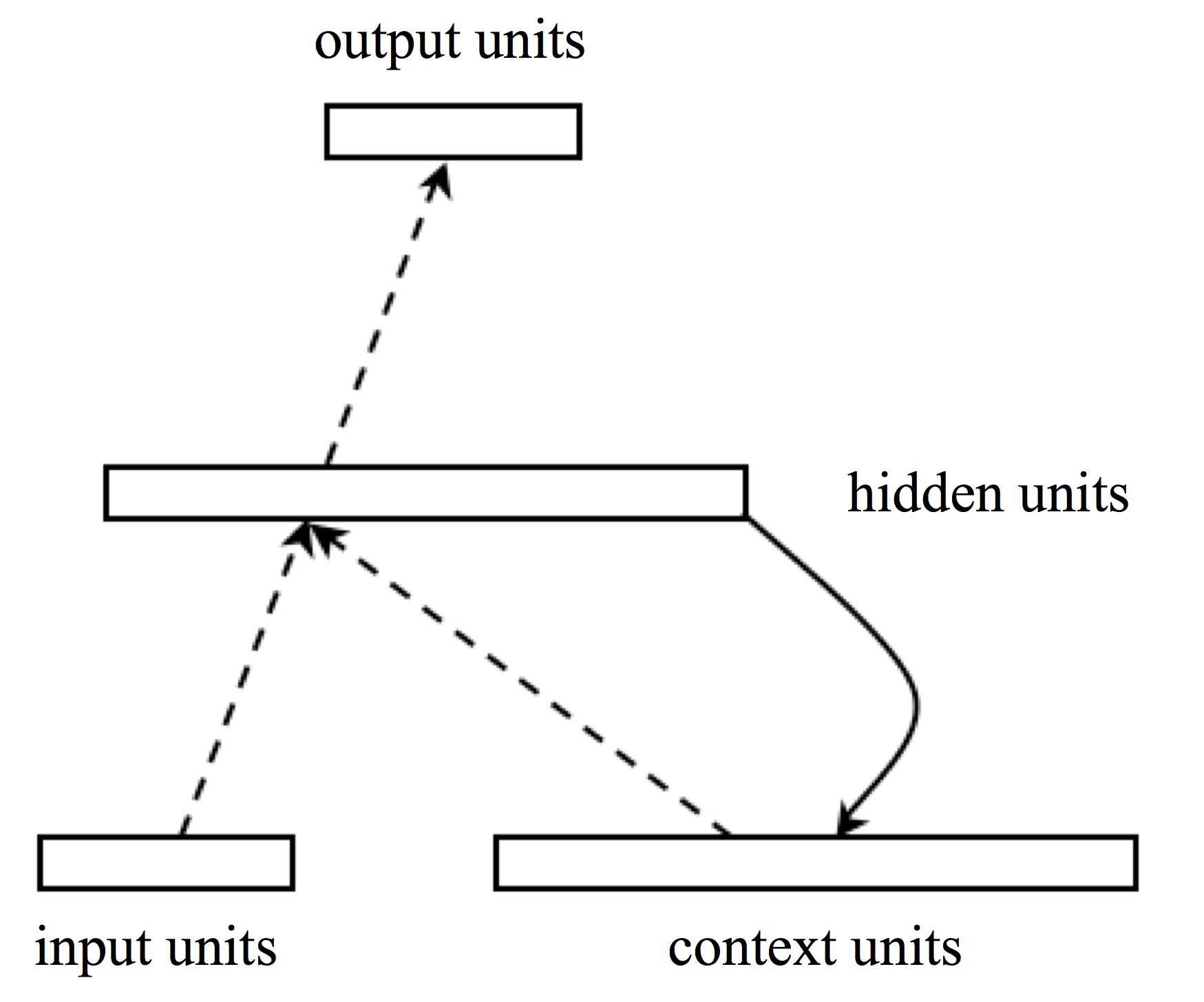

Artificial neural nets can differ in their fundamental architecture. A very simple feed-forward system will contain an input layer of processing units, a single hidden layer (where the computation or processing takes place) and an output layer. In each cycle of activity, the units in each layer can be influenced only by units in lower layers; they cannot be influenced from above, i.e. by units closer to the output layer. Since the hidden layer’s state is significantly changed by each new cycle of inputs, it has no way of retaining detailed information about its own prior state, and so is ill-suited to serve as an analogue of a Husserlian ‘now’. Far more promising candidates, Lloyd suggests (2004: 282), are the simple recurrent networks described by Elman (1990). These comprise layers of input units, output units and hidden units as usual. They also, however, include a layer of ‘context units’ whose function is to record the exact state of the hidden units and feed this information back to the hidden units in the next cycle of processing. (See Figure 23 for a schematic depiction.) The processing layer is thus simultaneously presented with the new input stimuli and detailed information about its own prior state.
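The copy-back step that distinguishes Elman’s simple recurrent network from a feed-forward net can be sketched as follows. This is a forward pass only: the layer sizes, the random placeholder weights and the logistic activation are assumptions for illustration, and no training is shown. Two copies of the same network receive the same current input, but only one has a prior input in its history.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def random_matrix(rng, rows, cols):
    """Placeholder weights drawn uniformly from [-1, 1]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


class SimpleRecurrentNet:
    """Forward pass of an Elman-style simple recurrent network (no learning)."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        self.w_in = random_matrix(rng, n_hidden, n_in)       # input -> hidden
        self.w_ctx = random_matrix(rng, n_hidden, n_hidden)  # context -> hidden
        self.w_out = random_matrix(rng, n_out, n_hidden)     # hidden -> output
        self.context = [0.0] * n_hidden                      # empty before the first cycle

    def step(self, x):
        # Each hidden unit combines the new input with the copy of the prior hidden state.
        hidden = [
            sigmoid(sum(w * xi for w, xi in zip(row_in, x))
                    + sum(w * c for w, c in zip(row_ctx, self.context)))
            for row_in, row_ctx in zip(self.w_in, self.w_ctx)
        ]
        output = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in self.w_out]
        # One-for-one copy: the context layer now holds this cycle's hidden state.
        self.context = list(hidden)
        return hidden, output


# Same weights, different histories, identical current input.
primed = SimpleRecurrentNet(n_in=2, n_hidden=4, n_out=2, seed=1)
primed.step([1.0, 0.0])
h_primed, _ = primed.step([0.0, 0.0])

fresh = SimpleRecurrentNet(n_in=2, n_hidden=4, n_out=2, seed=1)
h_fresh, _ = fresh.step([0.0, 0.0])
print(h_primed != h_fresh)  # the earlier input still shapes the hidden layer
```

Deleting the `w_ctx` term would turn this into a feed-forward net, in which `h_primed` and `h_fresh` would necessarily coincide.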

Figure 23. A simple recurrent network in which activations are copied from hidden layer to context layer on a one-for-one basis (Elman 1990: 184).

Because this ‘echo dates from one cycle ago, the next internal state can enfold both present and past information. Then that new internal state, combining present and just prior information, itself recycles … In principle, this neuronal hall of mirrors can keep a pattern alive indefinitely.’ (Lloyd 2004: 282). In his own research Lloyd exposed a network of this sort – dubbed ‘CNVnet’ – to a large number of (suitably encoded) ‘beeps’ and ‘boops’. These signals followed a fixed pattern: beeps came at random, but were invariably followed by a boop after a short, fixed interval. With appropriate tweaking of connection weights, the network eventually had no difficulty whatsoever in predicting the timing of a boop after any given beep. Thanks to the combination of recursive and predictive capacities, CNVnet’s hidden units can reflect both the past and the future, in addition to the current cycle of inputs.
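Lloyd’s CNVnet learned its weights; the sketch below does not. Assuming a hypothetical encoding (1 for a beep, 0 otherwise) and an invented beep-to-boop lag of three cycles, a recurrent layer hand-wired as a shift register carries each beep forward one unit per cycle. Its hidden state therefore holds the present input together with a trace of the recent past, which is enough to anticipate the next boop.

```python
import random

LAG = 3  # hypothetical fixed delay (in cycles) from a beep to its boop


def make_stream(n, p_beep=0.1, seed=0):
    """Random beeps; each one is followed by a boop exactly LAG cycles later."""
    rng = random.Random(seed)
    beeps = [1 if rng.random() < p_beep else 0 for _ in range(n)]
    boops = [0] * n
    for t, b in enumerate(beeps):
        if b and t + LAG < n:
            boops[t + LAG] = 1
    return beeps, boops


def predict_boops(beeps):
    """Hand-wired recurrent layer: after cycle t, hidden[i] holds the beep from cycle t - i."""
    hidden = [0] * LAG
    predictions = []
    for beep in beeps:
        # Recurrent shift: each unit takes over its neighbour's previous value.
        hidden = [beep] + hidden[:-1]
        # The oldest unit is active exactly one cycle before a boop is due.
        predictions.append(hidden[-1])
    return predictions  # predictions[t] forecasts boops[t + 1]


beeps, boops = make_stream(500)
predictions = predict_boops(beeps)
```

A trained network such as CNVnet has no such tidy delay line; it must discover, through weight adjustment, which aspects of its prior state are worth keeping.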

Lloyd does not claim that his simple networks are actually conscious, but he does see points of similarity with Husserl’s tripartite analysis of temporal awareness. Taking things a step further, he set artificial networks the task of analyzing each other. He created a metanet, tuned to respond to the hidden units of CNVnet. After the usual training (several million cycles), the metanet proved able to extract three sorts of information from the hidden units: the current input, the next output, and (with less accuracy) a record of the layer’s entire past state. Might these not reasonably be taken to correspond to Husserl’s primal impressions, protentions and retentions? Moving from artificial to real brains, Lloyd (2002, 2004: ch. 4) argues that it might prove possible to find some confirming evidence for Husserl’s analysis in data on the global condition of conscious brains, derived from fMRI scans using sophisticated multidimensional scaling methods. Simplifying a good deal, his working hypothesis is that if our consciousness at any one moment contains a detailed representation of its immediately prior states, this should be reflected in similarities between the conditions of our brain over the relevant short periods: ‘images taken close together in time should be more similar, compared to images separated by greater temporal intervals’ (2004: 313). And, broadly speaking, this is what he found. To round things off, he fed the fMRI data to an artificial neural net. His aim, as with CNVnet, was to find out whether the metanet could extract information about a brain’s prior states from a description of its later states. Again the results were positive: ‘Overall, the analysis suggests that patterns of activation in the human brain encode past patterns of activation, and particularly the immediate past’ (2004: 327).
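The working hypothesis that images close together in time should be more similar suggests a simple analysis: average the distance between pairs of brain-state vectors as a function of their separation in time. The sketch below runs that analysis on synthetic data, a slowly drifting random vector standing in for a sequence of scans. It is not Lloyd’s multidimensional-scaling pipeline and uses no real fMRI data; the dimension, step size and lags are arbitrary.

```python
import math
import random


def drifting_states(n_steps, dim=20, step_sd=1.0, seed=0):
    """Synthetic 'scans': each state is the previous one plus a small random drift."""
    rng = random.Random(seed)
    state = [0.0] * dim
    states = []
    for _ in range(n_steps):
        state = [s + rng.gauss(0.0, step_sd) for s in state]
        states.append(state)
    return states


def mean_distance_at_lag(states, lag):
    """Average Euclidean distance between states that are `lag` steps apart."""
    dists = [math.dist(a, b) for a, b in zip(states, states[lag:])]
    return sum(dists) / len(dists)


states = drifting_states(500)
profile = {lag: mean_distance_at_lag(states, lag) for lag in (1, 5, 20)}
for lag, d in profile.items():
    print(lag, round(d, 2))
```

For a pure random walk, distance grows roughly with the square root of the lag, so the predicted pattern appears by construction here. That is a reminder that such a profile needs to be compared against a suitable baseline before it is read as evidence about retention.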

3. Grush: a trajectory estimation model

In a series of recent papers Grush moves in a rather different and, computationally speaking, more classical direction. Although he too is sympathetic to Husserl’s analysis of the phenomenal present, he is highly critical of the efforts of Varela, van Gelder and Lloyd. He faults a tendency in all three to assume, without sufficient argument, that we can draw conclusions about the temporal properties of experiences (or of properties that are represented in the contents of experiences) from facts pertaining to the ‘vehicles’ of these experiences, e.g., the neural processes which underlie them (2006: 422). From the fact that a global brain state bears traces of its recent past, it does not automatically follow that any experiences produced by this brain also bear traces of their past. Consequently, before we can legitimately conclude that such a discovery about the brain vindicates the Husserlian conception, a compelling story needs to be told as to how or why the temporal features at the neural level transfer to the phenomenal level.[38] There are also more specific complaints. Lloyd’s fMRI results, Grush argues, may well be nothing more than an artefact of the way the data were processed. As for the geometricization of retention advocated by Varela and van Gelder, if the analysis were correct ‘every physical system in the universe would exhibit Husserlian retention’ (2006: 425).[39]

Grush’s positive proposal comes in the form of the ‘trajectory estimation model’, which draws on ideas and analyses from control theory and signal processing – for further details, see Grush (2005a, 2005b). The core idea is that a system (e.g. a brain) has:

an internal model of the perceived entity (typically the environment and entities in it, but perhaps also the body), and at each time t, the state of the internal model embodies an estimate of the state of the perceived domain. The model can be run off-line in order to produce expectations of what the modelled domain might do in this or that circumstances (or if this or that action were taken by the agent); the model can also be run online, in parallel with the modelled domain, in order to help filter noise from sensory signals, and in order to overcome potential problems with feedback delays. (Grush 2006: §4)
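One standard tool from the control-theory and signal-processing literature that such models draw on is the Kalman filter. The scalar sketch below assumes a hidden quantity that drifts as a random walk and is observed through noisy sensors (all noise levels are invented). It runs the model online, in parallel with the data, to filter sensory noise, and off-line to produce expectations with no sensory input. It illustrates the general technique, not Grush’s own formulation.

```python
import random


def kalman_filter(observations, process_var=0.01, sensor_var=1.0):
    """Online use: estimate a drifting quantity from noisy readings."""
    estimate, variance = 0.0, 1.0  # prior belief before any data arrive
    estimates = []
    for z in observations:
        # Predict: the model expects no systematic change, only random drift.
        variance += process_var
        # Update: weight the observation by its reliability relative to the model.
        gain = variance / (variance + sensor_var)
        estimate += gain * (z - estimate)
        variance *= 1.0 - gain
        estimates.append(estimate)
    return estimates, variance


def run_offline(estimate, variance, steps, process_var=0.01):
    """Off-line use: project the model forward with no sensory input.

    Under a random-walk model the expected value stays put,
    while the uncertainty grows with every step.
    """
    return [(estimate, variance + process_var * k) for k in range(1, steps + 1)]


# Synthetic world: a quantity drifting slowly from 5.0, seen through noisy sensors.
rng = random.Random(0)
truth, x = [], 5.0
for _ in range(200):
    x += rng.gauss(0.0, 0.1)
    truth.append(x)
noisy = [t + rng.gauss(0.0, 1.0) for t in truth]

estimates, final_var = kalman_filter(noisy)
expectations = run_offline(estimates[-1], final_var, steps=5)
```

Once the filter has settled, its estimates track the true value far more closely than the raw readings do, because each reading is blended with the model’s own prediction.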

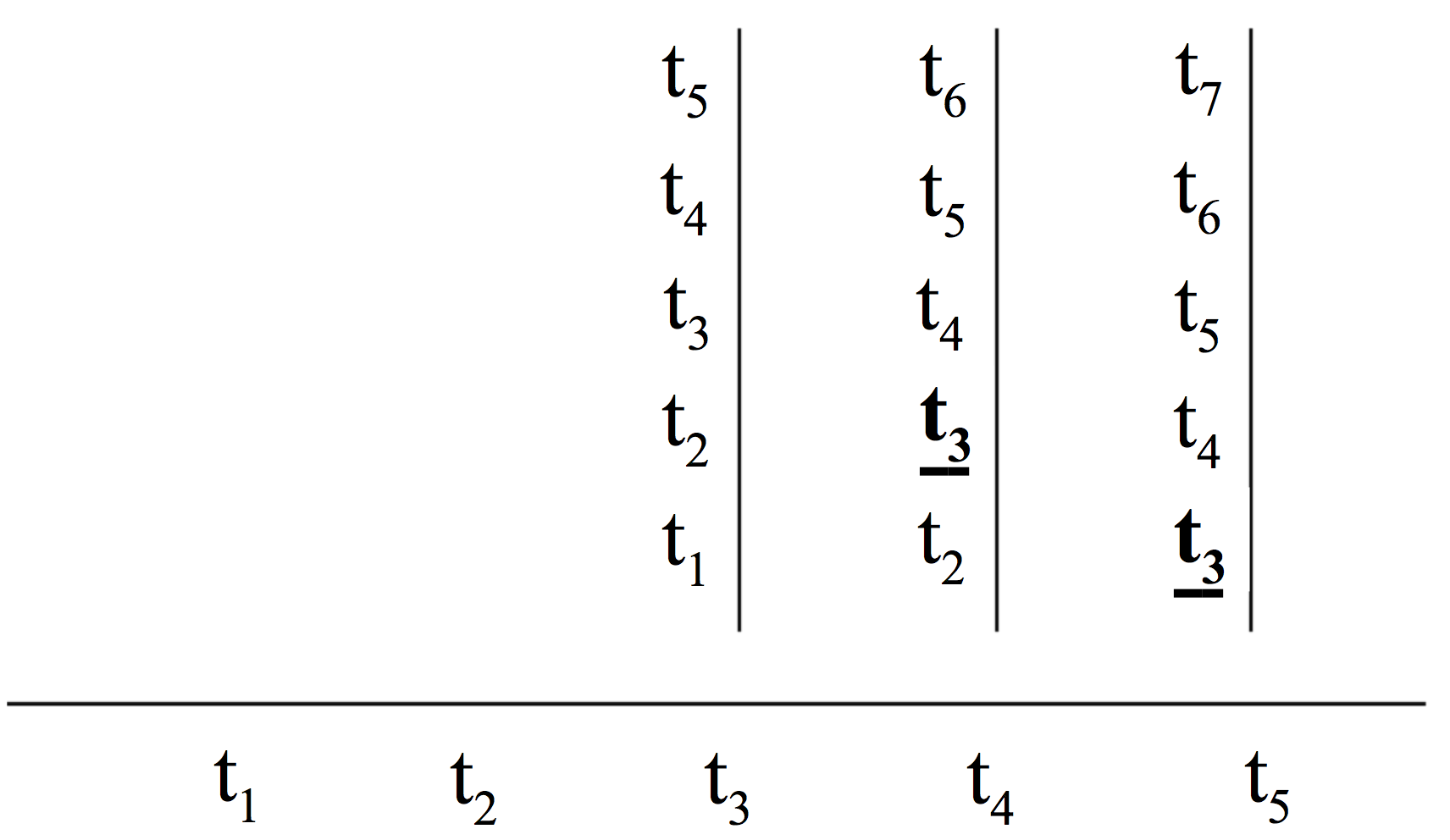

So far as analogies with Husserl’s doctrines are concerned, a key point is that the internal model employed by these systems is not confined to modelling instantaneous states of the relevant domain. What is modelled, rather, is the trajectory of the domain over a short interval of time, i.e., the entire succession of states that the system estimates the domain is most likely to have been in during the relevant interval. It is this trajectory – which is a detailed representation, and so not to be confused with the phase-space vectors of van Gelder and Varela – that Grush takes to be the information-processing analogue of Husserl’s tripartite specious present. When construed in experiential terms, an (online, parallel) trajectory is a subject’s course of experience over a brief interval, in the manner indicated in Figure 24 below. At each of three distinct times t3, t4, t5 a distinct trajectory is modelled, ranging over t1–t5, t2–t6 and t3–t7 respectively. Within these trajectories, ‘t3’ denotes what the system is representing as happening at t3, ‘t2’ denotes what the system is representing as happening at t2, and so forth. These trajectories extend a short way into the past, but also include projections (or expectations) about the likely future course of experience, and in this manner reflect the protentional aspect of Husserlian specious presents. As for the temporal scope of these retentions and protentions, Grush suggests that around 100 msec in either direction is a plausible estimate. As with previous diagrams of this sort, for the sake of clarity only a small sample of the actual representations is depicted; in reality the representations are produced continually, generating a gap-free continuum of trajectories.
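The idea that each momentary state of the model represents an interval rather than an instant can be sketched as a data structure: at every time step the system produces a whole trajectory, covering a few steps into the past (the retentional part) and a few into the future (the protentional part). As a hypothetical stand-in for Grush’s estimator, each trajectory below comes from a straight line fitted by least squares to the most recent observations; the window sizes (two steps each way, four observations of memory) and the position data are arbitrary.

```python
def fit_line(ts, ys):
    """Ordinary least-squares fit of y = a + b*t."""
    n = len(ts)
    mt, my = sum(ts) / n, sum(ys) / n
    sxx = sum((t - mt) ** 2 for t in ts)
    b = 0.0 if sxx == 0 else sum((t - mt) * (y - my) for t, y in zip(ts, ys)) / sxx
    return my - b * mt, b


def trajectory_at(now, observations, past=2, future=2, memory=4):
    """Trajectory over [now - past, now + future], computed at time `now`.

    Only observations up to `now` are used; later times are projections.
    """
    ts = list(range(max(0, now - memory + 1), now + 1))
    a, b = fit_line(ts, [observations[t] for t in ts])
    return {t: a + b * t for t in range(now - past, now + future + 1)}


positions = [0.0, 1.1, 1.9, 3.2, 4.0, 4.9, 6.1]  # noisy samples of steady motion
for now in (3, 4, 5):
    window = trajectory_at(now, positions)
    print(now, {t: round(v, 2) for t, v in window.items()})
```

Successive windows overlap, and nothing forces them to agree about the times they share, since each is computed from a different body of evidence.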

Figure 24.

Leaving aside their predictive capabilities, the trajectories (or specious presents) that Grush’s systems produce from one moment to the next are not simply passive reflections of current and past sensory inputs. When producing their internal models of their environments, many control systems – e.g., those used as navigational aids on ships – have to rely on external sensors which supply partial, fragmentary or less than entirely accurate information about what is going on around them. Our brains are typical in this respect: the signals they receive from our eyes, ears and skin are very difficult indeed to interpret. To help circumvent these obstacles, engineers build a variety of sub-systems into control systems whose task is to make the best of the imperfect information available. For example, these sub-systems will smooth out irregularities that are likely to be due to imperfect sensory data rather than sudden and dramatic alterations in the external environment, or fill in holes or gaps by extrapolating from the available data in the most plausible way. To accomplish this, the relevant sub-systems will often themselves possess internal models of likely courses of events and likely external environments. In effect, in modelling current trajectories, these control systems rely on models they already possess. As a consequence of this active or interpretive stance, there is no guarantee that the trajectories generated by a system will remain constant or consistent over time. Since each trajectory represents a temporal interval, it is quite possible that later trajectories will rewrite or supersede earlier ones, simply because the relevant control systems have more (and perhaps better) information to work with. This ‘re-writing of the past’ is illustrated schematically in the diagram above: what the system is representing as happening at t3 undergoes a change between t3 and t4 (the change is signified by the switch to bold font and underlining), and, as can be seen, the revised version of t3 is preserved at t5.
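The ‘re-writing of the past’ can be reproduced with very simple machinery. Assume, hypothetically, that the system’s estimate of what happened at a given time is the plain average of the sensory samples within one step of that time that have arrived so far. The estimate for t3 made at t3 can use only samples 2 and 3; at t4 a new relevant sample arrives and the estimate for t3 is revised; at t5 nothing new within reach of t3 arrives, so the revised value is preserved.

```python
def estimate(time, now, samples, radius=1):
    """Estimate of the state at `time`, using only samples available at `now`."""
    usable = [samples[t] for t in range(time - radius, time + radius + 1)
              if 0 <= t <= now and t < len(samples)]
    return sum(usable) / len(usable)


samples = [0.0, 0.0, 0.0, 0.0, 3.0, 3.0]  # the sensory signal jumps at t4
for now in (3, 4, 5):
    print(f"at t{now}, t3 is represented as {estimate(3, now, samples):.2f}")
# at t3, t3 is represented as 0.00
# at t4, t3 is represented as 1.00
# at t5, t3 is represented as 1.00
```

Practical smoothers, such as the Rauch-Tung-Striebel smoother used alongside Kalman filters, weight each sample by its estimated reliability instead of averaging equally, but the qualitative effect, later evidence revising earlier estimates, is the same.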

Grush argues that the ability of his systems to generate different (and incompatible) trajectories at different times is a positive boon, for it allows his model to accommodate a variety of ‘temporal illusions’. One such is the cutaneous rabbit described by Geldard & Sherrick (1972). In the experiment, devices capable of delivering brief (2 msec) controlled pulses to the skin were fitted along subjects’ arms. Surprisingly, when the devices were clustered in just three tight configurations – at the wrist, at the elbow and in between – and five pulses were delivered to each location, subjects did not experience three tight clusters of pulses (one at the wrist, one at the elbow and one in between); instead they reported feeling a succession of evenly spaced pulses starting at the wrist and terminating at the elbow (and often seeming to continue a bit further). Several questions can be asked about this, but perhaps the most puzzling is: what is happening at the time of the second pulse? Although this pulse actually occurs at the wrist, it is felt further along the arm; yet at the time the second pulse occurs, the brain cannot yet know that pulses further along the arm will be delivered. A similar problem is posed by the colour phi phenomenon (Kolers & von Grünau 1976, Dennett 1991: 114–123). When two differently coloured spots were flashed on and off for 150 msec each, with a 50 msec gap, subjects reported not only seeing a single spot moving back and forth (rather than two distinct spots flashing on and off), but also seeing the spot suddenly change colour midway along its path. Evidently, at some level the brain is ‘deciding’ that it is more likely to be confronted with a single spot of light that is both in motion and changing its colour than with two flashing lights of different colours. But how is the brain able to impose this interpretation of events on experience before the second flash even occurs?

Grush’s trajectory model has no difficulty explaining what is going on in such cases. In the case of the rabbit, the second pulse is initially represented as occurring at the wrist. But as the brain updates its models in the light of subsequent sensory information and its own expectations as to the likely scenario confronting it, it alters its verdict and starts representing the second pulse as occurring further along the arm, as part of an evenly spaced succession. And when subjects are subsequently queried as to what they experienced, it is the later representations (or trajectories) which get reported – the earlier representations are not remembered. The colour phi phenomenon is susceptible to an analogous interpretation and explanation: the earliest trajectories do not represent motion (or, a fortiori, a mid-path change of colour), but subsequent ones do, and it is these which are reported.[40]
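A deliberately crude sketch of this explanation of the rabbit assumes, hypothetically, that once all the pulses have arrived the later trajectory imposes the simplest constant-velocity interpretation: evenly spaced pulses running from the first site to the last. Positions run from 0 (wrist) to 1 (elbow); the pulse counts follow the experiment as described, with five pulses at each of three sites, and everything else is invented.

```python
def early_representation(delivered, k):
    """Before later pulses arrive, pulse k is represented where it was delivered."""
    return delivered[k]


def revised_trajectory(delivered):
    """Later trajectory: evenly spaced pulses from the first site to the last."""
    start, end = delivered[0], delivered[-1]
    n = len(delivered)
    return [start + (end - start) * k / (n - 1) for k in range(n)]


# Five pulses each at the wrist (0.0), midway (0.5) and the elbow (1.0).
delivered = [0.0] * 5 + [0.5] * 5 + [1.0] * 5
reported = revised_trajectory(delivered)
print(early_representation(delivered, 1), round(reported[1], 3))  # 0.0 0.071
```

Because this toy pins the endpoints to the actual first and last sites, it cannot reproduce the reported overshoot beyond the elbow; it shows only how a later trajectory can relocate an earlier pulse that an earlier trajectory placed at the wrist.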

Taking a step back, the various attempts to put computational (or even biological) flesh on the bones of Husserl’s Retentional model are certainly intriguing, and Grush makes out a plausible case for supposing that our brains may contain internal modelling (or emulation) systems similar to the ones he describes, at least from the perspective of the sort of information processing they can carry out. But what conclusions should we draw from this? It would certainly be a mistake to conclude, without any further argument, that the existence within our brains of information processing systems along the lines of the trajectory estimation model entails that Husserl’s theory (or some other form of Retentional model) is true. Grush was right to criticize Varela, van Gelder and Lloyd for paying insufficient attention to the distinction between brains and the properties possessed by (or represented in) the experiences brains produce. From the fact that momentary global brain states retain traces of their recent past, we cannot conclude – without further argument – that momentary phases of experience do likewise. A precisely analogous point can be made with regard to information processing models. Suppose our brains do contain (what are in effect) data-structures representing in quite detailed ways their environments (external and bodily) over short intervals. Let us further suppose that these data-structures are embodied in momentary phases of our brains, and are updated on a moment-to-moment basis (or at least at time-scales far shorter than the specious present). Should we conclude that the contents of momentary (or very brief) phases of our streams of consciousness also reflect intervals of time, the same intervals as are represented in the data-structures? Not without a good deal of further argument.
After all, the relationship between the phenomenal and the computational levels is as unclear and disputed as the relationship between the phenomenal and the physical (or neuronal) levels. Some realists about the phenomenal – e.g. Searle – reject any significant link between computation and experience; even those who think there is some significant relationship between information processing and experience may resist the claim that all instances of information processing are associated with experience. It is thus an option for the Extensional theorist to say:

Yes, our brains may well contain continually updated representations (in informational or computational form) of our internal and external environments; it may well be that at any one time it is possible to find in the brain data-structures corresponding to courses of events over brief periods of time; it may also be the case that these data-structures impact on our behaviour. But since we cannot conclude from this that our experience at any one moment embodies the data found in these structures, it may well be that our experience of change and persistence is itself temporally extended, in the way that Extensional models predict.

Until the relationship between matter, information processing and phenomenal consciousness is settled, this response cannot be dismissed.