The Neuroscience of Consciousness

Conscious experience in humans depends on brain activity, so neuroscience will contribute to explaining consciousness. What would it be for neuroscience to explain consciousness? How much progress has neuroscience made in doing so? What challenges does it face? How can it meet those challenges? What is the philosophical significance of its findings? This entry addresses these and related questions.

To bridge the gulf between brain and consciousness, we need neural data, computational and psychological models, and philosophical analysis to identify principles to connect brain activity to conscious experience in an illuminating way. This entry will focus on identifying such principles without shying away from the neural details. The theories and data to be considered will be organized around constructing answers to two questions (see section 1.4 for more precise formulations):

- Generic Consciousness: How might neural properties explain when a state is conscious rather than not?

- Specific Consciousness: How might neural properties explain what the content of a conscious state is?

A challenge for an objective science of consciousness is to dissect an essentially subjective phenomenon. As investigators cannot experience another subject’s conscious states, they rely on the subject’s observable behavior to track consciousness. Priority is given to a subject’s introspective reports as these express the subject’s take on her experience. Introspection thus provides a fundamental way, perhaps the fundamental way, to track consciousness. That said, consciousness pervasively influences human behavior and affects physiological responses, so other forms of behavior and physiological data beyond introspective reports provide a window on consciousness. How to leverage disparate evidence is a central issue.

The term “neuroscience” covers those scientific fields whose explanations advert to the properties of neurons, populations of neurons, or larger parts of the nervous system.[1] This includes, but is not limited to, psychologists’ and cognitive neuroscientists’ use of various neuroimaging methods to monitor the activity of tens of millions of neurons, computational theorists’ modeling of biological and artificial neural networks, neuroscientists’ use of electrodes inserted into brain tissue to record neural activity from individual neurons or populations of neurons, and clinicians’ study of patients with altered conscious experiences in light of damage to brain areas. Given the breadth of neuroscience so conceived, this review focuses mostly on cortical activity that sustains perceptual consciousness, with emphasis on vision. This is not because visual consciousness is more important than other forms of consciousness. Rather, the level of detail in empirical work on vision often speaks more comprehensively to the issues that we shall confront.

That said, there are many forms of consciousness that we will not discuss. Some are covered in other entries such as split-brain phenomena (see the entry on the unity of consciousness, section 4.1.1), animal consciousness (see the entry on animal consciousness), and neural correlates of the will and agency (see the entry on agency, section 5). In addition, this entry will not discuss the neuroscience of consciousness in audition, olfaction, or gustation; disturbed consciousness in mental disorders such as schizophrenia; conscious aspects of pleasure, pain and the emotions; the phenomenology of thought; the neural basis of dreams; and modulations of consciousness during sleep and anesthesia among other issues. These are important topics, and the principles and approaches highlighted in this discussion will apply to many of these domains.

- 1. Fundamentals

- 2. Methods for Tracking Consciousness

- 3. Neurobiological Theories of Consciousness

- 4. Neuroscience of Generic Consciousness: Unconscious Vision as Case Study

- 5. Specific Consciousness

- 6. The Future

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. Fundamentals

1.1 A Map of the Brain

The brain can be divided into the cerebral cortex and the subcortex. The cortex is divided into two hemispheres, left and right, each of which can be divided into four lobes: frontal, parietal, temporal and occipital.

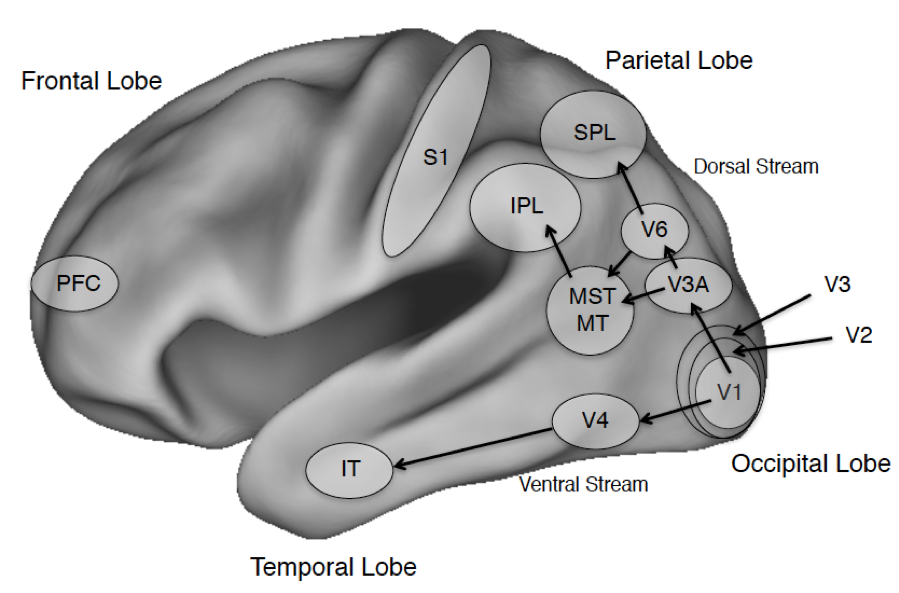

Figure 1. The Cerebral Cortex and Salient Areas

Figure Legend: The four lobes of the primate brain, shown for the left hemisphere. Some areas of interest are highlighted. Abbreviations: PFC: prefrontal cortex; IT: inferotemporal cortex; S1: primary somatosensory cortex; IPL and SPL: Inferior and Superior Parietal Lobule; MST: medial superior temporal visual area; MT: middle temporal visual area (also called V5 in humans); V1: primary visual cortex; V2-V6 consist of additional visual areas.

The discussion that follows will highlight specific areas of cortex including the prefrontal cortex that will figure in discussions of confidence (section 2.3), the global neuronal workspace (section 3.1) and higher order theories (section 3.3); the dorsal visual stream that projects into parietal cortex and the ventral visual stream that projects into temporal cortex including visual areas specialized for processing places, faces, and word forms (see sections 2.5 on places, 4.1 on visual agnosia and 5.3 on seeing words); primary somatosensory cortex S1 (see section 5.3.2 on tactile sensation); and early visual areas in the occipital cortex including the primary visual area, V1 (see sections 4.2 on blindsight and 5.2 on binocular rivalry) and a motion sensitive area V5, also known as the middle temporal area (MT; section 5.3.1 on seeing motion). Beneath the cortex is the subcortex, divided into the forebrain, midbrain, and hindbrain, which covers many regions although our discussion will largely touch on the superior colliculus and the thalamus. The latter is critical for regulating wakefulness and general arousal, and both areas play an important role in visual processing.

1.2 Neurons and Brain

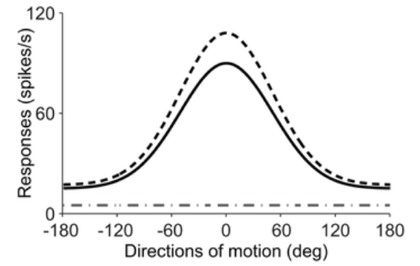

A neuroscientific explanation of consciousness adduces properties of the brain, typically the brain’s electrical properties. A salient phenomenon is neural signaling through action potentials or spikes. A spike is a large change in electrical potential across a neuron’s cellular membrane which can be transmitted between neurons that form a neural circuit. For a sensory neuron, the spikes it generates are tied to its receptive field. For example, in a visual neuron, its receptive field is understood in spatial terms and corresponds to that area of external space where an appropriate stimulus triggers the neuron to spike. Given this correlation between stimulus and spikes, the latter carries information about the former. Information processing in sensory systems involves processing of information regarding stimuli within receptive fields.

Which electrical property provides the most fruitful explanatory basis for understanding consciousness remains an open question. For example, when looking at a single neuron, neuroscientists are not interested in spikes per se but in the spike rate, the number of spikes the neuron generates per unit time. Yet spike rate is one among many potentially relevant neural properties. Consider the blood oxygen level dependent (BOLD) signal measured in functional magnetic resonance imaging (fMRI). The BOLD signal is a measure of changes in blood flow in the brain when neural tissue is active and is postulated to be a function of post-synaptic activity while spikes are tied to presynaptic activity. Furthermore, neuroscientists are often not interested in the response of a single neuron but rather that of a population of neurons, of whole brain regions, and/or their interactions. Higher order properties of brain regions include the local field potential generated by populations of neurons and correlated activity such as synchrony between activity in different areas of the brain as measured by, for example, electroencephalography (EEG).
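To make the notion of spike rate concrete, the following is a minimal sketch, not a standard analysis pipeline. The function name `firing_rate` and the spike times are hypothetical and chosen only for illustration: they depict a neuron that spikes more often while a stimulus sits in its receptive field than afterward.

```python
def firing_rate(spike_times_s, window_start_s, window_end_s):
    """Return spikes per second within [window_start_s, window_end_s)."""
    duration = window_end_s - window_start_s
    if duration <= 0:
        raise ValueError("window must have positive duration")
    # Count only the spikes that fall inside the analysis window.
    n_spikes = sum(window_start_s <= t < window_end_s for t in spike_times_s)
    return n_spikes / duration


# Hypothetical spike times (in seconds) from one trial. ASSUMPTION: the
# stimulus occupies the receptive field from 0.0 s to 0.2 s only.
spike_times = [0.012, 0.030, 0.051, 0.078, 0.120, 0.410, 0.870]

stimulus_rate = firing_rate(spike_times, 0.0, 0.2)  # five spikes in 0.2 s
baseline_rate = firing_rate(spike_times, 0.2, 1.0)  # two spikes in 0.8 s
print(f"stimulus: {stimulus_rate:.1f} spikes/s, baseline: {baseline_rate:.1f} spikes/s")
```

On these made-up numbers the rate during stimulation is roughly ten times the baseline rate, which is the sense in which spike rate can carry information about a stimulus.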

The number of neural properties potentially relevant to explaining mental phenomena is dizzying. This review focuses on the facts that neural sensory systems carry information about the subject’s environment and that neural information processing can be tied to a notion of neural representation. How precisely to understand neural representation is itself a vexed question (Cao 2012, 2014; Shea 2014; Baker, Lansdell, & Kording 2022), but we will deploy a simple assumption with respect to spikes which can be reconfigured for other properties: where a sensory neuron generates spikes when a stimulus is placed in its receptive field, the spikes carry information about the stimulus. The sensory neuron’s activity thus represents the relevant aspect of the stimulus that drives its response (e.g., direction of motion or intensity of a sound).[2] We shall return to neural representation in the final section when discussing how neural representations might explain conscious contents.

1.3 Access Consciousness and Phenomenal Consciousness

An important distinction separates access consciousness from phenomenal consciousness (Block 1995). “Phenomenal consciousness” refers to those properties of experience that correspond to what it is like for a subject to have those experiences (Nagel 1974 and the entry on qualia). These features are apparent to the subject from the inside, so tracking them arguably depends on one’s having the relevant experience. For example, one understands what it is like to see red only if one has visual experiences of the relevant type (Jackson 1982).

As noted earlier, introspection is the first source of evidence about consciousness. Introspective reports bridge the subjective and objective. They serve as a behavioral measure that expresses the subject’s own take on what it is like for them in having an experience. While there have been recent concerns about the reliability or empirical usefulness of introspection (Schwitzgebel 2011; Irvine 2012a), there are plausibly many contexts where introspection is reliable (Spener 2015; Wu 2023: chap. 7; see Irvine 2012b for an extended discussion of introspection in consciousness science; for philosophical theories, see Smithies & Stoljar 2012).

Introspective reports demonstrate that the subject can access the targeted conscious state. That is, the state is access-conscious: it is accessible for use in reasoning, report, and the control of action. Talk of access-consciousness must keep track of the distinction between actual access versus accessibility. When one reports on one’s conscious state, one accesses the state. Thus, access consciousness provides much of the evidence for empirical theories of consciousness. Still, it seems plausible that a state can be conscious even if one does not access it in report so long as that state is accessible. One can report it. Access-consciousness is usually defined in terms of this dispositional notion of accessibility.

We must also consider the type of access/accessibility. Block’s original characterization of access-consciousness emphasized accessibility in terms of the rational control of behavior, so we can summarize his account as follows:

A representation is access-conscious if it is poised for free use in reasoning and for direct “rational” control of action and speech.

Rational access contrasts with a broader conception of intentional access that takes a mental state to be access-conscious if it can inform goal-directed or intentional behavior, including behavior that is not rational or done for a reason. This broader notion allows additional measurable behaviors to count as relevant in assessing phenomenal consciousness, especially in non-linguistic animals, non-verbal infants, and non-communicative patients. So, if access provides us with evidence for phenomenal consciousness, this can be (a) through introspective reports, (b) through rational behavior, or (c) through intentional behavior including nonrational behavior. Indeed, in certain contexts, reflexive behavior and autonomic physiological measures provide measures of consciousness (section 2.2).

1.4 Generic and Specific Consciousness

There are two ways in which consciousness is understood in this entry. The first focuses on a mental state’s being conscious in general as opposed to not being conscious. Call this property generic consciousness, a property shared by specific conscious states such as seeing a red rose, feeling a touch, or being angry. Thus:

Generic Consciousness: What conditions/states N of nervous systems are necessary and/or sufficient for a mental state, M, to be conscious as opposed to not?

If there is such an N, then the presence of N entails that an associated mental state M is conscious and/or its absence entails that M is unconscious.

A second focus will be on the content of consciousness, say that associated with a perceptual experience’s being of some perceptible X. This yields a question about specific contents of consciousness such as experiencing the motion of an object (see section 5.3.1) or a vibration on one’s finger (see section 5.3.2):

Specific Consciousness: What neural states or properties are necessary and/or sufficient for a conscious perceptual state to have content X rather than Y?

In introspectively accessing their conscious states, a subject reports what their experience is like by reporting what they experience. Thus, the subject can report seeing an object moving, changing color, or being of a certain kind (e.g., a mug) and thus specify the content of the perceptual state. Discussion of specific consciousness will focus on perceptual states described as consciously perceiving X where X can be a particular such as a face or a property such as the color of an object.

Posing a clear question involves grasping its possible answers and in science, this is informed by identifying experiments that can provide evidence for such answers. The emphasis on necessary and sufficient conditions in our two questions indicates how to empirically test specific proposals. To test sufficiency, one would aim to produce or modulate a certain neural state and then demonstrate that consciousness of a certain form arises. To test necessity, one would eliminate a certain neural state and demonstrate that consciousness is abolished. Notice that such tests go beyond mere correlation between neural states and conscious states (see section 1.6 on neural correlates and sections 2.2, 4 and 5 for tests of necessity and sufficiency).

In many experimental contexts, the underlying idea is causal necessity and sufficiency. However, if A = B, then A’s presence is also necessary and sufficient for B’s presence since they are identical. Thus, a brain lesion that eliminates N and thereby eliminates conscious state S might do so either because N is causally necessary for S or because N = S. An intermediate relation is that N constitutes or grounds S which does not imply that N = S (see the entry on metaphysical grounding). Whichever option holds for S, the first step is to find N, a neural correlate of consciousness (section 1.6).

In what follows, to explain generic consciousness, various global properties of neural systems will be considered (section 3) as well as specific anatomical regions that are tied to conscious versus unconscious vision as a case study (section 4). For specific consciousness, fine-grained manipulations of neural representations will be examined that plausibly shift and modulate the contents of perceptual experience (section 5).

1.5 The Hard Problem

David Chalmers presents the hard problem as follows:

It is undeniable that some organisms are subjects of experience. But the question of how it is that these systems are subjects of experience is perplexing. Why is it that when our cognitive systems engage in visual and auditory information-processing, we have visual or auditory experience: the quality of deep blue, the sensation of middle C? How can we explain why there is something it is like to entertain a mental image, or to experience an emotion? It is widely agreed that experience arises from a physical basis, but we have no good explanation of why and how it so arises. Why should physical processing give rise to a rich inner life at all? It seems objectively unreasonable that it should, and yet it does. If any problem qualifies as the problem of consciousness, it is this one. (Chalmers 1995: 212)

The Hard Problem can be specified in terms of generic and specific consciousness (Chalmers 1996). In both cases, Chalmers argues that there is an inherent limitation to empirical explanations of phenomenal consciousness in that empirical explanations will be fundamentally either structural or functional, yet phenomenal consciousness is not reducible to either. This means that there will be something that is left out in empirical explanations of consciousness, a missing ingredient (see also the explanatory gap [Levine 1983]).

There are different responses to the hard problem. One response is to sharpen the explanatory targets of neuroscience by focusing on what Chalmers calls structural features of phenomenal consciousness, such as the spatial structure of visual experience, or on the contents of phenomenal consciousness. When we assess explanations of specific contents of consciousness, these focus on the neural representations that fix conscious contents. These explanations leave open exactly what the secret ingredient is that shifts a state with that content from unconsciousness to consciousness. On ingredients explaining generic consciousness, a variety of options have been proposed (see section 3), but it is unclear whether these answer the Hard Problem, especially if any answer to that Problem has as a necessary condition that the explanation must conceptually close off certain possibilities, say the possibility that the ingredient could be added yet consciousness not ignite as in a zombie, a creature without phenomenal consciousness (see the entry on zombies). Indeed, some philosophers deny the hard problem (see Dennett 2018 for a recent statement). Patricia Churchland urges: “Learn the science, do the science, and see what happens” (Churchland 1996: 408).

Perhaps the most common attitude for neuroscientists is to set the hard problem aside. Instead of explaining the existence of consciousness in the biological world, they set themselves to explaining generic consciousness by identifying neural properties that can turn consciousness on and off and explaining specific consciousness by identifying the neural representational basis of conscious contents.

1.6 Neural Correlates of Consciousness

Modern neuroscience of consciousness has attempted to explain consciousness by focusing on neural correlates of consciousness or NCCs (Crick & Koch 1990; LeDoux, Michel, & Lau 2020; Morales & Lau 2020). Identifying correlates is an important first step in understanding consciousness, but it is an early step. After all, correlates are not necessarily explanatory in the sense of answering specific questions posed by neuroscience. That one does not want a mere correlate was recognized by Chalmers who defined an NCC as follows:

An NCC is a minimal neural system N such that there is a mapping from states of N to states of consciousness, where a given state of N is sufficient, under conditions C, for the corresponding state of consciousness. (Chalmers 2000: 31)

A similar way of putting this is that an NCC is “the minimal set of neuronal events and mechanisms jointly sufficient for a specific conscious percept” (Koch 2004: 16). One wants a minimal neural system since, crudely put, the brain is sufficient for consciousness but to point this out is hardly to explain consciousness even if it provides an answer to questions about sufficiency. There is, of course, much more to be said that is informative even if one does not drill down to a “minimal” neural system which is tricky to define or operationalize (see Chalmers 2000 for discussion; for criticisms of the NCC approach, see Noë & Thompson 2004; for criticisms of Chalmers’ definition, see Fink 2016).

The emphasis on sufficiency goes beyond mere correlation, as neuroscientists aim to answer more than the question: What is a neural correlate for conscious phenomenon C? For example, Chalmers’ and Koch’s emphases on sufficiency indicate that they aim to answer the question: What neural phenomenon is sufficient for consciousness? Perhaps more specifically: What neural phenomenon is causally sufficient for consciousness? Accordingly, talk of “correlate” is unfortunate since sufficiency implies correlation but not vice versa. After all, assume that the NCC is type identical to a conscious state. Then many neural states will correlate with the conscious state: (1) the NCC’s typical effects, (2) its typical causes, and (3) states that are necessary for the NCC’s obtaining (e.g., the presence of sufficient oxygen). Thus, some correlated states will not be explanatory. For example, citing the effects of consciousness will not provide causally sufficient conditions for consciousness.

Establishing necessary conditions for consciousness is also difficult. Neuroplasticity, redundancy, and convergent evolution make necessity claims extremely hard to support experimentally. Under normal conditions, healthy humans may require certain brain areas or processes for supporting consciousness. However, this does not mean those regions or processes are necessary in any strong metaphysical sense. For example, after a lesion, the brain’s functional connections may change, allowing a different structural support for consciousness to emerge. There is also nothing in the cortical arrangement of specialized regions that fixes them to a particular function. The so-called “visual cortex” is recruited in blind individuals to perform auditory, numerical, and linguistic processing (Bedny 2017). Similarly, as with many other complex structures, the brain is highly redundant. Different areas may perform the same function, preventing any single one from being strictly necessary. Finally, the way in which the mammalian brain operates is not the only way to support awareness. Birds, as well as cephalopods and insects (Barron & Klein 2016; Birch, Schnell, & Clayton 2020), and even other primates have different anatomical and functional neural mechanisms, and yet their nervous systems may support consciousness (Nieder, Wagener, & Rinnert 2020). Thus, there may not be a single necessary structure or process for supporting conscious awareness.

While many theorists are focused on explanatory correlates, it is not clear that the field has always grasped this, something recent theorists have been at pains to emphasize (Aru et al. 2012; Graaf, Hsieh, & Sack 2012). In other contexts, neuroscientists speak of the neural basis of a phenomenon where the basis does not simply correlate with the phenomenon but also explains and possibly grounds it. However, talk of correlates is entrenched in the neuroscience of consciousness, so one must remember that the goal is to find the subset of neural correlates that are explanatory, in answering concrete questions. Reference to neural correlates in this entry will always mean neural explanatory correlates of consciousness (on occasion, we will speak of these as the neural basis of consciousness). That is, our two questions about specific and generic consciousness focus the discussion on neuroscientific theories and data that contribute to explaining them. This project allows that there are limits to neural explanations of consciousness, precisely because of the explanatory gap (Levine 1983).

2. Methods for Tracking Consciousness

Since studying consciousness requires that scientists track its presence, it will be important to examine various methods used in neuroscience to isolate and probe conscious states.

2.1 Introspection and Report

Scientists primarily study phenomenal consciousness through subjective reports; objective measures such as performance in a task are often used too (for a critical assessment, see Irvine 2013). We can treat reports in neuroscience as conceptual in that they express how the subject recognizes things to be, whether regarding what they perceive (perceptual or observational reports, as in psychophysics) or regarding what mental states they are in (introspective reports). A report’s conceptual content can be conveyed in words or other overt behavior whose significance is fixed within an experiment (e.g., pressing a button to indicate that a stimulus is present or that one sees it). Subjective reports of conscious states draw on distinctively first-personal access to that state. The subject introspects.

Introspection raises questions that science has only recently begun to address systematically in large part because of longstanding suspicion regarding introspective methods or, in contrast, because of an unquestioning assumption that introspection is largely and reliably accurate. Early modern psychology relied on introspection to parse mental processes but ultimately abandoned it due to worries about introspection’s reliability (Feest 2012; Spener 2018). Introspection was judged to be an unreliable method for addressing questions about mental processing (and it is still seen with suspicion by some; Schwitzgebel 2008). To address these worries, we must understand how introspection works, but unlike many other psychological capacities, detailed models of introspection of consciousness are hard to develop (Feest 2014; for theories of introspecting propositional attitudes, see Nichols & Stich 2003; Heal 1996; Carruthers 2011). This makes it difficult to address long-standing worries about introspective reliability regarding consciousness.

In science, questions raised about the reliability of a method are answered by calibrating and testing the method. This calibration has not been done with respect to the type of introspection commonly practiced by philosophers. Such introspection has revealed many phenomenal features that are targets of active investigation such as the phenomenology of mineness (Ehrsson 2009), sense of agency (Bayne 2011; Vignemont & Fourneret 2004; Marcel 2003; Horgan, Tienson, & Graham 2003), transparency (Harman 1990; Tye 1992), self-consciousness (Kriegel 2003: 122), cognitive phenomenology (Bayne & Montague 2011), and phenomenal unity (Bayne & Chalmers 2003), among others. A scientist might worry that philosophical introspection merely recycles rejected methods of a century ago, indeed without the stringent controls or training imposed by earlier psychologists. How can we ascertain and ensure the reliability of introspection in the empirical study of consciousness?

Model migration, applying well-understood concepts in less well-understood domains, provides conceptual resources to reveal patterns across empirical systems and to promote theoretical insights (Lin 2018; Knuuttila & Loettgers 2014; see the entry on models in science). Introspection’s range of operation and its reliability conditions—when and why it succeeds and when and why it fails—can be calibrated by drawing parallels to how Signal Detection Theory (SDT) models perception (see Dołęga 2023 for an evaluation of introspective theories). In SDT (Tanner & Swets 1954; Hautus, Macmillan, & Creelman 2021), perceptually detecting or discriminating a stimulus is the joint outcome of the observer’s perceptual sensitivity (e.g., how well a perceptual system can tell signal from noise) and a decision to classify the available perceptual evidence as signal or not (i.e., observers set a response criterion for what counts as signal). Consider trying to detect something moving in the brush at twilight versus at noon. In the latter, the signal will be greatly separated from noise (the object will be easier to detect because it generates a strong internal perceptual response). In contrast, at twilight the signal will not be easy to disentangle from noise (the object will be harder to detect because of the weaker internal perceptual response it generates in the observer). Importantly, even at noon when the signal is strong, rare as they might be, misses and false alarms are to be expected. Yet in either case, one might operate with a conservative response criterion, say because one is afraid to be wrong. Thus, even if the signal is detectable, one might still opt not to report on it given a conservative bias (criterion), say if one is in the twilight scenario and would be ridiculed for “false alarms”, i.e., claiming the object to be present when it is not.
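The interplay of sensitivity and criterion described above can be sketched numerically. The following is a minimal illustration under the textbook equal-variance Gaussian form of SDT, with sensitivity written as d′. The function names and the particular d′ and criterion values for "noon" and "twilight" are hypothetical, chosen only to mirror the example in the text.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def predicted_rates(d_prime: float, criterion: float) -> tuple[float, float]:
    """Return (hit_rate, false_alarm_rate) for an equal-variance Gaussian observer.

    Noise responses are centered at -d'/2 and signal responses at +d'/2.
    The observer reports "signal" whenever the internal response exceeds
    the criterion.
    """
    hit_rate = _STD_NORMAL.cdf(d_prime / 2 - criterion)
    false_alarm_rate = _STD_NORMAL.cdf(-d_prime / 2 - criterion)
    return hit_rate, false_alarm_rate


def sdt_measures(hit_rate: float, false_alarm_rate: float) -> tuple[float, float]:
    """Recover (d_prime, criterion) from observed rates strictly between 0 and 1."""
    z = _STD_NORMAL.inv_cdf
    d_prime = z(hit_rate) - z(false_alarm_rate)
    criterion = -0.5 * (z(hit_rate) + z(false_alarm_rate))
    return d_prime, criterion


# ASSUMPTION: illustrative values only, not estimates from any study.
SENSITIVITY = {"noon": 3.0, "twilight": 1.0}       # d': signal/noise separation
CRITERION = {"neutral": 0.0, "conservative": 1.0}  # higher = more reluctant to say "signal"

for lighting, d in SENSITIVITY.items():
    for bias, c in CRITERION.items():
        hits, false_alarms = predicted_rates(d, c)
        print(f"{lighting:8s} {bias:12s} hits={hits:.3f} false alarms={false_alarms:.3f}")
```

On this sketch, moving from noon to twilight lowers sensitivity, whereas adopting a conservative criterion lowers both hits and false alarms without changing sensitivity. Misses and false alarms remain possible even at noon, because the two distributions always overlap to some degree.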

Introspection can be modeled as a signal detection mechanism that operates over conscious states. Pains can be strong or weak, mental images can be vivid or faint, and perceptions can be more or less striking. In other words, conscious experiences admit degrees of intensity (Lee 2023; Morales 2023). According to introspective Signal Detection Theory or iSDT (Morales forthcoming), the intensity of the conscious experience under examination (its mental strength) modulates the introspective internal response that the experience generates, which in turn modulates introspective sensitivity. Everything else being equal, introspectors are more likely to introspect accurately an intense experience (e.g., a strong pain, a vivid mental image, etc.) than a weak experience (e.g., a mild pain, a faint mental image, etc.). As in perception, a biased introspective criterion may affect an introspector’s response without necessarily implying changes in the introspectability of a state. Even when rare, introspection errors (misses and false alarms) should be possible and in fact expected. By modeling introspection as any other detection mechanism, iSDT aims to explain the range of reliability of introspection while preserving our intuitions that it provides highly accurate judgments in familiar cases such as detecting severe pain while also accounting for introspection’s potential fallibility.

Thus, in the context of scientific experiments on consciousness, iSDT provides a model that predicts higher introspective reliability when judging strong experiences. When the experiences are weak, as one may expect from stimuli presented quickly and at threshold, introspective judgments are expected to be less reliable (Dijkstra & Fleming 2023).
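The qualitative prediction can be illustrated with a toy calculation. This is only a sketch and should not be read as the model of Morales (forthcoming). It assumes, purely for illustration, that introspective sensitivity grows linearly with experience intensity and that the introspector behaves as an equal-variance Gaussian detector. The function names, the `gain` parameter, and all numbers are hypothetical.

```python
from statistics import NormalDist

_PHI = NormalDist().cdf  # standard normal cumulative distribution


def introspective_sensitivity(intensity: float, gain: float = 1.0) -> float:
    """ASSUMPTION: sensitivity (d') is proportional to experience intensity."""
    return gain * intensity


def error_rates(intensity: float, criterion: float = 0.0) -> tuple[float, float]:
    """Return (miss_rate, false_alarm_rate) for introspective detection."""
    d = introspective_sensitivity(intensity)
    miss_rate = 1.0 - _PHI(d / 2 - criterion)
    false_alarm_rate = _PHI(-d / 2 - criterion)
    return miss_rate, false_alarm_rate


def proportion_correct(intensity: float, criterion: float = 0.0) -> float:
    """Accuracy when the experience is present on half of the occasions."""
    miss_rate, false_alarm_rate = error_rates(intensity, criterion)
    return 0.5 * (1.0 - miss_rate) + 0.5 * (1.0 - false_alarm_rate)


# Hypothetical intensities: a faint experience versus an intense one.
for label, intensity in [("weak", 0.5), ("strong", 3.0)]:
    for bias, c in [("neutral", 0.0), ("conservative", 1.0)]:
        misses, false_alarms = error_rates(intensity, c)
        accuracy = proportion_correct(intensity, c)
        print(f"{label:6s} {bias:12s} accuracy={accuracy:.3f} "
              f"misses={misses:.3f} false alarms={false_alarms:.3f}")
```

Under these assumptions, accuracy is higher for the strong experience than for the weak one, errors never vanish entirely, and a conservative criterion trades false alarms for misses while leaving sensitivity itself untouched.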

Another way to link scientific introspection to consciousness is to connect it to models of attention. Philosophical conceptions of introspective attention construe it as capable of directly focusing on phenomenal properties and experiences. As this idea is fleshed out, however, it is clearly not a form of attention studied by cognitive science, for the posited direct introspective attention is neither perceptual attention nor what psychologists call internal attention (e.g., the retrieval of thought contents as in memory recollection; Chun, Golomb, & Turk-Browne 2011). Calibrating introspection as it is used in the science of consciousness would benefit from concrete models of introspection (Chirimuuta 2014; Spener 2015; Wu 2023; Kammerer & Frankish 2023; Morales forthcoming).

We can draw on a proposal inspired by Gareth Evans (1982): in introspecting perceptual states, say judging that one sees an object, one draws on the same perceptual capacities used to answer the question whether the object is present. In introspection, one then appends a further concept of “seeing” to one’s perceptual report.[3] Thus, instead of simply reporting that a red stimulus is present, one reports that one sees the red stimulus. Paradoxically, introspection relies on externally directed perceptual attention, but as noted earlier, identifying what one perceives is a way of characterizing what one’s perception is like, so this “outward” perspective can provide information about the inner.

A further advantage of this proposal is that questions of reliability come down to questions of the reliability of psychological capacities that can be empirically assessed, say perceptual, attentional and conceptual reliability. For example, Peters and Lau (2015) showed that accuracy in judgments about the visibility of a stimulus, the introspective measure, coincided with accuracy in judgments about stimulus properties, the objective measure (see also Rausch & Zehetleitner 2016). Further, in many of the examples to be discussed, the perceptual attention-based account provides a plausible cognitive model of introspection (Wu 2023). Subjects report on what they perceptually experience by attending to the object of their experience, and where perception and attention are reliable, a plausible hypothesis is that their introspective judgments will be reliable as well. Accordingly, the reliability of introspection in the empirical studies to be discussed can be assumed. Still, given that no scientist should assert the reliability of a method without calibration, introspection must be subject to the same standards. There is more work to be done.

2.2 Access Consciousness and No-Report Paradigms

Introspection illustrates a type of cognitive access, for a state that is introspected is access conscious. This raises a question that has epistemic implications: is access consciousness necessary for phenomenal consciousness? If it is not, then there can be phenomenal states that are not access conscious, so are in principle not reportable. That is, phenomenal consciousness can overflow access consciousness (Block 2007).

Access is tied to attention, and attention is tied to report. Some views hold that attention is necessary for access, which entails phenomenal consciousness (e.g., the Global Workspace theory [section 3.1]).[4] In contrast, other theories (e.g., recurrent processing theory [section 3.2]) hold that there can be phenomenal states that are not accessible.

Many scientists of consciousness take there to be evidence that there is no phenomenal consciousness without access and little if any evidence of phenomenal consciousness outside of access. While those antagonistic to overflow have argued that it is not empirically testable (Cohen & Dennett 2011), the claim that attention is necessary for consciousness may be equally untestable. After all, we must eliminate attention to a target while gathering evidence for the absence of consciousness. Yet if gathering evidence for consciousness requires attention, then in fulfilling the conditions for testing the necessity of attention, we undercut the access needed to substantiate the absence of consciousness (Wu 2017; for a monograph length discussion of attention and consciousness, see Montemayor & Haladjian 2015).[5] How then can we gather the required evidence to assess competing theories?

One response is to draw on no-report paradigms which measure reflexive behaviors correlated with conscious states to provide a window on the phenomenal that is independent of access (Lumer & Rees 1999; Tse et al. 2005). In binocular rivalry paradigms (see section 5.2), researchers show subjects different images to each eye. Due to their very different features, these images cannot be fused into a single percept. This results in alternating experiences that transition back and forth between one image and the other. Since the presented images remain constant while experience changes, binocular rivalry has been used to find the neural correlates of consciousness (see Zou, He, & Zhang 2016; and Giles, Lau, & Odegaard 2016 for concerns about using binocular rivalry for studying the neural correlates of consciousness). However, binocular rivalry tasks have typically involved asking subjects to report what their experience is like, requiring the recruitment of attention and explicit report. To overcome this issue, Hesse & Tsao (2020) recently introduced an important variation by adding a small fixation point on different locations of each image. For example, subjects’ right eye was shown a photo of a person with a fixation point drawn on the bottom of the image, and their left eye was presented with a photo of some tacos with a fixation point on the top. People and monkeys were trained to look at the fixation point while ignoring the image. This way, the experimenters could use their eye movement behavior as a proxy for which image they were experiencing without having to collect explicit reports: if subjects look up, they are experiencing the tacos; if they look down, they are experiencing the person. They confirmed with explicit reports from humans that both monkeys and humans in the no-report condition behaved similarly. 
Importantly, they found from single-cell recordings in the monkeys that neurons in inferotemporal cortex—a downstream region associated with high-level visual processing—represented the experienced image. Because these monkeys were never trained to report their percept (they were just following the fixation point wherever it was), the experimenters could more confidently conclude that the activity linked to the alternating images was due to consciously experiencing them and not due to introspecting or reporting.

No-report paradigms use indirect responses to track the subject’s perceptual experience in the absence of explicit (conceptualized) report. These can include eye movements, pupil changes, electroencephalographic (EEG) activity or galvanic skin conductance changes, among others. No-report paradigms seem to provide a way to track phenomenal consciousness even when access is eliminated. This would broaden the evidential basis for consciousness beyond introspection and indeed, beyond intentional behavior (our “broad” conception of access). However, in practice they do not always yield drastically different results (Michel & Morales 2020). Moreover, they do not fully circumvent introspection (Overgaard & Fazekas 2016). For example, the usefulness of any indirect physiological measure depends on validating its correlation with alternating experience given subjective reports. Once it is validated, monitoring these autonomic responses can provide a way to substitute for subjective reports within that paradigm. One cannot, however, simply extend the use of no-report paradigms outside the behavioral contexts within which the method is validated. With each new experimental context, we must revalidate the measure with introspective report. Moreover, no-report paradigms do not match post-perceptual processing between conscious and unconscious conditions (Block 2019). Even if overt report is matched, the cognitive consequences of perceiving a stimulus consciously and failing to do so are not the same. For example, systematic reflections may be triggered by one stimulus (the person) but not the other (the tacos). These post-perceptual differences would generate different neural activity that is not necessarily related to consciousness. To avoid this issue, post-perceptual cognition would also need to be matched to rule out potential confounds (Block 2019).
However, this is easier said than done and no uncontroversial solutions around this issue have been found (Phillips & Morales 2020; Panagiotaropoulos, Dwarakanath, & Kapoor 2020).

Can we use no-report paradigms to address whether access is necessary for phenomenal consciousness? A likely experiment would be one that validates no-report correlates for some conscious phenomenon P in a concrete experimental context C. With this validation in hand, one then eliminates accessibility and attention with respect to P in C. If the no-report correlate remains, would this clearly support overflow? Perhaps, but it could still be argued that the result does not rule out the possibility that phenomenal consciousness disappears with access consciousness despite the no-report correlate remaining. For example, the reflexive response and phenomenal consciousness might have a common cause that remains even if phenomenal consciousness is selectively eliminated by removing access.

2.3 Confidence and Metacognitive Approaches

Given worries about calibrating introspection, researchers have asked subjects to provide a different metacognitive assessment of conscious states via reports about confidence (Fleming 2023a, 2020; Pouget, Drugowitsch, & Kepecs 2016). A standard approach is to have subjects perform a task, say perceptual discrimination of a stimulus, and then report how confident they are that their perceptual judgment was accurate. This metacognitive judgment, a confidence rating, about perception can be assessed for accuracy by comparing it with perceptual performance (for discussion of formal methods such as metacognitive signal detection theory, see Maniscalco & Lau 2012, 2014). Related paradigms include post-decision wagering where subjects place wagers on specific responses as a way of estimating their confidence (Persaud, McLeod, & Cowey 2007; but see Dienes & Seth 2010).

There are some advantages of using confidence judgments for studying consciousness (Michel 2023; Morales & Lau 2022; Peters 2022). While standard introspective judgments about conscious experiences may capture more directly the phenomenon of interest, confidence judgments are easier to explain to subjects and they are also more interpretable from the experimenter’s point of view. Confidence judgments provide an objective measure of metacognitive sensitivity: how well subjects’ confidence judgments track their performance in the task. Subjects can also receive feedback on those ratings and, at least in principle, improve their metacognitive sensitivity (though this has proved hard to achieve in laboratory tasks; Rouy et al. 2022; Haddara & Rahnev 2022). Metacognitive judgments also have the advantage over direct judgments about conscious experiences (e.g., Ramsøy & Overgaard 2004) in that they allow for comparisons between different domains that have very different phenomenology (e.g., perception vs memory) (e.g., Gardelle, Corre, & Mamassian 2016; Faivre et al. 2017; Morales, Lau, & Fleming 2018; Mazancieux et al. 2020).
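To make the notion of metacognitive sensitivity concrete, the following Python sketch computes a type-2 area under the ROC curve: the probability that a randomly chosen correct trial received higher confidence than a randomly chosen incorrect trial. This is a simpler stand-in, not the meta-d′ analysis of Maniscalco and Lau, and the function name and toy data are illustrative assumptions.

```python
def type2_auroc(correct, confidence):
    """Estimate how well confidence ratings discriminate correct from incorrect trials.

    correct:    sequence of truthy/falsy values, one per trial
    confidence: sequence of numeric confidence ratings, one per trial
    Returns a value in [0, 1]; 0.5 means confidence carries no information
    about accuracy, 1.0 means it separates correct from incorrect perfectly.
    """
    if len(correct) != len(confidence):
        raise ValueError("correct and confidence must have the same length")
    on_correct = [c for ok, c in zip(correct, confidence) if ok]
    on_error = [c for ok, c in zip(correct, confidence) if not ok]
    if not on_correct or not on_error:
        raise ValueError("need at least one correct and one incorrect trial")
    # Compare every correct trial with every incorrect trial; ties count half.
    wins = sum(
        1.0 if hi > lo else 0.5 if hi == lo else 0.0
        for hi in on_correct
        for lo in on_error
    )
    return wins / (len(on_correct) * len(on_error))


if __name__ == "__main__":
    # Hypothetical data: 1 = correct discrimination, ratings on a 1-4 scale.
    accuracy = [1, 1, 0, 1, 0, 1, 0, 1]
    ratings = [4, 3, 1, 4, 2, 2, 1, 3]
    print(type2_auroc(accuracy, ratings))
```

Because the measure depends only on the ordering of confidence ratings relative to accuracy, it can in principle be compared across tasks with very different phenomenology. Unlike meta-d′, however, it does not correct for differences in first-order performance.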

One concern with metacognitive approaches is that they also rely on introspection (Rosenthal 2019; see also Sandberg et al. 2010; Dienes & Seth 2010). If metacognition relies on introspection, does it not accrue all the disadvantages of the latter? Perhaps, but an important gain in metacognitive approaches is that it allows for quantitative psychophysical analysis. While it does not replace introspection, it brings an analytical rigor to addressing certain aspects of conscious awareness.

How might we bridge metacognition with consciousness as probed by traditional introspection? The metacognitive judgment reflects introspective assessment of the quality of perceptual states and can provide information about the presence of consciousness. For example, Peters and Lau (2015) found that increases in metacognitive confidence tracked increases in perceptual sensitivity, which presumably underlie the quality of perceptual experiences. They also did not find significant differences between asking for confidence or visibility judgments. Even studies that have found (small) differences between the two kinds of judgments indicate considerable association between the two ratings, suggesting similar behavioral patterns (Rausch & Zehetleitner 2016; Zehetleitner & Rausch 2013).

Beyond behavior, neuroscientific work shows that similar regions of the brain (e.g., prefrontal cortex) may be involved in supporting both conscious awareness and metacognitive judgments. Studies with non-human primates and rodents have begun to shed light on neural processing for metacognition (for reviews, see Grimaldi, Lau, & Basso 2015; Pouget, Drugowitsch, & Kepecs 2016). From animal studies, one theory is that metacognitive information regarding perception is already present in perceptual areas that guide observational judgments, and these studies implicate parietal cortex (Kiani & Shadlen 2009; Fetsch et al. 2014) and the superior colliculus (Kim & Basso 2008; but see Odegaard et al. 2018). Alternatively, information about confidence might be read out by other structures (see section 3.3 on Higher-Order Theory; also the entry on higher order theories of consciousness). In both human and animal studies, the prefrontal cortex (specifically, subregions in dorsolateral and orbitofrontal prefrontal cortex) has been found to support subjective reports of awareness (Cul et al. 2009; Lau & Passingham 2006), subjective appearances (Liu et al. 2019), visibility ratings (Rounis et al. 2010), confidence ratings (Fleming, Huijgen, & Dolan 2012; Shekhar & Rahnev 2018), and conscious experiences without reports (Mendoza-Halliday & Martinez-Trujillo 2017; Kapoor et al. 2022; Michel & Morales 2020).

2.4 The Intentional Action Inference

Metacognitive and introspective judgments result from intentional action, so why not look at intentional action, broadly construed, for evidence of consciousness? Often, when subjects perform perception-guided actions, we infer that they are relevantly conscious. It would be odd if a person cooks dinner and then denies having seen any of the ingredients. That they did something intentionally provides evidence that they were consciously aware of what they acted on. An emphasis on intentional action embraces a broader evidential basis for consciousness. Consider the Intentional Action Inference to phenomenal consciousness:

If some subject acts intentionally, where her action is guided by a perceptual state, then the perceptual state is phenomenally conscious.

An epistemic version takes the action to provide good evidence that the state is conscious. Notice that introspection is typically an intentional action so it is covered by the inference. In this way, the Inference effectively levels the evidential playing field: introspective reports are simply one form among many types of intentional actions that provide evidence for consciousness. Those reports are not privileged.

The intentional action inference and no-report paradigms highlight that the science of consciousness has largely restricted its behavioral data to one type of intentional action, introspection. What is the basis of privileging one intentional action over others? Consider the calibration issue. For many types of intentional action deployed in experiments, scientists can calibrate performance by objective measures such as accuracy. This has not been formally done for introspection of consciousness, so scientists have privileged an uncalibrated measure over a calibrated one. This seems empirically ill-advised. On the flip side, one worry about the intentional action inference is that it ignores guidance by unconscious perceptual states (see sections 4 and 5.3.1).

2.5 Unresponsive Wakefulness Syndrome and the Intentional Action Inference

The Intentional Action Inference is operative when subjective reports are not available. For example, it is deployed in arguing that some patients diagnosed with unresponsive wakefulness syndrome are conscious (Shea & Bayne 2010; Drayson 2014).

A patient [with unresponsive wakefulness syndrome] appears at times to be wakeful, with cycles of eye closure and eye opening resembling those of sleep and waking. However, close observation reveals no sign of awareness or of a ‘functioning mind’: specifically, there is no evidence that the patient can perceive the environment or his/her own body, communicate with others, or form intentions. As a rule, the patient can breathe spontaneously and has a stable circulation. The state may be a transient stage in the recovery from coma or it may persist until death. (Working Party RCP 2003: 249)

These patients are not clinically comatose but fall short of being in a “minimally conscious state”. Unlike unresponsive wakeful patients, minimally conscious state patients seemingly perform intentional actions.

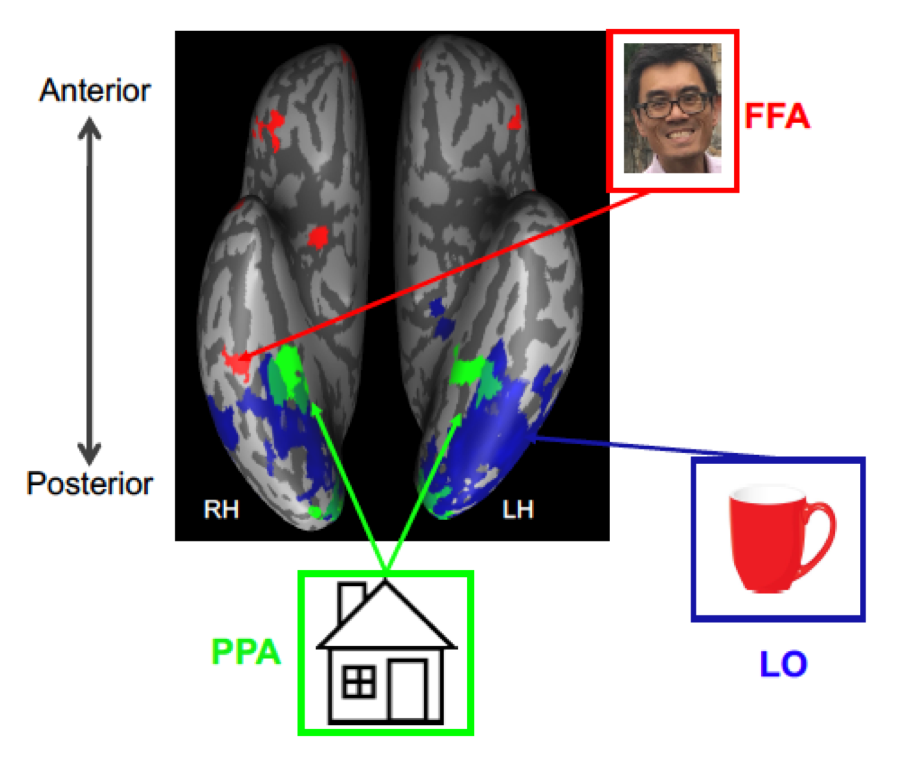

Recent work suggests that some patients diagnosed with unresponsive wakefulness syndrome are conscious. Owen et al. (2006) used fMRI to demonstrate correlated activity in such patients in response to commands to deploy imagination. In an early study, a young female patient was scanned by fMRI while presented with three auditory commands: “imagine playing tennis”, “imagine visiting the rooms in your home”, “now just relax”. The commands were presented at the beginning of a thirty-second period, alternating between imagination and relax commands. The patient demonstrated similar activity when matched to control subjects performing the same task: sustained activation of the supplementary motor area (SMA) was observed during the motor imagery task while sustained activation of the parahippocampal gyrus including the parahippocampal place area (PPA) was observed during the spatial imagery task. Later work reproduced this result in other patients and in one patient, the tasks were used as a proxy for “yes”/“no” responses to questions (Monti et al. 2010; for a review, see Fernández-Espejo & Owen 2013). Note that these tasks probe specific contents of consciousness by monitoring neural correlates of conscious imagery.

Several authors (Greenberg 2007; Nachev & Husain 2007) have countered that the observed activity was an automatic, non-intentional response to the command sentences, specifically to the words “tennis” and “house”. In normal subjects, reading action words is known to activate sensorimotor areas (Pulvermüller 2005). Owen and colleagues (2007) responded that the sustained activity over thirty seconds made an automatic response less likely than an intentional response. One way to rule out automaticity is to provide the patient with different sentences such as “do not imagine playing tennis” or “Sharlene was playing tennis”. Owen et al. (2007) demonstrated that presenting “Sharlene was playing tennis” to a normal subject did not induce the same activity as when the subject obeyed the command “imagine playing tennis”, but the same intervention was not tried on patients. However, subsequent experiments using other measures such as EEG (Goldfine et al. 2011; Curley et al. 2018; Cruse et al. 2012) and functional connectivity (Demertzi et al. 2015) indicate that conscious awareness is indeed present in patients with (wrongly diagnosed) unresponsive wakefulness syndrome (for a review, see Edlow et al. 2021; for ethical reflections around diagnosing awareness in patients with disorders of consciousness, see Young et al. 2021).

Owen et al. draw on a neural correlate of imagination, a mental action. Arguing that the neural correlate provides evidence of the patient’s executing an intentional action, they invoke a version of the Intentional Action Inference to argue that performance provides evidence for specific consciousness tied to the information carried in the brain areas activated.[6]

3. Neurobiological Theories of Consciousness

Recall that the Generic Consciousness question asks:

What conditions/states N of nervous systems are necessary and/or sufficient for a mental state, M, to be conscious as opposed to not?

Victor Lamme notes:

Deciding whether there is phenomenality in a mental representation implies putting a boundary—drawing a line—between different types of representations…We have to start from the intuition that consciousness (in the phenomenal sense) exists, and is a mental function in its own right. That intuition immediately implies that there is also unconscious information processing. (Lamme 2010: 208)

It is uncontroversial that there is unconscious information processing, say processing occurring in a computer. What Lamme means is that there are conscious and unconscious mental states (representations). For example, there might be visual states of seeing X that are conscious or not (section 4).

In what follows, the theories discussed provide higher level neural properties that are necessary and/or sufficient for generic consciousness of a given state. To provide a gloss on the hypotheses: For the Global Neuronal Workspace, entry into the neural workspace is necessary and sufficient for a state or content to be conscious. For Recurrent Processing Theory, a type of recurrent processing in sensory areas is necessary and sufficient for perceptual consciousness, so entry into the Workspace is not necessary. For Higher-Order Theories, the presence of a higher-order state tied to prefrontal areas is necessary and sufficient for phenomenal experience, so recurrent processing in sensory areas is not necessary nor is entry into the workspace. For Information Integration Theories, a type of integration of information is necessary and sufficient for a state to be conscious.

3.1 The Global Neuronal Workspace

One explanation of generic consciousness invokes the global neuronal workspace. Bernard Baars first proposed the global workspace theory as a cognitive/computational model (Baars 1988), but we will focus on the neural version of Stanislas Dehaene and colleagues: a state is conscious when and only when it (or its content) is present in the global neuronal workspace making the state (content) globally accessible to multiple systems including long-term memory, motor, evaluational, attentional and perceptual systems (Dehaene, Kerszberg, & Changeux 1998; Dehaene & Naccache 2001; Dehaene et al. 2006). Notice that the previous characterization does not commit to whether it is phenomenal or access consciousness that is being defined.

Access should be understood as a relational notion:

A system X accesses content from system Y iff X uses that content in its computations/processing.

The accessibility of information is then defined as its potential access by other systems. Dehaene (Dehaene et al. 2006) introduces a threefold distinction: (1) neural states that carry information that is not accessible (subliminal information); (2) states that carry information that is accessible but not accessed (not in the workspace; preconscious information); and (3) states whose information is accessed by the workspace (conscious information) and is globally accessible to other systems. So, a necessary and sufficient condition for a state’s being conscious rather than not is the access of a state or content by the workspace, making that state or content accessible to other systems. Hence, only states in (3) are conscious.
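The threefold distinction can be summarized as a small decision rule. The Python sketch below is only an illustrative gloss on the taxonomy as stated above; the enum and function names are hypothetical, and it treats accessibility and access as simple yes/no properties, which the theory itself need not assume.

```python
from enum import Enum


class Status(Enum):
    SUBLIMINAL = "subliminal"      # (1) information is not accessible
    PRECONSCIOUS = "preconscious"  # (2) accessible but not accessed by the workspace
    CONSCIOUS = "conscious"        # (3) accessed by the workspace, globally accessible


def classify(accessible: bool, accessed_by_workspace: bool) -> Status:
    """Classify a neural state under the threefold distinction."""
    if accessed_by_workspace and not accessible:
        # Being accessed presupposes being accessible, so this pairing is incoherent.
        raise ValueError("a state cannot be accessed without being accessible")
    if not accessible:
        return Status.SUBLIMINAL
    if not accessed_by_workspace:
        return Status.PRECONSCIOUS
    return Status.CONSCIOUS


if __name__ == "__main__":
    for accessible, accessed in [(False, False), (True, False), (True, True)]:
        print(accessible, accessed, classify(accessible, accessed).value)
```

Only the third case returns `Status.CONSCIOUS`, reflecting the claim that access by the workspace is both necessary and sufficient for a state's being conscious.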

![see legend. The top figure is a series of dotted concentric circles with a network of lines and nodes imposed on top; the innermost circle is labeled 'Global Workspace'. The circles are divided into five sectors [except the sector radii don't cross the innermost circle]; each sector has a label in an arrow pointing in [unless otherwise noted]. Clockwise from the top, the labels are 'Evaluative Systems (VALUE)', 'Attentional Systems (FOCUSING)', 'Motor systems (FUTURE)' [the only one with an arrow pointing out], 'Perceptual systems (PRESENT)', 'Long-Term Memory (PAST)'. The bottom figure is the same network of lines and nodes (minus circles, arrows, and labels), but with a picture labeled 'frontal' on the left and a picture labeled 'sensory' on the right.](figure2.png)

Figure 2. The Global Neuronal Workspace

Figure Legend: The top figure provides a neural architecture for the workspace, indicating the systems that can be involved. The lower figure sets the architecture within the six layers of the cortex spanning frontal and sensory areas, with emphasis on neurons in layers 2 and 3. Figure reproduced from Dehaene, Kerszberg, and Changeux 1998. Copyright (1998) National Academy of Sciences.

The global neuronal workspace theory ties access to brain architecture. It postulates a cortical structure that involves workspace neurons with long-range connections linking systems: perceptual, mnemonic, attentional, evaluational and motoric.

What is the global workspace in neural terms? Long-range workspace neurons within different systems can constitute the workspace, but they should not necessarily be identified with the workspace. A subset of workspace neurons becomes the workspace when they exemplify certain neural properties. What determines which workspace neurons constitute the workspace at a given time is the activity of those neurons given the subject’s current state. The workspace then is not a rigid neural structure but a rapidly changing neural network, typically only a proper subset of all workspace neurons.

Consider then a neural population that carries content p and is constituted by workspace neurons. In virtue of being workspace neurons, the content p is accessible to other systems, but it does not yet follow that the neurons then constitute the global workspace. A further requirement is that workspace neurons are (1) put into an active state that must be sustained so that (2) the activation generates a recurrent activity between workspace systems. Only when these systems are recurrently activated are they, along with the units that access the information they carry, constituents of the workspace. This activity accounts for the idea of global broadcast in that workspace contents are accessible to further systems. Broadcasting explains the idea of consciousness as for the subject: globally broadcasted content is accessible for the subject’s use in informing behavior.

The global neuronal workspace theory provides an account of access consciousness, but what of phenomenal consciousness? The theory predicts widespread activation of a cortical workspace network as correlated with phenomenal conscious experience, and proponents often appeal to imaging results that reveal widespread activation when consciousness is reported (Dehaene & Changeux 2011). There is, however, a potential confound. We track phenomenal consciousness by access in introspective report, so widespread activity during reports of conscious experience correlates with both access and phenomenal consciousness. Correlation cannot tell us whether the observed activity is the basis of phenomenal consciousness or of access consciousness in report (Block 2007). This remains a live question for, as discussed in section 2.2, we do not have empirical evidence that overflow is false.

To eliminate the confound, experimenters ensure that performance does not differ between conditions where consciousness is present and where it is not. Where this was controlled, widespread activation was not clearly observed (Lau & Passingham 2006). Still, the absence of observed activity by an imaging technique does not imply the absence of actual activity for the activity might be beyond the limits of detection of that technique. Further, there is a general concern about the significance of null results given that neuroscience studies focused on prefrontal cortex are typically underpowered (for discussion, see Odegaard, Knight, & Lau 2017).

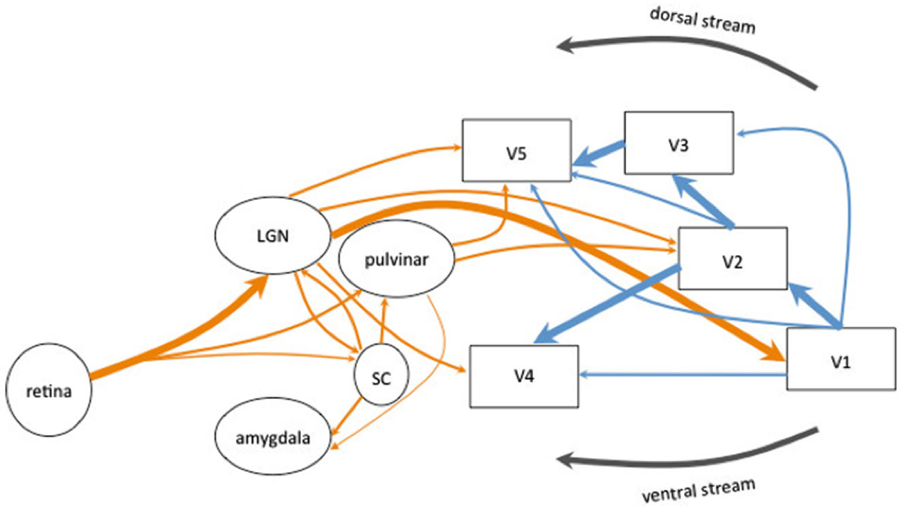

3.2 Recurrent Processing Theory

A different explanation ties perceptual consciousness to processing independent of the workspace, with focus on recurrent activity in sensory areas. This approach emphasizes properties of first-order neural representation as explaining consciousness. Victor Lamme (2006, 2010) argues that recurrent processing is necessary and sufficient for consciousness. Recurrent processing occurs where sensory systems are highly interconnected and involve feedforward and feedback connections. For example, forward connections from primary visual area V1, the first cortical visual area, carry information to higher-level processing areas, and the initial registration of visual information involves a forward sweep of processing. At the same time, there are many feedback connections linking visual areas (Felleman & Van Essen 1991), and later in processing, these connections are activated yielding dynamic activity within the visual system.

Lamme identifies four stages of normal visual processing:

- Stage 1: Superficial feedforward processing: visual signals are processed locally within the visual system.

- Stage 2: Deep feedforward processing: visual signals have travelled further forward in the processing hierarchy where they can influence action.

- Stage 3: Superficial recurrent processing: information has traveled back into earlier visual areas, leading to local, recurrent processing.

- Stage 4: Widespread recurrent processing: information activates widespread areas (and as such is consistent with global workspace access).

Lamme holds that recurrent processing in Stage 3 is necessary and sufficient for consciousness. Thus, what it is for a visual state to be conscious is for a certain recurrent processing state to hold of the relevant visual circuitry. This identifies the crucial difference between the global neuronal workspace and recurrent processing theory: the former holds that recurrent processing at Stage 4 is necessary for consciousness while the latter holds that recurrent processing at Stage 3 is sufficient. Thus, recurrent processing theory affirms phenomenal consciousness without access by the global neuronal workspace. In that sense, it is an overflow theory (see section 2.2).
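The disagreement between the two theories can be laid out stage by stage. The Python sketch below is a simplified gloss, not a formal statement of either theory: it assumes that Stage 4 amounts to entry into the global workspace (the text says only that Stage 4 is consistent with workspace access), and the names `STAGES`, `verdict` and `disputed_stages` are introduced here for illustration.

```python
# Lamme's four stages of visual processing, as listed above.
STAGES = {
    1: "superficial feedforward processing",
    2: "deep feedforward processing",
    3: "superficial recurrent processing",
    4: "widespread recurrent processing",
}


def verdict(theory: str, stage: int) -> bool:
    """Return whether the theory counts a state at this stage as conscious.

    "GNW": global neuronal workspace; requires widespread (Stage 4) activity,
           assumed here to coincide with workspace entry.
    "RPT": recurrent processing theory; recurrent processing from Stage 3
           onward is treated as sufficient.
    """
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage}")
    if theory == "GNW":
        return stage == 4
    if theory == "RPT":
        return stage >= 3
    raise ValueError(f"unknown theory: {theory}")


def disputed_stages() -> list:
    """Stages at which the two theories return different verdicts."""
    return [s for s in STAGES if verdict("GNW", s) != verdict("RPT", s)]


if __name__ == "__main__":
    for stage, label in STAGES.items():
        print(stage, label, verdict("GNW", stage), verdict("RPT", stage))
    print("disputed:", disputed_stages())
```

On this gloss the theories agree about feedforward processing and about widespread recurrent processing, and diverge only at Stage 3, which is where recurrent processing theory locates phenomenal consciousness without workspace access.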

Why think that Stage 3 processing is sufficient for consciousness? Given that Stage 3 processing is not accessible to introspective report, we lack introspective evidence for sufficiency. Lamme appeals to experiments with brief presentation of stimuli such as letters where subjects are said to report seeing more than they can identify in report (Lamme 2010). For example, in George Sperling’s partial report paradigm (Sperling 1960), subjects are briefly presented with an array of 12 letters (e.g., in 300 ms presentations) but are typically able to report only three to four letters even as they claim to see more letters (but see Phillips 2011). It is not clear that this is strong motivation for recurrent processing, since the very fact that subjects can report seeing more letters shows that they have some access to them, just not access to letter identity.

Lamme also presents what he calls neuroscience arguments. This strategy compares two neural networks, one taken to be sufficient for consciousness, say the processing at Stage 4 as per Global Workspace theories, and one where sufficiency is in dispute, say recurrent activity in Stage 3. Lamme argues that certain features found in Stage 4 are also found in Stage 3 and given this similarity, it is reasonable to hold that Stage 3 processing suffices for consciousness. For example, both stages exhibit recurrent processing. Global neuronal workspace theorists can allow that recurrent processing in Stage 3 is correlated, even necessary, but deny that this activity is explanatory in the relevant sense of identifying sufficient conditions for consciousness.

It is worth reemphasizing the empirical challenge in testing whether access is necessary for phenomenal consciousness (sections 2.1–2.2). The two theories return different answers, one requiring access, the other denying it. As we saw, the methodological challenge in testing for the presence of phenomenal consciousness independently of access remains a hurdle for both theories.

3.3 Higher-Order Theory

A long-standing approach to conscious states holds that one is in a conscious state if and only if one relevantly represents oneself as being in such a state. For example, one is in a conscious visual state of seeing a moving object if and only if one suitably represents oneself as being in that visual state. This higher-order state, in representing the first-order state that represents the world, results in the first-order state’s being conscious as opposed to not. The intuitive rationale for such theories is that if one were in a visual state but in no way aware of that state, then the visual state would not be conscious. Thus, to be in a conscious state, one must be aware of it, i.e., represent it (Rosenthal 2002; see the entry on higher order theories of consciousness). On certain higher-order theories (Higher-Order Thought Theory, Rosenthal 2005; and Higher-Order Representation of a Representation (HOROR), Brown 2015), one can be in a conscious visual state even if there is no visual system activity, so long as one represents oneself as being in that state (for a debate, see Block 2011; Rosenthal 2011). Another family of theories postulates that experiences are jointly determined by first- and higher-order states [e.g., Higher-Order State Space (HOSS) (Fleming 2020); Perceptual Reality Monitoring (PRM) (Lau 2019)]. An intermediate perspective proposes that higher-order states track our mental attitudes towards first-order states along different dimensions that include familiarity, vividness, value, and so on (Self-Organizing Meta-Representational Account (SOMA), Cleeremans 2011; Cleeremans et al. 2020). These differences apart, higher-order theories connect with empirical work by tying higher-order representations to brain activity, typically in the prefrontal cortex, which is taken to be the neural substrate of the required higher-order representations.

The focus on the prefrontal cortex allows for empirical tests of the higher-order theory against other accounts (Lau & Rosenthal 2011; LeDoux & Brown 2017; Brown, Lau, & LeDoux 2019; Lau 2022). For example, on the higher-order theory, lesions to prefrontal cortex should affect consciousness (see Kozuch 2013, 2022, 2023), so lesion studies test whether prefrontal cortex is necessary for consciousness. Against higher-order theories, some reports claim that patients whose prefrontal cortex has been surgically removed retain perceptual consciousness (Boly et al. 2017) and that intracranial electrical stimulation (iES) of the prefrontal cortex does not alter consciousness (Raccah, Block, & Fox 2021). This would lend support to recurrent processing theories that hold that prefrontal cortical activity is not necessary for consciousness (and would be evidence against both GWT and higher-order theories). It is not clear, however, that the interventions succeeded in removing all of prefrontal cortex; they may have left enough frontal areas to sustain consciousness (Odegaard, Knight, & Lau 2017). Nor is it clear that simple, localized stimulation of prefrontal cortex is the right kind of stimulation for altering awareness (see Naccache et al. 2021). Bilateral suppression of prefrontal activity using transcranial magnetic stimulation also seems to selectively impair visibility as evidenced by metacognitive report (Rounis et al. 2010). Furthermore, certain syndromes and experimental manipulations suggest consciousness in the absence of appropriate sensory processing as predicted by some higher-order accounts (Lau & Brown 2019), a claim that coheres with the theory’s sufficiency claims.

Trials that subjects report as conscious, as opposed to unconscious, are associated with activity in frontal regions, as shown with EEG (Cul et al. 2009) and fMRI (Lau & Passingham 2006). Liu and colleagues (Liu et al. 2019) leveraged the “double-drift illusion” to show that real and apparent motion shared patterns of neural activity only in lateral and medial frontal cortex, not visual cortex. The double-drift illusion is a dramatic mismatch between physical and apparent motion created by a patch of gratings moving vertically while the gratings cycle horizontally; this makes the patch’s path appear to deviate from vertical by more than 45°. The conscious experience of seeing a stimulus veer off diagonally, whether physically or illusorily, was similarly encoded only in the prefrontal cortex, suggesting that the conscious experience of the stimulus’s diagonal motion was represented there, not in visual cortex. In a carefully designed no-report paradigm, Hatamimajoumerd and colleagues (2022) found that conscious stimuli were decodable from prefrontal cortex well above chance. Intracranial electrophysiological recording, where electrodes are placed directly on the surface of the brain, reveals prefrontal activity related to visual consciousness even when subjects are not required to respond to the stimulus (Noy et al. 2015). Fazekas and Nemeth (2018) discuss studies using different neuroimaging techniques showing significant increases in activity in the prefrontal cortex during dreams, a natural case of phenomenal awareness without report. Convergent evidence about the role of the prefrontal cortex in sustaining awareness comes from single-cell recordings in macaques. Using binocular rivalry (see section 5.2), Dwarakanath et al. (2023) and Kapoor et al. (2022) show that dynamic subjective changes in monkeys’ conscious experiences are robustly represented in prefrontal cortex.

3.4 Information Integration Theory

Information Integration Theory of Consciousness (IIT) draws on the notion of integrated information, symbolized by Φ, as a way to explain generic consciousness—specifically the quantity of consciousness present in a system (Tononi 2004, 2008; Oizumi, Albantakis, & Tononi 2014; Albantakis et al. 2023). IIT also aims to explain specific consciousness (i.e., the quality or content of conscious experiences) by appealing to the conceptual causal structure of the integrated information complex (i.e., the set of units of the physical substrate that is maximally integrated).

IIT “starts from phenomenology and makes use of thought experiments to claim that consciousness is integrated information” (Tononi 2008: 216). IIT’s first step is to find “phenomenological axioms”, that is, immediately given essential properties of every conceivable experience. Once properly understood, these phenomenological axioms are taken by IIT to be irrefutably true (Albantakis et al. 2023: 3). These axioms, obtained by drawing on introspection and reason, are that consciousness exists, and that it is intrinsic, specific, unitary, definite, and structured. These axioms lead to postulates of physical existence, that is, to requirements on physical implementations that respect the properties first discovered through introspection. Finally, IIT develops mathematical formalisms that aim to preserve all these features and that can in principle help calculate the quality and quantity of integrated information in a system, or Φ.[7]

Integrated information is defined in terms of the effective information carried by the parts of the system in light of its causal profile. For example, consider a circuit (neuronal, electrical, or otherwise). We can focus on a part of the whole circuit, say two connected nodes, and compute the effective information that can be carried by this microcircuit. The system carries integrated information if the effective informational content of the whole is greater than the sum of the informational content of the parts. If there is no partitioning where the summed informational content of the parts equals the whole, then the system as a whole carries integrated information and it has a positive value for Φ. Intuitively, the interaction of the parts adds more to the system than the parts do alone.
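The whole-versus-parts comparison can be made concrete with a small sketch. What follows is not the Φ of any published version of IIT, whose formalism is considerably more involved. It is a simplified "whole-minus-sum" toy measure under stated assumptions: nodes are binary, the update rule is deterministic, every state is perturbed with equal probability, and effective information is approximated by the mutual information between a set of nodes now and the same set one step later. The names `toy_phi`, `effective_information`, `copy_update`, and `swap_update` are hypothetical and introduced only for illustration.

```python
from collections import Counter
from itertools import combinations, product
from math import log2


def mutual_information(pairs):
    """Mutual information (bits) between x and y, given equally likely (x, y) pairs."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    return sum((c / n) * log2(c * n / (px[x] * py[y])) for (x, y), c in joint.items())


def effective_information(update, n_nodes, part):
    """Toy stand-in for effective information: mutual information between the
    part's current state and its next state when all nodes are set uniformly."""
    pairs = []
    for state in product((0, 1), repeat=n_nodes):
        nxt = update(state)
        pairs.append((tuple(state[i] for i in part), tuple(nxt[i] for i in part)))
    return mutual_information(pairs)


def toy_phi(update, n_nodes):
    """Minimum over bipartitions of (information of whole - summed information of parts).
    Assumption: no normalization across partitions, unlike fuller treatments."""
    nodes = tuple(range(n_nodes))
    whole = effective_information(update, n_nodes, nodes)
    best = None
    for k in range(1, n_nodes // 2 + 1):
        for a in combinations(nodes, k):
            b = tuple(i for i in nodes if i not in a)
            parts = (effective_information(update, n_nodes, a)
                     + effective_information(update, n_nodes, b))
            diff = whole - parts
            best = diff if best is None else min(best, diff)
    return best


def copy_update(state):
    """Two isolated nodes: each node just keeps its own value."""
    return state


def swap_update(state):
    """Two coupled nodes: each node takes on the other's value."""
    return (state[1], state[0])


print(toy_phi(copy_update, 2))  # parts account for everything the whole carries
print(toy_phi(swap_update, 2))  # the whole carries information that no part carries
```

In the isolated circuit, each node alone predicts its own next state, so the parts sum to the whole and the toy measure is zero. In the swapping circuit, neither node alone carries any information about its own next state, so everything the whole carries is lost under partition. Unlike Φ as IIT defines it, this simplified quantity can come out negative for some systems, which is one reason it should be read only as an illustration of the intuition.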

IIT holds that an above-zero value for Φ implies that a system is conscious, with more consciousness going with greater values for Φ. For example, Tononi argues that the human cerebellum does not contribute to consciousness due to its highly modular anatomical organization. Thus, it is hypothesized that the cerebellum has a Φ value of zero despite containing four to five times as many neurons as the human cortex. On IIT, what matters is the presence of appropriate connections and not the number of neurons (the soundness of this argument about the cerebellum has been contested; Aaronson 2014b; Merker, Williford, & Rudrauf 2022). For this same reason, IIT also makes counterintuitive predictions. These range from panpsychist conclusions, such as admitting that even a 2D grid of inactivated logic gates “doing absolutely nothing, may in fact have a large value of PHI” (Tononi 2014 in Other Internet Resources), to neuroscientifically implausible ones: “a brain where no neurons were activated, but were kept ready to respond in a differentiated manner to different perturbations, would be conscious (perhaps that nothing was going on)” (Tononi 2004). (In Other Internet Resources, see Aaronson 2014a and 2014b for striking counterexamples; see Tononi 2014 for a response).

It is important to note that IIT is not in and of itself a neuroscientific theory of consciousness. Rather, IIT is probably best understood as a metaphysical theory about the essential features of consciousness. Accordingly, these features could be present not just in organisms with neural systems but in any physical system (organic or not) that integrates information with a Φ larger than 0 (Tononi et al. 2016). Evidence of its metaphysical status can be found in the theory’s idealist corollaries (see the entry on idealism). According to Tononi, and in stark contrast to current neuroscientific assumptions, only intrinsic entities (i.e., conscious entities) “truly exist and truly cause, whereas my neurons or my atoms neither truly exist nor truly cause” (Tononi et al. 2022: 2 [Other Internet Resources]). Relatedly, IIT has been criticized regarding its soundness and its neuroscientific status on both empirical and theoretical grounds. One notable concern is the lack of clarity about how IIT’s metaphysical claims bear on a neuroscientific understanding of consciousness.[8]

3.5 Frontal or Posterior?

In recent years, one way to frame the debate between theories of generic consciousness is to ask whether the “front” or the “back” of the brain is crucial. Using this rough distinction allows us to draw the following contrasts: Recurrent processing theories focus on sensory areas (in vision, the “back” of the brain) such that where processing achieves a certain recurrent state, the relevant contents are conscious even if no higher-order thought is formed or no content enters the global workspace. Similarly, proponents of IIT have recently emphasized a “posterior hot zone” covering parietal and occipital areas as a neural correlate of consciousness, as they speculate that this zone may have the highest value for Φ (Boly et al. 2017; but see Lau 2023). For certain higher-order thought theories, having a higher-order state, supported by prefrontal cortex, without corresponding sensory states can suffice for conscious states. In this case, the front of the brain would be sufficient for consciousness. Finally, the global neuronal workspace theory, drawing on workspace neurons that are present across brain areas to form the workspace, might be taken to straddle the difference, depending on the type of conscious state involved. The theory requires entry into the global workspace, so that neither sensory activity nor a higher-order thought on its own is sufficient, i.e., neither just the front nor just the back of the brain.

The point of talking coarsely of brain anatomy in this way is to highlight the neural focus of each theory and thus the targets of manipulation as we seek explanatory neural correlates, that is, what is necessary and/or sufficient for generic consciousness. What is clear is that once theories make concrete predictions about the brain areas involved in generic consciousness, neuroscience can test them.

4. Neuroscience of Generic Consciousness: Unconscious Vision as Case Study

Since generic consciousness is a matter of a state’s being conscious or not, we can examine work on specific types of mental state that shift between being conscious and not, and isolate their neural substrates. Work on unconscious vision provides an informative example. In the past decades, scientists have argued for unconscious seeing and investigated its brain basis, especially in neuropsychology, the study of subjects with brain damage. Interestingly, if there is unconscious seeing, then the intentional action inference must be restricted in scope since some intentional behaviors might be guided by unconscious perception (section 2.4). That is, the existence of unconscious perception blocks a direct inference from perceptually guided intentional behavior to perceptual consciousness. The case study of unconscious vision promises to illuminate more specific studies of generic consciousness along with having repercussions for how we attribute conscious states.

4.1 Unconscious Vision and the Two Visual Streams

Since the groundbreaking work of Leslie Ungerleider & Mortimer Mishkin (1982), scientists divide primate cortical vision into two streams: dorsal and ventral (for further dissection, see Kravitz et al. 2011). The dorsal stream projects into the parietal lobe while the ventral stream projects into the temporal lobe (see Figure 1). Controversy surrounds the functions of the streams. Ungerleider and Mishkin originally argued that the streams were functionally divided in terms of what and where: the ventral stream for categorical perception and the dorsal stream for spatial perception. David Milner and Melvyn Goodale (1995) have argued that the dorsal stream is for action and the ventral stream for “perception”, namely for guiding thought, memory and complex action planning (see Goodale & Milner 2004 for an engaging overview). There continues to be debate surrounding the Milner and Goodale account (Schenk & McIntosh 2010) but it has strongly influenced philosophers of mind.

Substantial motivation for Milner and Goodale’s division draws on lesion studies in humans. Lesions to the dorsal stream do not seem to affect conscious vision in that subjects are able to provide accurate reports of what they see (but see Wu 2014a). Rather, dorsal lesions can affect the visual guidance of action, with optic ataxia being a common result. Optic ataxic subjects perform inaccurate motor actions. For example, they grope for objects, yet they can accurately report the object’s features (for reviews, see Andersen et al. 2014; Pisella et al. 2009; Rossetti, Pisella, & Vighetto 2003). Lesions in the ventral stream disrupt normal conscious vision, yielding visual agnosia, an inability to see visual form or to visually categorize objects (Farah 2004).

Dorsal stream processing is said to be unconscious. If the dorsal stream is critical in the visual guidance of many motor actions such as reaching and grasping, then those actions would be guided by unconscious visual states. The visual agnosic patient DF provides critical support for this claim.[9] Due to carbon monoxide poisoning, DF suffered focal lesions largely in the ventral stream spanning the lateral occipital complex that is associated with processing of visual form (high resolution imaging also reveals small lesions in the parietal lobe; James et al. 2003). Like other visual agnosics with similar lesions, DF is at chance in reporting aspects of form, say the orientation of a line or the shape of objects. Nevertheless, she retains color and texture vision. Strikingly, DF can generate accurate visually guided action, say the manipulation of objects along specific parameters: putting an object through a slot or reaching for and grasping round stones in a way sensitive to their center of mass. Simultaneously, DF denies seeing the relevant features and, if asked to verbally report them, she is at chance. In this dissociation, DF’s verbal reports give evidence that she does not visually experience the features to which her motor actions remain sensitive.