Animal Consciousness

There are many reasons for philosophical interest in nonhuman animal (hereafter “animal”) consciousness. First, if philosophy often begins with questions about the place of humans in nature, one way humans have attempted to locate themselves is by comparison and contrast with those things in nature most similar to themselves, i.e., other animals. Second, the problem of determining whether animals are conscious stretches the limits of knowledge and scientific methodology (beyond breaking point, according to some). Third, the question of whether animals are conscious beings or “mere automata”, as Cartesians would have it, is of considerable moral significance given the dependence of modern societies on mass farming and the use of animals for biomedical research. Fourth, while theories of consciousness are frequently developed without special regard to questions about animal consciousness, the plausibility of such theories has sometimes been assessed against the results of their application to animal consciousness.

Questions about animal consciousness are just one corner of a more general set of questions about animal cognition and mind. The so-called “cognitive revolution” that took place during the latter half of the 20th century has led to many innovative experiments by comparative psychologists and ethologists probing the cognitive capacities of animals. The philosophical issues surrounding the interpretation of experiments to investigate perception, learning, categorization, memory, spatial cognition, numerosity, communication, language, social cognition, theory of mind, causal reasoning, and metacognition in animals are discussed in the entry on animal cognition. Despite all this work, the topic of consciousness per se in animals has remained controversial, even taboo, among scientists, even while it remains a matter of common sense to most people that many other animals do have conscious experiences.

- 1. Concepts of Consciousness

- 2. Historical Background

- 3. Basic Philosophical Questions: Epistemological and Ontological

- 4. Applying Philosophical Theories

- 5. Arguments Against Animal Consciousness

- 6. Arguments For Animal Consciousness

- 7. Current Scientific Investigations

- 8. Summary

- Bibliography

- Other Internet Resources

- Related Entries

1. Concepts of Consciousness

In discussions of animal consciousness there is no clearly agreed upon sense in which the term “consciousness” is used. Having origins in folk psychology, “consciousness” has a multitude of uses that may not be resolvable into a single, coherent concept (Wilkes 1984). Nevertheless, several useful distinctions among different notions of consciousness have been made, and with the help of these distinctions it is possible to gain some clarity on the important questions that remain about animal consciousness.

Two ordinary senses of consciousness which are not in dispute when applied to animals are the sense of consciousness involved when a creature is awake rather than asleep[1], or in a coma, and the sense of consciousness implicated in the basic ability of organisms to perceive and thereby respond to selected features of their environments, thus making them conscious or aware of those features. Consciousness in both these senses is identifiable in organisms belonging to a wide variety of taxonomic groups (see, e.g., Mather 2008).

A third, more technical notion of consciousness, access consciousness, has been introduced by Block (1995) to capture the sense in which mental representations may be poised for use in rational control of action or speech. This “dispositional” account of access consciousness — the idea that the representational content is available for other systems to use — is amended by Block (2005) to include an occurrent aspect in which the content is “broadcast” in a “global workspace” (Baars 1997) which is then available for higher cognitive processing tasks such as categorization, reasoning, planning, and voluntary direction of attention. Block believes that many animals possess access consciousness (speech is not a requirement). Indeed, some of the neurological evidence cited by Block (2005) in support of the global workspace is derived from monkeys. But clearly an author such as Descartes, who, we will see, denied speech, language, and rationality to animals, would also deny access consciousness to them. Those who follow Davidson (1975) in denying intentional states to animals would likely concur.

There are two remaining senses of consciousness that cause controversy when applied to animals: phenomenal consciousness and self-consciousness.

Phenomenal consciousness refers to the qualitative, subjective, experiential, or phenomenological aspects of conscious experience, sometimes identified with qualia. (In this article I also use the term “sentience” to refer to phenomenal consciousness.) To contemplate animal consciousness in this sense is to consider the possibility that, in Nagel's (1974) phrase, there might be “something it is like” to be a member of another species. Nagel disputes our capacity to know, imagine, or describe in scientific (objective) terms what it is like to be a bat, but he assumes that there is something it is like. There are those, however, who would challenge this assumption directly. Others would less directly challenge the possibility of scientifically investigating its truth. Nevertheless, there is broad commonsense agreement that phenomenal consciousness is more likely in mammals and birds than it is in invertebrates, such as insects, crustaceans or molluscs (with the possible exception of some cephalopods), while reptiles, amphibians, and fish constitute an enormous grey area for most scientists and philosophers. However, some researchers are even willing to attribute a minimal form of experiential consciousness to organisms that are phylogenetically very remote from humans and that have just a few neurons (Ginsburg & Jablonka 2007a).

Self-consciousness refers to an organism's capacity for second-order representation of the organism's own mental states. Because of its second-order character (“thought about thought”), the capacity for self-consciousness is closely related to questions about “theory of mind” in nonhuman animals, that is, whether any animals are capable of attributing mental states to others. Questions about self-consciousness and theory of mind in animals are a matter of active scientific controversy, with the most attention focused on chimpanzees and to a more limited extent on the other great apes. As attested by this controversy (and unlike questions about animal sentience), questions about self-consciousness in animals are commonly regarded as tractable by empirical means.

The remainder of this article deals primarily with the attribution of consciousness in its phenomenal sense to animals, although there will be some discussion of access consciousness, self-consciousness and theory of mind in animals, especially where these have been related theoretically to phenomenal consciousness, as, for instance, in Carruthers' (1998a,b, 2000) argument that theory of mind is required for phenomenal consciousness. (For more discussion of self-awareness and metacognition see the section on theory of mind and metacognition in the Encyclopedia's entry on Animal Cognition.)

2. Historical Background

Questions about animal consciousness in the Western tradition have their roots in ancient discussions about the nature of human beings, as filtered through the “modern” philosophy of Descartes. It would be anachronistic to read modern ideas about consciousness back into the ancient literature. Nevertheless, because consciousness is sometimes thought to be a uniquely human mental phenomenon, it is important to understand the origins of the idea that humans are qualitatively (and “qualia-tatively”) different from animals.

Aristotle asserted that only humans had rational souls, while the locomotive souls shared by all animals, human and nonhuman, endowed animals with instincts suited to their successful reproduction and survival. Sorabji (1993) argues that the denial of reason to animals created a crisis for Greek thought, requiring a “wholesale reanalysis” (p. 7) of the nature of mental capacities, and a revision in thinking about “man and his place in nature above the animals” (ibid.). The argument about what reasoning is, and whether animals display it, remains with us 25 centuries later, as evidenced by the volume Rational Animals? (Hurley & Nudds 2006). The Great Chain of Being, derived from early Christian interpretation of Aristotle's scale of nature (Lovejoy 1936), provides another Aristotelian influence on the debate about animal minds.

Two millennia after Aristotle, Descartes' mechanistic philosophy introduced the idea of a reflex to explain the behavior of nonhuman animals. Although his conception of animals treated them as reflex-driven machines, with no intellectual capacities, it is important to recognize that he took mechanistic explanation to be perfectly adequate for explaining sensation and perception — aspects of animal behavior that are nowadays often associated with consciousness. He drew the line only at rational thought and understanding. Given the Aristotelian division between instinct and reason and the Cartesian distinction between mechanical reflex and rational thought, it's tempting to map the one distinction onto the other. Nevertheless, Crowley & Allen (2008) argue that it would be a mistake to assimilate the two. First, a number of authors before and after Darwin have believed that conscious experience can accompany instinctive and reflexive actions. Second, the dependence of phenomenal consciousness on rational, self-reflective thought is a strong and highly doubted claim (although it has current defenders, discussed below).

Although the roots of careful observation of, and experimentation on, the natural world go back to ancient times, studies of animal behavior remained largely anecdotal until long after the scientific revolution. In the intervening period, animals were, of course, widely used in pursuit of anatomical, physiological, and embryological questions, and vivisection was carried out by such luminaries as Galen. Whether or not Descartes himself practiced vivisection (his own words indicate that he did), the mechanists who followed him used Descartes' denial of reason and a soul to animals as a rationale for their belief that live animals felt nothing under their knives.

A few glimmers of experimental approaches to animal behavior can be seen in the late 18th century (e.g., Barrington 1773; White 1789), and soon thereafter Frédéric Cuvier worked from 1804 until his death in 1838 on the development of sexual and social behavior in captive mammals. By the mid-19th century Alfred Russel Wallace (1867) was arguing explicitly for an experimental approach to animal behavior, and Douglas Spalding's (1872) experiments on instinctual feeding behaviors in chicks were seminal. Still, these emerging experimental approaches had very little to say about consciousness per se, though Spalding's work can be seen as a contribution to the discussion about instinct and reason.

In the same vein of instinct vs. reason, Darwin in the Descent of Man wrote, “It is a significant fact, that the more the habits of any particular animal are studied by a naturalist, the more he attributes to reason, and the less to unlearnt instinct” (1871, Book I, p.46). He devoted considerable attention in both the Origin of Species and the Descent of Man to animal behavior, with the obvious goal of demonstrating mental continuity among the species. To make his case, Darwin relied heavily on anecdotes provided by his correspondents, a project infamously pursued after Darwin's death by his protégé George Romanes (1882). However, Darwin also carried out experiments and was a keen observer. In his final work he describes experiments on the flexibility of earthworm behavior in manipulating leaves, which he took to show considerable intelligence (Darwin 1881; see also Crist 2002).

The idea of behavioral flexibility is central to discussions of animal mind and consciousness. Descartes' conception of animals as automata seems to make phenomenal consciousness superfluous at best — a connection whose philosophical development was traced by T.H. Huxley (1874). Huxley reported a series of experiments on a frog, showing very similar reflexive behavior even when its spinal cord had been severed, or large portions of its brain removed. He argued that without a brain, the frog could not be conscious, but since it could still do the same sort of things that it could do before, there is no need to assume consciousness even in the presence of the entire brain, going on to argue that consciousness is superfluous. (The argument is somewhat curious since it seems to show too much by making the brain itself superfluous to the frog's behavior!)

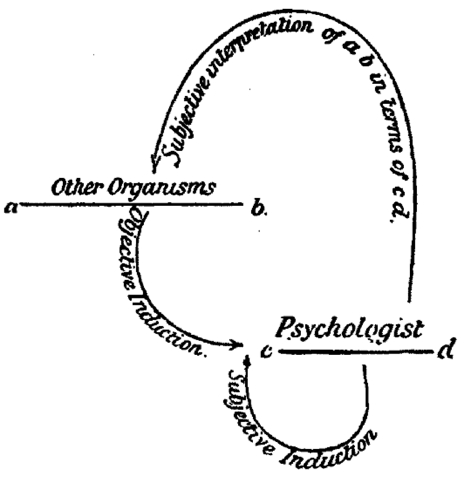

Still, for those (including Huxley) who became quickly convinced of the correctness of Darwin's theory of evolution, understanding and defending mental continuity between humans and animals loomed large. In his Principles of Psychology (1890), William James promoted the idea of differing intensities of conscious experience across the animal kingdom, an idea that was echoed by the leading British psychologist of his day, Conwy Lloyd Morgan in his 1894 textbook An Introduction to Comparative Psychology. Morgan had been very skeptical and critical of the anecdotal approach favored by Darwin and Romanes, but he came around to the Darwinian point of view about mental continuity if not about methodology. To address the methodological deficit he introduced his “double inductive” method for understanding the mental states of animals (Morgan 1894). The double induction consisted of inductive inferences based on observation of animal behavior combined with introspective knowledge of our own minds. At the same time, to counteract the anthropomorphic bias in the double inductive method, Lloyd Morgan introduced a principle now known as Morgan's canon: “in no case may we interpret an action as the outcome of the exercise of a higher psychical faculty, if it can be interpreted as the outcome of the exercise of one which stands lower in the psychological scale.” (Lloyd Morgan 1894, p.53).

Lloyd Morgan's Double Induction Method from his 1894 textbook

Even though the double inductive method is now mainly of historical interest, Morgan's canon lives on. Questions about quite what the canon means and how to justify it are active topics of historical and philosophical investigation (e.g., Burghardt 1985; Sober 1998, 2005; Radick 2000; Thomas 2001). The questions include what Lloyd Morgan means by ‘higher’ and ‘lower’, to what extent the principle can or should be justified by evolutionary considerations, and whether the canon collapses to a principle of parsimony, a version of Ockham's razor, or some general principles of empirical justification. Despite current uncertainty about what it really means, Morgan's canon, interpreted (or, perhaps, misinterpreted; Thomas 2001) as a strong parsimony principle, served a central rhetorical role for behavioristic psychologists, who sought to eliminate any hint of Cartesian dualism from comparative psychology.

Behaviorism dominated American psychology in the early part of the 20th century, beginning with Thorndike's (1911) experiments on animals learning by trial and error to escape from the “puzzle boxes” that he had constructed. But even Thorndike's famous “law of effect” refers to the animal's “satisfaction or discomfort” (1911, p.244). It was with the radical anti-mentalism of John B. Watson (1928) and B.F. Skinner (1953), both of whom strongly rejected any attempts to explain animal behavior in terms of unobservable mental states, that American psychology became the science of behavior rather than, as the dictionary would have it, the science of mind and behavior.

At the same time, things were progressing rather differently in Europe, where ethological approaches to animal behavior were more dominant. Ethology is part natural history with an emphasis on fieldwork and part experimental science conducted on captive animals, reflecting the different styles of its two seminal figures, Konrad Lorenz and Niko Tinbergen (see Burkhardt 2005). Initially, “innate” behaviors were the central focus of Lorenz's work. According to Lorenz, it is the investigation of innate behaviors in related species that puts the study of animal behavior on a par with other branches of evolutionary biology, and he demonstrated that it was possible to derive the phylogenetic relations among species by comparing their instinctive behavioral repertoires (Lorenz 1971a). In pursuing this direction, Lorenz and Tinbergen explicitly sought to distance ethology from the purposive, mentalistic, animal psychology of Bierens de Haan and the lack of biological concern they detected in American comparative psychology (see Brigandt 2005). Like Lloyd Morgan, the ethologists rejected Romanes' anecdotal approach, but they also criticized Lloyd Morgan's subjectivist approach.

In the 1970s, Donald Griffin, who made his reputation taking careful physical measurements to prove that bats use echolocation, made a considerable splash with his plea for a return to questions about animal minds, especially animal consciousness. Griffin (1978) coined the term “cognitive ethology” to describe this research program. Fierce criticism of Griffin emerged both from psychologists and classically trained ethologists (Bekoff & Allen 1997). Griffin emphasized behavioral flexibility and versatility as the chief source of evidence for consciousness, which he defined as “the subjective state of feeling or thinking about objects and events” (Griffin & Speck 2004, p. 6). In seeing subjectivity, at least in simple forms, as a widespread phenomenon in the animal kingdom, Griffin's position also bears considerable resemblance to Lloyd Morgan's. Burghardt reports that “considerable discomfort with subjectivism” (Burghardt 1985, p. 907) arose during the Dahlem conference that Griffin convened in an early discipline-building exercise (Griffin 1981). Griffin's subjectivist position, and the suggestion that even insects such as honeybees are conscious, seemed to many scientists to represent a lamentable return to the anthropomorphic over-interpretation of anecdotes seen in Darwin and Romanes. This criticism may be partly unfair in that Griffin does not repeat the “friend-of-a-farmer” kinds of story collected by Romanes, but bases his interpretations on results from the more sophisticated scientific literature that had accumulated more than a century after Darwin (e.g., Giurfa et al. 2001). However, the charge of over-interpretation of those results may be harder to avoid. It is also important to note the role played by neurological evidence in his argument, when he concludes that the intensive search for neural correlates of consciousness has not revealed “any structure or process necessary for consciousness that is found only in human brains” (Griffin & Speck 2004). This view is widely, although not universally, shared by neuroscientists.

3. Basic Philosophical Questions: Epistemological and Ontological

The topic of consciousness in nonhuman animals has been primarily of epistemological interest to philosophers of mind. Two central questions are:

- Can we know which animals besides humans are conscious? (The Distribution Question)[2]

- Can we know what, if anything, the experiences of animals are like? (The Phenomenological Question)

In his seminal paper “What is it like to be a bat?” Thomas Nagel (1974) simply assumes that there is something that it is like to be a bat, and focuses his attention on what he argues is the scientifically intractable problem of knowing what it is like. Nagel's confidence in the existence of conscious bat experiences would generally be held to be the commonsense view and, as the preceding section illustrates, a view that is increasingly taken for granted by many scientists too. But, as we shall see, it is subject to challenge and there are those who would argue that the Distribution Question is just as intractable as the Phenomenological Question.

The two questions might be seen as special cases of the general skeptical “problem of other minds”, which even if intractable is nevertheless generally ignored to good effect by psychologists. However, it is often thought that knowledge of animal minds, which Allen & Bekoff (1997) refer to as “the other species of mind problem” and Prinz (2005) calls “The Who Problem”, presents special methodological difficulties because we cannot interrogate animals directly about their experiences (but see Sober 2000 for discussion of tractability within an evolutionary framework, and Farah 2008 for a neuroscientist's perspective). Although there have been attempts to teach human-like languages to members of other species, none has reached a level of conversational ability that would solve this problem directly (see Anderson 2004 for a review). Furthermore, except for some language-related work with parrots and dolphins, such approaches are generally limited to those animals most like ourselves, particularly the great apes. But there is great interest in possible forms of consciousness in a much wider variety of species than are suitable for such research, both in connection with questions about the ethical treatment of animals (e.g., Singer 1990 [1975]; Regan 1983; Rollin 1989; Varner 1998; Steiner 2008), and in connection with questions about the natural history of consciousness (Griffin 1976, 1984, 1992; Bekoff et al. 2002).

Griffin's agenda for the discipline he labeled “cognitive ethology” features the topic of animal consciousness and advocates a methodology, inherited from classical ethology, that is based in naturalistic observations of animal behavior and the attempt to understand animal minds in the context of evolution (see Allen 2004a). This agenda has been strongly criticized, with his behavior-based methodological suggestions for studying consciousness often dismissed as anthropomorphic (see Bekoff & Allen 1997 for a survey). But such criticisms may have overestimated the dangers of anthropomorphism (Fisher 1990) and many of the critics themselves rely on claims for which there are scant scientific data (e.g., Kennedy 1992, who claims that the “sin” of anthropomorphism may be programmed into humans genetically). At the same time, other scientists, whether or not they have explicitly endorsed Griffin's program, have sought to expand evolutionary investigation of animal consciousness to include the neurosciences and a broad range of functional considerations (e.g., Århem et al. 2002).

While epistemological and related methodological issues have been at the forefront of discussions about animal consciousness, the main wave of recent philosophical attention to consciousness has been focused on ontological questions about the nature of phenomenal consciousness. One might reasonably think that the question of what consciousness is should be settled prior to tackling the Distribution Question — that ontology should drive the epistemology. In an ideal world this order of proceeding might be the preferred one, but as we shall see in the next section, the current state of disarray among the philosophical theories of consciousness makes such an approach untenable.

4. Applying Philosophical Theories

4.1 Varieties of Dualism

Dualistic theories of consciousness deny that it can be accounted for in the current terms of the natural sciences. Traditional dualists may argue that the reduction of consciousness to physically describable mechanisms is impossible on any concept of the physical. Others may hold that consciousness is an as-yet-undescribed fundamental constituent of the physical universe, not reducible to any known physical principles. Such accounts of consciousness (with the possible exception of those based in anthropocentric theology) provide no principled reasons, however, for doubting that animals are conscious.

Cartesian dualism is, of course, traditionally associated with the view that animals lack minds. But Descartes' argument for this view was not based on any ontological principles, but upon what he took to be the failure of animals to use language rationally, or to reason generally. On this basis he claimed that nothing in animal behavior requires a non-mechanistic, mental explanation; hence he saw no reason to attribute possession of mind to animals. There is, however, no conceptual reason why animal bodies are any less suitable vehicles for embodying a Cartesian mind than are human bodies. Hence Cartesian dualism does not preclude animal minds as a matter of conceptual necessity, but only as a matter of empirical contingency concerning the animals' alleged lack of specific cognitive capacities.

No past or current dualists can prove that animals necessarily lack the fundamental mental properties or substance. Furthermore, given that none of these theories specifies empirical means for detecting the right stuff for consciousness, and indeed most dualist theories cannot do so, dualists seem forced to rely upon behavioral criteria for deciding the Distribution Question. In adopting such criteria, they have some non-dualist allies. For example, Dennett (1969, 1995, 1997), while rejecting Cartesian dualism, nevertheless denies that animals are conscious in anything like the same sense that humans are, due to what he sees as the thoroughly intertwined aspect of language and human experience (see also Carruthers 1996). However, other human beings are less convinced of the centrality of language to their own mental lives (e.g., Grandin 1995).

4.2 Physicalist accounts

Early physicalist accounts of consciousness explored the philosophical consequences of identifying consciousness with unspecified physical or physiological properties of neurons. In this generic form, such theories do not provide any particular obstacles to attributing consciousness to animals, given that animals and humans are built upon the same biological, chemical, and physical principles. If it could be determined that phenomenal consciousness was identical to (or at least perfectly correlated with) some general property such as quantum coherence in the microtubules of neurons, or brain waves of a specific frequency, then settling the Distribution Question would be a straightforward matter of establishing whether or not members of other species possess the specified properties (see Seth et al. 2005). Searle (1998), although he rejects the physicalist/dualist dialectic, likewise suggests that settling the Distribution Question for hard cases like insects will become trivial once neuroscientists have carried out the non-trivial task of determining the physiological basis of consciousness in animals for which no reasonable doubt of their consciousness can be entertained (i.e., mammals).

4.3 Neurofunctional accounts

Some philosophers have sought more specific grounding in the neurosciences for their accounts of consciousness. Block (2005) pursues a strategy of using tentative functional characterizations of phenomenal and access consciousness to interpret evidence from neuroscientists' search for neural correlates of consciousness. He argues, on the basis of evidence from both humans and monkeys, that recurrent feedback activity in sensory cortex is the most plausible candidate for being the neural correlate of phenomenal consciousness in these species. Prinz (2005) also pursues a neurofunctional account, but identifies phenomenal consciousness with a different functional role than Block does. He argues for identifying phenomenal consciousness with brain processes that are involved in attention to intermediate-level perceptual representations which feed into working memory via higher level, perspective-invariant representations. Since the evidence for such processes is at least partially derived from animals, including other primates and rats, his view is supportive of the idea that phenomenal consciousness is found in some nonhuman species (presumably most mammals). Nevertheless, he maintains that it may be impossible ever to answer the Distribution Question for more distantly related species; he mentions octopus, pigeons, bees, and slugs in this context.

4.4 Representationalist accounts

Representational theories of consciousness link phenomenal consciousness with the representational content of mental states, subject to some further functional criteria.

First-order representationalist accounts hold that if a particular state of the visual system of an organism represents some property of the world in a way that is functionally appropriate (e.g., not conceptually mediated, and operating as part of a sensory system), then the organism is said to be phenomenally conscious of that property. First-order accounts are generally quite friendly to attributions of consciousness to animals, for it is relatively uncontroversial that animals have internal states that have the requisite functional and representational properties (insofar as mental representation itself is uncontroversial, that is). Such a view underlies Dretske's (1995) claim that phenomenal consciousness is inseparable from a creature's capacity to perceive and respond to features of its environment, i.e., one of the uncontroversial senses of consciousness identified above. On Dretske's view, phenomenal consciousness is therefore very widespread in the animal kingdom. Likewise, Tye (2000) argues, based upon his first-order representational account of phenomenal consciousness, that it extends even to honeybees.

4.5 Higher-order theories

Dissatisfaction with the first-order theories of consciousness has resulted in several higher-order accounts which invoke mental states directed towards other mental states to explain phenomenal consciousness. According to Carruthers' “higher order thought” (HOT) theory, a mental state is phenomenally conscious for a subject just in case it is available to be thought about directly by that subject (Carruthers 1998a,b, 2000). The term “available” here makes this a “dispositionalist” account, as opposed to an “actualist” account, which requires the actual occurrence of the second-order thought for the subject to be conscious in the relevant sense. According to Carruthers, such higher-order thoughts are not possible unless a creature has a “theory of mind” to provide it with the concepts necessary for thought about mental states. Carruthers' view is of particular interest in the current context because he has used it explicitly to deny phenomenal consciousness to (almost) all nonhuman animals.

Carruthers argues that there is little, if any, scientific support for theory of mind in nonhuman animals, even among the great apes (with the possible exception of chimpanzees), from which he concludes that there is little support either for the view that any animals possess phenomenal consciousness. Further evaluation of this argument will be taken up below, but it is worth noting here that if (as experiments on the attribution of false beliefs suggest) young children before the age of 4 lack a theory of mind, Carruthers' view entails that they are not sentient either, fear of needles notwithstanding! This is a bullet Carruthers bites, although for many it constitutes a reductio of his view (a response Carruthers would certainly regard as question-begging).

In contrast to Carruthers' higher-order thought account of sentience, other theorists such as Armstrong (1980) and Lycan (1996) have preferred a higher-order experience account, where consciousness is explained in terms of inner perception of mental states, a view that can be traced back to Aristotle, and also to John Locke. Because such models do not require the ability to conceptualize mental states, proponents of higher-order experience theories have been slightly more inclined than higher-order thought theorists to allow that such abilities may be found in other animals[3]. Gennaro (2004) argues, however, that a higher-order thought theory is compatible with consciousness in nonhuman animals. He contends that Carruthers and others have overstated the requirements for the necessary mental concepts, and that reentrant pathways in animal brains provide a structure in which higher- and lower-order representations could actually be combined into a unified conscious state.

4.6 Limits of philosophical theories

Phenomenal consciousness is just one feature (some would say the defining feature) of mental states or events. Any theory of animal consciousness must be understood, however, in the context of a larger investigation of animal cognition that (among philosophers) will also be concerned with issues such as intentionality (in the sense described by the 19th C. German psychologist Franz Brentano) and mental content (Dennett 1983, 1987; Allen 1992a,b, 1995, 1997).

Philosophical opinion divides over the relation of consciousness to intentionality with some philosophers maintaining that they are strictly independent, others (particularly proponents of the functionalist theories of consciousness described in this section) arguing that intentionality is necessary for consciousness, and still others arguing that consciousness is necessary for genuine intentionality (see Allen 1997 for discussion). Many behavioral scientists accept cognitivist explanations of animal behavior that attribute representational states to their subjects. Yet they remain hesitant to attribute consciousness. If the representations invoked within cognitive science are intentional in Brentano's sense, then these scientists seem committed to denying that consciousness is necessary for intentionality.

There remains great uncertainty about the proper explanation of consciousness. It is beyond the scope of this article to survey the strong attacks that have been mounted against the various accounts of consciousness, but it is safe to say that none of them seems secure enough to hang a decisive endorsement or denial of animal consciousness upon. Accounts of consciousness in terms of basic neurophysiological properties, the quantum-mechanical properties of neurons, or sui generis properties of the universe are just as insecure as the various functionalist accounts. And even those accounts that are compatible with animal consciousness in their general outline are not specific enough to permit ready answers to the Distribution Question in its full generality. Hence no firm conclusions about the distribution of consciousness can be drawn on the basis of the philosophical theories of consciousness that have been offered thus far.

Where does this leave the epistemological questions about animal consciousness? While it may seem natural to think that we must have a theory of what consciousness is before we try to determine whether other animals have it, this may in fact be putting the conceptual cart before the empirical horse. In the early stages of the scientific investigation of any phenomenon, putative samples must be identified by rough rules of thumb (or working definitions) rather than complete theories. Early scientists identified gold by contingent characteristics rather than its atomic essence, knowledge of which had to await thorough investigation of many putative examples, some of which turned out to be gold and some not. Likewise, at this stage of the game, perhaps the study of animal consciousness would benefit from the identification of animal traits worthy of further investigation, with no firm commitment to the idea that all these examples will involve conscious experience.

Of course, as a part of this process some reasons must be given for identifying specific animal traits as “interesting” for the study of consciousness, and in a weak sense such reasons will constitute an argument for attributing consciousness to the animals possessing those traits. These reasons can be evaluated even in the absence of an accepted theory of consciousness. Furthermore, those who would bring animal consciousness into the scientific fold in this way must also explain how scientific methodology is adequate to the task in the face of various arguments that it is inadequate. These arguments, and the response to them, can also be evaluated in the absence of ontological certitude. Thus there is plenty to cover in the remaining sections of this encyclopedia entry.

5. Arguments Against Animal Consciousness

5.1 Dissimilarity arguments

Recall the Cartesian argument from the previous section against animal consciousness (or animal mind) on the grounds that animals do not use language conversationally or reason generally. This argument, based on the alleged failure of animals to display certain intellectual capacities, is illustrative of a general pattern of using certain dissimilarities between animals and humans to argue that animals lack consciousness.

A common refrain in response to such arguments is that, in situations of partial information, “absence of evidence is not evidence of absence”. Descartes dismissed parrots vocalizing human words because he thought it was merely meaningless repetition. This judgment may have been appropriate for the few parrots he encountered, but it was not based on a systematic, scientific investigation of the capacities of parrots. Nowadays many would argue that Pepperberg's study of the African Grey parrot “Alex” (Pepperberg 1999) should lay the Cartesian prejudice to rest. This study, along with several on the acquisition of a degree of linguistic competence by chimpanzees and bonobos (e.g., Gardner et al. 1989; Savage-Rumbaugh 1996) would seem to undermine Descartes' assertions about lack of conversational language use and general reasoning abilities in animals. (See, also, contributions to Hurley & Nudds 2006.)

Cartesians respond by pointing out the limitations shown by animals in such studies (they can't play a good game of chess, after all, let alone tell us what they are thinking about), and they join linguists in protesting that the subjects of animal-language studies have not fully mastered the recursive syntax of natural human languages.[4] But this kind of post hoc raising of the bar suggests to many scientists that the Cartesian position is not being held as a scientific hypothesis, but as a dogma to be defended by any means. Convinced by evidence of sophisticated cognitive abilities, most philosophers these days (including Carruthers) agree with Block that something like access consciousness is properly attributed to many animals. Nevertheless, when it comes to phenomenal consciousness, dissimilarity arguments are not entirely powerless to give some pause to defenders of animal sentience, for surely most would agree that, at some point, the dissimilarities between the capacities of humans and the members of another species (the common earthworm Lumbricus terrestris, for example) are so great that it is unlikely that such creatures are sentient. A grey area arises precisely because no one can say how much dissimilarity is enough to trigger the judgment that sentience is absent.

5.2 Similarity arguments

A different kind of strategy that has been used to deny animal consciousness is to focus on certain similarities between animal behaviors and behaviors which may be conducted unconsciously by humans. Thus, for example, Carruthers (1989, 1992) argued that all animal behavior can be assimilated to the non-conscious activities of humans, such as driving while distracted (“on autopilot”), or to the capacities of “blindsight” patients whose damage to visual cortex leaves them phenomenologically blind in a portion of their visual fields (a “scotoma”) but nonetheless able to identify things presented to the scotoma. (He refers to both of these as examples of “unconscious experiences”.)

This comparison of animal behavior to the unconscious capacities of humans can be criticized on the grounds that, like Descartes' pronouncements on parrots, it is based only on unsystematic observation of animal behavior. There are grounds for thinking that careful investigation would reveal that there is not a very close analogy between animal behavior and human behaviors associated with these putative cases of unconscious experience. For instance, it is notable that the unconscious experiences of automatic driving are not remembered by their subjects, whereas there is no evidence that animals are similarly unable to recall their allegedly unconscious experiences. Likewise, blindsight subjects do not spontaneously respond to things presented to their scotomas, but must be trained to make responses using a forced-response paradigm. There is no evidence that such limitations are normal for animals, or that animals behave like blindsight victims with respect to their visual experiences (Jamieson & Bekoff 1991).

Nevertheless, there are empirical grounds for concern that behavior suggesting consciousness in animals may be the product of unconscious processes. Allen et al. (2009) describe work on learning in spinal cords of rats that shows phenomena analogous to latent inhibition and overshadowing. In intact animals, these learning and memory related phenomena have been argued to involve attention. But their similarity to mechanisms in the spinal cord, assumed by most not to involve consciousness, calls into question their status as evidence for consciousness. There are, of course, differences between the learning capacities of spinal cords and the learning capacities of intact organisms, and there are prima facie reasons for thinking that sophisticated forms of learning are related to consciousness (Clark & Squire 1998; Allen 2004b; Ginsburg & Jablonka 2007b; Allen et al. 2009). But the current point is similar to that made about blindsight: a more fine-grained analysis of these similarities and differences is needed before conclusions about consciousness can be drawn.

5.3 Arguments from the absence of self-consciousness

In his subsequent publications, Carruthers (1998a,b, 2000) appears to have moved on from the similarity argument of the previous section, now placing more stock in the argument based on his higher order thought theory that was described above. Recall that according to this argument, phenomenal consciousness requires the capacity to think about, and therefore conceptualize, one's own thoughts.[5] Such conceptualization requires, according to Carruthers, a theory of mind. And, Carruthers maintains, there is little basis for thinking that any nonhuman animals have a theory of mind, with the possible exception of chimpanzees. This argument is, of course, no stronger than the higher-order thought account of consciousness upon which it is based. But setting that aside for the sake of argument, this challenge by Carruthers deserves further attention as perhaps the most empirically-detailed case against animal consciousness to have been made in the philosophical literature.

Gordon Gallup (1970) developed an experimental test of mirror self-recognition (see the section on self-consciousness and metacognition below), and argues that the performance of chimpanzees in this test indicates that they are self-aware. Gallup et al. (2002) claim that “the ability to infer the existence of mental states in others (known as theory of mind, or mental state attribution) is a byproduct of being self-aware” and they describe the connection between self-awareness and theory of mind thus: “If you are self-aware then you are in a position to use your experience to model the existence of comparable processes in others.” The success of chimpanzees on the mirror self-recognition task thus may give some reason to maintain that they are phenomenally conscious on Carruthers' account.

Carruthers neither endorses nor outright rejects the conclusion that chimpanzees are sentient. His suspicion that even chimpanzees might lack theory of mind, and therefore (on his view) phenomenal consciousness, is based on some ingenious laboratory studies by Povinelli (1996) showing that in interactions with human food providers, chimpanzees apparently fail to understand the role of eyes in providing visual information to the humans, despite their outwardly similar behavior to humans in attending to cues such as facial orientation. The interpretation of Povinelli's work remains controversial. Hare et al. (2000) conducted experiments in which dominant and subordinate animals competed with each other for food, and concluded that “at least in some situations chimpanzees know what conspecifics do and do not see and, furthermore, that they use this knowledge to formulate their behavioral strategies in food competition situations.” They suggest that Povinelli's negative results may be due to the fact that his experiments involve less natural chimp-human interactions. Given the uncertainty, Carruthers is therefore well-advised in the tentative manner in which he puts forward his claims about chimpanzee sentience.

A full discussion of the controversy over theory of mind deserves an entry of its own (see also Heyes 1998), but it is worth remarking here that the theory of mind debate has origins in the hypothesis that primate intelligence in general, and human intelligence in particular, is specially adapted for social cognition (see Byrne & Whiten 1988, especially the first two chapters, by Jolly and Humphrey). Consequently, it has been argued that evidence for the ability to attribute mental states in a wide range of species might be better sought in natural activities such as social play, rather than in laboratory designed experiments which place the animals in artificial situations (Allen & Bekoff 1997; see esp. chapter 6; see also Hare et al. 2000, Hare et al. 2001, and Hare & Wrangham 2002). Furthermore, to reiterate the maxim that absence of evidence is not evidence of absence, it is quite possible that the mirror test is not an appropriate test for theory of mind in most species because of its specific dependence on the ability to match motor to visual information, a skill that may not have needed to evolve in a majority of species. Alternative approaches that have attempted to provide strong evidence of theory of mind in nonhuman animals under natural conditions have generally failed to produce such evidence (see, e.g., the conclusions about theory of mind in vervet monkeys and baboons by Cheney & Seyfarth 1990, 2007), although anecdotal evidence tantalizingly suggests that researchers still have not managed to devise the right experiments.

5.4 Methodological arguments

Many scientists remain convinced that even if questions about self-consciousness are empirically tractable, no amount of experimentation can provide access to phenomenal consciousness in nonhuman animals. This remains true even among those scientists who are willing to invoke cognitive explanations of animal behavior that advert to internal representations. Opposition to dealing with consciousness can be understood as a legacy of behavioristic psychology, first because of the behaviorists' rejection of terms for unobservables unless they could be formally defined in terms of observables, or otherwise operationalized experimentally, and second because of the strong association in many behaviorists' minds between the use of mentalistic terms and the twin bugaboos of Cartesian dualism and introspectionist psychology (Bekoff & Allen 1997). In some cases these scientists are even dualists themselves, but they are strongly committed to denying the possibility of scientifically investigating consciousness, and remain skeptical of all attempts to bring it into the scientific mainstream.

It is worth remarking that there is often a considerable disconnect between philosophers and psychologists (or ethologists) on the topic of animal minds. Some of this can be explained by the failure of some psychologists to heed the philosophers' distinction between intentionality in its ordinary sense and intentionality in the technical sense derived from Brentano (with perhaps most of the blame being apportioned to philosophers for failing to give clear explanations of this distinction and its importance). Indeed, some psychologists, having conflated Brentano's notion with the ordinary sense of intentionality, and then identifying the ordinary sense of intentionality with “free will” and conscious deliberation, have literally gone on to substitute the term “consciousness” in their criticisms of philosophers who were discussing the intentionality of animal mental states and who were not explicitly concerned with consciousness at all (see, e.g., Blumberg & Wasserman 1995).

Because consciousness is assumed to be private or subjective, it is often taken to be beyond the reach of objective scientific methods (see Nagel 1974). This claim might be taken in either of two ways. On the one hand it might be taken to bear on the possibility of answering the Distribution Question, i.e., to reject the possibility of knowledge that a member of another taxonomic group (e.g., a bat) has conscious states. On the other hand it might be taken to bear on the possibility of answering the Phenomenological Question, i.e., to reject the possibility of knowledge of the phenomenological details of the mental states of a member of another taxonomic group. The difference between believing with justification that a bat is conscious and knowing what it is like to be a bat is important because, at best, the privacy of conscious experience supports a negative conclusion only about the latter. To support a negative conclusion about the former one must also assume that consciousness has absolutely no measurable effects on behavior, i.e., one must accept epiphenomenalism. But if one rejects epiphenomenalism and maintains that consciousness does have effects on behavior then a strategy of inference to the best explanation may be used to support its attribution. More will be said about this in the next section.

6. Arguments For Animal Consciousness

6.1 Similarity arguments

Most people, if asked why they think familiar animals such as their pets are conscious, would point to similarities between the behavior of those animals and human behavior. Similarity arguments for animal consciousness thus have roots in common sense observations. But they may also be bolstered by scientific investigations of behavior and neurology as well as considerations of evolutionary continuity (homology) between species. Nagel's own confidence in the existence of phenomenally conscious bat experiences is based on nothing more than this kind of reliance on shared mammalian traits (Nagel 1974).

Many judgments of the similarity between human and animal behavior are readily made by ordinary observers. The reactions of many animals, particularly other mammals, to bodily events that humans would report as painful are easily and automatically recognized by most people as pain responses. High-pitched vocalizations, fear responses, nursing of injuries, and learned avoidance are among the responses to noxious stimuli that are all part of the common mammalian heritage. Similar responses are also visible to some degree or other in organisms from other taxonomic groups.

Less accessible to casual observation, but still in the realm of behavioral evidence, are scientific demonstrations that members of other species, even of other phyla, are susceptible to the same visual illusions as we are (e.g., Fujita et al. 1991), suggesting that their visual experiences are similar.

Neurological similarities between humans and other animals have also been taken to suggest commonality of conscious experience. All mammals share the same basic brain anatomy, and much is shared with vertebrates more generally. Even structurally different brains may be neurodynamically similar in ways that enable inferences about animal consciousness to be drawn (Seth et al. 2005).

As well as generic arguments about the connections among consciousness, neural activity, and behavior, a considerable amount of scientific research is directed towards understanding particular conscious states, especially using animals as proxies for humans. Much of the research that is of direct relevance to the treatment of human pain, including on the efficacy of analgesics and anesthetics, is conducted on rats and other animals. The validity of this research depends on the similar mechanisms involved[6] and to many it seems arbitrary to deny that injured rats, who respond well to opiates for example, feel pain.[7] Likewise, much of the basic research that is of direct relevance to understanding human visual consciousness has been conducted on the very similar visual systems of monkeys. Monkeys whose primary visual cortex is damaged even show impairments analogous to those of human blindsight patients (Stoerig & Cowey 1997) suggesting that the visual consciousness of intact monkeys is similar to that of intact humans. It is often argued that the use of animals to model neuropsychiatric disorders presupposes convergence of emotional and other conscious states and further refinements of those models may strengthen the argument for attributing such states to animals (Sufka et al. 2009). An interesting reversal of the modeling relationship can be found in the work of Temple Grandin, Professor of Animal Science at Colorado State University, who uses her experience as a so-called “high-functioning autistic” as the basis for her understanding of the nature of animal experience (Grandin 1995, 2004).

Such similarity arguments are, of course, inherently weak for it is always open to critics to exploit some disanalogy between animals and humans to argue that the similarities don't entail the conclusion that both are sentient (Allen 1998, 2004b). Even when bolstered by evolutionary considerations of continuity between the species, the arguments are vulnerable, for the mere fact that humans have a trait does not entail that our closest relatives must have that trait too. There is no inconsistency with evolutionary continuity to maintain that only humans have the capacity to learn to play chess. Likewise for consciousness. Povinelli & Giambrone (2000) also argue that the argument from analogy fails because superficial observation of quite similar behaviors even in closely related species does not guarantee that the underlying cognitive principles are the same, a point that Povinelli believes is demonstrated by his research (described in the previous section) into how chimpanzees use cues to track visual attention (Povinelli 1996). (See Allen 2002 for criticism of their analysis of the argument by analogy.)

Perhaps a combination of behavioral, physiological and morphological similarities with evolutionary theory amounts to a stronger overall case[8]. But in the absence of more specific theoretical grounds for attributing consciousness to animals, this composite argument — which might be called “the argument from homology” — despite its comportment with common sense, is unlikely to change the minds of those who are skeptical.

6.2 Inference to the best explanation

One way to get beyond the weaknesses in the similarity arguments is to try to articulate a theoretical basis for connecting the observable characteristics of animals (behavioral or neurological) to consciousness. As mentioned above, one approach to bringing consciousness into the scientific fold is to try to identify behaviors for which it seems that an explanation in terms of mechanisms involving consciousness might be justified over unconscious mechanisms by a strategy of inference to the best explanation. This form of inference would be strengthened by a good understanding of the biological function or functions of consciousness. If one knew what phenomenal consciousness is for then one could exploit that knowledge to infer its presence in cases where that function is fulfilled, so long as other kinds of explanations can be shown to be less satisfactory.

If phenomenal consciousness is completely epiphenomenal, as some philosophers believe, then a search for the functions of consciousness is doomed to futility. In fact, if consciousness is completely epiphenomenal then it cannot have evolved by natural selection. On the assumption that phenomenal consciousness is an evolved characteristic of human minds, at least, and therefore that epiphenomenalism is false, then an attempt to understand the biological functions of consciousness may provide the best chance of identifying its occurrence in different species.

Such an approach is nascent in Griffin's attempts to force ethologists to pay attention to questions about animal consciousness. (For the purposes of this discussion I assume that Griffin's proposals are intended to relate to phenomenal consciousness, as well, perhaps, as to consciousness in its other senses.) In a series of books, Griffin (who made his scientific reputation by carefully detailing the physical and physiological characteristics of echolocation by bats) provides examples of communicative and problem-solving behavior by animals, particularly under natural conditions, and argues that these are prime places for ethologists to begin their investigations of animal consciousness (Griffin 1976, 1984, 1992).

Although he thinks that the intelligence displayed by these examples suggests conscious thought, many critics have been disappointed by the lack of systematic connection between Griffin's examples and the attribution of consciousness (see Alcock 1992; Bekoff & Allen 1997; Allen & Bekoff 1997). Griffin's main positive proposal in this respect has been the rather implausible suggestion that consciousness might have the function of compensating for limited neural machinery. Thus Griffin is motivated to suggest that consciousness may be more important to honey bees than to humans.

If compensating for small sets of neurons is not a plausible function for consciousness, what might be? The commonsensical answer would be that consciousness “tells” the organism about events in the environment, or, in the case of pain and other proprioceptive sensations, about the state of the body. But this answer begs the question against opponents of attributing conscious states to animals, for it fails to respect the distinction between phenomenal consciousness and mere awareness (in the uncontroversial sense of detection) of environmental or bodily events. Opponents of attributing phenomenal consciousness to animals are not committed to denying the more general kind of consciousness of various external and bodily events, so there is no logical entailment from awareness of things in the environment or the body to animal sentience.

Perhaps more sophisticated attempts to spell out the functions of consciousness are similarly doomed. But Allen & Bekoff (1997, ch. 8) suggest that progress might be made by investigating the capacities of animals to adjust to their own perceptual errors. Not all adjustments to error provide grounds for suspecting that consciousness is involved, but in cases where an organism can adjust to a perceptual error while retaining the capacity to exploit the content of the erroneous perception, then there may be a robust sense in which the animal internally distinguishes its own appearance states from other judgments about the world. (Humans, for instance, have conscious visual experiences that they know are misleading — i.e., visual illusions — yet they can exploit the erroneous content of these experiences for various purposes, such as deceiving others or answering questions about how things appear to them.) Given that there are theoretical grounds for identifying conscious experiences with “appearance states”, attempts to discover whether animals have such capacities might be a good place to start looking for animal consciousness. It is important, however, to emphasize that such capacities are not themselves intended to be definitive or in any way criterial for consciousness.

Carruthers (2000) makes a similar suggestion about the function of consciousness, relating it to the general capacity for making an appearance-reality distinction; of course he continues to maintain that this capacity depends upon having conceptual resources that are beyond the grasp of nonhuman animals.

6.3 Interpretivism

Some philosophers have argued that the attribution of consciousness to nonhuman animals is not a matter of drawing an inference at all, but a response more akin to interpretation. As Searle (1998) puts it,

I do not infer that my dog is conscious, any more than, when I came into this room, I inferred that the people present are conscious. I simply respond to them as is appropriate to conscious beings. I just treat them as conscious beings and that is that.

As an account of the psychology of his response to animals, Searle may be correct, although such an account seems inadequate to the actual demands for justification encountered in scientific contexts and in legal or ethical contexts. Searle's point is that such demands are unwarranted — signs of a Cartesian mindset, he would claim, which regards mental states as hidden (albeit material) causes.

Dennett (1987) and Jamieson (1998) have also argued that our understanding of the mental states of animals has more in common with perception and interpretation, a form of animal hermeneutics. Jamieson points out how deeply ingrained and conceptually unifying our everyday practices of interpreting animals mentalistically are, and he quite reasonably makes the point that familiarity with his dog makes him a more sensitive interpreter of her emotional and cognitive states than other observers. Strands of the same point of view can also be found in scientists writing about cognitive ethology (Allen 2004a) and in Wittgensteinian attitudes towards questions of animal mind (e.g., Gaita 2003).

Nevertheless, even among scientists who are sympathetic to the idea of themselves as sensitive observers of animals with rich mental lives, there is the recognition that the larger scientific context requires them to provide a particular kind of empirical justification of mental state attributions. This demand requires those who would say that a tiger pacing in the zoo is “bored”, or that the hooked fish is in pain, to define their terms, state empirical criteria for their application, and provide experimental or observational evidence for their claims. Even if interpretivism is a viable theory of folk practice with respect to attributing animal consciousness, it seems unlikely to make inroads against scientific epistemology.

7. Current Scientific Investigations Relevant to Animal Consciousness

With the gradual loosening of behaviorist strictures in psychology and ethology, and independent advances in neuroscience, there has been a considerable increase in the number of animal studies that have some bearing on animal consciousness. Some of these studies focus on specific kinds of experience, such as pain, while others focus on cognitive abilities such as self-awareness that seem to be strongly correlated with human consciousness. This section contains brief reviews of some of the main areas of investigation. The aim is to provide some quick entry points into the scientific literature.

7.1 Animal pain and suffering

Given the centrality of pain to most accounts of our ethical obligations towards animals, as well as the importance of animal models of pain in clinical medical research (see Mogil 2009 for a review), it is hardly surprising that there is a substantial (albeit controversial) scientific literature bearing on animal pain. Reports by the Nuffield Council on Bioethics in the U.K. (Nuffield Council 2005; see esp. chapter 4) and the U.S. National Academy of Sciences Institute for Laboratory Animal Research (ILAR 2009) have recently covered the definition of pain and the physiological, neurological, and behavioral evidence for pain in nonhuman animals. These reviews also distinguish pain from suffering, and the ILAR has divided what used to be a single report on recognition and alleviation of pain and suffering into two separate reports, although the scientific investigation of suffering is relatively rudimentary (but see Dawkins 1985; Farah 2008).

A proper understanding of neurological studies of animal pain begins with the distinction between nociception and pain. Nociception, the capacity to sense noxious stimuli, is one of the most primitive sensory capacities. Neurons functionally specialized for nociception have been described in invertebrates such as the medicinal leech and the marine snail Aplysia californica (Walters 1996). Because nociceptors are found in a very wide range of species, and are functionally effective even in decerebrate or spinally transected animals (Allen 2004b), their presence and activity in a species provides little or no direct evidence for phenomenally conscious pain experiences. The gate control theory of Melzack and Wall (1965) describes a mechanism by which “top-down” signals from the brain modulate “bottom-up” nociception, providing space for the distinction between felt pain and nociception.

Smith & Boyd (1991) assess the evidence for the pain-sensing capabilities of animals in the categories of whether nociceptors are connected to the central nervous system, whether endogenous opioids are present, whether analgesics affect responses, and whether the ensuing behavioral responses are analogous to those of humans (see table 2.3 in Varner 1998, p. 53, which updates the one presented by Smith & Boyd). On the basis of these criteria, Varner follows Smith & Boyd in concluding tentatively that the most obvious place to draw a line between pain-conscious organisms and those not capable of feeling pain consciously is between vertebrates and invertebrates. However, Elwood & Appel (2009) conducted an experiment on hermit crabs which they interpret as providing evidence that pain is experienced and remembered by these crustaceans. Varner also expressed some hesitation about the evidence for conscious pain in “lower” vertebrates: fish, reptiles and amphibians. Allen (2004b) argues, however, that subsequent research indicates that the direction of discovery seems uniformly towards identifying more similarities among diverse species belonging to different taxonomic classes, especially in the domains of anatomy and physiology of the nociceptive and pain systems.

It is generally accepted that the mammalian pain system has both a sensory and an affective pathway, and that these can be dissociated to some degree both pharmacologically (e.g., with morphine) and surgically (with lesions). The anterior cingulate cortex (ACC) is a particularly important structure of the mammalian brain in this regard (Price 2000). Allen et al. (2005) and Shriver (2006) argue that this dissociability provides a route to empirical assessment of the affective component of animal consciousness, and Farah (2008) uses it to distinguish suffering from “mere pain”.

Detailed analysis of other taxonomic groups may, however, indicate important anatomical differences. Rose (2002) argues that because fish lack an ACC they may not be bothered by pain. This is in contrast to Sneddon et al. (2003), who argue that there is adequate behavioral and physiological evidence to support pain attributions to fish. (See also Chandroo et al. 2004 for a review.) While the ACC is important to mammals, there remains the possibility that other taxa may have functionally similar structures, such as the corticoidea dorsolateralis in birds (Atoji & Wild 2005; Dubbeldam 2009). Genetic knockout animals are also providing further clues about the affective aspects of pain (see Shriver 2009 for a review and application of these findings to animal welfare).

7.2 Animal emotions

The idea of animal emotions is, of course, prominent in Darwin's work with his 1872 book The Expression of the Emotions in Man and Animals. Willingness to see animals as emotional beings (and humans, by contrast, as endowed with rationality that can override the emotions) goes back at least to Ancient Greek philosophy. Konrad Lorenz seems to have held a similar view, echoing Oskar Heinroth's statement that “animals are highly emotional people of very limited intelligence” (Lorenz 1971b, 334). These days it is more fashionable to regard emotions as an important component of intelligence. Regardless of the merits of that view, the scientific study of animal emotions has gained its own momentum. Early in the 20th century, although they were not arguing for or about animal consciousness, physiologists recognized the significance of emotion in animal behavior. Dror (1999) explains how the emotional state of animals was considered to be a source of noise in physiological experiments at that time, and researchers took steps to ensure that animals were calm before their experiments. According to Dror, although physiologists were forced to deal with the problem of emotional noise, attempts to treat emotion as a subject of study in its own right never crystallized to the extent of generating a journal or other institutional features (Dror 1999, 219). More recently, Jaak Panksepp (2004, 2005) has been conducting a research program that he calls “affective neuroscience” and that encompasses direct study of animal emotions (2004), exemplified by the experimental investigation of rats “laughing” and seeking further contact in response to tickling by humans (Panksepp & Burgdorf 2003). Sufka et al. (2009) have proposed that animal models of neuropsychiatric disorders may also support the experimental investigation of animal emotions.

Although depending on a more anecdotal, non-experimental approach, Smuts (2001) and Bekoff (2007) each defend the attribution of conscious emotions to animals from a scientist's perspective. Bekoff has made much of play behavior as an indicator of animal emotions, an idea that is also taken up by Cabanac et al. (2009).

Empathy in animals is also a topic of current investigation (e.g., Preston & de Waal 2002). Langford et al. (2006) argue for empathy in mice based on experiments in which mice who observe a cagemate given a noxious stimulus, or in pain, are more sensitive to painful stimuli than control mice who observe an unfamiliar mouse similarly treated. Byrne et al. (2008) argue for empathy in elephants as an inference to the best explanation of various capacities, such as diagnosing animacy and goal directedness, and assessing the physical abilities and emotional states of other elephants when these differ from their own.

Further discussion and additional scientific references for the topic of emotions and empathy in animals can be found in the section on emotions and empathy in the Encyclopedia's entry on Animal Cognition.

7.3 Perceptual phenomenology

The idea that careful psychophysical work with animals could help us understand the nature of their subjective experiences of the world can be traced at least to Donald Griffin's experimental tests of the limits of bat echolocation. It is also behind the idea that knowing that horses have near 360° vision, or that raptors have two foveae on each retina, may tell us something about the nature of their experiences (how the world appears to them) if it is granted that they have such experiences. Neural investigation adds a further layer of analysis to scientific understanding of the nature of perception. For instance, Leopold & Logothetis (1996) used neural data to support inferences about the percepts of monkeys under conditions of binocular rivalry (see also: Myerson et al. 1981; Rees et al. 2002). And Leopold et al. (2003) argue that neural recordings can be used to corroborate the non-verbal “reports” of monkeys shown ambiguous visual stimuli. (Think here of whether it is possible for the monkey to report that it is subject to Gestalt switches like those arising from ambiguous figures such as the duck-rabbit or figure-vase illusions.)

The phenomenon of blindsight, a form of unconscious visual perception that arises with damage to specific areas of primary visual cortex, has also been investigated in surgically lesioned monkeys (Stoerig & Cowey 1997), with a close correspondence between the monkeys' deficits and those of the human patients vis-à-vis parts of the visual field that can be processed only unconsciously, and those for which the patients retain consciousness. The non-verbal approach to assessing visual awareness has been further validated by Stoerig et al. (2002). Blindsight subjects, both human and monkey, do not spontaneously respond to things presented to their scotomas (areas where they are visually unaware), but must be trained to make responses using a forced-response paradigm (Stoerig & Cowey 1997).

The emphasis on visual perception in most of these examples no doubt reflects a primatocentric bias. We human primates are highly visual creatures, and, as Nagel (1974) argued, we face considerable hurdles in imagining (again, a visual metaphor) the subjective experiences of creatures in modalities in which humans are weak or completely unendowed. The examples of research also reflect an anthropocentric bias in that much of the animal experimentation is explicitly targeted at relieving human disorders. Although there is good work by neuroethologists on the psychophysics of echolocation by bats and dolphins, or on the sensory capacities of weakly electric fish, questions of subjective awareness are generally not broached in that literature.

As with the investigation of animal pain, fine-grained analysis of the neural correlates of consciousness may reveal subtle differences between different species. For instance, Rees et al. (2002) report that while the behavior of rhesus monkeys is rather similar to that of humans under conditions of binocular rivalry, “the firing of most cells in and around V1 primarily reflects stimulus properties rather than the conscious percept reported by the animal ... However, several neuroimaging studies in humans have presented evidence that argues for a stronger role of V1 in binocular rivalry and hence, by implication, visual awareness.” Nevertheless, it is noteworthy that they take the behavioral evidence to be unproblematically describable as a report of a conscious percept by the monkey.

7.4 Self-consciousness and metacognition

Systematic study of self-consciousness and theory of mind in nonhuman animals has roots in an approach to the study of self-consciousness pioneered by Gallup (1970). Gallup's rationale for linking mirror self-recognition to self-awareness has already been discussed above. The idea for the experiment came from observations, well known to comparative psychologists, that chimpanzees would, after a period of adjustment, use mirrors to inspect their own images. Gallup used these observations to develop a widely replicated protocol that appears to allow a scientific determination of whether it is merely the mirror image per se that is the object of interest to the animal inspecting it, or whether it is the image qua proxy for the animal itself that is the object of interest. Taking chimpanzees who had extensive prior familiarity with mirrors, Gallup anesthetized his subjects and marked their foreheads with a distinctive dye, or, in a control group, anesthetized them only. Upon waking, marked animals who were allowed to see themselves in a mirror touched their own foreheads in the region of the mark significantly more frequently than controls who were either unmarked or not allowed to look into a mirror.

Although it is typically reported that chimpanzees consistently “pass” the mirror-mark test, a survey of the scientific literature by Shumaker & Swartz (2002) indicates that of 163 chimpanzees tested, only 73 showed mark-touching behavior (although there was considerable variation in age and mirror experience among these animals). Shumaker & Swartz also report mark-touching behavior in 5 of 6 tested orangutans and 6 of 23 gorillas. They suggest that the lower incidence of mark touching by gorillas may be due to avoidance of socially significant direct eye contact.

Modified versions of Gallup's experiment have also been conducted with non-primate species. Notoriously, Epstein et al. (1981) trained pigeons to peck at a mark on their own bodies that was visible only in a mirror, and they used this to call into question the attribution of “self-awareness” on the basis of the mirror-mark test, preferring an associative learning explanation. Gallup et al. (2002) reject the claimed equivalence, pointing out that chimpanzees were not trained to touch marks before the test was administered. Reiss & Marino (2001) have offered evidence of mirror self-recognition in bottlenose dolphins. Using a modified version of Gallup's procedure that involved no anesthesia, they inferred self-recognition from bodily contortions in front of the mirror (self-touching being anatomically impossible for dolphins). This evidence has been disputed (e.g. Wynne 2004). The mirror-mark test continues to be an area of active investigation in various species including elephants (Plotnik et al. 2006) and magpies (Prior et al. 2008). Various commentators have pointed out that the mirror test may not be entirely fair for species which depend more heavily on senses other than vision (Mitchell 2002; Bekoff 2002).

An intriguing line of research into animals' knowledge of their own mental states considers the performance of animals in situations of cognitive uncertainty. When primates and dolphins are given a “bailout” response allowing them to avoid making difficult discriminations, they have been shown to choose the bailout option in ways that are very similar to humans (Smith et al. 2003). The fact that animals who have no bailout option and are thus forced to respond to the difficult comparisons do worse than those who have the bailout option but choose to respond to the test has been used to argue for some kind of higher-order self understanding. The original experiments have attracted both philosophical criticism of the second-order interpretation (e.g. Carruthers 2008) and methodological criticism by psychologists (reviewed by Crystal & Foote 2009), although alternative approaches to establishing metacognition in non-linguistic animals may be capable of avoiding these criticisms (Terrace & Son 2009).