Recursive Functions

The recursive functions, which form a class of computable functions, take their name from the process of "recurrence" or "recursion". In its most general numerical form the process of recursion consists in defining the value of a function by using other values of the same function. In this entry, we provide an account of the class of recursive functions, with particular emphasis on six basic kinds of recursion: iteration, primitive recursion, primitive recursion with parameters, course-of-value recursion, and double recursion. We then examine some theorems relating to these types of recursion.

One recurring theme that motivates the present discussion is the question of how the basic ideas and methods used in recursion theory, which is a defining area of of logic, derive from, or at least interact with, a wider mathematical and intellectual experience. Though there are some historical references, the entry does not attempt a systematic history of the subject.

- 1. Types of Recursion

- 2. The First Recursion Theorem

- 3. The Second Recursion Theorem

- Bibliography

- Other Internet Resources

- Related Entries

1. Types of Recursion

1.1 The Initial Functions

The recursive functions are characterized by the process in virtue of which the value of a function for some argument is defined in terms of the value of that function for some other (in some appropriate sense "smaller") arguments, as well as the values of certain other functions. In order to get the whole process started a certain class of functions need to be singled out, whose values do not in turn depend of their values for smaller arguments. These are called the initial functions. The following functions comprise the class of the initial functions:

- The successor function s, which when given an argument n as an argument returns its immediate successor s(n);

- The constant function z, which returns 0 for any argument n: z(n)=0;

- The projection functions, one for each pair of integers n and i (with i≤n), where pn,i(k1,…, kn) = ki.

For simplicity, we omit the first index on the projection functions, since these can be inferred from the number of argument places. Thus, we abbreviate pn,i(k1,…, kn) as pi(k1,…, kn)

It is immediate to obtain further functions by combining initial functions, by a process referred to as "composition". For instance, for each number k, one can obtain the constant function equal to k, denoted by ck, by combining z with the appropriate number of s: the function s(s(s(z(n)))) = c3(n) always returns value 3 for any input n.

The operation of composition (or substitution), is formally defined as follows:

If g is a function of m arguments, and each of h1,…,hm is a function of n arguments, then the function ƒƒ(x1,…,xn) = g(h1(x1,…,x n), … , hm(x1,…,x n))is definable by composition from g and h1,…,hm. We write ƒ = [g

h1,…,hm], and in the simple case where m=1 and h1 is designated h, we write ƒ(x) = [g

h](x)

As an example, consider the function ƒ which maps any 3 numbers as argument to the successor of the second argument. We can define this function by composition from initial functions. In this case, let g in the above definition be the successor function s and let h in the above definition be the projection function p2. Then,

ƒ(x,y,z) = [s p2](x,y, z)

= s(p2(x,y, z)) = g(h(x,y,z))

Thus, given this definition of ƒ, compute its values:

ƒ(0,0,0) = 1

ƒ(0,1,0) = 2

ƒ(0,0,1) = 1

ƒ(1,0,0) = 1

ƒ(0,2,0) = 3

ƒ(1,2,1) = 3

…

1.2 Iteration

The simplest type of recursion occurs when a given function is iterated. Technically, the n-th iteration of a function f is defined as follows:

ƒ(0)(x) = x ƒ(n+1)(x) = ƒ(ƒ(n)(x)).

The first clause is needed to obtain ƒ(1)(x) = ƒ(x) from the second clause. We also have ƒ(2)(x) = ƒ(ƒ(x)), etc.

Notice that ƒ(n)(x) is a function of two arguments, n and x. It is possible to "fix" the first argument, thereby obtaining a fixed iteration of a function. So for instance s(2)(x) is the function that returns the successor of the successor of x; such a function enumerates all the even numbers since its values are 0,2,4,…

One of the earliest examples of iteration comes from the Rhind Papyrus, written about 1700 B.C., which gives as Problem 79 the following:

In each of 7 houses are 7 cats; each cat kills 7 mice; each mouse would have eaten 7 ears of spelt (wheat); each ear of spelt would have produced 7 hekat (half a peck) of grain. How much grain is saved by the 7 house cats?

The solution amounts to computing the sixth term of a geometrical progression with first term 1 and multiplier 7, i.e. ƒ(6)(7), with ƒ(x) = 7x. The papyrus gives not only the correct answer (16,807), but also the sum of the first five terms of the progression (19,607).

A similar use of a geometrical progression comes from a medieval story about the origin of chess:

According to an old tale, the Grand Vizier Sissa Ben Dahir was granted a boon for having invented chess for the Indian King, Shirham.Sissa addressed the King: "Majesty, give me a grain of wheat to place on the first square of the board, and two grains of wheat to place on the second square, and four grains of wheat to place on the third, and eight grains of wheat to place on the fourth, and so on. Oh, King, let me cover each of the 64 squares of the board."

"And is that all you wish, Sissa, you fool?" exclaimed the astonished King.

"Oh, Sire," Sissa replied, "I have asked for more wheat than you have in your entire kingdom. Nay, for more wheat that there is in the whole world, truly, for enough to cover the whole surface of the earth to the depth of the twentieth part of a cubit." (Reported in Newman [1956])

Some version of the story was known to Dante, since he refers to it in the Paradiso (XXVIII, 92-93) to describe the abundance of Heaven's lights:

eran tante, che ‘l numero loro

più che ‘l doppiar degli scacchi s'immilla.They were so many, that their number

piles up faster than the chessboard doubling.

As in the previous Egyptian problem, the solution amounts to computing the sum of the first 64 terms of a geometrical progression with first term 1 and multiplier 2, i.e.,

1 + 2 + 22 + … + 263 = 264 − 1 = 18, 446, 744, 073, 709, 551, 615.

Coming closer to our times, an interesting use of iteration was made by Church [1933] in the λ-Calculus, which he had concocted as an alternative foundation for mathematics based on the notion of function and application, as opposed to set and membership. Church's idea was to represent the natural number n in the λ-Calculus as the binary operator ñ that, when applied to the arguments ƒ and x, produces the n-th iteration ƒ(n)(x).

Apparently unnoticed by Church, the same idea had been proposed earlier by Wittgenstein [1921], as follows:

6.02 And this is how we arrive at numbers. I give the following definitions

x = Ω0′ Def. Ω′Ων′ = Ων+1′ Def. So, in accordance with these rules, which deal with signs, we write the series

x, Ω′x, Ω′Ω′x, Ω′Ω′Ω′x, …in the following way

Ω0′, Ω0+1′x, Ω0+1+1′x, …[…] And I give the following definitions

0 + 1 = 1 Def., 0 + 1 + 1 = 2 Def., 0 + 1 + 1 + 1 = 3 Def., (and so on)

6.021 A number is the exponent of an operation.

Even earlier, Peano [1891] had suggested the same idea:

Then, if b is an N, by aαb we want to indicate what is obtained by executing the operation α on a, b times in a row. Hence, if a is a number, a + b represents what is obtained by executing b times on a the operation +, that is the successor of a of order b, i.e., the sum of a and b. […]

If a and b indicate two numbers, by their product a×b we will mean what is obtained by executing b times on 0 the operation + a. […]

If a and b indicate two numbers, by ab we will mean what is obtained by executing b times on 1 the operation ×a.

Thus Peano, like Church but unlike Wittgenstein, saw that the definition of the numbers as iterators gives for free the representability of a number of functions obtained by iteration.

1.3 Primitive recursion

Primitive recursion is a procedure that defines the value of a function at an argument n by using its value at the previous argument n − 1 (see Odifreddi, 1989, I.1.3). Iteration is obviously a special case of primitive recursion, on the number of iterations. And so is the predecessor function, defined by

pd(n) = {

0 if n=0 or n=1 pd(n−1)+1 otherwise

It is not immediate that the predecessor function can be reduced to an iteration, and hence is representable in the λ-Calculus. It was Kleene [1935] who saw how to do this, apparently during a visit to the dentist. Basically, pd(n) is the second component of the n-th iteration of the function on pairs defined as

ƒ((x, y)) = (x + 1, x),

started on (0, 0).

More generally, it is possible to prove that any primitive recursion can be reduced to an iteration, in the presence of a coding and decoding mechanism (see Odifreddi, 1989, I.5.10). This implies that all primitive recursive functions are actually representable in the λ-Calculus, as proved by Kleene [1936].

In many modern textbooks (Mendelson [1964], Boolos & Jeffrey [1974]), it has become standard to define the primitive recursive functions as precisely those obtained from the initial functions by means of composition and primitive recursion. We have already given the formal definition of composition. The second operation which forms new primitive recursive functions from initial primitive recursive functions is called ‘primitive recursion’ and is formally defined as follows:

A function ƒ is definable by primitive recursion from g and h if:

ƒ(x,0) = g(x) ƒ(x,s(y)) = h(x, y, ƒ(x,y)) We write ƒ = PR[g,h] when ƒ is definable by primitive recursion from g and h.

As a simple example of a function definable by primitive recursion, consider arithmetic addition. The function sum(x,y) can be defined as follows:

sum(x,0) = 0 sum(x,s(y)) = s(sum(x,y))

Let us convince ourselves that this shows sum to be definable by recursion. Note here that in the first clause, sum(x,0) can be understood as identified with the unary projection function of x, namely, p1(x). So the first clause in the definition of sum satisfies the first clause in the definition of definable by primitive recursion, by letting ƒ(x,0) be sum(x,0) and letting g(x) be p1(x). To see that the second clause of the definition of definable by primitive recursion is satisfied, note that the definiens can be understood as the function h(x, y, ƒ(x,y)), where h is identified as the composition of the successor function and the projection function p3:

s(sum(x,y)) = [sp3](x,y,sum( x,y)))

Thus, the second clause of definition of sum(x,y)

has the form required by the definition of definable by primitive

recursion. Consequently, sum(x,y) is definable

by recursion from the functions

p1(x) and [s p3].

p3].

In what follows, we shall replace our definition of sum(x,y) with a definition that uses infix notation and which uses the notation x′ instead of s(x) to denote the successor of x:

x + 0 = 0 x + y′ = (x + y)′

To see how sum works, suppose x = 2. Thus, by the first clause, 2 + 0 is defined as 2. Moreover,

2 + 1 = 2 + 0′ = (2 + 0)′ = 2′ = 3

And so on, for 2 + 2, 2 + 3, ….

Here is a series of examples of arithmetic functions which are primitive recursive. We use the simpler infix notation throughout, and leave it as an exercise for the reader to show that, in each case, the function is definable by recursion from other functions:

- Multiplication:

x · 0 = 0 x · y′ = x + (x · y) - Exponential:

x0 = 1 xy′ = x · (x · xy) - Factorial:

0! = 1 y′! = y′ · y! - Predecessor:

pred(0) = 0 pred(y′) = y - Truncated subtraction:

x ∸ 0 = x x ∸ y′ = pred(x ∸ y) - Minimum:

min(x,y) = x ∸ (x ∸ y)

For a variety of other examples, see Mendelson [1964] (123) and Boolos & Jeffrey [1974] (84-85).

1.4 Primitive recursion with parameters

When defining a function of many variables by primitive recursion, all variables except one are kept fixed. Primitive recursion with parameters relaxes this condition, and it allows substitutions for these variables. Although apparently more general, this notion actually turns out to be reducible to the usual primitive recursion (see Odifreddi, VIII.8.3.a).

One ancient example of a primitive recursion with parameters is the solution to the old problem known as the Towers of Hanoi or the Towers of Brahma:

In the great temple of Benares, beneath the dome which marks the centre of the world, rests a brass-plate in which are fixed three diamond needles, each a cubit high and as thick as the body of a bee. On one of these needles, at the creation, God placed sixty-four disks of pure gold, the largest disk resting on the brass plate, and the others getting smaller and smaller up to the top one. This is the Tower of Brahma. Day and night unceasingly the priests transfer the disks from one diamond needle to another according to the fixed and immutable laws of Brahma, which require that the priest must not move more than one disk at a time and that he must place this disk on a needle so that there is no smaller disk below it. When the sixty-four disks shall have been thus transferred from the needle on which at the creation God placed them to one of the other needles, tower, temple, and Brahmins alike will crumble into dust, and with a thunderclap the world will vanish. (Reported in Rouse Ball [1905])

The natural recursive solution is the following: to move n disks from needle A to needle C, first move n − 1 disks from needle A to needle B, then move one disk from needle A to needle C, and then move n − 1 disks from needle B to needle C. More concisely:

move(n,A,C) = move(n−1,A,B) & move(n−1,B,C).

Notice the use of move(n−1,A,B) and move(n−1,B,C), as opposed to move(n−1,A,C), in the computation of move(n,A,C), which makes this a primitive recursion with parameters (the value move(1,A,C) does not count, being constant).

If we let ƒ(n) be the number of moves needed for n disks provided by the previous solution, then

ƒ(1) = 0 ƒ(n + 1) = 1 + 2ƒ(n),

i.e.,

ƒ(n) = 1 + 2 + 22 + … + 2n−1 = 2n−1,

and it is known that this is the least possible number of moves needed to solve the problem. In particular, according to the previous story, the doomsday will be reached after 264 − 1 moves, i.e. the same number provided by the chessboard problem. If one correct move is made every second, for 24 hours a day and 365 days a year, the time required for the completion of the task would be of approximately 58 billion centuries.

1.5 Course-of-value recursion

When defining by primitive recursion a function at a given argument, only the value for the immediately preceeding argument can be used. Course-of-value recursion relaxes this condition, and it allows the use of any number of values for previous arguments. Although apparently more general, this notion actually turns out to be reducible to the usual primitive recursion as well (see Odifreddi, 1989, I.7.1).

An early example of a course-of-value recursion was given by Leonardo da Pisa, also called Fibonacci, in his Liber abaci, written in 1202 and revised in 1228, when discussing the famous rabbit problem (paria coniculorum):

How many pairs of rabbits can be bred in one year from one pair?

A man has one pair of rabbits at a certain place entirely surrounded by a wall. We wish to know how many pairs can be bred from it in one year, if the nature of these rabbits is such that they breed every month one other pair, and begin to breed in the second month after their birth. Let the first pair breed a pair in the first month, then duplicate it and there will be 2 pairs in a month. From these pairs one, namely the first, breeds a pair in the second month, and thus there are 3 pairs in the second month. From these in one month two will become pregnant, so that in the third month 2 pairs of rabbits will be born. Thus there are 5 pairs in this month. From these in the same month 3 will be pregnant, so that in the fourth month there will be 8 pairs. […] In the margin Fibonacci writes the sequence

1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233, 377

and continues:

You can see in the margin how we have done this, namely by combining the first number with the second, hence 1 and 2, and the second with the third, and the third with the fourth … At last we combine the 10th with the 11th, hence 144 and 233, and we have the sum of the above-mentioned rabbits, namely 377, and in this way you can do it for the case of infinite numbers of months.

This provides the definition of the Fibonacci sequence:

ƒ(0) = 0 ƒ(1) = 1 ƒ(n + 2) = ƒ(n) + ƒ(n + 1).

Notice the use of the two values ƒ(n) and ƒ(n + 1) in the definition of ƒ(n + 2), which makes this a course-of-value recursion.

The earliest record of a Fibonacci sequence is probably a set of weights discovered a few decades ago in Turkey, going back to around 1200 B.C. and arranged into a progression approximately equal to it (Petruso [1985]). The sequence was also known in Egypt and Crete (Preziosi [1983]), and it was used by the ancient and medieval Indians to define the metric laws of sanscrit poetry (Singh [1985]).

1.6 Double recursion

Primitive recursion can be used to define functions of many variables, but only by keeping all but one of them fixed. Double recursion relaxes this condition, and it allows the recursion to happen on two variables instead of only one. Although apparently more general, this notion actually turns out to be reducible in many cases (but not all) to the usual primitive recursion (see Odifreddi, 1989, VIII.8.3.b and VIII.8.11).

The first use of a double recursion was made around 220 B.C. by Archimedes in his Sand Reckoner to solve the following problem:

There are some, King Gelon, who think that the number of the sand is infinite in multitude; and I mean the sand not only which exists about Syracuse and the rest of Sicily, but also that which is found in every region whether inhabited or uninhabited. Again there are some who, without regarding it as infinite, yet think that no number has been named which is great enough to exceed this multitude. And it is clear that they who hold this view, if they imagined a mass made up of sand in other respects as large as the mass of the earth, including in it all the seas and the hollows of the earth filled up to a height equal to that of the highest of the mountains, would be many times further still from recognizing that any number could be expressed which exceeded the multitude of the sand so taken. But I will try to show you by means of geometrical proofs, which you will be able to follow, that, of the numbers named by me and given in the work which I sent to Zeuxippus, some exceed not only the number of the mass of sand equal in magnitude to the earth filled up in the way described, but also that of a mass equal in magnitude to the universe.

(Archimedes is referring here to a work now lost.) To denote his large number, Archimedes fixes a number a of units and defines the number hn(x) by a double recursion, on the cycle x and the period n, as follows:

h0(x) = 1 hn+1(0) = hn(a) hn+1(x + 1) = a · hn+1(x),

so that

hn(x) = (ax)n = axn.

Then he considers

ha(a) = (aa)a = a(a2)

for the particular value a = 108, i.e., a myriad myriads (the myriad, i.e., 10,000, was the largest number for which the Greeks had a proper name). This takes him up to

(108)(1016) = 108 · 1016 ≈ 101017,

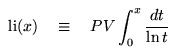

which he calls “a myriad myriads units of the myriad-myriadesimal order of the myriad-myriadesimal period”. This number, consisting of 80 million billions ciphers, remained the largest number used in mathematics until Skewes [1933], who needed 10101034 as a bound to the first place where the function π(x) − li(x) first changes sign. [Note: π(x) is the number of primes ≤ x, and

where PV is the Cauchy principal value of the function.]

By an evaluation of the sizes of a grain of sand and of the then known universe, Archimedes gets an estimate of 1063 for the number of grains of sand needed to fill the universe, well below the bound above. It may be interesting to note that by using the values for the sizes of an electron (10-18 meters) and of the currently known universe (1035 light years), we get an estimate of 10207 for the number of electrons needed to fill the universe, still well below the bound above.

Archimedes' concludes his work as follows:

I conceive that these things, King Gelon, will appear incredible to the great majority of people who have not studied mathematics, but that to those who are conversant therewith and have given thought to the question of the distances and sizes of the earth, the sun and moon and the whole universe, the proof will carry conviction. And it was for this reason that I thought the subject would not be inappropriate for your consideration.

It is clear from the identity

hn(x) = axn.

that Archimedes' double recursion is reducible to primitive recursion. Other forms of double recursion, however, are not so reducible. The primary example of a doubly-recursive function that is not primitive recursive is due to Wilhelm Ackermann. Ackermann's function is defined by the following three equations:

a(0, n) = n + 1 a(m + 1, 0) = a(m, 1) a(m +1, n +1) = a(m, a(m +1, n))

Ackermann's function grows extremely fast, in fact eventually it grows faster than any primitive recursive function. To see this consider a series of primitive recursive functions ƒ1,ƒ2,…, where ƒ1 is the successor function, and each function is obtained from the previous one by primitive recursion. So for instance ƒ2(x, y) is addition, ƒ3(x, y) multiplication, ƒ4(x, y) exponentiation, etc. It is clear that each function in the sequence eventually grows faster than all the previous ones. We can now think of defining a function of three arguments, ƒ(x, y, z) defined as follows:

ƒ(x, y, z) = ƒx(y, z)

A moment's reflection shows that in this function the argument x determines the which function in the sequence ƒ1,ƒ2,… needs to be used, while z is the recursion parameter and y is essentially idle. By dropping the middle parameter, then, one essentially obtains Ackermann's function.

1.7 Minimization (least search)

Whereas Ackermann's function cannot be obtained by means of primitive recursion, it can be obtained by further expanding the class of recursive functions by introducing a "minimization" or "least search" operator μ. This operator allows to define, for each 2-place function ƒ(x,y) yet another function, g(x) = μy[ƒ(x,y)=0], where g(x) returns the smallest number y such that ƒ(x,y) = 0, provided that the following two conditions hold:

- there actually exists at least one z such that ƒ(x,z) = 0; and

- for every y′ ≤ y, the value ƒ(x,y′) exists and is positive.

If at least one of the two conditions above fails, then g(x) fails to return a value and is undefined. Notice that with the introduction of the μ operator for the first time we encounter a recursive function that might fail to be defined for some arguments. Such functions are called partial. Since the μ operator itself can be iterated, and therefore applied to partial functions, we need to require (in condition (2) above) that ƒ(x,y′) be defined for every y′ ≤ y. One way to think about μ is in terms of an operator that tries to compute in succession all the values ƒ(x,0), ƒ(x,1), ƒ(x,2), ... until for some m ƒ(x,m) returns 0, in which case such an m is returned. This procedure might fail to return a value in two cases, namely if no such m exists, or if some of the computations ƒ(x,0), ƒ(x,1), ƒ(x,2), ... itself fails to return a value. We have thus generated a class of partial recursive functions, namely those functions that can obtained from the initial functions by means of composition, primitive recursion, and least search. This class turns out to coincide with the class of the Turing-computable functions introduced by Alan Turing as well as with the class of the λ-definable functions introduced by Alonzo Church.

2. The First Recursion Theorem

The so-called First Recursion Theorem (Odifreddi, 1989, II.3.15) provides a basic tool to compute values of functions which are solutions to recursive equations, implicitly defining functions by circular definitions involving the function itself.

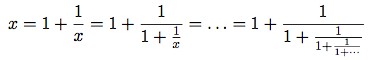

The procedure is similar to a classical method to compute approximations to real numbers which are solutions to algebraic equations, implicitly defining real numbers by circular definitions involving the number itself. For example, consider the equation

x = 1 +

1 x

Then x can be thought of as a fixed point of the function

ƒ(x) = 1 +

1 x

in the sense that

x = ƒ(x).

To make x explicit, we have at least two ways.

For example, we can transform the equation into the equivalent form

x2 − x − 1 = 0,

and use the well-known formula for the solution to the second degree equation that was already known to the Babylonians around 2000 B.C., thus getting

x =

1±√5 2 .

However, this works only for simple functions. Moreover, the solutions are not circular anymore, but are still implicit (the radical √5 still needs to be evaluated by other methods).

Alternatively, we can perform repeated substitutions of the right-hand-side for x, thus obtaining a continuous function of the kind introduced in 1572 by Raffaele Bombelli in his Algebra:

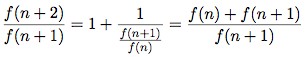

The infinite expression is built up as a limit of finite expressions, that provide approximations to the solution. More precisely, if we write

ƒ(n+1) ƒ(n)

for the n-th approximation, then

i.e.,

ƒ(n + 2) = ƒ(n) + ƒ(n + 1).

In other words, ƒ is simply the Fibonacci sequence, and the approximations are given by the ratios of its successive terms:

2 1

3 2

5 3

8 5

13 8

21 13 …

This iterative method is the same underlying the proof of the First Recursion Theorem, and it has a long history.

2.1 Differentiable functions

An early appearance of the method is found in the Indian Sulvasutra, composed between 600 and 200 B.C. To compute numerical approximations to √2, the following recursive algorithm is proposed.

A first approximation is obtained by dissecting a rectangle of edges 1 and 2 (i.e., of area 2) into two squares of edge 1. One square is cut into two rectangles of short edge ½, which are placed along the other square. The square of edge 1 + ½ (= 3/2) has an area that exceeds 2 by a small square of edge ½, thus producing an error equal to ¼.

A second approximation is obtained by subtracting from the square of edge 3/2 giving the first approximation the error, i.e., two rectangular stripes of area 1/8 and short edge 1/8 · 2/3 = 1/12. This produces a square of edge 3/2 − 1/12 = 17/12, whose area differs from 2 by a small square of edge 1/12, thus producing an error equal to 1/144.

A third approximation is obtained by subtracting from the square of edge 17/12 giving the second approximation the error, i.e., two rectangular stripes of area 1/288 and short edge 1/288 · 12/17 = 1/408. This produces a square of edge 17/12 − 1/408 = 577/408, which is the approximation to √2 given by the Sulvasutra, and is correct to 5 decimal places.

The procedure can be iterated as follows. Given an approximation xn, we produce a new approximation

xn+1 = xn −

xn2 − 2 2xn ,

where xn2 − 2 is the error of the n-th approximation,

xn2 − 2 2 ,

the area of each of the two rectangular stripes, and

xn2 − 2 2xn ,

their short edge.

If we let ƒ(x) = x2 − 2, then ƒ′(x) = 2x and ƒ(√2) = 0. The previous recursive formula can thus be rewritten as

xn+1 = xn −

ƒ(xn) ƒ′(xn) .

When generalized to any derivable functions, this becomes Newton's formula (1669) to approximate a zero of the given function by starting from a point x0 sufficiently close to a zero and having a nonzero derivative.

In the case of the ƒ considered above, Newton's formula can be obtained directly by looking for an increment h such that

ƒ(xn + h) = 0,

i.e.,

(xn + h)2 − 2 = xn2 + 2xnh + h2 − 2 = 0.

By disregarding the quadratic term h2 (which is the reason for the persistence of an error), we get

xn2 + 2xnh = 2,

i.e.,

h =

xn2 − 2 2xn .

Similar proofs hold for any polynomial. In general, for an analytical function ƒ the increment is obtained from Taylor's formula (1715):

ƒ(x+h) = ƒ(x) +

h 1! ƒ′(x) +

h2 2! ƒ′′(x) + … +

hn n! ƒ(n)(x) + …

2.2 Contractions

When discussing the problem of consciousness, Royce [1899] observed that an individual must have an infinite mental image of its own mind, since the image must contain an image of the image, which must contain an image of the image of the image, and so on.

Abstracting from the problem of consciousness, Royce presented a paradoxical metaphor that caught the fancy of the writer Jorge Luis Borges, who quoted it at least three times in his work with the following words:

Imagine a portion of the territory of England has been perfectly levelled, and a cartographer traces a map of England. The work is perfect. There is no particular of the territory of England, small as it can be, that has not been recorded in the map. Everything has its own correspondence. The map, then, must contain a map of the map, that must contain a map of the map of the map, and so on to infinity.

The metaphor has been interpreted as a proof by contradiction that a perfect map is impossible, supporting the well-known aphorism of Korzybski [1941]: “the map is not the territory”.

Actually, from a mathematical point of view a perfect map that contains a copy of itself is not a contradiction, but rather a contraction, in the sense that it defines a function ƒ such that

|ƒ(x)−ƒ(y)| ≤ c · |x−y|,

for some c such that 0 < c < 1. Banach [1922] has proved that a contraction on a complete metric space has a unique fixed point, and the proof is a typical iteration. Indeed, by induction,

|ƒ(n+1)(x) − ƒ(n)(x)| ≤ cn · |ƒ(x) − x|.

By the triangular inequality,

|ƒ(n+m)(x) − ƒ(n)(x)| ≤ ∑i<m |ƒ(n+i+1)(x) − ƒ(n+i)(x)| ≤ ( ∑i<m cn+i ) · |ƒ(x) − x|

Thus the sequence {ƒ(n)(x)}n∈ω converges to a point x0, and hence so does the sequence {ƒ(n+1)(x)}n∈ω. Since ƒ is continuous, the second sequence also converges to ƒ(x0), which must then be equal to x0. In other words, x0 is a fixed point of ƒ. Moreover, if x1 is another fixed point, then

|x0 − x1| = |ƒ(x0) − ƒ(x1)| ≤ c · |x0 − x1|.

Since c < 1, it follows that x0 = x1, i.e., x0 is the unique fixed point of ƒ.

In the case of a perfect map, this means that there must be a point of the territory that coincides with its image on the map. Thus a perfect map is not the territory in general, but it is so in one (and only one) point.

2.3 Continuous functions

Banach's result was obtained as an abstraction of the technique of successive substitution developed in the 19th century by Liouville, Neumann and Volterra to find solutions to integral equations, in which an unknown function appears under an integral sign. A similar technique was used by Peano [1888] to find solutions to systems of linear differential equations. In both cases an appropriate contraction is determined by the usual continuity and Lipschitz conditions, which ensure existence and uniqueness of the solution.

An extension of Banach's Fixed Point Theorem, for more special spaces but more general maps, was obtained by Brouwer [1911], who proved that a continuous function of a convex compact subset of a Euclidean space on itself has a fixed point.

Brouwer's original proof determined the existence of a fixed point by contradiction, without actually exhibiting it (this was quite ironical, due to Brouwer's costructive philosophy). In the special case of a closed disk, Brouwer's proof amounted to the following. If a continuous function of a closed disk on itself had no fixed point, every point would be moved to a different point. By extending the vector determined by an argument and its image, we could associate to every point on the disk a point on the border. This would determine an impossible continuous deformation of the whole disk into the border.

However, a constructive version of Brouwer's Fixed Point Theorem for a continuous function on a closed square on itself can be obtained by the iteration technique, as follows. Suppose there is no fixed point on the border. Then the vector determined as above makes a complete turn while the point moves around the border. Divide the square into four equal squares. Either the vector vanishes on a point of the border on one of the squares, thus determining a fixed point of the given function, or there is at least one square on which the vector makes a complete turn while the point moves around the border, and the process can be started again. If no fixed point is found along the way, the process determines a sequence of telescopic squares which uniquely identifies a point. Since any neighborhood of the point contains vectors in every direction, by continuity the vector field must vanish at it, i.e., the process determines a fixed point.

In one dimension Brouwer's Fixed Point Theorem becomes a version of the Intermediate Value Theorem proved by Bolzano [1817], according to which a continuous function on a closed interval that takes values respectively greater and smaller than c on the extremes of the interval, must take value c at some point of the interval. In this case, an intermediate value can be found by a bisection method similar to the above.

Even more abstract versions of Banach's theorem than Brouwer's were obtained by Knaster [1928] and Tarski [1955], who proved the existence of fixed points for any monotone function on a complete lattice. Abian and Brown [1961] replaced complete lattices by chain-complete partial orderings, in which every chain of elements has a least upper bound. In particular, a chain-complete partial ordering has a least element ⊥, since the empty chain must have a l.u.b.

Given a monotone function ƒ on a chain complete partial ordering, consider the following transfinite sequence of elements:

x0 = ⊥ xα+1 = ƒ(xα) xβ = the l.u.b. of {ƒ(xα)}α<β, if β is a limit ordinal.

Since ƒ is monotone, this defines a chain, whose length cannot exceed the maximal length of chains on the given partial ordering. Then there is a largest element xα0, otherwise the l.u.b. of the chain would be a larger element. And ƒ(xα0) = xα0, otherwise xα0 would not be the largest element of the chain. Moreover, xα0 is the least fixed point, because every element of the chain is below any other fixed point, by induction.

It thus follows that any monotone function on a chain complete partial ordering has a least fixed point. If, moreover, ƒ is continuous (in the sense of preserving l.u.b.'s), then the fixed point is obtained in at most ω iterations, because

ƒ(xω) = ƒ(n∈ω xn) =

n∈ω ƒ(xn) =

n∈ω xn+1 = xω.

As an application, we can sketch a proof of the First Fixed Point Theorem of Kleene [1952]. Consider the chain complete partial ordering consisting of the partial functions on the integers, ordered by inclusion. Since a recursive functional is monotone and continuous, it has a least fixed point xω by the theorem. Moreover, the least fixed point is recursive by the proof.

3. The Second Recursion Theorem

The so-called Second Recursion Theorem (see Odifreddi, 1989, II.2.13) provides a basic tool to find explicit solutions to recursive equations, implicitly defining programs of recursive functions by circular definitions involving the program itself.

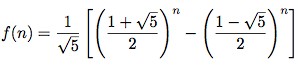

The procedure is the analogue of a classical method to find explicit definitions for functions implicitly defined by recursive equations. For example, consider the implicit definition of the Fibonacci sequence:

ƒ(0) = 0 ƒ(1) = 1 ƒ(n + 2) = ƒ(n) + ƒ(n + 1).

To make ƒ explicit, we can use De Moivre's method (1718) of generating functions, and let

F(x) = ƒ(0) + ƒ(1)·x + ƒ(2)·x2 + … + ƒ(n)·xn + …

By computing

F(x) − F(x)·x − F(x)·x2

we notice that most terms cancel out, since they have null coefficients of the form ƒ(n + 2) − ƒ(n + 1) − ƒ(n). We thus get

F(x) =

x 1−x−x2 .

By factoring the denominator, expanding the right-hand-side into a power series and comparing it term by term to F(x), we obtain the following explicit description for ƒ:

This result, which uses (1±√5)/2 to express the Fibonacci sequence, is the complement of the result proved above, which uses the Fibonacci sequence to approximate (1±√5)/2.

Kronecker [1881] generalized the previous example to show that every linear recursive relation determines the coefficients of a power series defining a rational function. Conversely, every rational function can be expressed as a power series with coefficients satisfying a linear recursive relation.

The Second Recursion Theorem serves a similar purpose, by turning recursive programs which define functions by recursive calls, into programs for the same functions without any recursive call.

3.1 The diagonal method

The proofs of the Second Recursion Theorem and its variants (see Odifreddi 1989, II.2.10 and II.2.13) are elaborate and abstract forms of the diagonal method, which can be considered the most pervasive tool of Recursion Theory. Its essence is the following.

Given an infinite matrix {aij}ij, we first transform the elements ann on the diagonal by means of a switching function d, thus obtaining d(ann). If the switching function d is never the identity on the elements of the matrix, then the transformed diagonal function is not a row of the matrix. More precisely, it differs on the n-th element from the n-th row.

Equivalently, if the transformed diagonal function is a row of the matrix, e.g. the n-th, then the switching function d must be the identity on some element of the matrix. More precisely, it leaves the n-th element of the n-th row unchanged. In this form, the diagonal method provides a fixed point of the function d.

3.2 The diagonal

The first ingredient of the diagonal method is the consideration of the elements on the diagonal of an appropriate matrix.

This was done in a nontrivial way already by Archimedes in the Sand Reckoner discussed above, when stepping from the matrix {hn(x)}n,x to the diagonal element ha(a).

In modern times, Du Bois Reymond has made a substantial use of diagonalization in his study of orders of infinity, reported in Hardy [1910]. Basically, he defines an ordering based on domination (i.e. a function is greater than another if it is above it for almost all arguments), and classifies classes of functions by means of skeletons of fast growing functions obtained by starting with functions ƒ greater than the identity, iterating at successor stages, and diagonalizing at limit stages. More precisely, a function ƒ such that ƒ(x) ≥ x for almost all arguments defines the following skeleton:

ƒ0(x) = ƒ(x) ƒα+1(x) = ƒα(x)(x) ƒα(x) = ƒαx(x),

where in the last clause α is the limit of the ascending sequence of the ordinals αx (the definition obviously depends on the choice of the ascending sequence).

Today these skeletons have become standard in Complexity Theory, to classify complexity classes such as the primitive recursive functions (see Odifreddi 1989, VIII.8.10).

3.3 The switching function

The second ingredient of the diagonal method is the use of the switching function on the elements of the diagonal.

This was first done by Cantor [1874], in his historical proof that the sets of natural numbers are more than the numbers themselves. By considering characteristic functions, the proof amounts to the observation that given a sequence {ƒn}n∈ω of 0,1-valued functions, the function

d(x) = 1 − ƒx(x)

is 0,1-valued but not in the sequence, since it differs from ƒn on the argument n. The switching function, true to its name, is here the function that interchanges 0 and 1.

The same type of argument was used by Russell [1903], to prove his celebrated paradox. This time we consider the set

R = {x : ¬(x ∈ x)}.

Then

x ∈ R ↔ ¬(x ∈ x),

and thus

R ∈ R ↔ ¬(R ∈ R),

contradiction. The switching function is now the negation operator that interchanges the truth values “true” and “false”.

Russell's paradox was turned into a theorem by Curry [1942], who proved the existence of fixed points for any λ-term in the untyped λ-Calculus, according to the following correspondence:

Set Theory λ-Calculus element argument set term membership application set formation { } λ-abstraction set equality term equality

If the term N is supposed to correspond to negation, then the set R corresponds to the term

C = λx . N(xx).

By the reduction rules of the Lambda Calculus,

Cx = N(xx),

and thus

CC = N(CC).

However, this is not a contradiction, but rather a proof that CC is a fixed point of N. In other words, in the λ-Calculus there is no switching function, in the sense of a term that always changes its arguments.

Curry's Fixed Point Theorem is a version of the Recursion Theorems, and together with the representability of the predecessor function quoted above implies the representability of all recursive functions in the λ-Calculus, as proved by Kleene [1936] (see Odifreddi 1989, I.6.6.c).

3.4 Self-reference

In the last two arguments above, diagonalization takes the form of a self-reference. Indeed, the conditions “x ∈ x” in Russell's paradox can be read as: “x belongs to itself”. Similarly, the condition “xx” in Curry's theorem can be read as: “x applied to itself”.

Self-Reference is obviously trivial in any language possessing the pronoun “I”. The best known ancient reference is God's own description in Exodus (3.14): “I am that I am”. However, this kind of self-reference is somewhat indirect, since the pronoun is a linguistic object that refers not to itself, but to the person who is pronouncing it. A better example is a phrase that talks of itself, for example: “This phrase consists of six words”.

The first paradoxical self-reference was probably the Liar paradox, attributed to Eubulides (4th century B.C.) in the form: “I am lying”. A purely linguistic analogue is: “This phrase is false”.

It is not paradoxical, instead, for a Cretan such as Epimenides (6th century B.C.) to say: “All Cretans always lie”. This phrase cannot be true, otherwise Epimenides would be a Cretan who is not always lying. Then it must be false, i.e., some Cretan does not always lie. It does not follow that such a Cretan is Epimenides. Nor would it follow, if he were, that the phrase is one of his truths. So being, the following comment by St. Paul in the Epistle to Titus (1.12) turns out to be even more cretin than it looks at first sight:

For there are many unruly and vain talkers and deceivers, specially they of the circumcision: whose mouths must be stopped, who subvert whole houses, teaching things which they ought not, for filthy lucre's sake. One of themselves, even a prophet of their own, said, “The Cretans are always liars, evil beasts, slow bellies”. This witness is true.

The Liar paradox had counteless versions in history. In particular, the original one-step self-reference was turned into a two-step one by Philip Jourdain in 1913 (following Buridan of the 14th century), as follows:

The following phrase is false.

The previous phrase is true.

Finite n-steps versions are obtained in a similar fashion. An infinite diabolical version, as the name suggests, has been proposed by Yablo [1985], [1993]:

All the following phrases are false.

All the following phrases are false.

…

Suppose the first phrase is true. Then all the following ones are false, in particular the second. Moreover, all the remaining phrases are false, and hence the second one is true, contradiction. Then the first phrase is false, i.e., one of the following phrases is true, and a contradiction is reached as for the first. Thus the first phrase is contradictory. Similarly, so are all the remaining ones.

The turning point in these developments came with Gödel [1931], who made an explicit reference to the Liar paradox in his paper. His main result can be stated as follows: given any property P weakly representable in a sufficiently strong formal system for Arithmetic, there is a sentence saying of itself that it has the property P (see Odifreddi 1989, I.165). For the proof, consider an enumeration {φn}n∈ω of the formulas with one free variable, the matrix

aij = the sentence “φj has the property expressed by φi”

and the switching function

d(φ) = the sentence “φ has the property P”

Since P is weakly representable, the transformed diagonal sequence is still a row of the matrix, up to provable equivalence. Thus there is a φ such that d(φ) is provably equivalent to φ, i.e., φ says of itself that it has the property P.

A first consequence is that truth cannot be weakly representable in any consistent and sufficiently strong formal system for Arithmetic. Otherwise so would be its negation, and the general result would give a contradictory sentence asserting its own negation, as in the Liar paradox. Though Goedel recognized the unrepresentability of truth in 1931 (as documented by a letter to Zermelo; see Murawski 1998), he did not explicitly prove or publish a theorem involving the concept of truth (possibly because he had concerns about that concept; see Feferman 1984). The result is thus usually attributed to Tarski [1933], [1936].

A second consequence is that, since provability is weakly representable in any consistent and sufficiently strong formal system for Arithmetic, the general result gives a sentence asserting its own unprovability. From this one can easily obtain all the epochal results of Gödel [1931], Rosser [1936] and Church [1936] (see CRT, pp. I.166-169).

By the same type of argument we can also prove the Second Recursion Theorem of Kleene [1938], following Owings [1973]. Given an effective transformation of programs ƒ, consider an enumeration {φn}n∈ω of the partial recursive unary functions, the matrix

aij = the function with program coded by φi(j)

and the switching function

d(φe) = the function with program coded by ƒ(e).

Since ƒ is effective, the transformed diagonal sequence is still a row of the matrix. Thus there is an e such that d(φe) and φe are the same function. Equivalently, the programs coded by e and ƒ(e) compute the same function.

Bibliography

- Abian, S., and Brown, A.B., 1961, ‘A theorem on partially ordered sets with applications to fixed-point theorems,’ Can. J. Math, 13: 78-83.

- Banach, S., 1922, ‘Sur les operations dans les ensembles abstraits et leurs applications aux equations integrales,’ Fund. Math., 3: 7-33.

- Bolzano, B., 1817, Rein analytischer Beweis des Lehrsatzes dass zwischen je zwey Werthen, die ein entgegengesetzes Resultat gewähren, wenigstens eine reelle Wurzel der Gleichung liege.

- Boolos, G., and Jeffrey, R., 1974, Computability and Logic, Cambridge: Cambridge University Press.

- Brouwer, L., 1911, ‘Über Abbildungen von Mannigfaltigkeiten,’ Math. Ann., 71: 97-115.

- Cantor, G., 1874, ‘Über eine Eigenschaft des Inbegriffes aller reellen algebraischen Zahlen,’ J. Math., 77: 258-262.

- Church, A., 1933, ‘A set of postulates for the foundation of logic,’ (second paper), Ann. Math., 34: 839-864.

- ------, 1936, ‘A note on the Entscheidungsproblem,’ J. Symb. Log., 1: 40-41.

- Curry, H.B., 1942, ‘The inconsistency of certain formal logics,’ J. Symb. Log., 7: 115-117.

- Feferman, S., 1984, ‘Kurt Goedel: Conviction and Caution’, in P. Weingartner and C. Pühringer (eds.), Philosophy of Science, History of Science: A Selection of Contributed Papers of the 7th International Congress of Logic, Methodology and Philosophy of Science, Salzburg: A. Hain, 1984.

- Gödel, K., 1931, ‘Über formal unentscheidbare Sätze der Principia Mathematica und verwandter Systeme I,’ Monash. Math. Phys., 38: 173-198.

- Hardy, G.H., 1910, Orders of infinity

- Kleene, S.K., 1935, ‘A theory of positive integers in formal logic,’ Am. J. Math., 57: 153-173, 219-244.

- ------, 1936, ‘λ-definability and recursiveness,’ Duke Math. J., 2: 340-353.

- ------, 1938, ‘On notations for ordinal numbers,’ J. Symb. Log., 3: 150-155.

- ------, 1952, Introduction to metamathematics.

- Knaster, B., 1928, ‘Un théorème sur les fonctions d'ensembles,’ Ann. Soc. Polon. Math., 6: 133-134.

- Korzybski, A., 1941, Science and sanity.

- Kronecker, L., 1881, ‘Zur Theorie der Elimination einer Variablen aus zwei algebraischen Gleichungen,’ Monat. Kön. Preuss. Akad. Wiss. Berlin, pp. 535-600.

- Mendelson, E., 1964, An Introduction to Mathematical Logic, New York, D. Van Nostrand.

- Murawski, R., 1998, ‘Undefinability of Truth. The Problem of Priority: Tarski vs. Gödel,’ History and Philosophy of Logic, 19: 153-160.

- Newman, J.R., 1956, The World of Mathematics.

- Odifreddi, P.G., 1989, Classical Recursion Theory, North Holland; second edition, 1999.

- ------, 1999, Classical Recursion Theory, Volume II, North Holland.

- Owings, J.C., 1973, ‘Diagonalization and the recursion theorem,’ Notre Dame J. Form. Log., 14: 95-99.

- Peano, G., 1888, ‘Intégration par séries des équations différentielles linéaires,’ Math. Ann., 32: 450-456.

- ------, ,1891, ‘Sul concetto di numero,’ Rivista di Matematica, 1: 87-102 and 256-267.

- Petruso, K.M., 1985, ‘Additive progressions in prehistoric mathematics: a conjecture,’ Hist. Math., 12: 101-106.

- Preziosi, D., 1983, Minoan Architectural Design.

- Rosser, B.J., 1936, ‘Extensions of some theorems of Gödel and Church,’ J. Symb. Log., 1: 87-91.

- Rouse Ball, W.W., 1905, Mathematical Recreations and Essays.

- Royce, J., 1899, The world and the individual.

- Russell, B., 1903, The principles of mathematics.

- Shashkin, Y., 1991, Fixed Points, American Mathematical Society.

- Singh, P., 1985, ‘The socalled Fibonacci numbers in Ancient and Medieval India,’ Hist. Math., 12: 229-244.

- Skewes, S., 1933, ‘On the difference π(x) − li(x),’ J. Math. Soc., 8: 277-283.

- Tarski, A., 1933, Pojęcie prawdy w językach nauk dedukcyjnych, Nakładem Towarzystwa Naukowego Warszawskiego: Warszawa.

- Tarski, A., 1936, ‘Der Wahrheitsbegriff in der formalisierten Sprachen,’ Studia Phil., 1: 261-405.

- ------, 1955, ‘A lattice-theoretical fixed-point theorem and its applications,’ Pac. J. Math., 5: 285-309.

- Wittgenstein, L., 1921, ‘Logisch-philosophische Abhandlung,’ Ann. Naturphil., 14: 185-262.

- Yablo, S., 1985, ‘Truth and reflection,’ J. Phil. Log., 14: 297-349.

- ------, 1993, ‘Paradox without self-reference,’ Analysis, 53: 251-252.

Other Internet Resources

[Please contact the author with suggestions.]

Related Entries

Church, Alonzo | computability and complexity | function | liar paradox | Russell's paradox | Turing, Alan | Turing machines