Scientific Objectivity

Scientific objectivity is a characteristic of scientific claims, methods and results. It expresses the idea that the claims, methods and results of science are not, or should not be, influenced by particular perspectives, value commitments, community bias or personal interests, to name a few relevant factors. Objectivity is often considered an ideal for scientific inquiry, a good reason for valuing scientific knowledge, and the basis of the authority of science in society.

Many central debates in the philosophy of science have, in one way or another, to do with objectivity: confirmation and the problem of induction; theory choice and scientific change; realism; scientific explanation; experimentation; measurement and quantification; evidence and the foundations of statistics; evidence-based science; feminism and values in science. Understanding the role of objectivity in science is therefore integral to a full appreciation of these debates. As this article testifies, the reverse is true too: it is impossible to fully appreciate the notion of scientific objectivity without touching upon many of these debates.

The ideal of objectivity has been criticized repeatedly in philosophy of science, with critics questioning both its value and its attainability. This article focuses on the question of how scientific objectivity should be defined, whether the ideal of objectivity is desirable, and to what extent scientists can achieve it. In line with the idea that the epistemic authority of science relies primarily on the objectivity of scientific reasoning, we focus on the role of objectivity in scientific experimentation, inference and theory choice.

- 1. Introduction: Product and Process Objectivity

- 2. Objectivity as Faithfulness to Facts

- 3. Objectivity as Absence of Normative Commitments and the Value-Free Ideal

- 4. Objectivity as Freedom from Personal Biases

- 5. Issues in the Special Sciences

- 6. Instrumentalism to the Rescue?

- 7. Conclusions

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. Introduction: Product and Process Objectivity

Objectivity is a value. To call a thing objective implies that it has a certain importance to us and that we approve of it. Objectivity comes in degrees. Claims, methods and results can be more or less objective, and, other things being equal, the more objective, the better. Using the term “objective” to describe something often carries a special rhetorical force with it. The admiration of science among the general public and the authority science enjoys in public life stem to a large extent from the view that science is objective or at least more objective than other modes of inquiry. Understanding scientific objectivity is therefore central to understanding the nature of science and the role it plays in society.

Given the centrality of the concept for science and everyday life, it is not surprising that attempts to find ready characterizations are bound to fail. For one thing, there are two fundamentally different ways to understand the term: product objectivity and process objectivity. According to the first understanding, science is objective in that, or to the extent that, its products (theories, laws, experimental results and observations) constitute accurate representations of the external world. The products of science are not tainted by human desires, goals, capabilities or experience. According to the second understanding, science is objective in that, or to the extent that, the processes and methods that characterize it depend neither on contingent social and ethical values nor on the individual bias of a scientist. This second understanding in particular is itself multi-faceted; it contains, inter alia, explications in terms of measurement procedures, individual reasoning processes, or the social and institutional dimension of science. The semantic richness of scientific objectivity is also reflected in the multitude of categorizations and subdivisions of the concept (e.g., Megill 1994; Douglas 2004).

If what is so great about science is its objectivity, then objectivity should be worth defending. The close examinations of scientific practice that philosophers of science have undertaken in the past fifty years have shown, however, that several conceptions of the ideal of objectivity are either questionable or unattainable. The prospects for a science providing a non-perspectival “view from nowhere” or for proceeding in a way uninformed by human goals and values are fairly slim, for example.

This article discusses several proposals to characterize the idea and ideal of objectivity in such a way that it is both strong enough to be valuable, and weak enough to be attainable and workable in practice. We begin with a natural conception of objectivity: faithfulness to facts, which is closely related to the idea of product objectivity. We motivate the intuitive appeal of this conception, discuss its relation to scientific method and discuss arguments challenging both its attainability and its desirability. We then move on to a second conception of objectivity as absence of normative commitments and value-freedom, and once more we contrast arguments in favor of such a conception with the challenges it faces. The third conception of objectivity that we discuss at length is the idea of absence of personal bias. After discussing three case studies about objectivity in scientific practice (from economics, social science and medicine) as well as a radical alternative to the traditional conceptions of objectivity, instrumentalism, we draw some conclusions about what aspects of objectivity remain defensible and desirable in the light of the difficulties we have discussed.

2. Objectivity as Faithfulness to Facts

The idea of this first conception of objectivity is that scientific claims are objective in so far as they faithfully describe facts about the world. The philosophical rationale underlying this conception of objectivity is the view that there are facts “out there” in the world and that it is the task of a scientist to discover, to analyze and to systematize them. “Objective” then becomes a success word: if a claim is objective, it successfully captures some feature of the world.

In this view, science is objective to the degree that it succeeds at discovering and generalizing facts, abstracting from the perspective of the individual scientist. Although few philosophers have fully endorsed such a conception of scientific objectivity, the idea figures recurrently in the work of prominent 20th century philosophers of science such as Carnap, Hempel, Popper, and Reichenbach. It is also, in an evident way, related to the claims of scientific realism, according to which it is the goal of science to find out the truths about the world, and according to which we have reason to believe in the truth of our best-confirmed scientific theories.

2.1 The View From Nowhere

Humans experience the world from a perspective. The contents of an individual's experiences vary greatly with the individual's perspective, which is affected by his or her personal situation, details of his or her perceptual apparatus, language and culture, and the physical conditions under which the experience occurs. While the experiences vary, there seems to be something that remains constant. The appearance of a tree will change as one approaches it but, at least possibly, the tree itself doesn't. A room may feel hot or cold depending on the climate one is used to but it will, at least possibly, have a degree of warmth that is independent of one's experiences. The object in front of a person does not, at least not necessarily, disappear just because the lights are turned off.

There is a conception of objectivity that presupposes that there are two kinds of qualities: ones that vary with the perspective one has or takes, and ones that remain constant through changes of perspective. The latter are the objective properties. Thomas Nagel explains that we arrive at the idea of objective properties in three steps (Nagel 1986: 14). The first step is to realize (or postulate) that our perceptions are caused by the actions of things on us, through their effects on our bodies. The second step is to realize (or postulate) that since the same properties that cause perceptions in us also have effects on other things and can exist without causing any perceptions at all, their true nature must be detachable from their perspectival appearance and need not resemble it. The final step is to form a conception of that “true nature” independently of any perspective. Nagel calls that conception the “view from nowhere”, Bernard Williams the “absolute conception” (Williams 1985 [2011]). It represents the world as it is, unmediated by human minds and other “distortions”.

Many scientific realists maintain that science, or at least natural science, does and indeed ought to aim to describe the world in terms of this absolute conception and that it is to some extent successful in doing so (for a detailed discussion of scientific realism, see the entry on scientific realism). There is an immediate sense in which the absolute conception is an attractive one to have. If two people looking at a colored patch in front of them disagree whether it is green or brown, the absolute conception provides an answer to the question (e.g., “The patch emits light at a wavelength of 510 nanometers”). By making these facts accessible through, say, a spectroscope, we can arbitrate between the conflicting viewpoints (viz., by stating that the patch should look green to a normal observer in daylight).

Another reason for this conception to be attractive is that it will provide for a simpler and more unified representation of the world. Theories of trees will be very hard to come by if they use predicates such as “height as seen by an observer” and a hodgepodge if their predicates track the habits of ordinary language users rather than the properties of the world. To the extent, then, that science aims to provide explanations for natural phenomena, casting them in terms of the absolute conception would help to realize this aim. Bernard Williams makes a related point about explanation:

The substance of the absolute conception (as opposed to those vacuous or vanishing ideas of “the world” that were offered before) lies in the idea that it could nonvacuously explain how it itself, and the various perspectival views of the world, are possible. (Williams 1985 [2011]: 139)

Thus, a scientific account cast in the language of the absolute conception may not only be able to explain why a tree is as tall as it is but also why we see it in one way when viewed from one standpoint and in a different way when viewed from another.

A third reason to find the view from nowhere attractive is that if the world came in structures as characterized by it and we did have access to it, we could use our knowledge of it to ground predictions (which, to the extent that our theories do track the absolute structures, will be borne out). A fourth and related reason is that attempts to manipulate and control phenomena can similarly be grounded in our knowledge of these structures. To attain any of the four purposes (settling disagreements, explaining the world, predicting phenomena, and manipulation and control), the absolute conception is at best sufficient but not necessary. We can, for instance, settle disagreements by imposing the rule that the person who speaks first is always right, or the person who is of higher social rank, or by an agreed-upon measurement procedure that does not track absolute properties. We can explain the world and our image of it by means of theories that do not represent absolute structures and properties, and there is no need to get things (absolutely) right in order to predict successfully. Nevertheless, there is something appealing in the idea that disagreements concerning certain matters of fact can be settled by the very facts themselves, and that explanations and predictions are grounded in what's really there rather than in a distorted image of it.

No matter how desirable the absolute conception may be, our ability to use scientific claims to represent all and only facts about the world depends on whether these claims can unambiguously be established on the basis of evidence. We test scientific claims by means of their implications, and it is an elementary principle of logic that claims whose implications are true need not themselves be true. It is the job of scientific method to make sure that observations, measurements, experiments and tests (the pieces of scientific evidence) speak in favor of the scientific claim at hand. Alas, the relation between evidence and scientific hypothesis is not straightforward. Subsection 2.2 and Subsection 2.3 will look at two challenges to the idea that even the best scientific method will yield claims that describe an aperspectival view from nowhere. Subsection 2.4 will challenge the idea that the view from nowhere is a good thing to have.
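The logical point made above, that a claim whose implications are true need not itself be true, can be checked mechanically. The following is only an illustrative sketch: it treats "the hypothesis implies the evidence" as a material conditional, which is a simplifying assumption, and the names `implies`, `affirming_consequent` and `denying_consequent` are hypothetical labels chosen for this example.

```python
from itertools import product


def implies(p, q):
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q


# Enumerate every truth assignment to H (hypothesis) and E (its implication).
assignments = list(product([True, False], repeat=2))

# Inference 1: "H implies E; E is observed; therefore H".
# A counterexample is an assignment where both premises hold but H is false.
affirming_consequent = [
    (h, e) for h, e in assignments if implies(h, e) and e and not h
]

# Inference 2: "H implies E; E fails; therefore not H".
# A counterexample is an assignment where both premises hold but H is true.
denying_consequent = [
    (h, e) for h, e in assignments if implies(h, e) and (not e) and h
]

print("Counterexamples to inferring H from a true implication:", affirming_consequent)
print("Counterexamples to rejecting H after a failed implication:", denying_consequent)
```

Under these assumptions the first list is non-empty and the second is empty: a successful test leaves open that the hypothesis is false, whereas a failed implication does rule it out. The sketch deliberately ignores the auxiliary hypotheses that real tests depend on.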

2.2 Theory-Ladenness and Incommensurability

According to a popular picture, science progresses toward truth by adding true beliefs to, and eliminating false beliefs from, our best scientific theories. By making these theories more and more verisimilar, that is, truthlike, scientific knowledge grows over time (e.g., Popper 1963). If this picture is correct, then over time scientific knowledge will become more objective, that is, more faithful to facts. However, scientific theories often change, and sometimes several theories compete for the place of the best scientific account of the world.

It is inherent in the above picture of scientific objectivity that observations can, at least in principle, decide between competing theories: if they could not, the conception of objectivity as faithfulness would be a pointless one to have, as we would not be in a position to verify it. This position has been adopted by Karl R. Popper, Rudolf Carnap and other leading figures in (broadly) empiricist philosophy of science. Many philosophers have argued that the relation between observation and theory is far more complex and that influences can actually run both ways (e.g., Duhem 1906 [1954]; Wittgenstein 1953 [2001]; Hanson 1958). The most lasting criticism, however, was delivered by Thomas S. Kuhn (1962 [1970]) in his book “The Structure of Scientific Revolutions”.

Kuhn's analysis is built on the assumption that scientists always view research problems through the lens of a paradigm, defined by a set of relevant problems, axioms, methodological presuppositions, techniques, and so forth. Kuhn provided several historical examples in favor of this claim. Scientific progress, like the practice of normal, everyday science, happens within a paradigm that guides the individual scientists' puzzle-solving work and that sets the community standards.

Can observations undermine such a paradigm, and speak for a different one? Here, Kuhn famously stresses that observations are “theory-laden” (cf. also Hanson 1958): they depend on a body of theoretical assumptions through which they are perceived and conceptualized. This hypothesis has two important aspects.

First, the meaning of observational concepts is influenced by theoretical assumptions and presuppositions. For example, the concepts “mass” and “length” have different meanings in Newtonian and relativistic mechanics; so does the concept “temperature” in thermodynamics and statistical mechanics (cf. Feyerabend 1962). In other words, Kuhn denies that there is a theory-independent observation language. The “faithfulness to reality” of an observation report is always mediated by a theoretical Überbau, disabling the role of observation reports as an impartial, merely fact-dependent arbiter between different theories.

Second, not only the observational concepts, but also the perception of a scientist depends on the paradigm she is working in.

Practicing in different worlds, the two groups of scientists [who work in different paradigms, J.R./J.S.] see different things when they look from the same point in the same direction. (Kuhn 1962 [1970]: 150)

That is, our own sense data are shaped and structured by a theoretical framework, and may be fundamentally distinct from the sense data of scientists working in another one. Where a geocentric astronomer like Tycho Brahe sees a sun setting behind the horizon, a Copernican astronomer like Johannes Kepler sees the horizon moving up to a stationary sun. If this picture is correct, then it is hard to assess which theory or paradigm is more faithful to the facts, that is, more objective.

The thesis of the theory-ladenness of observation has also been extended to the incommensurability of different paradigms or scientific theories, problematized independently by Thomas S. Kuhn (1962 [1970]) and Paul Feyerabend (1962). Literally, this concept means “having no measure in common”, and it figures prominently in arguments against a linear and standpoint-independent picture of scientific progress. For instance, the Special Theory of Relativity appears to be more faithful to the facts and therefore more objective than Newtonian mechanics because it reduces, for low speeds, to the latter, and it accounts for some additional facts that are not predicted correctly by Newtonian mechanics. This picture is undermined, however, by two central aspects of incommensurability. First, not only do the observational concepts in both theories differ, but the principles for specifying their meaning may be inconsistent with each other (Feyerabend 1975: 269–270). Second, scientific research methods and standards of evaluation change with the theories or paradigms. Not all puzzles that could be tackled in the old paradigm will be solved by the new one—this is the phenomenon of “Kuhn loss”.

According to Feyerabend, a meaningful use of objectivity presupposes perceiving and describing the world from a specific perspective, e.g., when we try to verify the referential claims of a scientific theory. The concept of objectivity can be applied meaningfully only within a particular scientific worldview. That is, scientific method cannot free itself from the particular scientific theory to which it is applied; the door to standpoint-independence is locked. As Feyerabend puts it:

our epistemic activities may have a decisive influence even upon the most solid piece of cosmological furniture—they make gods disappear and replace them by heaps of atom in empty space. (1978: 70)

Kuhn's and Feyerabend's theses about the theory-ladenness of observation, and their implications for the objectivity of scientific inquiry, have been much debated since and have often been misunderstood in a social constructivist sense. Kuhn therefore later returned to the topic of scientific objectivity, of which he gives his own characterization in terms of the shared cognitive values of a scientific community. We discuss Kuhn's later view in section 3.1. For a more profound coverage, see section 4 in the entry on theory and observation in science, section 3 in the entry on the incommensurability of scientific theories and section 4.2 in the entry on Thomas S. Kuhn.

2.3 The Experimenter's Regress

Most of the earlier critics of “objective” verification or falsification focused on the relation between evidence and scientific theories. There is a sense in which the claim that this relation is problematic is not so surprising. Scientific theories contain highly abstract claims that describe states of affairs far removed from the immediacy of sense experience. This is for a good reason: sense experience is necessarily perspectival, so to the extent to which scientific theories are to track the absolute conception, they must describe a world different from that of sense experience. But surely, one might think, the evidence itself is objective. So even if we do have reasons to doubt that abstract theories faithfully represent the world, we should stand on firmer ground when it comes to the evidence against which we test abstract theories.

Theories are seldom tested against brute observations, however. This too is for good reason: if they were, they'd be unlikely to track the absolute conception. Simple generalizations such as “all swans are white” are directly learned from observations (say, of the color of swans) but they do not represent the view from nowhere (for one thing, the view from nowhere doesn't have colors). Genuine scientific theories are tested against experimental facts or phenomena, which are themselves unobservable to the unaided senses. Experimental facts or phenomena are instead established using intricate procedures of scientific measurement and experimentation.

We therefore need to ask whether the results of scientific measurements and experiments can be aperspectival. In an important debate in the 1980s and 1990s some commentators answered that question with a resounding “no”, an answer that others then sought to rebut. The debate concerns the so-called “experimenter's regress” (Collins 1985). Collins, a prominent sociologist of science, claims that in order to know whether an experimental result is correct, one first needs to know whether the apparatus producing the result is reliable. But one doesn't know whether the apparatus is reliable unless one knows that it produces correct results in the first place, and so on ad infinitum. Collins's main case concerns attempts to detect gravitational waves, which were the subject of considerable controversy among physicists in the 1970s.

Collins argues that the circle is eventually broken not by the “facts” themselves but rather by factors having to do with the scientist's career, the social and cognitive interests of his community, and the expected fruitfulness for future work. It is important to note that in Collins's view these factors do not necessarily make scientific results arbitrary. But what he does argue is that the experimental results do not represent the world according to the absolute conception. Rather, they are produced jointly by the world, scientific apparatuses, and the psychological and sociological factors mentioned above. The facts and phenomena of science are therefore necessarily perspectival.

In a series of contributions, Allan Franklin, a physicist-turned-philosopher of science, has tried to show that while there are indeed no algorithmic procedures for establishing experimental facts, disagreements can nevertheless be settled by reasoned judgement on the basis of bona fide epistemological criteria such as experimental checks and calibration, elimination of possible sources of error, using apparatuses based on well-corroborated theory and so on (Franklin 1994, 1997). Collins responds that “reasonableness” is a social category that is not drawn from physics (Collins 1994).

The main issue for us in this debate is whether there are any reasons to believe that experimental results provide an aperspectival view on the world. According to Collins, experimental results are co-determined by the facts as well as social and psychological factors. According to Franklin, whatever else influences experimental results other than facts is not arbitrary but instead based on reasoned judgment. What Franklin has not shown is that reasoned judgment guarantees that experimental results reflect the facts alone and are therefore aperspectival in any interesting sense.

2.4 Standpoint Theory, Contextual Empiricism and Trust in Science

Feminist standpoint theorists and proponents of “situated knowledge” such as Donna Haraway (1988), Sandra Harding (1991, 1993) and Alison Wylie (2003) deny the internal coherence of a view from nowhere: all human knowledge is at base human knowledge and is therefore necessarily perspectival. But they argue more than that. Not only is perspectivality the human condition, it is also a good thing to have. This is because perspectives, especially the perspectives of underprivileged classes, come along with certain epistemic advantages.

Standpoint theory is a development of certain Marxist ideas that epistemic position is related to social position. According to this view, workers, being members of an underprivileged class, have both greater incentives to understand social relations better, and better access to them because they live under the capitalists' rule and therefore have access to the lives of capitalists as well as their own lives. Feminist standpoint theory builds on these ideas but focuses on gender, racial and other social relations.

These ideas are controversial, but they draw attention to the possibility that attempts to rid science of perspectives might be not only futile, because scientific knowledge is necessarily perspectival, but also epistemically costly, because they prevent scientists from enjoying the epistemic benefits that certain standpoints afford.

If there are no methods that guarantee objective outcomes or objective criteria against which to assess outcomes, what might “procedural objectivity” consist in? A particular answer that goes back to Karl R. Popper (1972, 1934 [2002]) has been taken up and modified by Helen Longino. Popper claimed that “the objectivity of scientific statements lies in the fact that they can be inter-subjectively tested” (1934 [2002]: 22), where “intersubjectively testable” may be understood as there being verifiable facts with evidential bearing on the theory in question. So Popper does not see the objectivity of a scientific claim in a direct correspondence to facts: rather, the claim must be testable and subject to rational criticism.

Longino (1990) reinforces Popper's focus on intersubjective criticism: for her, scientific knowledge is essentially a social product. Thus, our conception of scientific objectivity must directly engage with the social process that generates knowledge. In response to the failures of attempts to define objectivity as faithfulness of theory to facts, she concludes that social criticism fulfills crucial functions in securing the epistemic success of science. The objectivity of science is no longer grounded in correspondence between theory and facts, or in all scientists seeing the same result (called “concordant objectivity” by Douglas 2011), but rather in the “interactive objectivity” that emerges through the scientists' open discourse. Specifically, she develops an epistemology called contextual empiricism which regards a method of inquiry as “objective to the degree that it permits transformative criticism” (Longino 1990: 76). For an epistemic community to achieve transformative criticism, there must be:

- avenues for criticism: criticism is an essential part of scientific institutions (e.g., peer review);

- shared standards: the community must share a set of cognitive values for assessing theories (more on this in section 3.1);

- uptake of criticism: criticism must be able to transform scientific practice in the long run;

- equality of intellectual authority: intellectual authority must be shared equally among qualified practitioners.

Longino's contextual empiricism can be understood as a development of John Stuart Mill's view that beliefs should never be suppressed, independently of whether they are true or false (Mill 1859 [2003]). Even the most implausible beliefs might, for all we know, be true as we are not infallible; and if they are false, they might contain a grain of truth which is worth preserving or, if wholly false, help to better articulate and defend those beliefs which are true (Mill 1859 [2003]: 72).

Social epistemologists such as Longino similarly see objectivity neither in the products of science (as there is no view from nowhere) nor in its methods (as there aren't any standards that are valid independently of the contexts of specific inquiries) but rather in the idea that many and competing voices are heard. The underlying intuition is supported by recent empirical research on the epistemic benefits of a diversity of opinions and perspectives (Page 2007).

The turn from scientific results and methods to the social organization of science involves numerous problems. On the one hand, we might ask how many and which voices must be heard for science to be objective. It is not clear, for instance, whether non-scientists should have as much authority as trained scientists. The condition of equality of intellectual authority requires only “qualified” practitioners to share authority equally, but who qualifies as “qualified”? Nor is it clear whether it is always a good idea to subject every scientific result to democratic approval, as Paul Feyerabend proposed (Feyerabend 1975, 1978). There is no guarantee that democratized science leads to true theories, or even reliable ones. So why should we value objectivity in the sense of social epistemologists?

One answer to this question has been given by Arthur Fine who argues that we value objectivity in this sense because it promotes trust in science (Fine 1998: 17). While there is no guarantee that the process leads to true theories, it is nevertheless trusted because it is fair. We will consider Fine's views on objectivity in more detail below in section 6.

3. Objectivity as Absence of Normative Commitments and the Value-Free Ideal

The previous section has presented us with forceful arguments against the view of objectivity as faithfulness to facts and an impersonal “view from nowhere”. How can we maintain the view that objectivity is one of the essential features of science—and the one that grounds its epistemic authority? A popular reply contends that science should be value-free and that scientific claims or practices are objective to the extent that they are free of moral, political and social values.

3.1 Epistemic and Contextual Values

Before addressing what we will call the “value-free ideal”, it will be helpful to distinguish four stages at which values may affect science. They are: (i) the choice of a scientific research problem; (ii) the gathering of evidence in relation to the problem; (iii) the acceptance of a scientific hypothesis or theory as an adequate answer to the problem on the basis of the evidence; (iv) the proliferation and application of scientific research results (Weber 1917 [1988]).

Most philosophers of science would agree that the role of values in science is contentious only with respect to stages (ii) and (iii): the gathering of evidence and the acceptance of scientific theories. It is almost universally accepted that the choice of a research problem is often influenced by the interests of individual scientists, funding parties, and society as a whole. This influence may make science more shallow and slow down its long-run progress, but it has benefits, too: scientists will focus on providing solutions to those intellectual problems that are considered urgent by society and they may actually improve people's lives. Similarly, the proliferation and application of scientific research results is evidently affected by the personal values of journal editors and end users, and there seems to be little one can do about this. The real debate is about whether or not the “core” of scientific reasoning (the gathering of evidence and the assessment and acceptance of scientific theories) is, and should be, value-free.

An obvious, but ultimately unconvincing criticism of the value-free ideal invokes the “underdetermination of theory by evidence” (see the entry on underdetermination of scientific theory). As we have seen above, the relationship between theory and evidence is rather complex. More often than not in the history of science, the existing body of evidence in some domain does not pick out a unique theoretical account of that domain. “Crucial experiments” do not refute a specific scientific claim, but only indicate that there is an error in an entire network of hypotheses (Duhem 1906 [1954]). Thus, existing bodies of evidence often underdetermine the choice of rival theoretical accounts.

According to the critics of the value-free ideal, the gap between evidence and theory must be filled in by scientific values. Consider a classical curve-fitting problem. When fitting a curve to a data set, the researcher often has the choice between either using a higher-order polynomial, which makes the curve less simple but fits the data more accurately, or using a lower-order polynomial, which makes the curve simpler albeit less accurate. Simplicity and accuracy are both scientific values: for instance, econometricians have a preference for solving curve-fitting problems by means of linear regression, thereby valuing simplicity over accuracy (though see Forster and Sober 1994 for a competing account of this practice).
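The trade-off in the curve-fitting example can be made concrete with a small sketch. The data points, the scoring rule (residual sum of squares plus a penalty per polynomial degree) and the parameter name `simplicity_weight` are illustrative assumptions, not taken from the literature cited above; the sketch only shows that the preferred curve depends on how heavily simplicity is weighed against accuracy.

```python
# Illustrative sketch only: the data and the scoring rule are made up.

def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so row operations update both sides at once.
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_polynomial(xs, ys, degree):
    """Least-squares coefficients (constant term first) via the normal equations."""
    k = degree + 1
    a = [[sum(x ** (i + j) for x in xs) for j in range(k)] for i in range(k)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(k)]
    return solve_linear_system(a, b)


def residual_sum_of_squares(xs, ys, coeffs):
    """Accuracy measure: sum of squared differences between data and curve."""
    total = 0.0
    for x, y in zip(xs, ys):
        predicted = sum(c * x ** p for p, c in enumerate(coeffs))
        total += (y - predicted) ** 2
    return total


def preferred_degree(xs, ys, degrees, simplicity_weight):
    """Hypothetical rule: minimize RSS + simplicity_weight * degree."""
    def score(d):
        rss_d = residual_sum_of_squares(xs, ys, fit_polynomial(xs, ys, d))
        return rss_d + simplicity_weight * d
    return min(degrees, key=score)


# Made-up, roughly linear data with some scatter.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.1, 1.2, 1.8, 3.3, 3.9, 5.2]

rss = {d: residual_sum_of_squares(xs, ys, fit_polynomial(xs, ys, d))
       for d in (1, 2, 3)}

for weight in (0.0, 0.005, 1.0):
    print(weight, preferred_degree(xs, ys, (1, 2, 3), weight))
```

With no weight on simplicity the highest-order polynomial wins, because adding terms never worsens the fit to the given data; with a large weight the straight line wins. Nothing in the data themselves fixes the weight.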

Philosophers of science, however, tend to regard value-ladenness in this sense as benign. Epistemic (or cognitive) values such as predictive accuracy, scope, unification, explanatory power, simplicity and coherence with other accepted theories are taken to be indicative of a good scientific theory and figure in standard arguments for preferring one theory over another. Kuhn (1977) even claims that epistemic values define the shared commitments of science, that is, the standards of theory assessment that characterize the scientific approach as a whole.

A word on terminology. Sometimes epistemic values are regarded as a subset of cognitive values and identified with values such as empirical adequacy and internal consistency that directly bear on the veracity of a scientific theory (Laudan 2004). Values such as scope and explanatory power would then count as cognitive values that express scientific desiderata, but without properly epistemic implications. We have decided, however, to adopt a broader reading of “epistemic” where truth is not the only aim of scientific inquiry, but supplemented by providing causal mechanisms, finding natural laws, creating understanding, etc. In this sense, values such as scope or explanatory power contribute to achieving our epistemic goals. Neat distinctions between strictly truth-conducive and purely cognitive scientific values are hard to come by (see Douglas 2013 for a classification attempt).

Not every philosopher entertains the same list of epistemic values. In Lycan's (1985) pragmatic perspective, simplicity is included because it reduces the cognitive workload of the scientific practitioner, and because it facilitates the use of scientific theories in dealing with real-world problems. McMullin (2009), on the other hand, does not include simplicity because the notion is ambiguous, and because there are no conclusive arguments that simpler theories are more likely to be true, or empirically adequate. Subjective differences in ranking and applying epistemic values do not vanish, a point Kuhn made emphatically. This is also one of the reasons for using the term “value” rather than “rule”: the assessment of a scientific theory corresponds to a judgment in which different criteria are carefully weighed rather than to the mechanical application of a rule or algorithm to determine the best theory (McMullin 1982: 17).

In most views, the objectivity and authority of science are not threatened by epistemic, but only by contextual (non-cognitive) values. Contextual values are moral, personal, social, political and cultural values such as pleasure, justice and equality, conservation of the natural environment and diversity. The most notorious cases of improper uses of such values involve travesties of scientific reasoning, where the intrusion of contextual values led to an intolerant and oppressive scientific agenda with devastating epistemic and social consequences. In the Third Reich, a large part of contemporary physics, such as the theory of relativity, was condemned because its inventors were Jewish; in the Soviet Union, biologist Nikolai Vavilov was sentenced to death (and died in prison) because his theories of genetic inheritance did not match Marxist-Leninist ideology. Both states tried to foster a science that was motivated by political convictions (Lenard's “Deutsche Physik” in Nazi Germany, Lysenko's anti-genetic theory of inheritance in the Soviet Union), leading to disastrous epistemic and institutional effects.

Less spectacular but numerically more significant cases analyzed by feminist philosophers of science involve gender or racial bias in biological theories (e.g., Okruhlik 1994; Lloyd 2005). Moreover, a lot of industry-sponsored research in medicine (and elsewhere) is demonstrably biased toward the interests of the sponsors, usually large pharmaceutical firms (e.g., Resnik 2007; Reiss 2010). This preference bias, defined by Wilholt (2009) as the infringement of conventional standards of the research community, with the aim of arriving at a particular result, is clearly epistemically harmful. Especially for sensitive high-stakes issues such as the admission of medical drugs or the consequences of anthropogenic global warming, it seems desirable that research scientists assess theories without being influenced by such considerations. This is the core idea of the

Value-Free Ideal (VFI): Scientists should strive to minimize the influence of contextual values on scientific reasoning, e.g., in gathering evidence and assessing/accepting scientific theories.

According to the VFI, scientific objectivity is characterized by absence of contextual values and by exclusive commitment to epistemic values in scientific reasoning. See Dorato (2004: 53–54), Ruphy (2006: 190) or Biddle (2013: 125) for alternative formulations.

The next question is then whether the VFI is actually attainable. This is the subject of the

Value-Neutrality Thesis (VNT): Scientists can—at least in principle—gather evidence and assess/accept theories without making contextual value judgments.

While this latter thesis is defended less frequently than the VFI, it serves as a useful foil for discussing the attainability of the VFI. Note that the VNT is not normative: it only investigates whether the judgments that scientists make are, or could possibly be, free of contextual values.

The VNT is denied by the value-laden thesis, which asserts that contextual values are essential for scientific research.

Value-Laden Thesis (VLT): Scientists cannot gather evidence and assess/accept theories without making contextual value judgments.

The latter thesis is sometimes strengthened to the claim that both epistemic and contextual values are essential to scientific research—and pursuit of a science without contextual values would be harmful both epistemically and socially (see section 3.4). Either way, the acceptance of the value-laden thesis poses a challenge for re-defining scientific objectivity: one can either conclude that the ideal of objectivity is harmful and should be rejected (as Feyerabend does), or one can come up with a different and refined conception of objectivity (as Douglas and Longino do).

This section discusses the VNT as applied to the assessment and acceptance of scientific hypotheses, the role of the VFI at the interface between scientific reasoning and policy advice, and Paul Feyerabend's radical attack on the VNT.

3.2 Acceptance of Scientific Hypotheses and Value Neutrality

Regarding the assessment of scientific theories, the VNT is a relatively recent position in philosophy of science. Its rise is closely connected to Reichenbach's famous distinction between context of discovery and context of justification. Reichenbach first made this distinction with respect to the epistemology of mathematics:

the objective relation from the given entities to the solution, and the subjective way of finding it, are clearly separated for problems of a deductive character […] we must learn to make the same distinction for the problem of the inductive relation from facts to theories. (Reichenbach 1938: 36–37)

The standard interpretation of this statement marks contextual values, which may have contributed to the discovery of a theory, as irrelevant for justifying the acceptance of a theory, and for assessing how evidence bears on theory—the relation that is crucial for the objectivity of science. Contextual values are restricted to a matter of individual psychology that may influence the discovery, development and proliferation of a scientific theory, but not its epistemic status.

This distinction played a crucial role in post-World War II philosophy of science. It presupposes, however, a clear-cut distinction between epistemic values on the one hand and contextual values on the other. While this may be prima facie plausible for disciplines such as physics, there is an abundance of contextual values in the social sciences, for instance, in the conceptualization and measurement of a nation's wealth, or in different ways to measure the inflation rate (cf. Dupré 2007; Reiss 2008). More generally, three major lines of criticism can be identified.

First, Helen Longino (1996) has argued that traditional “epistemic” values such as consistency, simplicity, breadth of scope and fruitfulness are not purely epistemic after all, and that their use imports political and social values into contexts of scientific judgment. According to her, the use of epistemic values in scientific judgments is not always, not even normally, politically neutral. She proposes to juxtapose these values with feminist values such as novelty, ontological heterogeneity, mutuality of interaction, applicability to human needs and diffusion of power, and argues that the use of the traditional value instead of its alternative (e.g., simplicity instead of ontological heterogeneity) can lead to biases and adverse research results. Longino's argument here is different from the one discussed in section 3.1. It casts the very distinction between epistemic and contextual values into doubt.

The use of language in descriptions of scientific hypotheses and results poses a second challenge to VNT. As Hilary Putnam has recently argued, fact and value are frequently entangled because of the use of so-called “thick” ethical concepts in scientific descriptions (Putnam 2002). Consider Putnam's own example, the word “cruel”. The statement “Susan is a cruel teacher” entails certain statements about Susan's behavior towards her pupils, perhaps that she gives unnecessarily low grades, puts them on the spot, pokes fun at them or slaps them. It has descriptive content. But it also expresses our moral disapproval of Susan's behavior. To call someone cruel is to reprehend him or her. The term therefore also has normative content. Thick ethical terms are terms that, like “cruel”, have a mixed descriptive and normative content. They contrast with “thin” ethical terms that are purely normative: “good”/“bad”, “ought”/“must not”, “right”/“wrong” and so on.

Putnam argues at some length that (a) the normative content of thick ethical terms is ineliminable; and (b) thick ethical concepts cannot be factored into descriptive and normative components. Neither of these arguments would, if successful, necessarily cause concern for a defender of the VNT. The existence of terms in which facts and values are inextricably entangled does not pose a threat to scientists who wish to describe their hypotheses and results in a value-free manner: they could simply avoid using thick ethical terms. The crucial question is therefore whether or not scientific hypotheses and the description of results necessarily involve such terms.

John Dupré has argued that thick ethical terms are ineliminable from science, or at least from certain parts of it (Dupré 2007). Dupré's point is essentially that scientific hypotheses and results concern us because they are relevant to human interests, and thus they will necessarily be couched in a language that uses thick ethical terms. While it will often be possible to translate ethically thick descriptions into neutral ones, the translation cannot be made without losses, and these losses obtain precisely because human interests are involved. According to Dupré, then, there are many scientific statements that are value-free, but they are value-free because their truth or falsity does not matter to us:

Whether electrons have a positive or a negative charge and whether there is a black hole in the middle of our galaxy are questions of absolutely no immediate importance to us. The only human interests they touch (and these they may indeed touch deeply) are cognitive ones, and so the only values that they implicate are cognitive values. (2007: 31)

A third challenge to VNT was posed by Richard Rudner in his influential article “The Scientist Qua Scientist Makes Value Judgments” (Rudner 1953). Rudner disputes the core of the VNT and the context of discovery/justification distinction: the idea that the acceptance of a scientific theory can in principle be value-free. We now discuss Rudner's argument in some detail.

First, Rudner argues that

no analysis of what constitutes the method of science would be satisfactory unless it comprised some assertion to the effect that the scientist as scientist accepts or rejects hypotheses. (1953: 2)

This assumption stems from the practice of industrial quality control and other application-oriented research. In such contexts, it is often necessary to accept or to reject a hypothesis (e.g., the efficacy of a drug) in order to make effective decisions.

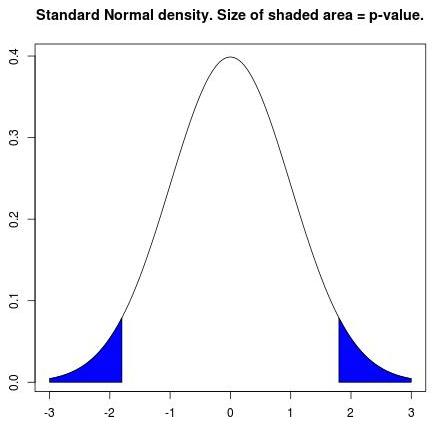

Second, he notes that no scientific hypothesis is ever confirmed beyond reasonable doubt: some probability of error always remains. When we accept or reject a hypothesis, there is always a chance that our decision is mistaken. Hence, our decision is also “a function of the importance, in the typically ethical sense, of making a mistake in accepting or rejecting a hypothesis” (1953: 2): we are balancing the seriousness of two possible errors (erroneous acceptance/rejection of the hypothesis) against each other. This corresponds to type I and type II errors in statistical inference.

Hence, ethical judgments and contextual values enter the scientist's core activity of accepting and rejecting hypotheses, and the VNT stands refuted. Closely related arguments can be found in Churchman (1948) and Braithwaite (1953). Hempel (1965: 91–92) gives a modified account of Rudner's argument by distinguishing between judgments of confirmation, which are free of contextual values, and judgments of acceptance. Since even strongly confirming evidence cannot fully prove a universal scientific law, we have to live with a residual “inductive risk” in inferring that law. Contextual values influence scientific methods by determining the acceptable amount of inductive risk.
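One way to make the structure of this argument explicit is an expected-loss sketch. The formalization below is an assumption introduced for illustration, not Rudner's or Hempel's own formula, and the cost figures are hypothetical: a hypothesis is accepted only if the expected loss of wrongly accepting it is smaller than the expected loss of wrongly rejecting it.

```python
# Illustrative sketch only: the expected-loss rule and all numbers are assumptions.

def acceptance_threshold(cost_false_accept, cost_false_reject):
    """Probability of H above which accepting H has the lower expected loss."""
    return cost_false_accept / (cost_false_accept + cost_false_reject)


def decide(prob_h, cost_false_accept, cost_false_reject):
    """Return 'accept' or 'reject' for hypothesis H given its probability."""
    # Accepting is a mistake only if H is false; rejecting only if H is true.
    expected_loss_accept = (1.0 - prob_h) * cost_false_accept
    expected_loss_reject = prob_h * cost_false_reject
    return "accept" if expected_loss_accept < expected_loss_reject else "reject"


# Same evidential support in both cases, different stakes.
prob_h = 0.95

# Symmetric costs: a mistake either way is equally bad.
print(decide(prob_h, cost_false_accept=1.0, cost_false_reject=1.0))

# Wrongly accepting is taken to be a hundred times worse (say, H concerns
# the efficacy of a drug and a false positive would harm patients).
print(decide(prob_h, cost_false_accept=100.0, cost_false_reject=1.0))
```

On this sketch the degree of confirmation (`prob_h`) is the same in both calls, which matches Hempel's point that confirmation can be judged independently; what changes with the costs is only the amount of inductive risk that counts as acceptable.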

But how general are Rudner's findings? Apparently, the result holds true of applied science, but not necessarily of fundamental research. For instance, Richard Jeffrey (1956) notes that lawlike hypotheses in theoretical science (e.g., the gravitational law in Newtonian mechanics) are characterized by their general scope and not confined to a particular application. Obviously, a scientist cannot fine-tune her decisions to their possible consequences in a wide variety of different contexts. So she should refrain altogether from the essentially pragmatic decision to accept or reject a hypothesis and restrict herself to gathering and interpreting the evidence. This objection was foreshadowed by the statistician, methodologist and geneticist Ronald A. Fisher:

in the field of pure research no assessment of the cost of wrong conclusions […] can conceivably be more than a pretence, and in any case such an assessment would be inadmissible and irrelevant in judging the state of the scientific evidence. (Fisher 1935: 25–26, our emphasis)

By restricting scientific reasoning to gathering and interpreting evidence, possibly supplemented by assessing the probability of a hypothesis, and abandoning the business of accepting/rejecting hypotheses, Jeffrey tries to save the VNT in fundamental scientific research, and the objectivity of scientific reasoning.

A related attempt to save the VNT is given by Isaac Levi (1960). Levi observes that scientists commit themselves to certain standards of inference when they become members of the profession. This may, for example, lead to the statistical rejection of a hypothesis when the observed significance level is smaller than 5%. These community standards may eliminate any room for contextual ethical judgment on behalf of the scientist: they determine when he or she should accept a hypothesis as established. Value judgments may be implicit in the standards of scientific inference, but not in the daily work of an individual scientist. Such conventional standards are especially prevalent in theoretical research where it does not make sense to specify application-oriented utilities of accepting or rejecting a hypothesis (cf. Wilholt 2013). The VNT, and the idea of scientific objectivity as value freedom, could then be saved for the case of individual scientific reasoning.
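A conventional standard of this kind can be sketched as a fixed decision rule. The example below is an illustration under stated assumptions: it uses a one-sided exact binomial test, and the counts are hypothetical. The only element taken from the text is the 5% significance level; the individual scientist applies the rule without weighing consequences.

```python
# Illustrative sketch only: test choice and example counts are assumptions.
from math import comb

SIGNIFICANCE_LEVEL = 0.05  # the conventional 5% standard mentioned in the text


def one_sided_p_value(successes, trials, null_prob=0.5):
    """P(X >= successes) for X ~ Binomial(trials, null_prob)."""
    return sum(
        comb(trials, k) * null_prob ** k * (1.0 - null_prob) ** (trials - k)
        for k in range(successes, trials + 1)
    )


def reject_null(successes, trials, null_prob=0.5, alpha=SIGNIFICANCE_LEVEL):
    """Apply the community rule: reject the null hypothesis iff p < alpha."""
    return one_sided_p_value(successes, trials, null_prob) < alpha


# Hypothetical outcomes of 100 trials under the null hypothesis of a fair chance.
print(reject_null(55, 100))              # judged by the 5% convention
print(reject_null(60, 100))              # judged by the 5% convention
print(reject_null(60, 100, alpha=0.01))  # same data, stricter convention
```

The last call shows where a value judgment could still reside on Levi's picture: in the choice of `alpha` itself, which is settled at the level of the community rather than by the individual researcher.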

Both defenses of the VNT focus on the impact of values in theory choice, either by denying that scientists actually choose theories (Jeffrey), or by referring to community standards (Levi). Douglas (2000: 563–565) points out, however, that the “acceptance” of scientific theories is only one of several places for values to enter scientific reasoning, albeit an especially prominent and explicit one. Many decisions in the process of scientific inquiry may conceal implicit value judgments: the design of an experiment, the methodology for conducting it, the characterization of the data, the choice of a statistical method for processing and analyzing data, the interpretation of findings, etc. None of these methodological decisions could be made without consideration of the possible consequences that could occur. Douglas gives, as a case study, a series of experiments where carcinogenic effects of dioxin exposure on rats were probed. Contextual values such as safety and risk aversion affected the conducted research at various stages: first, in the classification of pathological samples as benign or cancerous (over which a lot of expert disagreement occurred), second, in the extrapolation from the high-dose experimental conditions to the more realistic low-dose conditions. In both cases, the choice of a conservative classification or model had to be weighed against the adverse consequences for society that could result from underestimating the risks (cf. Biddle 2013).

These diagnoses cast a gloomy light on attempts to divide scientific labor between gathering evidence and determining the degree of confirmation (value-free) and accepting scientific theories (value-laden). The entire process of conceptualizing, gathering and interpreting evidence is so entangled with contextual values that no neat division, as Jeffrey envisions, will work outside the narrow realm of statistical inference—and even there, doubts may be raised (see section 4.2).

Philip Kitcher (2011a: 31–40) gives an alternative argument, based on his idea of “significant truths”. According to Kitcher, even staunch scientific realists will not hold that science aims at truth as a goal in itself. There are simply too many truths that are of no interest whatsoever—consider all the truths about the areas of triangles whose vertices are three arbitrarily chosen objects (2011a: 106). Science, then, doesn't aim at truth simpliciter but rather at something more narrow: truth worth pursuing from the point of view of our cognitive, practical and social goals. Any truth that is worth pursuing in this sense is what he calls a “significant truth”. Clearly, it is value judgments that help us decide whether or not any given truth is significant.

What Kitcher argues so far is consistent with the traditional view according to which values enter mainly at the first stage of scientific investigation, problem selection. But then he goes on to observe that the process of scientific investigation cannot neatly be divided into a stage in which the research question is chosen, one in which the evidence is gathered and one in which a judgment about the question is made on the basis of the evidence.

Rather, the sequence is multiply iterated. At each stage, the researcher has to decide whether previous results warrant further investigation in the same line of research or whether it would be more fruitful to switch to another avenue, even if the overall goal remains constant. These choices are laden with contextual values.

To add some precision to this idea, Kitcher distinguishes three schemes of values: a broad scheme, a cognitive scheme and a probative scheme. The broad scheme of values is the set of commitments around which someone's life is organized, including personal ideals and goals and those people have for the societies in which they live. The cognitive scheme of values concerns the kinds of knowledge a person values, for their own sake or for the sake of their practical consequences. The probative scheme of values, lastly, concerns more specific questions a researcher finds worth pursuing.

Kitcher now argues that the three schemes mutually interact. Thus, the cognitive scheme might change in response to pressures from the probative and the broad scheme. We give an example of our own. Assume that the cognitive scheme endorses predictive success as an important goal of science. However, the probative scheme finds no available or conceivable strategy to reach this goal in some domain of science, for instance because that domain is characterized by strong non-linear dependencies. In this case, predictive success might have to yield to other forms of scientific knowledge. After all, we would be irrational if we continued to pursue a goal that is unattainable in principle. Conversely, changes in the broad scheme will often necessitate adjustments in the cognitive and probative schemes: changing social goals lead to revaluations of scientific knowledge and research methods.

Science, then, cannot be value-free because no scientist ever works exclusively in the supposedly value-free zone of assessing and accepting hypotheses. Evidence is gathered and hypotheses are assessed and accepted in the light of their potential for application and fruitful research avenues. Both epistemic and contextual value judgments guide these choices and are themselves influenced by their results. More than that, to portray science as value-free enterprise carries a danger with it:

The deepest source of the current erosion of scientific authority consists in insisting on the value-freedom of Genuine Science… (Kitcher 2011a: 40)

3.3 Science, Policy and the Value-Free Ideal

While the previous discussion focused on the VNT, and the practical attainability of the VFI, little has been said about whether value freedom is desirable in the first place. This subsection discusses this topic with special attention to informing and advising public policy from a scientific perspective. While the VFI, and many arguments for and against it, can be applied to science as a whole, the interface of science and public policy is the place where the intrusion of values into science is especially salient, and where it is surrounded by the greatest controversy. Quite recently, the allegation that climate scientists were pursuing a particular socio-political agenda (the “Climategate” affair) did much to damage the authority of science in the public arena.

Indeed, many debates at the interface of science and public policy are characterized by disagreements on propositions that combine a factual basis with specific goals and values. Take, for instance, the view that growing transgenic crops carries too much risk in terms of biosecurity, or that global warming needs to be addressed by cutting CO2 emissions. The critical question in such debates is whether there are theses T such that one side in the debate endorses T, the other side rejects it, the evidence is shared, and both sides have good reasons for their respective positions.

According to the VFI, scientists should uncover an epistemic, value-free basis for resolving such disagreements and restrict the dissent to the realm of value judgments. Even if the VNT should turn out to be untenable, and a strict separation to be impossible, the VFI may have an important function for guiding scientific research and for minimizing the impact of values on an objective science. In the philosophy of science, one camp of scholars defends the VFI as a necessary antidote to individual and institutional interests, like Hugh Lacey (1999, 2002), Ernan McMullin (1982) and Sandra Mitchell (2004), while others adopt a critical attitude, like Helen Longino (1990, 1996), Philip Kitcher (2011a) or Heather Douglas (2009). These criticisms may refer to the desirability, attainability or the conceptual (un)clarity of the VFI. We begin with defenses of the VFI.

Lacey distinguishes three components or interpretations of the VFI: impartiality, neutrality and autonomy. Impartiality implies that theories are solely accepted or appraised in virtue of their contribution to the epistemic values of science, such as truth, accuracy or explanatory power. In particular, the choice of theories is not influenced by contextual values. Neutrality means that scientific theories make no value statements about the world: they are concerned with what there is, not with what there should be. Finally, scientific autonomy means that the scientific agenda is shaped by the desire to increase scientific knowledge, and that contextual values have no place in scientific method.

These three interpretations of the VFI can be combined with each other, or used individually. All of them, however, are subject to criticisms. First, at a descriptive level, it is clear that autonomy of science often fails in practice due to the presence of external interests, e.g., funding agencies and industry lobbies. Neutrality is questionable in the light of the implicit role of values in social science, e.g., in Rational Choice Theory (see section 5.2). Impartiality has been criticized in the above discussion of the VNT.

Second, it has been argued that the VFI is not desirable at all. Feminist philosophers (e.g., Harding 1991; Okruhlik 1994; Lloyd 2005) have argued that science often carries heavily androcentric values, for instance in biological theories about sex, gender and rape. The charge against these values is not so much that they are contextual rather than epistemic, but that they are unjustified. The explicit consideration of feminist values may act as a helpful antidote, in stark contrast with the VFI. Moreover, if scientists did follow the VFI rigidly, policy-makers would pay even less attention to them, with a detrimental effect on the decisions they take (Cranor 1993). Given these shortcomings, the VFI has to be rethought if it is supposed to play a useful role for guiding scientific research and leading to better policy decisions.

Douglas (2009: 7–8) proposes that the epistemic authority of science can be detached from its autonomy by distinguishing between direct and indirect roles for values in science. The assessment of evidence may legitimately be affected indirectly by contextual values: they may determine how we interpret noisy datasets, what is the appropriate standard of evidence for a specific claim, how the severity of consequences of a decision must be assessed, and so on. This concerns, above all, policy-related disciplines such as climate science or economics that routinely perform scientific risk analyses for real-world problems (cf. also Shrader-Frechette 1991). What must not happen, however, is that contextual values trump scientific evidence, or are used as a reason to ignore evidence:

cognitive, ethical and social values all have legitimate, indirect roles to play in the doing of science […]. When these values play a direct role in the heart of science, problems arise as unacceptable reasoning occurs and the reason for valuing science is undermined. (Douglas 2009: 108)

Douglas's conception of objectivity emphasizes a prohibition for values to replace or dismiss scientific evidence (she calls this detached objectivity), but it is complemented by various other aspects that relate to a reflective balancing of various perspectives and the procedural, social aspects of science (ch. 6). Instead of subscribing to the traditional VFI, Douglas suggests rescuing scientific integrity and objectivity by “keeping values to their proper roles” (2009: 175).

That said, Douglas's proposal is not very concrete when it comes to implementation, e.g., regarding the way diverse values should be balanced. Compromising in the middle cannot be the solution (Weber 1917 [1988]). First, no standpoint is, just in virtue of being in the middle, evidentially supported vis-à-vis more extreme positions. Second, these middle positions are also, from a practical point of view, the least functional when it comes to advising policy-makers.

Moreover, the distinction between direct and indirect roles of values in science may not be sufficiently clear-cut to police the legitimate use of values in science. Douglas (2009: 96) distinguishes between values as “reasons in themselves”, that is, treating them as evidence or defeaters for evidence (direct role, illegitimate) and as “helping to decide what should count as a sufficient reason for a choice” (indirect role, legitimate). But can we always draw a neat borderline? Assume that a scientist considers, for whatever reason, the consequences of erroneously accepting hypothesis H undesirable. Therefore he uses a statistical model whose results are likely to favor ¬H over H. Is this a matter of reasonable conservativeness? Or doesn't it amount to reasoning to a foregone conclusion, and to treating values as evidence (cf. Elliott 2011: 320–321)?

The most recent literature on values and evidence in science presents us with a broad spectrum of opinions. Steele (2012) bolsters Douglas's approach by arguing that various probabilistic assessments of uncertainty, e.g., imprecise probability, involve contextual value judgments as well. Betz (2013) argues, by contrast, that scientists can largely avoid making contextual value judgments if they carefully express the uncertainty involved with their evidential judgments, e.g., by using a scale ranging from purely qualitative evidence (such as expert judgment) to precise probabilistic assessments. The issue of value judgments at earlier stages of inquiry is not addressed by this proposal; however, disentangling evidential judgments and judgments involving contextual values at the stage of theory assessment may be a good thing in itself.

Thus, should we or should we not be worried about values in scientific reasoning? While Douglas and others make a convincing case that the interplay of values and evidential considerations need not be pernicious, it is unclear why it adds to the success or the authority of science. After all, the values of an individual scientist who makes a risk assessment need not agree with those of society. How are we going to ensure that the permissive attitude towards values in setting evidential standards etc. is not abused? In the absence of a general theory about which contextual values are beneficial and which are pernicious, can't we defend the VFI as a first-order approximation to a sound, transparent and objective science? Science seems to require some independence from contextual values in order to maintain its epistemic authority.

3.4 Feyerabend: The Tyranny of the Rational Method

This section looks at Paul Feyerabend's radical assault on the rationality and objectivity of scientific method (see also the entry on Feyerabend). His position is exceptional in the philosophical literature since traditionally, the threat to objectivity is located in contextual rather than epistemic values. Feyerabend turns this view upside down: it is the “tyranny” of rational method, and the emphasis on epistemic rather than contextual values, that prevents us from having a science in the service of society. Thus, Feyerabend vociferously denies the VFI and also the VNT by his claim that Western science is loaded with all kinds of pernicious values.

Feyerabend's writings on objectivity and values in science have an epistemic as well as a political dimension. Regarding the first, the leading philosophy of science figures in Feyerabend's young days such as Carnap, Hempel and Popper characterized scientific method in terms of rules for rational scientific reasoning. Some of them, like Popper, devoted special attention to the demarcation of science from “pseudo-science” and imposture, thereby derogating other traditions as irrational or, in any case, inferior. Feyerabend thinks, however, that science must be protected from a “rule of rationality”, identified with strict adherence to scientific method: such rules only suppress an open exchange of ideas, extinguish scientific creativity and prevent a free and truly democratic science.

In his classic “Against Method” (1975: chs. 8–13), Feyerabend elaborates on this criticism by examining a famous episode in the history of science: the development of Galilean mechanics and the discovery of the Jupiter moons. In superficial treatments of this episode, it is stressed that an obscurantist and value-driven Catholic Church forced Galilei to recant from a scientifically superior position backed by value-free, objective findings. But in fact, Feyerabend argues, the Church had the better arguments by the standards of 17th century science. Their conservatism regarding their Weltanschauung was scientifically backed: Galilei's telescopes were unreliable for celestial observations, and many well-established phenomena (no fixed star parallax, invariance of laws of motion) could at first not be explained in the heliocentric system. Hence, scientific method was not on Galilei's side, but on the side of the Church who gave preference to the old, Ptolemaic worldview. With hindsight, Galilei managed to achieve groundbreaking scientific progress just because he deliberately violated rules of scientific reasoning, because he stubbornly stuck to a problematic approach until decisive theoretical and technological innovations were made. Hence Feyerabend's dictum “Anything goes”: no methodology whatsoever is able to capture the creative and often irrational ways by which science deepens our understanding of the world.

The drawbacks of an objective, value-free and method-bound view on science and scientific method are not only epistemic. Such a view narrows down our perspective and makes us less free, open-minded, creative, and ultimately, less human in our thinking (Feyerabend 1975: 154). It is therefore neither possible nor desirable to have an objective, value-free science (cf. Feyerabend 1978: 78–79). As a consequence, Feyerabend sees traditional forms of inquiry about our world (e.g., Chinese medicine) on a par with their Western competitors. He denounces appeals to objective standards as barely disguised statements of preference for one's own worldview:

there is hardly any difference between the members of a “primitive” tribe who defend their laws because they are the laws of the gods […] and a rationalist who appeals to “objective” standards, except that the former know what they are doing while the latter does not. (1978: 82)

In other words, the defenders of scientific method abuse the word “objective” for proving the superiority of Western science vis-à-vis other worldviews. To this, Feyerabend adds that when dismissing other traditions, we actually project our own worldview, and our own value judgments, into them instead of making an impartial comparison (1978: 80–83). There is no purely rational justification for dismissing other perspectives in favor of the Western scientific worldview. To illustrate his point, Feyerabend compares the defenders of a strong, value-free notion of objectivity to scientists who stick to the concepts of absolute length and time in spite of the Theory of Relativity. A staunch defense of objectivity and value freedom may just expose our own narrow-mindedness. This is not meant to say that truth loses its function as a normative concept in science, nor that all scientific claims are equally acceptable. Rather, Feyerabend demands that we move toward a genuine epistemic pluralism that accepts diverse approaches to searching for and acquiring knowledge. In such an epistemic pluralism, science may regain its objectivity in the sense of respecting the diversity of values and traditions that drive our inquiries about the world (1978: 106–107).

All this has a political aspect, too. In the times of the scientific revolution or the Enlightenment, science acted as a liberating force that fought intellectual and political oppression by the sovereign, the nobility or the clergy. Nowadays, Feyerabend continues, the ideals of value-freedom and objectivity are often abused for excluding non-experts from science, proving the superiority of the Western way of life, and undergirding the power of an intellectual elite.

(Here it is important to keep in mind that Feyerabend's writings on this issue date mostly from the 1970s and were much influenced by the Civil Rights Movement in the US and the increasing emancipation of minorities, such as Blacks, Asians and Hispanics.)

Feyerabend therefore argues that democratic societies need to exert much greater control over scientific research. Laymen have to supervise science. This includes areas where even defenders of the VFI refrain from demanding value freedom, such as setting up a research agenda, distributing funds, and supervising scientific inquiry. But it also concerns areas that are more central to the VFI, such as the choice of a research method or the assessment of scientific theories. Contrary to commonly held beliefs, lack of specialized training need not imply lack of relevant knowledge (1975: xiii). Feyerabend sums up his view as follows:

a community will use science and scientists in a way that agrees with its values and aims and it will correct the scientific institutions in its midst to bring them closer to these aims. (1975: 251)

4. Objectivity as Freedom from Personal Biases

This section deals with scientific objectivity as a form of intersubjectivity—as freedom from personal biases. According to this view, science is objective to the extent that personal biases are absent from scientific reasoning, or that they can be eliminated in a social process. Perhaps all science is necessarily perspectival. Perhaps we cannot sensibly draw scientific inferences without a host of background assumptions, which may include assumptions about values. But scientific results should certainly not depend on researchers' personal preferences or idiosyncratic experiences. That, among other things, is what distinguishes science from the arts and other more individualistic human activities—or so it is said. Paradigmatic ways to achieve objectivity in this sense are measurement and quantification. What has been measured and quantified has been verified relative to a standard. The truth, say, that the Eiffel Tower is 324 meters tall is relative to a standard unit and conventions about how to use certain instruments, so it is neither aperspectival nor free from assumptions, but it is independent of the person making the measurement.

We will begin with a discussion of objectivity, so conceived, in measurement, discuss the ideal of “mechanical objectivity” and then investigate to what extent freedom from personal biases can be implemented in statistical and inductive inference—arguably the core of scientific reasoning, especially in experimentally working sciences.

4.1 Measurement and Quantification

Measurement is often thought to epitomize scientific objectivity, most famously captured in Lord Kelvin's dictum

when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely, in your thoughts, advanced to the stage of science, whatever the matter may be. (Kelvin 1883)

Measurement can certainly achieve some independence of perspective. Yesterday's weather in Durham UK may have been “really hot” to the average North Eastern Brit and “very cold” to the average Mexican, but they'll both accept that it was 21°C. Clearly, however, measurement does not result in a “view from nowhere”, nor are typical measurement results free from presuppositions. Measurement instruments interact with the environment, and so results will always be a product of both the properties of the environment we aim to measure as well as the properties of the instrument. Instruments, thus, provide a perspectival view on the world (cf. Giere 2006).

Moreover, making sense of measurement results requires interpretation. Consider temperature measurement. Thermometers function by relating an unobservable quantity, temperature, to an observable quantity, expansion (or length) of a fluid or gas in a glass tube; that is, thermometers measure temperature by assuming that length is a function of temperature: length = \(f\)(temperature). The function \(f\) is not known a priori, and it cannot be tested either (because it could in principle only be tested using a veridical thermometer, and the veridicality of the thermometer is just what is at stake here). Making a specific assumption, for instance that \(f\) is linear, solves that problem by fiat. But this “solution” does not take us very far because different thermometric substances (e.g., mercury, air or water) yield different results for the points intermediate between the two fixed points 0°C and 100°C, and so they can't all expand linearly.
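
The last step of this argument can be made explicit in a short sketch. The notation is an illustrative assumption and is not taken from the sources discussed here: let \(L_i(T)\) be the length of thermometric substance \(i\) at true temperature \(T\) (in °C), and let \(t_i(T)\) be the reading obtained by interpolating linearly between the two fixed points:

\[
t_i(T) = 100 \cdot \frac{L_i(T) - L_i(0)}{L_i(100) - L_i(0)}
\]

If \(L_i\) were in fact linear in \(T\), say \(L_i(T) = a_i + b_i T\) with \(b_i \neq 0\), then

\[
t_i(T) = 100 \cdot \frac{(a_i + b_i T) - a_i}{(a_i + 100\, b_i) - a_i} = 100 \cdot \frac{b_i T}{100\, b_i} = T
\]

On this sketch, every linearly expanding substance would read the true temperature, so any two such substances would agree at every \(T\). Since actual thermometers disagree at intermediate points, i.e., \(t_1(T) \neq t_2(T)\) for some \(T\) between 0 and 100, at least one of \(L_1\), \(L_2\) must be nonlinear. The disagreement alone does not reveal which one, and that is why the linearity assumption cannot settle the matter.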

In Hasok Chang's account of early thermometry (Chang 2004), the problem was eventually solved by using a “principle of minimalist overdetermination”, whose goal it was to find a reliable thermometer while making as few substantial assumptions (e.g., about the form of \(f\)) as possible. It was argued, eventually, that if a thermometer was to be reliable, different tokens of the same thermometer type should agree with each other, and the results of air thermometers agreed the most. “Minimal” doesn't mean zero, however, and indeed this procedure makes an important presupposition (in this case a metaphysical assumption about the one-valuedness of a physical quantity). Moreover, the procedure yielded at best a reliable instrument, not necessarily one that was best at tracking the uniquely real temperature (if there is such a thing).

What Chang argues about early thermometry is true of measurement more generally. Measurements are always made against a backdrop of metaphysical presuppositions, theoretical expectations and other kinds of belief. Whether or not any given procedure is regarded as adequate depends to a large extent on the purposes pursued by the individual scientist or group of scientists making the measurements. Especially in the social sciences, this often means that measurement procedures are laden with normative assumptions, i.e., values.

Julian Reiss (2008, 2013) has argued that economic indicators such as consumer price inflation, gross domestic product and the unemployment rate are value-laden in this sense. Consumer-price indices, for instance, assume that if a consumer prefers a bundle x over an alternative y, then x is better for her than y, which is as ethically charged as it is controversial. National income measures assume that nations that exchange a larger share of goods and services on markets are richer than nations where the same goods and services are provided by the government, which is likewise as ethically charged as it is controversial.

While not free of assumptions and values, the goal of many measurement procedures remains to reduce the influence of personal biases and idiosyncrasies. The Nixon administration, famously, indexed social security payments to the consumer-price index in order to eliminate the dependence of social security recipients on the flimsiest of party politics: to make increases automatic instead of a result of political negotiations (Nixon 1969). Lorraine Daston and Peter Galison refer to this as mechanical objectivity. They write:

Finally, we come to the full-fledged establishment of mechanical objectivity as the ideal of scientific representation. What we find is that the image, as standard bearer of objectivity, is tied to a relentless search to replace individual volition and discretion in depiction by the invariable routines of mechanical reproduction. (Daston and Galison 1992: 98)

The artist Salvador Dalí, no doubt unwittingly, describes his surrealist paintings as a product of mechanical objectivity in Daston and Galison's sense:

In truth I am no more than an automaton that registers, without judgment and as exactly as possible, the dictate of my subconscious: my dreams, hypnagogic images and visions, and all the concrete and irrational manifestations of the dark and sensational world discovered by Freud. (Dalí 1935)

Mechanical objectivity reduces the importance of human contributions to scientific results to a minimum, and therefore enables science to proceed on a large scale where bonds of trust between individuals can no longer hold (Daston 1992). Trust in mechanical procedures thus replaces trust in individual scientists.

In his book Trust in Numbers, Theodore Porter pursues this line of thought in great detail. In particular, on the basis of case studies involving British actuaries in the mid-nineteenth century, of French state engineers throughout the century, and of the US Army Corps of Engineers from 1920 to 1960, he argues for two causal claims. First, measurement instruments and quantitative procedures originate in commercial and administrative needs and affect the ways in which the natural and social sciences are practiced, not the other way around. The mushrooming of instruments such as chemical balances, barometers and chronometers was largely a result of social pressures and the demands of democratic societies. Administering large territories or controlling diverse people and processes is not always possible on the basis of personal trust and thus “objective procedures” (which do not require trust in persons) took the place of “subjective judgments” (which do). Second, he argues that quantification is a technology of distrust and weakness, and not of strength. It is weak administrators who do not have the social status, political support or professional solidarity to defend their experts' judgments. They therefore subject decisions to public scrutiny, which means that they must be made in a publicly accessible form.

This is the situation in which scientists who work in areas where the science/policy boundary is fluid find themselves: