Computational Complexity Theory

Computational complexity theory is a subfield of theoretical computer science one of whose primary goals is to classify and compare the practical difficulty of solving problems about finite combinatorial objects – e.g. given two natural numbers \(n\) and \(m\), are they relatively prime? Given a propositional formula \(\phi\), does it have a satisfying assignment? If we were to play chess on a board of size \(n \times n\), does white have a winning strategy from a given initial position? These problems are equally difficult from the standpoint of classical computability theory in the sense that they are all effectively decidable. Yet they still appear to differ significantly in practical difficulty. For having been supplied with a pair of numbers \(m \gt n \gt 0\), it is possible to determine their relative primality by a method (Euclid’s algorithm) which requires a number of steps proportional to \(\log(n)\). On the other hand, all known methods for solving the latter two problems require a ‘brute force’ search through a large class of cases which increase at least exponentially in the size of the problem instance.

Complexity theory attempts to make such distinctions precise by proposing a formal criterion for what it means for a mathematical problem to be feasibly decidable – i.e. that it can be solved by a conventional Turing machine in a number of steps which is proportional to a polynomial function of the size of its input. The class of problems with this property is known as \(\textbf{P}\) – or polynomial time – and includes the first of the three problems described above. \(\textbf{P}\) can be formally shown to be distinct from certain other classes such as \(\textbf{EXP}\) – or exponential time – which includes the third problem from above. The second problem from above belongs to a complexity class known as \(\textbf{NP}\) – or non-deterministic polynomial time – consisting of those problems which can be correctly decided by some computation of a non-deterministic Turing machine in a number of steps which is a polynomial function of the size of its input. A famous conjecture – often regarded as the most fundamental in all of theoretical computer science – states that \(\textbf{P}\) is also properly contained in \(\textbf{NP}\) – i.e. \(\textbf{P} \subsetneq \textbf{NP}\).

Demonstrating the non-coincidence of these and other complexity classes remain important open problems in complexity theory. But even in its present state of development, this subject connects many topics in logic, mathematics, and surrounding fields in a manner which bears on the nature and scope of our knowledge of these subjects. Reflection on the foundations of complexity theory is thus of potential significance not only to the philosophy of computer science, but also to philosophy of mathematics and epistemology as well.

- 1. On computational complexity

- 2. The origins of complexity theory

- 3. Technical development

- 4. Connections to logic and philosophy

- 5. Further reading

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. On computational complexity

Central to the development of computational complexity theory is the notion of a decision problem. Such a problem corresponds to a set \(X\) in which we wish to decide membership. For instance the problem \(\sc{PRIMES}\) corresponds to the subset of the natural numbers which are prime – i.e. \(\{n \in \mathbb{N} \mid n \text{ is prime}\}\). Decision problems are typically specified in the form of questions about a class of mathematical objects whose positive instances determine the set in question – e.g.

\(\sc{SAT}\ \) Given a formula \(\phi\) of propositional logic, does there exist a satisfying assignment for \(\phi\)?

\(\sc{TRAVELING}\ \sc{SALESMAN}\ (\sc{TSP}) \ \) Given a list of cities \(V\), the integer distance \(d(u,v)\) between each pair of cities \(u,v \in V\), and a budget \(b \in \mathbb{N}\), is there a tour visiting each city exactly once and returning to the starting city of total distance \(\leq b\)?

\(\sc{INTEGER}\ \sc{PROGRAMMING}\ \) Given an \(n \times m\) integer matrix \(A\) and an \(n\)-dimensional vector of integers \(\vec{b}\), does there exist an \(m\)-dimensional vector \(\vec{x}\) of integers such that \(A \vec{x} = b\)?

\(\sc{PERFECT} \ \sc{MATCHING}\ \) Given a finite bipartite graph \(G \), does there exist a perfect matching in \(G \)? (\(G\) is bipartite just in case its vertices can be partitioned into two disjoints sets \(U\) and \(V\) such that all of its edges \(E \) connect a vertex in \(U\) to one in \(V\). A matching is a subset of edges \(M \subseteq E\) no two members of which share a common vertex. \(M\) is perfect if it matches all vertices.)

These problems are typical of those studied in complexity theory in two fundamental respects. First, they are all effectively decidable. This is to say that they may all be decided in the ‘in principle’ sense studied in computability theory – i.e. by an effective procedure which halts in finitely many steps for all inputs. Second, they arise in contexts in which we are interested in solving not only isolated instances of the problem in question, but rather in developing methods which allow it to be efficiently solved on a mass scale – i.e. for all instances in which we might be practically concerned. Such interest often arises in virtue of the relationship of computational problems to practical tasks which we seek to analyze using the methods of discrete mathematics. For example, instances of \(\sc{SAT}\) arise when we wish to check the consistency of a set of specifications (e.g. those which might arise in scheduling the sessions of a conference or designing a circuit board), instances of \(\sc{TSP}\) and \(\sc{INTEGER}\ \sc{PROGRAMMING}\) arise in many logistical and planning applications, instances of \(\sc{PERFECT} \ \sc{MATCHING}\) arise when we wish to find an optimal means of pairing candidates with jobs, etc.[1]

The resources involved in carrying out an algorithm to decide an instance of a problems can typically be measured in terms of the number of processor cycles (i.e. elementary computational steps) and the amount of memory space (i.e. storage for auxiliary calculations) which are required to return a solution. The methods of complexity theory can be useful not only in deciding how we can most efficiently expend such resources, but also in helping us to distinguish which effectively decidable problems possess efficient decision methods in the first place. In this regard, it is traditional to distinguish pre-theoretically between the class of feasibly decidable problems – i.e. those which can be solved in practice by an efficient algorithm – and the class of intractable problems – i.e. those which lack such algorithms and may thus be regarded as intrinsically difficult to decide (despite possibly being decidable in principle). The significance of this distinction is most readily appreciated by considering some additional examples.

1.1 A preliminary example

A familiar example of a computational problem is that of primality testing – i.e. that of deciding \(n \in \sc{PRIMES} \)? This problem was intensely studied in mathematics long before the development of digital computers. (See, e.g., (Williams 1998) for a history of primality testing and (Crandall and Pomerance 2005) for a recent survey of the state of the art.) After a number of preliminary results in the 19th and 20th centuries, the problem \(\sc{PRIMES}\) was shown in 2004 to possess a so-called polynomial time decision algorithm – i.e. the so-called AKS primality test (Agrawal, Kayal, and Saxena 2004). This qualifies \(\sc{PRIMES}\) as feasibly decidable relative to the standards which are now widely accepted in complexity theory and algorithmic analysis (see Section 2.2).

Two related problems can be used to illustrate the sort of contrasts in difficulty which complexity theorists seek to analyze:

\(\sc{RELATIVE}\ \sc{PRIMALITY}\ \) Given natural numbers \(x\) and \(y\), do \(x\) and \(y\) possess greatest common divisor \(1\)? (I.e. are \(x\) and \(y\) relatively prime?)

\(\sc{FACTORIZATION}\ \) Given natural numbers \(x\) and \(y\), does there exist \(1 \lt d \leq y\) such that \(d \mid x\)?

\(\sc{RELATIVE}\ \sc{PRIMALITY}\) can be solved by applying Euclid’s greatest common divisor algorithm – i.e. on input \(y \leq x\), repeatedly compute the remainders \(r_0 = \text{rem}(x,y)\), \(r_1 = \text{rem}(y, r_0)\), \(r_2 = \text{rem}(r_0, r_1)\) …, until \(r_i = 0\) and then return ‘yes’ if \(r_{i-1} = 1\) and ‘no’ otherwise. It may be shown that the number of steps in this sequence is always less than or equal to \(5 \cdot \log_{10}(x)\).[2] This means that in order to determine if \(x\) and \(y\) are relatively prime, it suffices to calculate a number of remainders which is proportional to the number of digits in the decimal representation of the smaller of the two numbers. As this may also be accomplished by an efficient algorithm (e.g. long division), it may plausibly be maintained that if we are capable of inscribing a pair of numbers \(x,y\) – e.g. by writing their numerical representations in binary or decimal notation on a blackboard or by storing such numerals in the memory of a digital computer of current design – then either we or such a computer will also be able to carry out these algorithms in order to decide whether \(x\) and \(y\) are relatively prime. This is the hallmark of a feasibly decidable problem – i.e. one which can be decided in the ‘in practice’ sense of everyday concretely embodied computation.

\(\sc{FACTORIZATION}\) is a decision variant of the familiar problem of finding the prime factorization of a given number \(x\) – i.e. the unique sequence of primes \(p_i\) and exponents \(a_i\) such that \(x = p_1^{a_1} \cdot \ldots \cdot p_k^{a_k}\). It is not difficult to see that if there existed an efficient algorithm for deciding \(\sc{FACTORIZATION}\), then there would also exist an efficient algorithm for determining prime factorizations.[3] It is also easy to see that the function taking \(x\) to its prime factorization is effectively computable in the traditional sense of computability theory. For instance, it can be computed by the trial division algorithm.

In its simplest form, trial division operates by successively testing \(x\) for divisibility by each integer smaller than \(x\) and keeping track of the divisors which have been found thus far. As the number of divisions required by this procedure is proportional to \(x\) itself, it might at first seem that it is not a particularly onerous task to employ this method to factor numbers of moderate size using paper and pencil calculation – say \(x \lt 100000\). Note, however, that we conventionally denote natural numbers using positional notations such as binary or decimal numerals. A consequence of this is that the length of the expression which is typically supplied as an input to a numerical algorithm to represent an input \(x \in \mathbb{N}\) is proportional not to \(x\) itself, but rather to \(\log_b(x)\) where \(b \geq 2\) is the base of the notation system in question.[4] As a consequence it is possible to concretely inscribe positional numerals of moderate length which denote astronomically large numbers. For instance a binary numeral of 60 digits denotes a number which is larger than the estimated age of the universe in seconds and a binary numeral of 250 digits denotes a number which is larger than the estimated age of the universe in Planck times.[5]

There are thus natural numbers whose binary representations we can easily inscribe, but for which no human mathematician or foreseeable computing device can carry out the trial division algorithm. This again might not seem particularly troubling as this algorithm is indeed ‘naive’ in the sense that it admits to several obvious improvements – e.g. we need only test \(x\) for divisibility by the numbers \(2, \ldots, \sqrt{x}\) to find an initial factor, and of these we need only test those which are themselves prime (finitely many of which can be stored in a lookup table). Nonetheless, mathematicians have been attempting to find more efficient methods of factorization for several hundred years. The most efficient factorization algorithm yet developed is similar to the trial division algorithm in that it requires a number of primitive steps which grows roughly in proportion to \(x\) (i.e. the size of its input, as opposed to the length of its binary representation).[6] A consequence of these observations is that there exist concretely inscribable numbers – say on the order of 400 decimal digits – with the following properties: (i) we are currently unaware of their factorizations; and (ii) it is highly unlikely we could currently find them even if we had access to whatever combination of currently available computing equipment and algorithms we wish.

Like the problems introduced above, \(\sc{FACTORIZATION}\) is of considerable practical importance, perhaps most famously because the security of well known cryptographic protocols assume that it is intractable in the general case (see, e.g., Cormen, Leiserson, and Rivest 2005). But the foregoing observations still do not entail any fundamental limitation on our ability to know a number’s prime factorization. For it might still be hoped that further research will yield a more efficient algorithm which will allow us to determine the prime factorization of every number \(x\) in which we might take a practical interest. A comparison of Euclid’s algorithm and trial division again provides a useful context for describing the properties which we might expect such an algorithm to possess. For note that the prior observations suggest that we ought to measure the size of the input \(x \in \mathbb{N}\) to a numerical algorithm not by \(x\) itself, but rather in terms of the length of \(x\)’s binary representation. If we let \(\lvert x\rvert =_{df} \log_2(x)\) denote this quantity, then it is easy to see that the efficiency of Euclid’s algorithm is given by a function which grows proportionally to \(\lvert x\rvert^{c_1}\) for fixed \(c_1\) (in fact, \(c_1 = 1\)), whereas the efficiency of trial division is given by a function proportional \(c_2^{\lvert x\rvert}\) for fixed \(c_2\) (in fact, \(c_2 = 2\)).

The difference in the growth rate of these functions illustrates the contrast between polynomial time complexity – which is currently taken by complexity theorists as the touchstone of feasibility – and exponential time complexity – which has traditionally been taken as the touchstone of intractability. For instance, if it could be shown that no polynomial time factorization algorithm exists, it might then seem reasonable to conclude that \(\sc{FACTORIZATION}\) is a genuinely intractable problem.

Although it is currently unknown whether this is the case, contemporary results provide circumstantial evidence that \(\sc{FACTORIZATION}\) is indeed intractable (see Section 3.4.1). Stronger evidence can be adduced for the intractability of conjecture \(\sc{SAT}\), \(\sc{TSP}\), and \(\sc{INTEGER}\ \sc{PROGRAMMING}\) (and similarly for a great many other problems of practical interest in subjects like logic, graph theory, linear algebra, formal language theory, game theory, and combinatorics). The technical development of complexity theory aims to make such comparisons of computational difficulty precise and to show that the classification of certain problems as intractable admits to rigorous mathematical analysis.

1.2 Basic conventions

As we have just seen, in computational complexity theory a problem \(X\) is considered to be complex in proportion to the difficulty of carrying out the most efficient algorithm by which it may be decided. Similarly, one problem \(X\) is understood to be more complex (or harder) than another problem \(Y\) just in case \(Y\) possesses a more efficient decision algorithm than the most efficient algorithm for deciding \(X\). In order to make these definitions precise, a number of technical conventions are employed, many of which are borrowed from the adjacent fields of computability theory (e.g. Rogers 1987) and algorithmic analysis (e.g. Cormen, Leiserson, and Rivest 2005). It will be useful to summarize these before proceeding further.

-

A reference model of computation \(\mathfrak{M}\) is chosen to represent algorithms. \(\mathfrak{M}\) is assumed to be a reasonable model in the sense that it accurately reflects the computational costs of carrying out the sorts of informally specified algorithms which are encountered in mathematical practice. The deterministic Turing machine model \(\mathfrak{T}\) is traditionally selected for this purpose. (See Section 2.2 for further discussion of reasonable models and the justification of this choice.)

-

Decision problems are represented as sets consisting of objects which can serve as the inputs for a machine \(M \in \mathfrak{M}\). For instance, if \(\mathfrak{T}\) is used as the reference model then it is assumed that all problems \(X\) are represented as sets of finite binary strings – i.e. \(X \subseteq \{0,1\}^*\). This is accomplished by defining a mapping \(\ulcorner \cdot \urcorner:X \rightarrow \{0,1\}^*\) whose definition will depend on the type of objects which comprise \(X\). For instance, if \(X \subseteq \mathbb{N}\), then \(\ulcorner n \urcorner\) will typically be the binary numeral representing \(n\). And if \(X\) is a subset of \(\text{Form}_{\mathcal{L}}\) – i.e. the set of formulas over a formal language \(\mathcal{L}\) such as that of propositional logic – then \(\ulcorner \phi \urcorner\) will typically be a (binary) Gödel numeral for \(\phi\). Based on these conventions, problems \(X\) will henceforth be identified with sets of strings \(\{\ulcorner x \urcorner : x \in X\} \subseteq \{0,1\}^*\) (which are often referred to as languages) corresponding to their images under such an encoding.

-

A machine \(M\) is said to decide a language \(X\) just in case \(M\) computes the characteristic function of \(X\) relative to the standard input-output conventions for the model \(\mathfrak{M}\). For instance, a Turing machine \(T\) decides \(X\) just in case for all \(x \in \{0,1\}^*\), the result of applying \(T\) to \(x\) yields a halting computation ending in a designated accept state if \(x \in X\) and a designated reject state if \(x \not\in X\). A function problem is that of computing the values of a given function \(f:A \rightarrow B\). \(M\) is said to solve a function problem \(f:A \rightarrow B\) just in case the mapping induced by its operation coincides with \(f(x)\) – i.e. if \(M(x) = f(x)\) for all \(x \in A\) where \(M(x)\) denotes the result of applying machine \(M\) to input \(x\), again relative to the input-output conventions for the model \(\mathfrak{M}\).

-

For each problem \(X\), it is also assumed that an appropriate notion of problem size is defined for its instances. Formally, this is a function \(\lvert\cdot \rvert: X \rightarrow \mathbb{N}\) chosen so that the efficiency of a decision algorithm for \(X\) will varying uniformly in \(\lvert x\rvert\). As we have seen, if \(X \subseteq \mathbb{N}\), it is standard to take \(\lvert n\rvert = \log_2(n)\) – i.e. the number of digits (or length) of the binary numeral \(\ulcorner n \urcorner\) representing \(n\). Similarly if \(X\) is a class of logical formulas over a language \(\mathcal{L}\) (e.g. that or propositional or first-order logic), then \(\lvert\phi\rvert\) will typically be a measure of \(\phi\)’s syntactic complexity (e.g. the number of propositional variables or clauses it contains). If \(X\) is a graph theoretic problem its instances will consist of the encodings of finite graphs of the form \(G = \langle V,E \rangle\) where \(V\) is a set of vertices and \(E \subseteq V \times V\) is a set of edges. In this case \(\lvert G\rvert\) will typically be a function of the cardinalities of the sets \(V\) and \(E\).

-

The efficiency of a machine \(M\) is measured in terms of its time complexity – i.e. the number of basic steps \(time_M(x)\) required for \(M\) to halt and return an output for the input \(x\) (where the precise notion of ‘basic step’ will vary with the model \(\mathfrak{M}\)). This measure may be converted into a function of type \(\mathbb{N} \rightarrow \mathbb{N}\) by considering \(t_{M}(n) = \max \{time_M(x) : \lvert x\rvert = n \}\) – i.e. the worst case time complexity of \(M\) defined as the maximum number of basic steps required for \(M\) to halt and return an output for all inputs \(x\) of size \(n\). The worst case space complexity of \(M\) – denoted \(s_{M}(n)\) – is defined similarly – i.e. the maximum number of tape cells (or other form of memory locations) visited or written to in the course of \(M\)’s computation for all inputs of size \(n\).

-

The efficiency of two machines is compared according to the order of growth of their time and space complexities. In particular, given a function \(f:\mathbb{N} \rightarrow \mathbb{N}\) we define its order of growth to be \(O(f(n)) = \{g(n) : \exists c \exists n_0 \forall n \geq n_0(g(n) \lt c \cdot f(n)) \}\) – i.e. the set of all functions which are asymptotically bounded by \(f(n)\), ignoring scalar factors. For instance, for all fixed \(k, c_1, c_2\ \in \mathbb{N}\) the following functions are all in \(O(n^2)\): the constant \(k\)-function, \(\log(n), n, c_1 \cdot n^2 + c_2\). However \(\frac{1}{1000}n^3 \not\in O(n^2)\). A machine \(M_1\) is said to have lower time complexity (or to run faster than) another machine \(M_2\) if \(t_{M_1}(n) \in O(t_{M_2}(n))\), but not conversely. Space complexity comparisons between machines are performed similarly.

-

The time and space complexity of a problem \(X\) are measured in terms of the worst case time and space complexity of the asymptotically most efficient algorithm for deciding \(X\). In particular, we say that \(X\) has time complexity \(O(t(n))\) if the worst case time complexity of the most time efficient machine \(M\) deciding \(X\) is in \(O(t(n))\). Similarly, \(Y\) is said to be harder to decide (or more complex) than \(X\) if the time complexity of \(X\) is asymptotically bounded by the time complexity of \(Y\). The space complexity of a problem is defined similarly.

A complexity class can now be defined to be the set of problems for which there exists a decision procedure with a given running time or running space complexity. For instance, the class \(\textbf{TIME}(f(n))\) denotes the class of problems with time complexity \(f(n)\). \(\textbf{P}\) – or polynomial time – is used to denote the union of the classes \(\textbf{TIME}(n^k)\) for \(k \in \mathbb{N}\) with respect to the reference model \(\mathfrak{T}\). \(\textbf{P}\) hence subsumes all problems for which there exists a decision algorithm which can be implemented by a Turing machine whose time complexity is of polynomial order of growth. \(\textbf{SPACE}(f(n))\) and \(\textbf{PSPACE}\) – or polynomial space – is defined similarly. Several other complexity classes we will consider below (e.g. \(\textbf{NP}\), \(\textbf{BPP}\), \(\textbf{BQP}\)) are defined by changing the reference model of computation, the definition of what it means for a machine to accept or reject an input, or both.

1.3 Distinguishing notions of complexity

With these conventions in place, we can now record several respects in which the meaning assigned to the word ‘complexity’ in computational complexity theory differs from that which is assigned to this term in several other fields. In computational complexity theory, it is problems – i.e. infinite sets of finite combinatorial objects like natural numbers, formulas, graphs – which are assigned ‘complexities’. As we have just seen, such assignments are based on the time or space complexity of the most efficient algorithms by which membership in a problem can be decided. A distinct notion of complexity is studied in Kolmogorov complexity theory (e.g., Li and Vitányi 1997). Rather than studying the complexity of sets of mathematical objects, this subject attempts to develop a notion of complexity which is applicable to individual combinatorial objects – e.g. specific natural numbers, formulas, graphs, etc. For instance, the Kolmogorov complexity of a finite string \(x \in \{0,1\}^*\) is defined to be the size of the smallest program for a fixed universal Turing machine which outputs \(x\) given the empty string as input. In this setting, the ‘complexity’ of an object is thus viewed as a measure of the extent to which its description can be compressed algorithmically.

Another notion of complexity is studied in descriptive complexity theory (e.g., Immerman 1999). Like computational complexity theory, descriptive complexity theory also seeks to classify the complexity of infinite sets of combinatorial objects. However, the ‘complexity’ of a problem is now measured in terms of the logical resources which are required to define its instances relative to the class of all finite structures for an appropriate signature. As we will see in Section 4.4 this approach often yields alternative characterizations of the same classes studied in computational complexity theory.

Yet another subject related to computational complexity theory is algorithmic analysis (e.g. Knuth (1973), Cormen, Leiserson, and Rivest 2005). Like computational complexity theory, algorithmic analysis studies the complexity of problems and also uses the time and space measures \(t_M(n)\) and \(s_M(x)\) defined above. The methodology of algorithmic analysis is different from that of computational complexity theory in that it places primary emphasis on gauging the efficiency of specific algorithms for solving a given problem. On the other hand, in seeking to classify problems according to their degree of intrinsic difficulty, complexity theory must consider the efficiency of all algorithms for solving a problem. Complexity theorists thus make greater use of complexity classes such as \(\textbf{P},\textbf{NP}\), and \(\textbf{PSPACE}\) whose definitions are robust across different choices of reference model. In algorithmic analysis, on the other hand, algorithms are often characterized relative to the finer-grained hierarchy of running times \(\log_2(n), n, n \cdot \log_2(n), n^2, n^3, \ldots\) within \(\textbf{P}\).[7]

2. The origins of complexity theory

2.1 Church’s Thesis and effective computability

The origins of computational complexity theory lie in computability theory and early developments in algorithmic analysis. The former subject began with the work of Gödel, Church, Turing, Kleene, and Post originally undertaken during the 1930s in attempt to answer Hilbert’s Entscheidungsproblem – i.e. is the problem \(\sc{FO}\text{-}\sc{VALID}\) of determining whether a given formula of first-order logic is valid decidable? At this time, the concept of decidability at issue was that of effective decidability in principle – i.e. decidability by a rule governed method (or effective procedure) each of whose basic steps can be individually carried out by a finitary mathematical agent but whose execution may require an unbounded number of steps or quantity of memory space.

We now understand the Entscheidungsproblem to have been answered in the negative by Church (1936a) and Turing (1937). The solution they provided can be reconstructed as follows: 1) a mathematical definition of a model of computation \(\mathfrak{M}\) was presented; 2) an informal argument was given to show that \(\mathfrak{M}\) contains representatives of all effective procedures; 3) a formal argument was then given to show that no machine \(M \in \mathfrak{M}\) decides \(\sc{FO}\text{-}\sc{VALID}\). Church (1936b) took \(\mathfrak{M}\) to be the class of terms \(\Lambda\) in the untyped lambda calculus, while Turing took \(\mathfrak{M}\) to correspond the class of \(\mathfrak{T}\) of Turing machines. Church also showed the class \(\mathcal{F}_{\Lambda}\) of lambda-definable functions is extensionally coincident with the class \(\mathcal{F}_{\mathfrak{R}}\) of general recursive functions (as defined by Gödel 1986b and Kleene 1936). Turing then showed that the class \(\mathcal{F}_{\mathfrak{T}}\) of functions computable by a Turing machine was extensionally coincident with \(\mathcal{F}_{\Lambda}\).

The extensional coincidence of the classes \(\mathcal{F}_{\Lambda}, \mathcal{F}_{\mathfrak{R}}\), and \(\mathcal{F}_{\mathfrak{T}}\) provided the first evidence for what Kleene (1952) would later dub Church’s Thesis – i.e.

- (CT) A function \(f:\mathbb{N}^k \rightarrow \mathbb{N}\) is effectively computable if and only if \(f(x_1, \ldots, x_k)\) is recursive.

CT can be understood to assign a precise epistemological significance to Church and Turing’s negative answer to the Entscheidungsproblem. For if it is acknowledged that \(\mathcal{F}_{\mathfrak{R}}\) (and hence also \(\mathcal{F}_{\Lambda}\) and \(\mathcal{F}_{\mathfrak{T}}\)) contain all effectively computable functions, it then follows that a problem \(X\) can be shown to be effectively undecidable – i.e. undecidable by any algorithm whatsoever, regardless of its efficiency – by showing that the characteristic function \(c_X(x)\) of \(X\) is not recursive. CT thus allows us to infer from the fact that problems \(X\) for which \(c_X(x)\) can be proven to be non-recursive – e.g. the Halting Problem (Turing 1937) or the word problem for semi-groups (Post 1947) – are not effectively decidable.

It is evident, however, that our justification for such classifications can be no stronger than the stock we place in CT itself. One form of evidence often cited in favor of the thesis is that the coincidence of the class of functions computed by the members of \(\Lambda, \mathfrak{R}\) and \(\mathfrak{T}\) points to the mathematical robustness of the class of recursive functions. Two related forms of inductive evidence are as follows: (i) many other independently motivated models of computation have subsequently been defined which describe the same class of functions; (ii) the thesis is generally thought to yield a classification of functions which has thus far coincided with our ability to compute them in the relevant ‘in principle’ sense.

But even if the correctness of CT is granted, it is also important to keep in mind that the concept of computability which it seeks to analyze is an idealized one which is divorced in certain respects from our everyday computational practices. For note that CT will classify \(f(x)\) as effectively computable even if it is only computable by a Turing machine \(T\) with time and space complexity functions \(t_T(n)\) and \(s_T(n)\) whose values may be astronomically large even for small inputs.[8]

Examples of this sort notwithstanding, it is often claimed that Turing’s original characterization of effective computability provides a template for a more general analysis of what it could mean for a function to be computable by a mechanical device. For instance, Gandy (1980) and Sieg (2009) argue that the process by which Turing originally arrived at the definition of a Turing machine can be generalized to yield an abstract characterization of a mechanical computing device. Such characterizations may in turn be understood as describing the properties which a physical system would have to obey in order for it to be concretely implementable. For instance the requirement that a Turing machine may only access or modify the tape cell which is currently being scanned by its read-write head may be generalized to allow modification to a machine’s state at a bounded distance from one or more computational loci. Such a requirement can in turn be understood as reflecting the fact that classical physics does not allow for the possibility of action at a distance.

On this basis CT is also sometimes understood as making a prediction about which functions are physically computable – i.e. are such that their values can be determined by measuring the states of physical systems which we might hope to use as practical computing devices. We might thus hope that further refinements of the Gandy-Sieg conditions (potentially along the lines of the proposals of Leivant (1994) or Bellantoni and Cook (1992) discussed in Section 4.5) will eventually provide insight as to why some mathematical models of computation appear to yield a more accurate account than others of the exigencies of concretely embodied computation which complexity theory seeks to analyze.

2.2 The Cobham-Edmond’s Thesis and feasible computability

Church’s Thesis is often cited as a paradigm example of a case in which mathematical methods have been successfully employed to provide a precise analysis of an informal concept – i.e. that of effective computability. It is also natural to ask whether the concept of feasible computability described in Section 1 itself admits a mathematical analysis similar to Church’s Thesis.

We saw above that \(\sc{FACTORIZATION}\) is an example of a problem of antecedent mathematical and practical interest for which more efficient algorithms have historically been sought. The task of efficiently solving combinatorial problems of the sort exemplified by \(\sc{TSP}\), \(\sc{INTEGER}\ \sc{PROGRAMMING}\) and \(\sc{PERFECT} \ \sc{MATCHING}\) grew in importance during the 1950s and 1960s due to their role in scientific, industrial, and clerical applications. At the same time, the availability of digital computers began to make many such problems mechanically solvable on a mass scale for the first time.

This era also saw several theoretical steps which heralded the attempt to develop a general theory of feasible computability. The basic definitions of time and space complexity for the Turing machine model were first systematically formulated by Hartmanis and Stearns (1965) in a paper called “On the Computational Complexity of Algorithms”. This paper is also the origin of the so-called Hierarchy Theorems (see Section 3.2) which demonstrate that a sufficiently large increase in the time or space bound for a Turing machine computation allows more problems to be decided.

A systematic exploration of the relationships between different models of computation was also undertaken during this period. This included variants of the traditional Turing machine model with additional heads, tapes, and auxiliary storage devices such as stacks. Another important model introduced at about this time was the random access (or RAM) machine \(\mathfrak{A}\) (see, e.g, Cook and Reckhow 1973). This model provides a simplified representation of the so-called von Neumann architecture on which contemporary digital computers are based. In particular, a RAM machine \(A\) consists of a finite sequence of instructions (or program) \(\langle \pi_1,\ldots,\pi_n \rangle\) expressing how numerical operations (typically addition and subtraction) are to be applied to a sequence of registers \(r_1,r_2, \dots\) in which values may be stored and retrieved directly by their index.

Showing that one of these models \(\mathfrak{M}_1\) determines the same class of functions as some reference model \(\mathfrak{M}_2\) (such as \(\mathfrak{T}\)) requires showing that for all \(M_1 \in \mathfrak{M}_1\), there exists a machine \(M_2 \in \mathfrak{M}_2\) which computes the same function as \(M_1\) (and conversely). This is typically accomplished by constructing \(M_2\) such that each of the basic steps of \(M_1\) is simulated by one or more basic steps of \(M_2\). Demonstrating the coincidence of the classes of functions computed by the models \(\mathfrak{M}_1\) and \(\mathfrak{M}_2\) thus often yields additional information about their relative efficiencies. For instance, it is generally possible to extract from the definition of a simulation between \(\mathfrak{M}_1\) and \(\mathfrak{M}_2\) time and space overhead functions \(o_t(x)\) and \(o_s(x)\) such that if the value of a function \(f(x)\) can be computed in time \(t(n)\) and space \(s(n)\) by a machine \(M_1 \in \mathfrak{M}_1\), then it can also be computed in time \(o_t(t(n))\) and space \(o_s(s(n))\) by some machine \(M_2 \in \mathfrak{M}_2\).

For a wide class of models, a significant discovery was that efficient simulations can be found. For instance, it might at first appear that the model \(\mathfrak{A}\) allows for considerably more efficient implementations of familiar algorithms than does the model \(\mathfrak{T}\) in virtue of the fact that a RAM machine can access any of its registers in a single step whereas a Turing machine may move its head only a single cell at a time. Nonetheless it can be shown that there exists a simulation of the RAM model by the Turing machine model with cubic time overhead and constant space overhead – i.e. \(o_t(t(n)) \in O(t(n)^3)\) and \(o_s(s(n)) \in O(s(n))\) (Slot and Emde Boas 1988). On the basis of this and related results, Emde Boas (1990) formulated the following proposal to characterize the relationship between reference models which might be used for defining time and space complexity:

Invariance Thesis ‘Reasonable’ models of computation can simulate each other within a polynomially bounded overhead in time and a constant-factor overhead in space.

The 1960s also saw a number of advances in algorithmic methods applicable to problems in fields like graph theory and linear algebra. One example was a technique known as dynamic programming. This method can sometimes be used to find efficient solutions to optimization problems which ask us to find an object which minimizes or maximizes a certain quantity from a range of possible solutions. An algorithm based on dynamic programming solves an instance of such a problem by recursively breaking it into subproblems, whose optimal values are then computed and stored in a manner which can then be efficiently reassembled to achieve an optimal overall solution.

Bellman (1962) showed that the naive time complexity of \(O(n!)\) for \(\sc{TSP}\) could be improved to \(O(2^n n^2)\) via the use of dynamic programming. The question thus arose whether it was possible to improve upon such algorithms further, not only for \(\sc{TSP}\), but also for other problem such as \(\sc{SAT}\) for which efficient algorithms had been sought but were not known to exist. In order to appreciate what is at stake with this question, observe that the naive algorithm for \(\sc{TSP}\) works as follows: 1) enumerate the set \(S_G\) of all possible tours in \(G\) and compute their weights; 2) check if the cost of any of these tours is \(\leq b\). Note, however, that if \(G\) has \(n\) nodes, then \(S_G\) may contain as many as \(n!\) tours.

This is an example of a so-called brute force algorithm -- i.e. one which solves a problem by exhaustively enumerating all possible solutions and then successively testing whether any of them are correct. Somewhat more precisely, a problem \(X\) is said to admit a brute force solution if there exists a feasibly decidable relation \(R_X\) and a family of uniformly defined finite sets \(S_x\) such that \(x \in X\) if and only if there exists a feasibly sized witness \(y \in S_x\) such that \(R_X(x,y)\). Such a \(y\) is often called a certificate for \(x\)’s membership in \(X\). The procedure of deciding \(x \in X\) by exhaustively searching through all of the certificates \(y_0, y_1, \ldots \in S_x\) and checking if \(R_X(x,y)\) holds at each step is known as a brute force search. For instance, the membership of a propositional formula \(\phi\) with atomic letters among \(P_0,\ldots, P_{n-1}\) in the problem \(\sc{SAT}\) can be established by searching through the set \(S_{\phi}\) of possible valuation functions of type \(v:\{0,\ldots,n-1\} \rightarrow \{0,1\}\), to determine if there exists \(v \in S_{\phi}\) such that \(\llbracket \phi \rrbracket_v = 1\). Note, however, that since there are \(2^n\) functions in \(S_{\phi}\), this yields only an exponential time decision algorithm.

Many other problems came to light in the 1960s and 1970s which, like \(\sc{SAT}\) and \(\sc{TSP}\), can easily be seen to possess exponential time brute force algorithms but for which no polynomial time algorithm could be found. On this basis, it gradually came to be accepted that a sufficient condition for a decidable problem to be intractable is that the most efficient algorithm by which it can be solved has at best exponential time complexity. The corresponding positive hypothesis that possession of a polynomial time decision algorithm should be regarded as sufficient grounds for regarding a problem as feasibly decidable was first put forth by Cobham (1965) and Edmonds (1965a).

Cobham began by citing the evidence motivating the Invariance Thesis as suggesting that the question of whether a problem admits a polynomial time algorithm is independent of which model of computation is used to measure time complexity across a broad class of alternatives. He additionally presented a machine-independent characterization of the class \(\textbf{FP}\) – i.e. functions \(f:\mathbb{N}^k \rightarrow \mathbb{N}\) which are computable in polynomial time – in terms of a restricted form of primitive recursive definition known as bounded recursion on notation (see Section 4.5).

Edmonds (1965a) first proposed that polynomial time complexity could be used as a positive criterion of feasibility – or, as he put it, possessing a “good algorithm” – in a paper in which he showed that a problem which might a priori be thought to be solvable only by brute force search (a generalization of \(\sc{PERFECT}\ \sc{MATCHING}\) from above) was decidable by a polynomial time algorithm. Paralleling a similar study of brute force search in the Soviet Union, in a subsequent paper Edmonds (1965b) also provided an informal description of the complexity class \(\textbf{NP}\). In particular, he characterized this class as containing those problems \(X\) for which there exists a “good characterization” – i.e. \(X\) is such that the membership of an instance \(x\) may be verified by using brute force search to find a certificate \(y\) of feasible size which certifies \(x\)’s membership in \(X\).[9]

These observations provided the groundwork for has come to be known as the Cobham-Edmonds Thesis (see, e.g., Brookshear et al. 2006; Goldreich 2010; Homer and Selman 2011):

- (CET) A function \(f:\mathbb{N}^k \rightarrow \mathbb{N}\) is feasibly computable if and only if \(f(\vec{x})\) is computed by some machine \(M\) such that \(t_M(n) \in O(n^k)\) where \(k\) is fixed and \(M\) is drawn from a reasonable model of computation \(\mathfrak{M}\).

CET provides a characterization of the notion of a feasibly computable function discussed in Section 1 which is similar in form to Church’s Thesis. The corresponding thesis for decision problems holds that a problem is feasibly decidable just in case it is in the class \(\textbf{P}\). As just formulated, however, CET relies on the informal notion of a reasonable model of computation. A more precise formulation can be given by replacing this notion with a specific model such as \(\mathfrak{T}\) or \(\mathfrak{A}\) from the first machine class as discussed in Section 3.1 below.

CET is now widely accepted within theoretical computer science for reasons which broadly parallel those which are traditionally given in favor of Church’s Thesis. For instance, not only is the definition of the class \(\textbf{FP}\) stable across different machine-based models of computation in the manner highlighted by the Invariance Thesis, but there also exist several machine independent characterizations of this class which we will consider in Section 4. Such results testify to the robustness of the definition of polynomial time computability.

It is also possible to make a case for CET which parallels the quasi-inductive argument for CT. For in cases where we can compute the values of a function (or decide a problem) uniformly for the class of instances we are concerned with in practice, this is typically so precisely because we have discovered a polynomial time algorithm which can be implemented on current computing hardware (and hence also as a Turing machine). And in instances where we are currently unable to uniformly compute the values of a function (or decide a problem) for all arguments in which we take interest, it is typically the case that we have not discovered a polynomial time algorithm (and in many cases may also possess circumstantial evidence that such an algorithm cannot exist).

Nonetheless, there are several features of CET which suggest it should be regarded as less well established than CT. Paramount amongst these is that we do not as yet know whether \(\textbf{P}\) is properly contained in complexity classes such as \(\textbf{NP}\) which appear to contain highly intractable problems. The following additional caveats are also often issued with respect to the claim that the class of computational problems we can decide in practice neatly aligns with those decidable in polynomial time using a conventional deterministic Turing machine.[10]

-

CET classifies as feasible those functions whose most efficient algorithms have time complexity \(c \cdot n^k\) for arbitrarily large scalar factors \(c\) and exponents \(k\). This means that if a function is only computable by an algorithm with time complexity \(2^{1000} \cdot n\) or \(n^{1000}\), it would still be classified as feasible. This is so despite the fact that we would be unable to compute its values in practice for most or all inputs.

-

CET classifies as infeasible functions whose most efficient algorithms have time complexity which is of super-polynomial order of growth inclusive of, e.g., \(2^{.000001n}\) or \(n^{\log(\log(\log(n)))}\). However, such algorithms would run more efficiently when applied to the sorts of inputs we are likely to be concerned with than an algorithm with time complexity of (say) \(O(n^2)\).

-

There exist problems for which the most efficient known decision algorithm has exponential time complexity in the worst case (and in fact are known to be \(\textbf{NP}\)-hard in the general case – see Section 3.2) but which operate in polynomial time either in the average case or for a large subclass of problem instances of practical interest. Commonly cited examples include \(\sc{SAT}\) (e.g. Cook and Mitchell 1997), as well as some problems from graph theory (e.g. Scott and Sorkin 2006), and computational algebra (e.g. Kapovich et al. 2003).

-

Many of the problems studied in complexity theory are decision variants of optimization problems. In cases where these problems are \(\textbf{NP}\)-complete, it is a consequence of CET (together with \(\textbf{P} \neq \textbf{NP}\) – see Section 4.1) that they do not admit feasible exact algorithms – i.e. algorithms which are guaranteed to always find a maximal or minimal solution. Nonetheless, it is known that a significant subclass of \(\textbf{NP}\)-complete problems possess polynomial time approximation algorithms -- i.e. algorithms which are guaranteed to find a solution which is within a certain constant factor of optimality. For instance the optimization version of the problem \(\sc{VERTEX}\ \sc{COVER}\) defined below possesses a simple polynomial time approximation algorithm which allows us to find a solution (i.e. a set of vertices including at least one from each edge of the input graph) which is no larger than twice the size of an optimal solution.

-

There are non-classical models of computation which are hypothesized to yield a different classification of problems with respect to the appropriate definition of ‘polynomial time computability’. A notable example is the existence of a procedure known as Shor’s algorithm which solves the problem \(\sc{FACTORIZATION}\) in polynomial time relative the a model of computation known as the Quantum Turing Machine (see Section 3.4.3).

3. Technical development

3.1 Deterministic and non-deterministic models of computation

According to the Cobham-Edmonds Thesis the complexity class \(\textbf{P}\) describes the class of feasibily decidable problems. As we have just seen, this class is defined in terms of the reference model \(\mathfrak{T}\) in virtue of the assumption that it is a ‘reasonable’ model of computation. Several other models of computation are also studied in complexity theory not because they are presumed to be accurate representations of the costs of concretely embodied computation, but rather because they help us better understand the limits of feasible computability. The most important of these is the non-deterministic Turing machine model \(\mathfrak{N}\).

Recall that a deterministic Turing machine \(T \in \mathfrak{T}\) can be represented as a tuple \(\langle Q,\Sigma,\delta,s \rangle\) where \(Q\) is a finite set of internal states, \(\Sigma\) is a finite tape alphabet, \(s \in Q\) is \(T\)’s start state, and \(\delta\) is a transition function mapping state-symbol pairs \(\langle q,\sigma \rangle\) into state-action pairs \(\langle q,a \rangle\). Here \(a\) is chosen from the set of actions \(\{\sigma,\Leftarrow,\Rightarrow \}\) – i.e. write the symbol \(\sigma \in \Sigma\) on the current square, move the head left, or move the head right. Such a function is hence of type \(\delta: Q \times \Sigma \rightarrow Q \times \alpha\). On the other hand, a non-deterministic Turing machine \(N \in \mathfrak{N}\) is of the form \(\langle Q,\Sigma,\Delta,s \rangle\) where \(Q,\Sigma\), and \(s\) are as before but \(\Delta\) is now only required to be a relation – i.e. \(\Delta \subseteq (Q \times \Sigma) \times (Q \times \alpha)\). As a consequence, a machine configuration in which \(N\) is in state \(q\) and reading symbol \(\sigma\) can lead to finitely many distinct successor configurations – e.g. it is possible that \(\Delta\) relates \(\langle q,\sigma \rangle\) to both \(\langle q',a' \rangle\) and \(\langle q'',a'' \rangle\) for distinct states \(q'\) and \(q''\) and actions \(a'\) and \(a''\).[11]

This difference in the definition of deterministic and non-deterministic machines also necessitates a change in the definition of what it means for a machine to decide a language \(X\). Recall that for a deterministic machine \(T\), a computation sequence starting from an initial configuration \(C_0\) is a finite or infinite sequence of machine configurations \(C_0,C_1,C_2, \ldots\) Such a configuration consists of a specification of the contents of \(T\)’s tape, internal state, and head position. \(C_{i+1}\) is the unique configuration determined by applying the transition function \(\delta\) to the active state-symbol pair encoded by \(C_i\) and is undefined if \(\delta\) is itself undefined on this pair (in which case the computation sequence is finite, corresponding to a halting computation). If \(N\) is a non-deterministic machine, however, there may be more than one configuration which is related to the current configuration by \(\Delta\) at the current head position. In this case, a finite or infinite sequence \(C_0,C_1,C_2, \ldots\) is said to be a computation sequence for \(N\) from the initial configuration \(C_0\) just in case for all \(i \geq 0\), \(C_{i+1}\) is among the configurations which are related by \(\Delta\) to \(C_i\) (and is similarly undefined if no such configuration exists).

We now also redefine what is required for the machine \(N\) to decide a language \(X\):

-

\(N\) always halts – i.e. for all initial configurations \(C_0\), the computation sequence \(C_0,C_1,C_2, \ldots\) of \(N\) is of finite length;

-

if \(x \in X\) and \(C_0(x)\) is the configuration of \(N\) encoding \(x\) as input, then there exists a computation sequence \(C_0(x),C_1(x), \ldots, C_n(x)\) of \(N\) such that \(C_n(x)\) is an accepting state;

-

if \(x \not\in X\), then all computation sequences \(C_0(x),C_1(x), \ldots, C_n(x)\) of \(N\) are such that \(C_{n}(x)\) is a rejecting state.

Note that this definition treats accepting and rejecting computations asymmetrically. For if \(x \in X\), some of \(N\)’s computation sequences starting from \(C_0(x)\) may still lead to rejecting states as long as it least one leads to an accepting state. On the other hand, if \(x \not\in X\), then all of \(N\)’s computations from \(C_0(x)\) are required to lead to rejecting states.

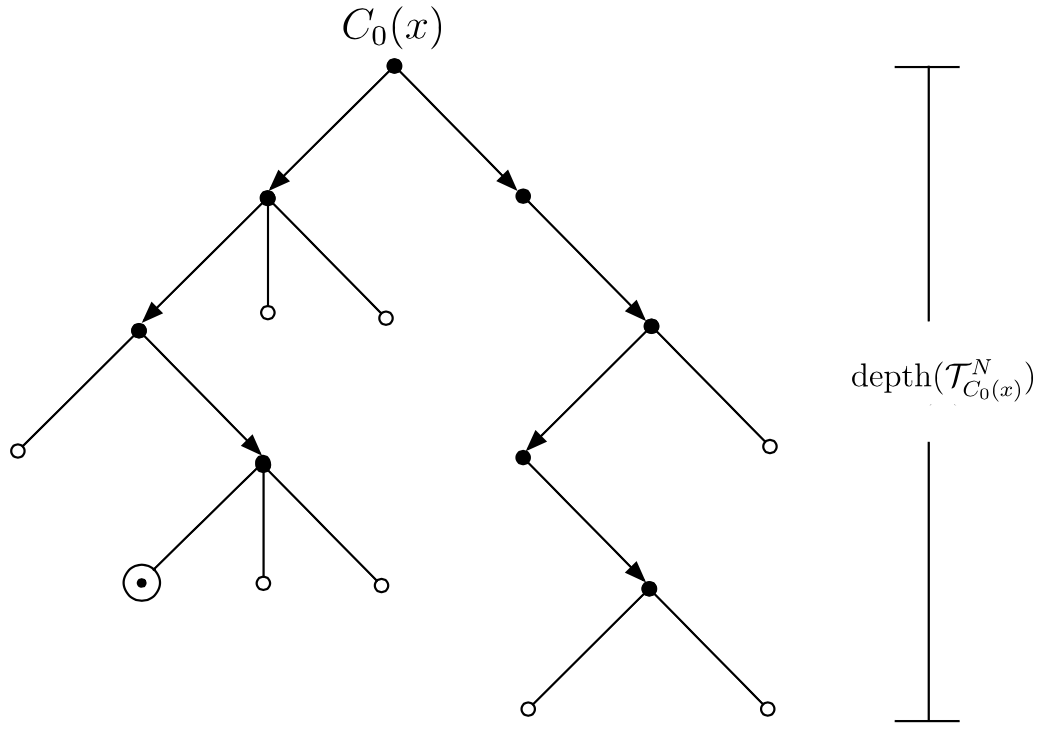

Non-deterministic machines are sometimes described as making undetermined ‘choices’ among different possible successor configurations at various points during their computation. But what the foregoing definitions actually describe is a tree \(\mathcal{T}^N_{C_0}\) of all possible computation sequences starting from a given configuration \(C_0\) for a deterministic machine \(N\) (an example is depicted in Figure 1). Given the asymmetry just noted, it will generally be the case that all of the branches in \(\mathcal{T}^N_{C_0(x)}\) must be surveyed in order to determine \(N\)’s decision about the input \(x\).

Figure 1. A potential computation tree \(\mathcal{T}^N_{C_0(x)}\) for a non-deterministic Turing machine \(N\) starting from the initial configuration \(C_0(x)\). Accepting states are labeled \(\odot\) and rejecting states \(\circ\). Note that although this tree contains an accepting computation sequence of length 4, its maximum depth of 5 still counts towards the determination of \(time_N(n) = \max \{\text{depth}(\mathcal{T}^N_{C_0(x)})\ :\ \lvert x\rvert = n\)}.

The time complexity \(t_N(n)\) of a non-deterministic machine \(N\) is the maximum of the depths of the computation trees \(\mathcal{T}^N_{C_0(x)}\) for all inputs \(x\) such that \(\lvert x\rvert = n\). Relative to this definition, non-deterministic machines can be used to implement many brute force algorithms in time polynomial in \(n\). For instance the \(\sc{SAT}\) problem can be solved by a non-deterministic machine which on input \(\phi\) uses part of its tape to non-deterministically construct (or ‘guess’) a string representing a valuation \(v\) assigning a truth value to each of \(\phi\)’s \(n\) propositional variables and then computes \(\llbracket \phi \rrbracket_v\) using the method of truth tables (which is polynomial in \(n\)). As \(\phi \in \sc{SAT}\) just in case a satisfying valuation exists, this is a correct method for deciding \(\sc{SAT}\) relative to conventions (i)–(iii) from above. This means that \(\sc{SAT}\) can be solved in polynomial time relative to \(\mathfrak{N}\).

This example also illustrates why adding non-determinism to the original deterministic model \(\mathfrak{T}\) does not enlarge the class of decidable problems. For if \(N \in \mathfrak{N}\) decides \(X\), then it is possible to construct a machine \(T_N \in \mathfrak{T}\) which also decides \(X\), by successively simulating each of the finitely many possible sequences of non-deterministic choices \(N\) might have made in the course of its computation.[12] It is evident that if \(N\) has time complexity \(f(n)\), then \(T_N\) must generally check \(O(k^{f(n)})\) sequences of choices (for fixed \(k\)) in order to determine the output of \(N\) for an input of length \(n\). While the availability of such simulations shows that the class of languages decided by \(\mathfrak{N}\) is the same as that decided by \(\mathfrak{T}\) – i.e. exactly the recursive ones – it also illustrates why the polynomial time decidability of a language by a non-deterministic Turing machine only guarantees that the language is decidable in exponential time by a deterministic Turing machine.

In order to account for this observation, Emde Boas (1990) introduced a distinction between two classes of models of computation which he labels the first and second machine classes. The first machine class contains the basic Turing machine model \(\mathfrak{T}\) as well as other models which satisfy the Invariance Thesis with respect to this model. As we have seen, this includes the multi-tape and multi-head Turing machine models as well as the RAM model. On the other hand, the second machine class is defined to include those deterministic models whose members can be used to efficiently simulate non-deterministic computation. This can be shown to include a number of standard models of parallel computation such as the PRAM machine which will be discussed in Section 3.4.3. For such models the definitions of polynomial time, non-deterministic polynomial time, and polynomial space coincide.

Experience has borne out that members of the first machine class are the ones which we should consider reasonable models of computation in the course of formulating the Cobham-Edmonds Thesis. It is also widely believed that members of the second machine class do not provide realistic representations of the complexity costs involved in concretely embodied computation (Chazelle and Monier (1983), Schorr (1983), Vitányi (1986)). Demonstrating this formally would, however, require proving separation results for complexity classes which are currently unresolved. Thus while it is widely believed that the second machine class properly extends the first, this is currently an open problem.

3.2 Complexity classes and the hierarchy theorems

Recall that a complexity class is a set of languages all of which can be decided within a given time or space complexity bound \(t(n)\) or \(s(n)\) with respect to a fixed model of computation. To avoid pathologies which would arise were we to define complexity classes for ‘unnatural’ time or space bounds (e.g. non-recursive ones) it is standard to restrict attention to complexity classes defined when \(t(n)\) and \(s(n)\) are time or space constructible. \(t(n)\) is said to be time constructible just in case there exists a Turing machine which on input consisting of \(1^n\) (i.e. a string of \(n\) 1s) halts after exactly \(t(n)\) steps. Similarly, \(s(n)\) is said to be space constructible just in case there exists a Turing machine which on input \(1^n\) halts after having visited exactly \(s(n)\) tape cells. It is easy to see that the time and space constructible functions include those which arise as the complexities of algorithms which are typically considered in practice – \(\log(n), n^k, 2^n, n!\), etc.

When we are interested in deterministic computation, it is conventional to base the definitions of the classical complexity classes defined in this section on the model \(\mathfrak{T}\). Supposing that \(t(n)\) and \(s(n)\) are respectively time and space constructible functions, the classes \(\textbf{TIME}(t(n))\) and \(\textbf{SPACE}(s(n))\) are defined as follows:

\[ \begin{align*} \textbf{TIME}(t(n)) = \{X \subseteq \{0,1\}^* \ : \ \exists & T \in \mathfrak{T} \forall n(time_T(n) \leq t(n)) \\ & \text{and } T \text{ decides } X \} \\ \textbf{SPACE}(s(n))= \{X \subseteq \{0,1\}^* \ : \ \exists & T \in \mathfrak{T}\forall n(space_T(n) \leq s(n)) \\ & \text{and } T \text{ decides } X \} \end{align*}\]Since all polynomials in the single variable \(n\) are of order \(O(n^k)\) for some \(k\), the classes known as polynomial time and polynomial space are respectively defined as \(\textbf{P} = \bigcup_{k \in \mathbb{N}} \textbf{TIME}(n^k)\) and \(\textbf{PSPACE} = \bigcup_{k \in \mathbb{N}} \textbf{SPACE}(n^k)\). It is also standard to introduce the classes \(\textbf{EXP} = \bigcup_{k \in \mathbb{N}} \textbf{TIME}(2^{n^k})\) (exponential time) and \(\textbf{L} = \textbf{SPACE}(\log(n))\) (logarithmic space).

In addition to classes based on the deterministic model \(\mathfrak{T}\), analogous non-deterministic complexity classes based on the model \(\mathfrak{N}\) are also studied. In particular, the classes \(\textbf{NTIME}(t(n))\) and \(\textbf{NSPACE}(s(n))\) are defined as follows:

\[ \begin{align*} \textbf{NTIME}(t(n)) = \{X \subseteq \{0,1\}^* \ : \ \exists & N \in \mathfrak{N} \forall n(time_N(n) \leq t(n)) \\ & \text{and } N \text{ decides } X \} \\ \textbf{NSPACE}(s(n)) = \{X \subseteq \{0,1\}^* \ : \ \exists & N \in \mathfrak{N} \forall n(space_N(n) \leq s(n)) \\ & \text{and } N \text{ decides } X \} \end{align*} \]The classes \(\textbf{NP}\) (non-deterministic polynomial time), \(\textbf{NPSPACE}\) (non-deterministic polynomial space), \(\textbf{NEXP}\) (non-deterministic exponential time), and \(\textbf{NL}\) (non-deterministic logarithmic space) are defined analogously to \(\textbf{P}\), \(\textbf{NP}\), \(\textbf{EXP}\) and \(\textbf{L}\) – e.g. \(\textbf{NP} = \bigcup_{k \in \mathbb{N}} \textbf{NTIME}(n^k)\).

Many classical results and important open questions in complexity theory concern the inclusion relationships which hold among these classes. Central among these are the so-called Hierarchy Theorems which demonstrate that the classes \(\textbf{TIME}(t(n))\) form a proper hierarchy in the sense that if \(t_2(n)\) grows sufficiently faster than \(t_1(n)\), then \(\textbf{TIME}(t_2(n))\) is a proper superset of \(\textbf{TIME}(t_1(n))\) (and similarly for \(\textbf{NTIME}(t(n))\) and \(\textbf{SPACE}(s(n))\)).

-

If

\[\lim_{n \rightarrow \infty} \frac{t_1(n) \log(t_1(n))}{t_2(n)} = 0, \]then \(\textbf{TIME}(t_1(n)) \subsetneq \textbf{TIME}(t_2(n))\). (Hartmanis and Stearns 1965)

-

If

\[\lim_{n \rightarrow \infty} \frac{t_1(n+1)}{t_2(n)} = 0, \]then \(\textbf{NTIME}(t_1(n)) \subsetneq \textbf{NTIME}(t_2(n))\). (Cook 1972)

-

If

\[\lim_{n \rightarrow \infty} \frac{s_1(n)}{s_2(n)} = 0, \]then \(\textbf{SPACE}(s_1(n)) \subsetneq \textbf{SPACE}(s_2(n))\). (Stearns, Hartmanis, and Lewis 1965)

These results may all be demonstrated by modifications of the diagonal argument by which Turing (1937) originally demonstrated the undecidability of the classical Halting Problem.[13] Nonetheless, Theorem 3.1 already has a number of interesting consequences about the relationships between the complexity classes introduced above. For instance, since the functions \(n^{k}\) and \(n^{k+1}\) satisfy the hypotheses of parts i), we can see that \(\textbf{TIME}(n^k)\) is always a proper subset of \(\textbf{TIME}(n^{k+1})\) . It also follows from part i) that \(\textbf{P} \subsetneq \textbf{TIME}(f(n))\) for any time bound \(f(n)\) which is of super-polynomial order of growth – e.g. \(2^{n^{.0001}}\) or \(2^{\log(n)^2}\). Similarly, parts i) and ii) respectively implies that \(\textbf{P} \subsetneq \textbf{EXP}\) and \(\textbf{NP} \subsetneq \textbf{NEXP}\). And it similarly follows from part iii) that \(\textbf{L} \subsetneq \textbf{PSPACE}\).

Note that since every deterministic Turing machine is, by definition, a non-deterministic machine, we clearly have \(\textbf{P} \subseteq \textbf{NP}\) and \(\textbf{PSPACE} \subseteq \textbf{NPSPACE}\). Some additional information about the relationship between time and space complexity is reported by the following classical results:

Theorem 3.2 Suppose that \(f(n)\) is both time and space constructible. Then

- \(\textbf{NTIME}(f(n)) \subseteq \textbf{SPACE}(f(n))\)

- \(\textbf{NSPACE}(f(n)) \subseteq \textbf{TIME}(2^{O(f(n))})\)

The first of these results recapitulates the prior observation that a non-deterministic machine \(N\) with running time \(f(n)\) may be simulated by a deterministic machine \(T_N\) in time exponential in \(f(n)\). In order to obtain Theorem 3.2.i), note this process can be carried out in space bounded by \(f(n)\) provided that we make sure to erase the cells which have been used by a prior simulation before starting a new one.[14]

Another classical result of Savitch (1970) relates \(\textbf{SPACE}(f(n))\) and \(\textbf{NSPACE}(f(n))\):

Theorem 3.3

For any space constructible function \(s(n)\), \(\textbf{NSPACE}(s(n)) \subseteq \textbf{SPACE}((s(n))^2)\).

A corollary is that \(\textbf{PSPACE} = \textbf{NPSPACE}\), suggesting that non-determinism is computationally less powerful with respect to space than it appears to be with respect to time.

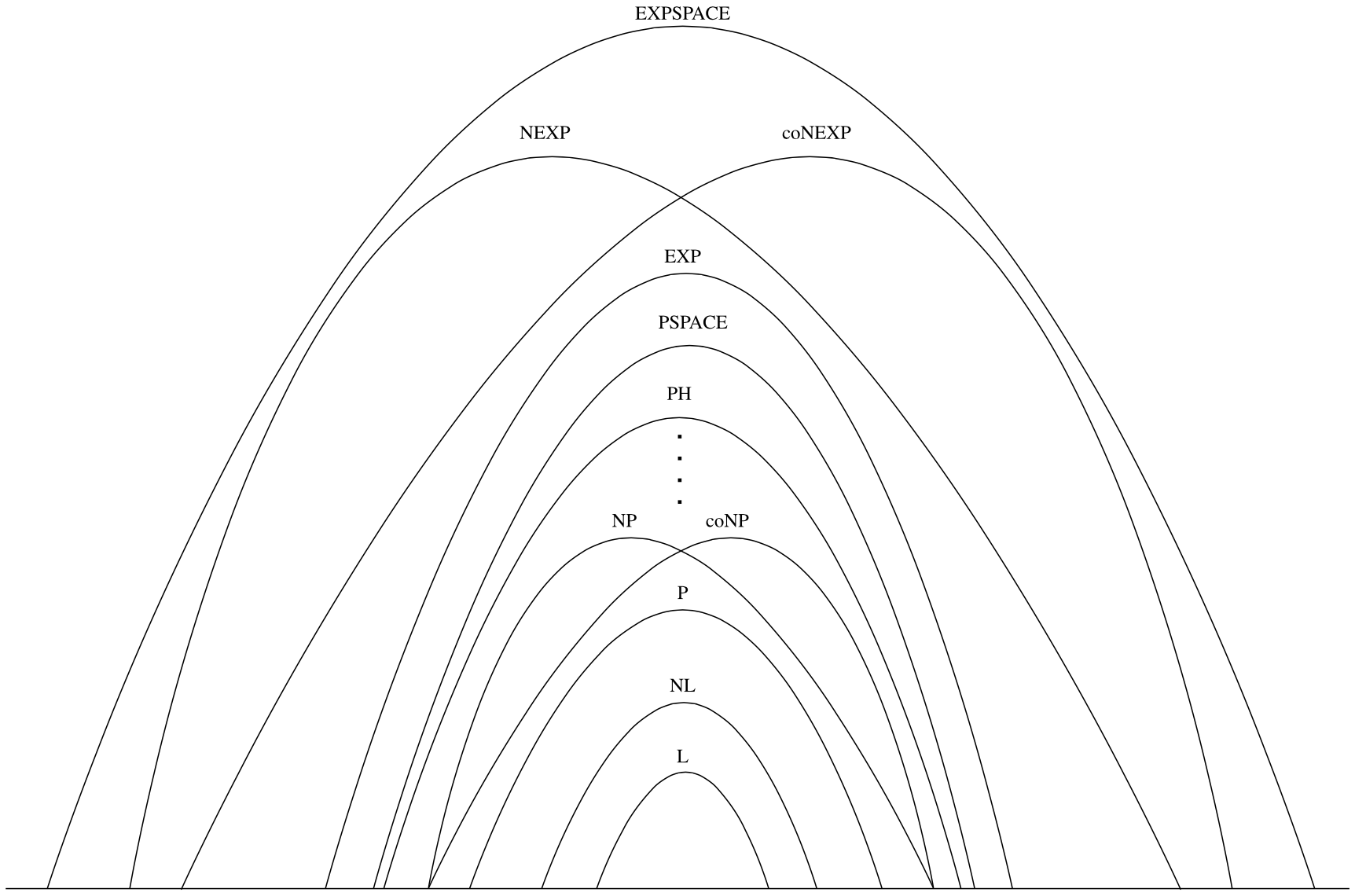

The foregoing results establish the following basic relationships among complexity classes which are also depicted in Figure 2:

\[ \textbf{L} \subseteq \textbf{NL} \subseteq \textbf{P} \subseteq \textbf{NP} \subseteq \textbf{PSPACE} \subseteq \textbf{EXP} \subseteq \textbf{NEXP} \subseteq \textbf{EXPSPACE} \]As we have seen, it is a consequence of Theorem 3.1 that \(\textbf{L} \subsetneq \textbf{PSPACE}\) and \(\textbf{P} \subsetneq \textbf{EXP}\). It thus follows that at least one of the first four displayed inclusions must be proper and also at least one of the third, fourth, or fifth.

Figure 2. Inclusion relationships among major complexity classes. The only depicted inclusions which are currently known to be proper are \(\textbf{L} \subsetneq \textbf{PSPACE}\) and \(\textbf{P} \subsetneq \textbf{EXP}\).

At the moment, however, this is all that is currently known – i.e. although various heuristic arguments can be cited in favor of the properness of the other displayed inclusions, none of them has been proven to hold. Providing unconditional proofs of these claims remains a major unfulfilled goal of complexity theory. For instance, the following is often described as the single most important open question in all of theoretical computer science:

Open Question 1 Is \(\textbf{P}\) properly contained in \(\textbf{NP}\)?

The significance of Open Question 1 as well as several additional unresolved questions about the inclusion relations among other complexity classes will be considered at greater length in Section 4.1.

3.3 Reductions and \(\textbf{NP}\)-completeness

Having now introduced some of the major classes studied in complexity theory, we next turn to the question of their internal structure. This can be studied using the notions of the reducibility of one problem to another and of a problem being complete for a class. Informally speaking, a problem \(X\) is said to be reducible to another problem \(Y\) just in case a method for solving \(Y\) would also yield a method for solving \(X\). The reducibility of \(X\) to \(Y\) may thus be understood as showing that solving \(Y\) is at least as difficult as solving \(X\). A problem \(X\) is said to be complete for a complexity class \(\textbf{C}\) just in case \(X\) is a member of \(\textbf{C}\) and all problems in \(\textbf{C}\) are reducible to \(X\). The completeness of \(X\) for \(\textbf{C}\) may thus be understood as demonstrating that \(X\) is representative of the most difficult problems in \(\textbf{C}\).

The concepts of reduction and completeness were originally introduced in computability theory. Therein a number of different definitions of reduction are studied, of which many-one and Turing reducibility are the most familiar (see, e.g., Rogers 1987). Analogues of both of these have been studied in complexity theory under the names polynomial time many-one reducibility – also known as Karp reducibility (Karp 1972) – and polynomial time Turing reducibility – also known as Cook reducibility (Cook 1971). For simplicity we will consider only the former here.[15]

Definition 3.1 For languages \(X, Y \subseteq \{0,1\}^*\), \(X\) is said to be polynomial time many-one reducible to \(Y\) just in case there exists a polynomial time computable function \(f(x)\) such that

\[ \text{for all } x \in \{0,1\}^*, x \in X \text{ if and only if } f(x) \in Y \]In this case we write \(X \leq_P Y\) and say that \(f(x)\) is a polynomial time reduction of \(X\) to \(Y\).

Note that if \(X\) is polynomial time reducible to \(Y\) via \(f(x)\), then an efficient algorithm \(A\) for deciding membership in \(Y\) would also yield an efficient algorithm for deciding membership in \(X\) as follows: (i) on input \(x\), compute \(f(x)\); (ii) use \(A\) to decide if \(f(x) \in Y\), accepting if so, and rejecting if not.

It is easy to see that \(\leq_P\) is a reflexive relation. Since the composition of two polynomial time computable functions is also polynomial time computable, \(\leq_P\) is also transitive. We additionally say that a class \(\textbf{C}\) is closed under \(\leq_P\) if \(Y \in \textbf{C}\) and \(X \leq_P Y\) implies \(X \in \textbf{C}\). It is also easy to see that the classes \(\textbf{P}, \textbf{NP}, \textbf{PSPACE},\textbf{EXP}, \textbf{NEXP}\) and \(\textbf{EXPSPACE}\) are closed under this relation.[16] A problem \(Y\) is said to be hard for a class \(\textbf{C}\) if \(X \leq_P Y\) for all \(X \in \textbf{C}\). Finally \(Y\) is said to be complete for \(\textbf{C}\) if it is both hard for \(\textbf{C}\) and also a member of \(\textbf{C}\).

Since the mid-1970s a major focus of research in complexity theory has been the study of problems which are complete for the class \(\textbf{NP}\) – i.e. so-called NP-complete problems. A canonical example of such a problem is a time-bounded variant of the Halting Problem for \(\mathfrak{N}\) (whose unbounded deterministic version is also the canonical Turing- and many-one complete problem in computability theory):

\(\sc{BHP}\ \) Given the index of a non-deterministic Turing machine \(N \in \mathfrak{N}\), an input \(x\), and a time bound \(t\) represented as a string \(1^t\), does \(N\) accept \(x\) in \(t\) steps?

It is evident that \(\sc{BHP}\) is in \(\textbf{NP}\) since on input \(\langle \ulcorner N \urcorner, x,1^t \rangle\) an efficient universal non-deterministic machine can determine if \(N\) accepts \(x\) in time polynomial in \(\lvert x\rvert\) and \(t\). To see that \(\sc{BHP}\) is hard for \(\textbf{NP}\), observe that if \(Y \in \textbf{NP}\), then \(Y\) corresponds to the set of strings accepted by some non-deterministic machine \(N \in \mathfrak{N}\) with polynomial running time \(p(n)\). If we now define \(f(x) = \langle \ulcorner N \urcorner,x,1^{p(\lvert x\rvert)} \rangle\), then it is easy to see that \(f(x)\) is a polynomial time reduction of \(Y\) to \(\sc{BHP}\).

Since \(\sc{BHP}\) is \(\textbf{NP}\)-complete, it follows from the closure of \(\textbf{NP}\) under \(\leq_P\) that this problem is in \(\textbf{P}\) only if \(\textbf{P} = \textbf{NP}\). Since this is widely thought not to be the case, this provides some evidence that \(\sc{BHP}\) is an intrinsically difficult computational problem. But since \(\sc{BHP}\) is closely related to the model of computation \(\mathfrak{N}\) itself this may appear to be of little practical significance. It is thus of considerably more interest that a wide range of seemingly unrelated problems originating in many different areas of mathematics are also \(\textbf{NP}\)-complete.

One means of demonstrating that a given problem \(X\) is \(\textbf{NP}\)-hard is to show that \(\sc{BHP}\) may be reduced to it. But since most mathematically natural problems bear no relationship to Turing machines, it is by no means obvious that such reductions exist. This problem was circumvented at the beginning of the study of \(\textbf{NP}\)-completeness by Cook (1971) and Levin (1973) who independently demonstrated the following:[17]

Theorem 3.4 \(\sc{SAT}\) is \(\textbf{NP}\)-complete.

We have already seen that \(\sc{SAT}\) is in \(\textbf{NP}\). In order to demonstrate Theorem 3.4 it thus suffices to show that all problems \(X \in \textbf{NP}\) are polynomial time reducible to \(\sc{SAT}\). Supposing that \(X \in \textbf{NP}\) there must again be a non-deterministic Turing machine \(N = \langle Q,\Sigma,\Delta,s \rangle\) accepting \(X\) with polynomial time complexity \(p(n)\). The proof of Theorem 3.4 then proceeds by showing that for all inputs \(x\) of length \(n\) for \(N\), we can construct a propositional formula \(\phi_{N,x}\) which is satisfiable if and only if \(N\) accepts \(x\) within \(p(n)\) steps.[18]

Although \(\sc{SAT}\) is still a problem about a particular system of logic, it is of a more combinatorial nature than \(\sc{BHP}\). In light of this, Theorem 3.4 opened the door to showing a great many other problems to be \(\textbf{NP}\)-complete by showing that \(\sc{SAT}\) may be efficiently reduced to them. For instance, the problems \(\sc{TSP}\) and \(\sc{INTEGER}\ \sc{PROGRAMMING}\ \) introduced above are both \(\textbf{NP}\)-complete. Here are some other examples of \(\textbf{NP}\)-complete problems:

\(3\text{-}\sc{SAT}\ \) Given a propositional formula \(\phi\) in 3-conjunctive normal form (\(3\text{-}\sc{CNF}\)) – i.e. \(\phi \) is the conjunction of disjunctive clauses containing exactly three negated or unnegated propositional variables – does there exist a satisfying assignment for \(\phi\)?

\(\sc{HAMILTONIAN}\ \sc{PATH}\ \) Given a finite graph \(G = \langle V,E \rangle\), does \(G\) contain a Hamiltonian Path (i.e. a path which visits each vertex exactly once)?

\(\sc{INDEPENDENT}\ \sc{SET}\ \) Given a graph \(G = \langle V,E \rangle\) and a natural number \(k \leq \lvert V\rvert\), does there exist a set of vertices \(V' \subseteq V\) of cardinality \(\geq k\) such that no two vertices in \(V'\) are connected by an edge?

\(\sc{VERTEX}\ \sc{COVER}\ \) Given a graph \(G = \langle V,E \rangle\) and a natural number \(k \leq \lvert V\rvert\), does there exist a set of vertices \(V' \subseteq V\) of cardinality \(\leq k\) such that for each edge \(\langle u,v \rangle \in E\), at least one of \(u\) or \(v\) is a member of \(V'\)?

\(\sc{SET}\ \sc{COVERING}\ \) Given a finite set \(U\), a finite family \(\mathcal{S}\) of subsets of \(U\) and a natural number \(k\), does there exist a subfamily \(\mathcal{S}' \subseteq \mathcal{S}\) of cardinality \(\leq k\) such that \(\bigcup \mathcal{S}' = U\)?

The problem \(3\text{-}\sc{SAT}\) was shown to be \(\textbf{NP}\)-complete in Cook’s original paper (Cook 1971).[19] The other examples just cited are taken from a list of 21 problems (most of which had previously been identified in other contexts) which were shown by Karp (1972) to be \(\textbf{NP}\)-complete. The reductions required to show the completeness of these problems typically require the construction of what has come to be known as a gadget – i.e. a constituent of an instance of one problem which can be used to simulate a constituent of an instance of a different problem. For instance, in order to see how \(3\text{-}\sc{SAT}\) may be reduced to \(\sc{INDEPENDENT}\ \sc{SET}\), first observe that a \(3\text{-}\sc{CNF}\) formula has the form

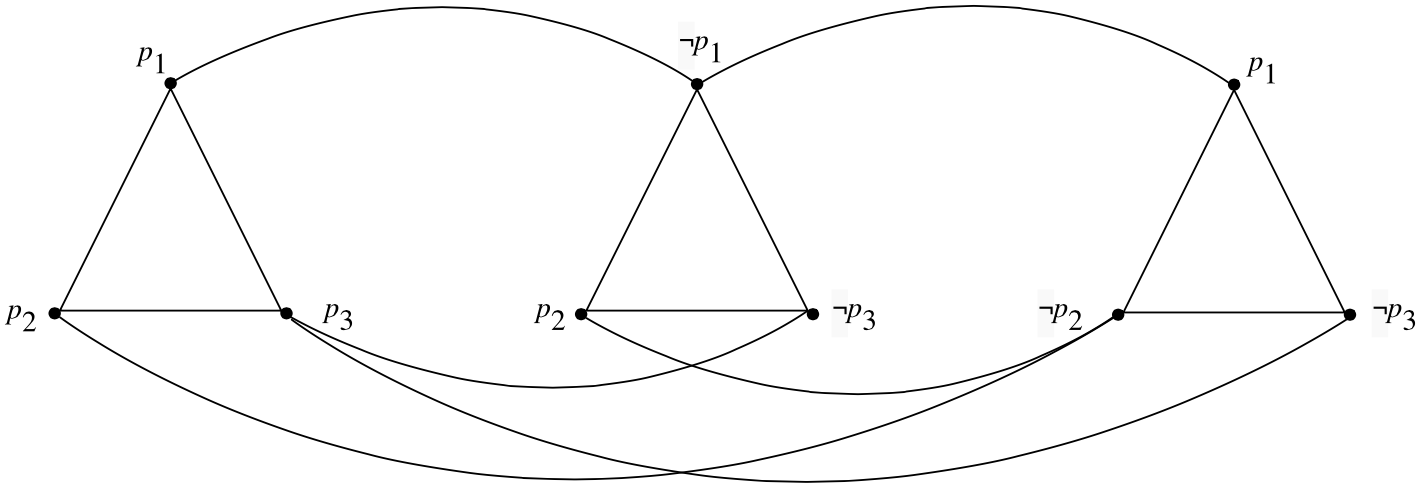

\[ (\ell^1_1 \ \vee \ \ell^1_2 \ \vee \ \ell^2_3) \ \wedge \ (\ell^2_1 \ \vee \ \ell^2_2 \ \vee \ \ell^2_3) \ \wedge \ \ldots \ \wedge \ (\ell^n_1 \ \vee \ \ell^n_2 \ \vee \ \ell^n_3) \]where each \(\ell^i_j\) is a literal – i.e. \(\ell^i_j = p_k\) or \(\ell^i_j = \neg p_k\) for some propositional variable \(p_k\). A formula \(\phi\) of this form is satisfiable just in case there exists a valuation satisfying at least one of the literals \(\ell^i_1, \ell^i_2\) or \(\ell^i_3\) for all \(1 \leq i \leq n\). On the other hand, suppose that we consider the family of graphs \(G\) which can be partitioned into \(n \) disjoint triangles in the manner depicted in Figure 3. It is easy to see that any independent set of size \(n\) in such a graph must contain exactly one vertex from each triangle in \(G\). This in turn suggests the idea of using a graph of this form as a gadget for representing the clauses of a \(3\text{-}\sc{CNF}\) formula.

Figure 3. The graph \(G_{\phi}\) for the formula \((p_1 \vee p_2 \vee p_3) \wedge (\neg p_1 \vee p_2 \vee \neg p_3) \wedge (p_1 \vee \neg p_2 \vee \neg p_3)\).

A reduction of \(3\text{-}\sc{SAT}\) to \(\sc{INDEPENDENT}\ \sc{SET}\) can now be described as follows:

-

Let \(\phi\) be a \(3\text{-}\sc{CNF}\) formula consisting of \(n\) clauses as depicted above.

-

We construct a graph \(G_{\phi} = \langle V,E \rangle\) consisting of \(n\)-triangles \(T_1,\ldots,T_n\) such that the nodes of \(T_i\) are respectively labeled with the literals \(\ell^i_1, \ell^i_2,\ell^i_3\) comprising the \(i\)th clause of \(\phi\). Additionally, \(G\) contains an edge connecting nodes in each triangle corresponding to literals of opposite sign as depicted in Figure 3.[20]

Now define a mapping from instances of \(3\text{-}\sc{SAT}\) to instances of \(\sc{INDEPENDENT}\ \sc{SET}\) as \(f(\phi) = \langle G_{\phi},n \rangle\). As \(G_\phi\) contains \(3n\) vertices (and hence at most \(O(n^2)\) edges), it is evident that \(f(x)\) can be computed in polynomial time. To see that \(f(\phi)\) is a reduction of \(3\text{-}\sc{SAT}\) to \(\sc{INDEPENDENT}\ \sc{SET}\), first suppose that \(v\) is a valuation such that \(\llbracket \phi \rrbracket_v = 1\). Then we must have \(\llbracket \ell^i_j \rrbracket_v = 1\) for at least one literal in every clause of \(\phi\). Picking the nodes corresponding to this literal in each triangle \(T_i\) in \(\phi\) thus yields an independent set of size \(n\) in \(G_{\phi}\). Conversely, suppose that \(V' \subseteq V\) is an independent set of size \(n\) in \(G_{\phi}\). By construction, \(V'\) contains exactly one vertex in each of the \(T_i\). And since there is an edge between each pair of nodes labeled with oppositely signed literals in different triangles in \(G_{\phi}\), \(V'\) cannot contain any contradictory literals. A satisfying valuation \(v\) for \(\phi\) can be constructed by setting \(v(p_i) = 1\) if a node labeled with \(p_i\) appears in \(V'\) and \(v(p_i) = 0\) otherwise.

Since \(\leq_P\) is transitive, composing polynomial time reductions together provides another means of showing that various problems are \(\textbf{NP}\)-complete. For instance, the completeness of \(\sc{TSP}\) was originally demonstrated by Karp (1972) via the series of reductions

\[ \sc{SAT} \leq_P 3\text{-}\sc{SAT} \leq_P \sc{INDEPENDENT}\ \sc{SET} \leq_P \sc{VERTEX}\ \sc{COVER} \leq_P \sc{HAMILTONIAN}\ \sc{PATH} \leq_P \sc{TSP}. \]Thus although the problems listed above are seemingly unrelated in the sense that they concern different kinds of mathematical objects – e.g. logical formulas, graphs, systems of linear equations, etc. – the fact that they are \(\textbf{NP}\)-complete can be taken to demonstrate that they are all computationally universal for \(\textbf{NP}\) in the same manner as \(\sc{BHP}\).[21]

It also follows from the transitivity of \(\leq_P\) that the existence of a polynomial time algorithm for even one \(\textbf{NP}\)-complete problem would entail the existence of polynomial time algorithms for all problems in \(\textbf{NP}\). The existence of such an algorithm would thus run strongly counter to expectation in virtue of the extensive effort which has been devoted to finding efficient solutions for particular \(\textbf{NP}\)-complete problems such as \(\sc{INTEGER}\ \sc{PROGRAMMING}\) or \(\sc{TSP}\). Such problems are thus standardly regarded as constituting the most difficult problems in \(\textbf{NP}\).