Experimental Moral Philosophy

Experimental moral philosophy emerged as a methodology in the last decade of the twentieth century, as a branch of the larger experimental philosophy (X-Phi) approach. Experimental moral philosophy is the empirical study of moral intuitions, judgments, and behaviors. Like other forms of experimental philosophy, it involves gathering data using experimental methods and using these data to substantiate, undermine, or revise philosophical theories. In this case, the theories in question concern the nature of moral reasoning and judgment; the extent and sources of moral obligations; the nature of a good person and a good life; even the scope and nature of moral theory itself. This entry begins with a brief look at the historical uses of empirical data in moral theory and goes on to ask what, if anything, is distinctive about experimental moral philosophy: how should we distinguish it from related work in empirical moral psychology? After discussing some strategies for answering this question, the entry examines two of the main projects within experimental moral philosophy, and then discusses some of the most prominent areas of research within the field. As we will see, in some cases experimental moral philosophy has opened up new avenues of investigation, while in other cases it has influenced longstanding debates within moral theory.

- 1. Introduction and History

- 2. Moral Intuitions and Conceptual Analysis

- 3. Character, Wellbeing, and Emotion

- 4. Metaethics and Experimental Moral Philosophy

- 5. Criticisms of Experimental Moral Philosophy

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. Introduction and History

The idea that our actual moral judgments are an important source of information about the origins and justification of moral norms goes back to ancient Greece, if not further. Herodotus recounts a story in which the Persian emperor Darius invited Greek members of his court “and asked them what price would persuade them to eat the dead bodies of their fathers. They answered that there was no price for which they would do it.” Darius then summoned members of a different group, “and asked them… what would make them willing to burn their fathers at death. The Indians cried aloud, that he should not speak of so horrid an act.” Herodotus concludes that stories like these prove that, as the poet Pindar writes, “custom is king of all,” thereby providing an instance of the argument from moral disagreement for relativism. Likewise, in the Outlines of Skepticism, Sextus Empiricus stresses that empirical discoveries can destabilize our confidence in universal moral agreement:

even if in some cases we cannot see an anomaly, we should say that possibly there is dispute about these matters too… Just as, if we had not, for example, known about the Egyptian custom of marrying their sisters, we should have affirmed, wrongly, that it is agreed by all that you must not marry your sister. (Sextus Empiricus, Outlines of Skepticism)

While the use of empirical observation in moral theory has a long history, the contemporary movement known as experimental philosophy goes back only a few decades. The current experimental philosophy movement owes its beginnings to the work of Stephen Stich and Jonathan Weinberg (2001) and Joshua Knobe (2003), but the earliest instance of experimental philosophy may be Truth as Conceived by Those Who Are Not Professional Philosophers (Naess 1938), which surveyed ordinary speakers for their intuitions about the nature of truth. Contemporary philosophers have not been uniformly accepting of the movement, but as we will see, there are reasons to think that experimental evidence might have a distinctive role, significance, and importance in moral philosophy and theorizing.

The relationship between more traditional philosophy and experimental work is instructive and brings out some tensions within moral philosophy and theory: namely, morality is at once practical and normative, and these two aspects inform and constrain the extent to which it is accountable to human psychology.

Insofar as morality is practical, it should be accessible to and attainable by agents like us: if a theory is too demanding, or relies on intuitions, judgments, motivations, or capacities that people do not (or, worse, cannot) possess, we might on those grounds dismiss it. On the other hand, morality is also normative: it aims not just to describe what we actually do or think, but to guide our practice. For this reason, some philosophers have responded to experimental results claiming to show that attaining and reliably expressing virtues in a wide variety of situations is difficult (see section 3.1 below for a discussion of this literature) by pointing out that the fact that people do not always make the right judgment, or perform actions for the right reasons, does not falsify a theory—it simply shows that people often act in ways that are morally deficient. We will return to these issues when we discuss criticisms of experimental moral philosophy at the end of this entry; for now, we mention them to illustrate that the extent to which experimental moral philosophy challenges traditional philosophical approaches is itself a controversial issue. Some moral philosophers see themselves as deriving moral principles a priori, without appeal to contingent facts about human psychology. Others see themselves as working within a tradition, going back at least as far as Aristotle, that conceives of ethics as the study of human flourishing. These philosophers have not necessarily embraced experimental moral philosophy, but many practitioners envision their projects as outgrowths of the naturalistic moral theories developed by Aristotle, Hume, and others.

1.1 What Are Experiments?

As the examples discussed above reveal, a variety of types of empirical evidence are useful to moral theorizing (see also the entries on empirical moral psychology, empirical distributive justice, and empirical psychology and character). Anthropological observation and data have long played a role in moral philosophy. The twentieth-century moral philosophers John Ladd and Richard Brandt investigated moral relativism in part by conducting their own ethnographies in Native American communities. Brandt writes, “We have… a question affecting the truth of ethical relativism which, conceivably, anthropology can help us settle. Does ethnological evidence support the view that “qualified persons” can disagree in ethical attitude?” But, he notes, “some kinds of anthropological material will not help us—in particular, bare information about intercultural differences in ethical opinion or attitude.” (1952: 238). This is a caveat frequently cited by philosophers engaged in empirical research: it is important to have philosophers participate in experimental design and the gathering of empirical data, because there are certain questions that must be addressed for the data to have philosophical applications—in this case, whether moral disagreements involve different factual beliefs or other non-moral differences. Barry Hallen (1986, 2000) conducted a series of interviews and ethnographies among the Yoruba, investigating central evaluative concepts and language relating to epistemology, aesthetics, and moral value. Hallen was motivated by questions about the indeterminacy of translation, but his work provides an example of how in-depth interviews can inform investigations of philosophical concepts.

These examples show that ethnography has a valuable role to play in philosophical theory, but the remainder of this entry will focus primarily on experiments. Paradigmatic experiments involve randomized assignment to varying conditions of objects or people from a representative sample, followed by statistical comparison of the outcomes for each condition. The variation in conditions is sometimes called a manipulation. For example, an experimenter might try to select a representative sample of people, randomly assign them either to find or not to find a dime in the coin return of a pay phone, and then measure the degree to which they subsequently help someone they take to be in need (Isen and Levin 1972). Finding or not finding the dime is the condition; degree of helpfulness is the outcome variable.
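The design just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure of Isen and Levin (1972): the participant labels, the helping scores, and the function names `assign_conditions` and `permutation_test` are all hypothetical, and the scores are invented purely to show the mechanics of random assignment followed by a statistical comparison of outcomes.

```python
import random
from statistics import mean


def assign_conditions(participants, rng):
    """Randomly split participants into two equally sized conditions."""
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def permutation_test(group_a, group_b, rng, n_permutations=5000):
    """Two-sided permutation test on the difference in group means.

    Returns the observed difference and the share of random relabelings
    whose absolute difference is at least as large as the observed one.
    """
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        # Small tolerance guards against floating-point ties.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    return observed, extreme / n_permutations


rng = random.Random(0)  # fixed seed so the sketch is reproducible

# Hypothetical participant labels; a real study would draw a representative sample.
participants = [f"P{i:02d}" for i in range(1, 21)]
dime, no_dime = assign_conditions(participants, rng)

# Invented helping scores (0 = no help, 10 = maximal help), NOT real data.
# Each list is assumed to follow the order of the corresponding group above.
helping_dime = [7, 8, 6, 9, 7, 8, 5, 9, 8, 7]
helping_no_dime = [3, 4, 2, 5, 4, 3, 6, 2, 4, 3]

observed_diff, p_value = permutation_test(helping_dime, helping_no_dime, rng)
print(f"difference in mean helping: {observed_diff:.2f}, p = {p_value:.4f}")
```

A permutation test is used here only because it needs no distributional assumptions and no external libraries; a published study might equally report a t-test or a chi-squared test, depending on how the outcome is measured.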

While true experiments follow this procedure, other types of studies allow non-random assignment to conditions. Astronomers, for example, sometimes speak of natural experiments. Although we are in no position to affect the motions of heavenly bodies, we can observe them under a variety of conditions, over long periods of time, and with a variety of instruments. Likewise, anthropological investigation of different cultures’ moral beliefs and practices is unlikely to involve manipulating variables in the lives of the members of the culture, but such studies are valuable and empirically respectable. Still, such studies can at best demonstrate correlation, not causation, so their evidential value is less than that of experiments; most published research in experimental philosophy involves true experiments.

Even within the category of experiments, we find a lot of diversity with respect to inputs, methods of measurement, and outputs. The experiment just described uses a behavioral measure and manipulation: finding the dime is the input, helping is the outcome measured. Other experiments measure not behavior but judgment or intuition, and this can be done using a survey or other form of self-report or informant-report where subjects respond explicitly to some question, situation, or dilemma. Studies measuring judgments might use either manipulations of the condition in which the subject makes the judgment, or they might look for correlations between judgments and some other factor, such as brain activity, emotional response, reaction time, visual attention, and so on (Strohminger et al. 2014).

The experimental methods available have also changed over time. Surveys have been the dominant method of experimental philosophy for the past few decades, but technology may change this: advances in virtual and augmented reality mean that philosophers can now immerse people in moral dilemmas such as Thomson’s (1971) violinist thought experiment and different versions of the trolley problem. Philosophers interested in the neural correlates of moral judgment can use transcranial magnetic stimulation (TMS) to investigate the effects of enhancing or lessening activity in certain areas of the brain. Even survey methods have seen advances thanks to technology; the ubiquity of smartphones allows researchers to ping people in real time, asking for reports on mood (see section 3.2 below for a discussion of surveys relating to happiness and mood).

Whether experimental moral philosophy has to use true experiments or can include studies and even ethnographies and other forms of qualitative data is partly a terminological question about how to define the field and whether we distinguish it from empirical moral psychology, a closely related research program. As we will see below, though, a diversity of both methods and subjects is important in helping experimental moral philosophy respond to its critics.

1.2 What Types of Questions and Data?

Like experimental philosophy more generally, experimental moral philosophy is interested in our intuitions and judgments about philosophical thought experiments and moral dilemmas. But research in this area also concerns the cognitive architecture underlying our moral judgments; the developmental processes that give rise to moral judgments; the neuroscience of moral judgment; and other related fields.

Direct experiments investigate whether a claim held (or denied) by philosophers is corroborated or falsified. This might mean investigating an intuition and whether it is as widely shared as philosophers claim, or it might mean investigating the claim that a certain behavior or trait is widespread or that two factors covary. For example, we find philosophers claiming that it is wrong to imprison an innocent person to prevent rioting; that a good life must be authentic; and that moral judgments are intrinsically motivating. Experimental research can be (and has been, as we will see below) brought to bear on each of these claims.

Indirect experiments look at the nature of some capacity or judgment: for example, whether certain types of moral dilemmas engage particular areas of the brain; how early children develop a capacity to empathize; and whether the moral/conventional distinction is universal across cultures. These claims have philosophical relevance insofar as they help us understand the nature of moral judgment.

In addition to distinctions involving the type of data and how directly it bears on the question, we can also distinguish among experimental applied ethics, experimental normative ethics, and experimental metaethics. The first involves the variables that influence judgments about particular practical problems, such as how a self-driving car should respond to sacrificial dilemmas (Bonnefon, Shariff, and Rahwan 2016). The second involves investigations of how we ought to behave, act, and judge, as well as our intuitions about moral responsibility, character, and what constitutes a good life. The third involves debates over moral realism and antirealism. In this entry we focus on the latter two.

In many of these cases, the line between experimental moral philosophy and its neighbors is difficult to draw: what distinguishes experimental moral philosophy from empirical moral psychology? What is the difference between experimental moral philosophy and psychology or neuroscience that investigates morality? What is the difference between experimental moral philosophy and metaethics? We might try to answer these questions by pointing to the training of the experimenters—as philosophers or as scientists—but much of the work in these areas is done by collaborative teams involving both philosophers and social scientists or neuroscientists. In addition, some philosophers work in law and psychology departments, and graduate programs increasingly offer cross-disciplinary training. Another approach would be to look at the literature with which the work engages: many psychologists (e.g., Haidt, Greene) investigating moral judgment situate their arguments within the debate between Kantians and Humeans, so engagement with the philosophical tradition might be used as a criterion to distinguish experimental moral philosophy from experimental moral psychology. All this said, an inability to sharply distinguish experimental moral philosophy from adjoining areas of investigation may not be particularly important. Experimental philosophers often point out that the disciplinary divide between philosophy and psychology is a relatively recent phenomenon; early twentieth-century writers such as William James situated themselves in both disciplines, making vital contributions to each. If there is a problem here, it is not unique to experimental moral philosophy. For example, is work on semantics that uses both linguistics and analytic philosophy best understood as linguistics or philosophy of language? These debates arise, and may be largely moot, for many research programs that cut across disciplinary boundaries.

The rest of this entry proceeds as follows. Section 2 canvasses experimental research on moral judgments and intuitions, describing various programmatic uses to which experimental results have been put, then turning to examples of experimental research on moral judgment and intuition, including intuitions about intentionality and responsibility, as well as the so-called linguistic analogy. Section 3 discusses experimental results on thick (i.e., simultaneously descriptive and normative) topics, including character and virtue, wellbeing, and emotion and affect. Section 4 discusses questions about moral disagreement and moral language, both important sources of evidence in the longstanding debate over moral objectivity. Section 5 considers some objections to experimental moral philosophy.

2. Moral Intuitions and Conceptual Analysis

One role for experiments in moral philosophy, as indicated above, is to investigate our moral intuitions about cases, and to use the results gleaned from such investigations to guide or constrain our moral metaphysics, semantics, or epistemology. Philosophers often rely on such judgments—in the form of claims to the effect that “we would judge x” or, “intuitively, x”—as data or evidence for a theory (though see Cappelen 2012 and Deutsch 2016 for critical discussion of this point). Claims about what we would judge or about the intuitive response to a case are empirically testable, and one project in experimental moral philosophy (perhaps the dominant original project) has been to test such claims via an investigation of our intuitions and related moral judgments. In doing so, experimental moral philosophers can accomplish one of two things: first, they can test the claims of traditional philosophers about what is or is not intuitive; second, they can investigate the sources of our intuitions and judgments. These tasks can be undertaken as part of a positive program, which uses our intuitions and judgments as inputs and constructs theories that accommodate and explain them. Alternatively, these tasks can figure in a negative program, which uses experimental research to undermine traditional appeals to intuition as evidence in moral philosophy and conceptual analysis more broadly. The negative program can proceed directly or indirectly: either via testing and refuting claims about intuitions themselves, or by discrediting the sources of those intuitions by discovering that they are influenced by factors widely regarded as not evidential or unreliable. We discuss both the negative program and the positive program below.

2.1 The Negative Program in Experimental Moral Philosophy

Early work in experimental philosophy suggested cross-cultural differences in semantic, epistemic, and moral intuitions. For example, Machery, Mallon, and Stich (2004) argued that East Asian subjects were more likely to hold descriptivist intuitions than their Western counterparts, who tended to embrace causal theories of reference. Haidt (2006) argued that the extent to which people judged harmless violations such as eating a deceased pet, or engaging in consensual sibling incest, to be wrong depended on socioeconomic status. Since, presumably, moral wrongness doesn’t itself depend on socioeconomic status, gender, or culture of the person making the moral judgment, these results have been marshaled to argue either against the evidential value of intuitions or against the existence of moral facts altogether.

Negative experimental moral philosophy generates results that are then used to discount the evidential value of appeals to intuition. For example, Walter Sinnott-Armstrong (2008d), Eric Schwitzgebel and Fiery Cushman (2012), and Peter Singer (2005) have recently followed this train of thought, arguing that moral intuitions are subject to normatively irrelevant situational influences (e.g., order effects), while Feltz & Cokely (2009) and Knobe (2011) have documented correlations between moral intuitions and (presumably) normatively irrelevant individual differences (e.g., extroversion). Such results might warrant skepticism about moral intuitions, or at least about some classes of intuitions or intuiters.[1]

The studies just mentioned involve results that suggest that the content of intuitions varies along some normatively irrelevant dimension. Another source of evidence for the negative program draws on results regarding the cognitive mechanisms underlying or generating intuitions themselves. For example, studies that suggest that deontological intuitions are driven by emotional aversions to harming others have been used to argue that we ought to discount our deontological intuitions in favor of consequentialist principles, an idea discussed in more detail below (see Singer 2005, Wiegman 2017).

The distinction between negative and positive experimental moral philosophy is difficult to draw, partly because the negative program often discounts particular classes or types of intuitions in favor of others that are supposed to be more reliable. For example, Singer offers an anti-deontological argument as part of the negative program insofar as his argument uses the emotional origins of deontological intuitions to discount them. But because he then argues for the superiority of consequentialist intuitions, his position also fits within the positive program. So the difference between the two programs is not in the data or the kinds of questions investigated, but in how the data are put to use—whether they are seen as debunking traditional philosophical appeals to intuition or as an addition to traditional philosophical appeals to intuition, by helping philosophers distinguish between reliable and unreliable intuitions and their sources. In the next section, we look at positive uses of intuitions as evidence.

2.2 The Positive Program in Experimental Moral Philosophy

Other philosophers are more sanguine about the upshot of experimental investigations of moral judgment and intuition. Knobe, for example, uses experimental investigations of the determinants of moral judgments to identify the contours of philosophically resonant concepts and the mechanisms or processes that underlie moral judgment. He has argued for the pervasive influence of moral considerations throughout folk psychological concepts (2009, 2010; see also Pettit & Knobe 2009), claiming, among other things, that the concept of an intentional action is sensitive to the foreseeable evaluative valence of the consequences of that action (2003, 2004b, 2006).[2] In a recent paper, Knobe (2016) shows that only ten percent of experimental philosophy aims to support or undercut a proposed conceptual analysis; instead, most published papers aim to shed light on the cognitive and affective processes underlying the relevant phenomenon.

Another line of research is due to Joshua Greene and his colleagues (Greene et al. 2001, 2004, 2008; Greene 2008), who investigate the neural bases of consequentialist and deontological moral judgments. Greene and his colleagues elicited subjects' intuitions about a variety of trolley problems (cases that present a dilemma in which a trolley is racing towards five individuals, all of whom will be killed unless the trolley is instead diverted towards one individual) while the subjects were inside an fMRI scanner. The researchers found that, when given cases in which the trolley is diverted by pulling a switch, most subjects agreed that diverting the trolley away from the five and towards the one person was the right action. When, instead, the case involved pushing a person off a bridge to land in front of and halt the trolley, subjects were less likely to judge that sacrificing the one person was morally permissible. In addition, Greene found that subjects who did judge it permissible to push the person took longer to arrive at their judgment, suggesting that they had to overcome conflicting intuitions to do so. This finding is bolstered by the fact that when subjects considered pushing the person off the bridge, their brains revealed increased activity in areas associated with the aggregation and modulation of value signals (e.g., ventromedial prefrontal cortex and dorsolateral prefrontal cortex). Greene concludes that our aversion to pushing the person is due to an emotional response to the thought of causing physical harm to someone, while our willingness to pull the switch is due to the rational calculation that saving five people is preferable to letting five people die to spare one life. Greene and Singer use these findings as a basis for a debunking of deontological intuitions and a vindication of consequentialism, since the latter rests on intuitions which stem from a source we consider both generally reliable and appropriately used as the basis for moral reasoning. It should be noted, though, that this argument presupposes that emotional reactions are (at least in these cases) necessarily irrational or arational; philosophers who are unsympathetic to such a view of emotions need not follow Greene and Singer to their ultimate conclusions (Railton 2014).

A related approach aims to identify the features to which intuitions about philosophically important concepts are sensitive. Sripada (2011) thinks that the proper role of experimental investigations of moral intuitions is not to identify the mechanisms underlying moral intuitions; such knowledge, it is claimed, contributes little of relevance to philosophical theorizing. The proper role is rather to investigate, on a case-by-case basis, the features to which people are responding when they have such intuitions. On this view, people (philosophers included) can readily identify whether they have a given intuition, but not why they have it. An example comes from the debate over determinism and free will: manipulation cases have been thought to undermine compatibilist intuitions, that is, intuitions supporting the notion that determinism is compatible with “the sort of free will required for moral responsibility” (Pereboom 2001). In such cases, an unwitting victim is described as having been surreptitiously manipulated into having and reflectively endorsing a motivation to perform some action. Critics of compatibilism say that such cases satisfy compatibilist criteria for moral responsibility, and yet, intuitively, the actors are not morally responsible (Pereboom 2001). Sripada (2011) makes a strong case, however, through both mediation analysis and structural equation modeling, that to the extent that people feel the manipulee not to be morally responsible, they do so because they judge him in fact not to satisfy the compatibilist criteria.[3] Thus, by determining which aspects of the case philosophical intuitions are responding to, it might be possible to resolve otherwise intractable questions.
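The logic of a mediation analysis of this kind can be illustrated with a small Python sketch. It is not a reconstruction of Sripada's analysis: the ratings are invented, the variable and function names (`slope`, `two_predictor_slopes`, `manipulated`, `criteria_met`, `responsible`) are hypothetical, and the sketch covers only a simple product-of-coefficients mediation with ordinary least squares, not structural equation modeling. The question it asks is how much of the effect of the manipulation on responsibility ratings runs through the judgment that the agent satisfies the compatibilist criteria.

```python
from statistics import mean


def slope(x, y):
    """OLS slope of y regressed on a single predictor x (with intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


def two_predictor_slopes(x, m, y):
    """OLS slopes of y regressed on x and m together (with intercept)."""
    mx, mm, my = mean(x), mean(m), mean(y)
    dx = [v - mx for v in x]
    dm = [v - mm for v in m]
    dy = [v - my for v in y]
    sxx = sum(u * u for u in dx)
    smm = sum(u * u for u in dm)
    sxm = sum(u * v for u, v in zip(dx, dm))
    sxy = sum(u * v for u, v in zip(dx, dy))
    smy = sum(u * v for u, v in zip(dm, dy))
    det = sxx * smm - sxm ** 2  # zero only if x and m are perfectly collinear
    coef_x = (sxy * smm - smy * sxm) / det
    coef_m = (smy * sxx - sxy * sxm) / det
    return coef_x, coef_m


# Invented 1-7 ratings for twelve hypothetical participants, NOT real data.
manipulated = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1]    # 1 = manipulation vignette
criteria_met = [6, 7, 6, 5, 7, 6, 3, 2, 4, 3, 2, 3]   # "agent satisfies the criteria"
responsible = [6, 7, 5, 5, 7, 6, 3, 2, 4, 2, 3, 3]    # "agent is morally responsible"

a = slope(manipulated, criteria_met)         # manipulation -> mediator
total = slope(manipulated, responsible)      # manipulation -> outcome, overall
direct, b = two_predictor_slopes(manipulated, criteria_met, responsible)
indirect = a * b                             # effect carried through the mediator

print(f"total = {total:.3f}, direct = {direct:.3f}, indirect = {indirect:.3f}")
```

With least-squares estimates the total effect decomposes exactly into the direct effect plus the indirect effect, so a large indirect share is what one would expect if responsibility judgments track the perceived satisfaction of the criteria rather than the manipulation as such. A real analysis would also report uncertainty, for instance bootstrap confidence intervals for the indirect effect.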

2.3 An Example: Intentionality and Responsibility

Since Knobe’s seminal (2003) paper, experimental philosophers have investigated the complex patterns in people’s dispositions to make judgments about moral notions (praiseworthiness, blameworthiness, responsibility), cognitive attitudes (belief, knowledge, remembering), motivational attitudes (desire, favor, advocacy), and character traits (compassion, callousness) in the context of violations of and conformity to various norms (moral, prudential, aesthetic, legal, conventional, descriptive).[4] In Knobe’s original experiment, participants first read a description of a choice scenario: the protagonist is presented with a potential policy (aimed at increasing profits) that would result in a side effect (either harming or helping the environment). Next, the protagonist explicitly disavows caring about the side effect, and chooses to go ahead with the policy. The policy results as advertised: both the primary and the side effect occur. Participants are asked to attribute intentionality or an attitude (or, in the case of later work by Robinson et al. 2013, a character trait) to the protagonist. What Knobe found was that participants were significantly more inclined to indicate that the protagonist had intentionally brought about the side effect when it was perceived to be bad (harming the environment) than when it was perceived to be good (helping the environment). This effect has been replicated dozens of times, and its scope has been greatly expanded from intentionality attributions after violations of a moral norm to attributions of diverse properties after violations of a wide variety of norms.

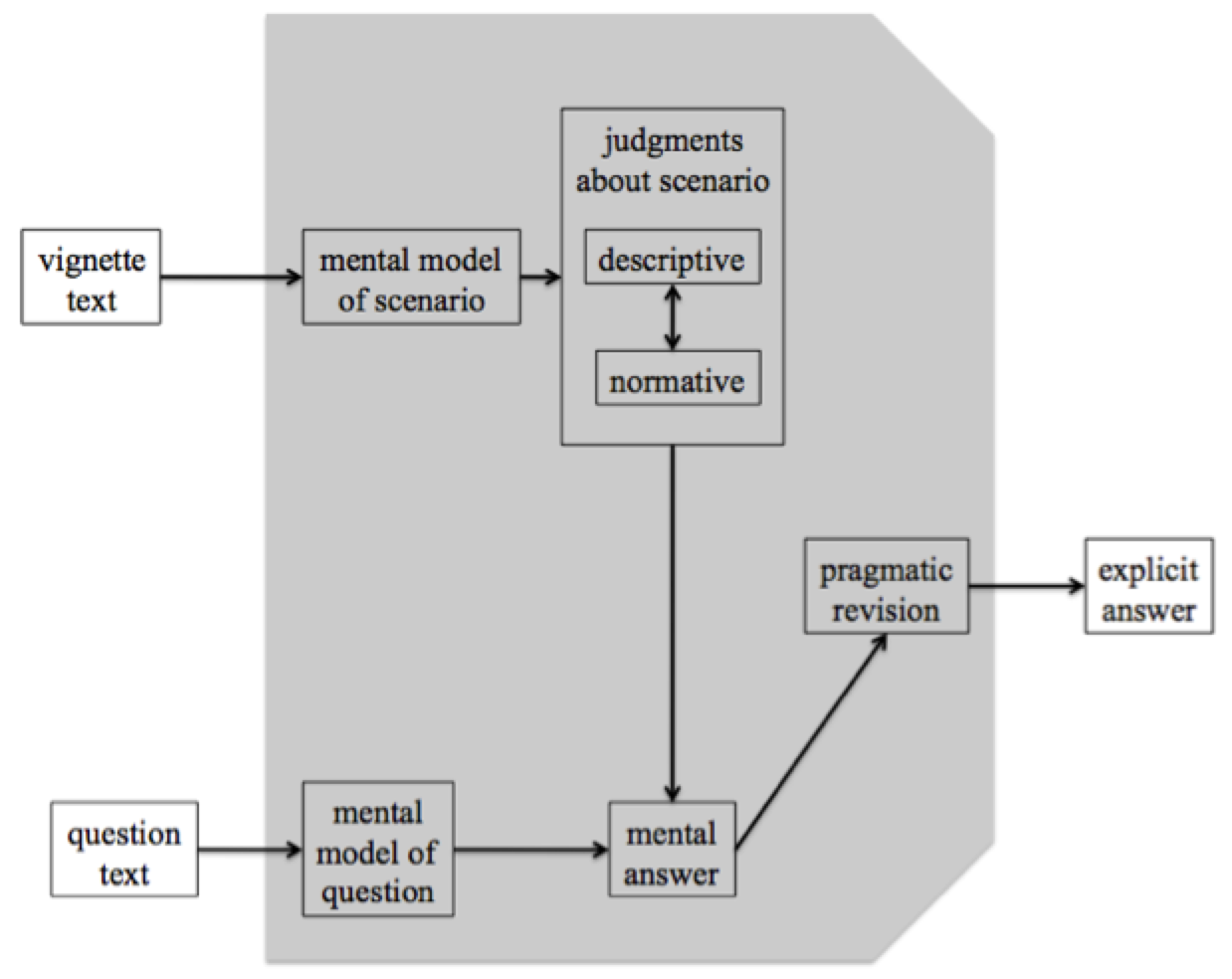

The first-order aim of interpreters of this body of evidence is to create a model that predicts when the attribution asymmetry will crop up. The second-order aims are to explain as systematically as possible why the effect occurs, and to determine the extent to which the attribution asymmetry can be considered rational. Figure 1, presented here for the first time, models how participants’ responses to this sort of vignette are produced:

Figure 1. Model of Participant Response to Experimental Philosophy Vignettes

In this model, the boxes represent constructs, the arrows represent causal or functional influences, and the area in grey represents the mind of the participant, which is not directly observable but is the target of investigation. In broad strokes, the idea is that a participant first reads the text of the vignette and forms a mental model of what happens in the story. On the basis of this model (and almost certainly while the vignette is still being read), the participant begins to interpret, i.e., to make both descriptive and normative judgments about the scenario, especially about the mental states and character traits of the people in it. The participant then reads the experimenter’s question, forms a mental model of what is being asked, and, based on her judgments about the scenario, forms an answer to that question. That answer may then be pragmatically revised (to avoid unwanted implications, to bring it more into accord with what the participant thinks the experimenter wants to hear, etc.) and is finally recorded as an explicit response to a statement about the protagonist’s attitudes (e.g., “he brought about the side effect intentionally,” graded on a Likert scale).[5]

A result that has been replicated repeatedly is that, when the vignette describes a norm violation, subjects indicate that they agree more strongly that the action violating the norm was performed intentionally. While this finding could be used by proponents of the negative program to undermine the conceptual coherence of our notion of moral responsibility, experimental moral philosophers working in the positive program have taken up the task of explaining the asymmetry by postulating models of unobservable entities that mediate, explain, and perhaps even rationalize the asymmetry. A discussion of several attempts to do so follows; each offers a different explanation of the asymmetry, but all represent vindications or at least rehabilitations of our intuitions about intention.

The Conceptual Competence Model

Perhaps the best known is Knobe’s conceptual competence model, according to which the asymmetry arises at the judgment stage. On this view, normative judgments about the action influence otherwise descriptive judgments about whether it was intentional (or desired, or expected, etc.). Moreover, this influence is taken to be part of the very conception of intentionality (desire, belief, etc.). Thus, on the conceptual competence model, the asymmetry in attributions is a rational expression of the ordinary conception of intentionality (desire, belief, etc.), which turns out to have a normative component.[6]

The Motivational Bias Model

The motivational bias model (Alicke 2008; Nadelhoffer 2004, 2006) agrees that the asymmetry originates in the judgment stage, and that normative judgments influence descriptive judgments. However, unlike the conceptual competence model, it takes this to be a bias rather than an expression of conceptual competence. Thus, on this model, the asymmetry in attributions is a distortion of the correct conception of intentionality (desire, belief, etc.).

The Deep Self Model

The deep self concordance model (Sripada 2010, 2012; Sripada & Konrath 2011) also locates the source of the asymmetry in the judgment stage, but does not recognize an influence (licit or illicit) of normative judgments on descriptive judgments. Instead, proponents of this model claim that when assessing intentional action, people not only attend to their representation of a person’s “surface” self—her expectations, means-end beliefs, moment-to-moment intentions, and conditional desires—but also to their representation of the person’s “deep” self, which harbors her sentiments, values, and core principles. According to this model, when assessing whether someone intentionally brings about some state of affairs, people determine (typically unconsciously) whether there exists sufficient concordance between their representation of the outcome the agent brings about and what they take to be her deep self. For instance, when the chairman says he does not care at all about either harming or helping the environment, people attribute to him a deeply anti-environment stance. When he harms the environment, this is concordant with his anti-environment deep self; in contrast, when the chairman helps the environment, this is discordant with his anti-environment deep self. According to the deep self concordance model, then, the asymmetry in attributions is a reasonable expression of the folk psychological distinction between the deep and shallow self.

The Conversational Pragmatics Model

Unlike the models discussed so far, the conversational pragmatics model (Adams & Steadman 2004, 2007) locates the source of the asymmetry in the pragmatic revision stage. According to this model, participants judge the protagonist not to have acted intentionally in both norm-conforming and norm-violating cases. However, when it comes time to tell the experimenter what they think, participants do not want to be taken as suggesting that the harm-causing protagonist is blameless, so they report that he acted intentionally. This is a reasonable goal, so according to the conversational pragmatics model, the attribution asymmetry is rational, though misleading.

The Deliberation Model

According to the deliberation model (Alfano, Beebe, & Robinson 2012; Robinson, Stey, & Alfano 2013; Scaife & Webber 2013), the best explanation of the complex patterns of evidence is that the very first mental stage, the formation of a mental model of the scenario, differs between norm-violation and norm-conformity vignettes. When the protagonist is told that a policy he would ordinarily want to pursue violates a norm, he acquires a reason to deliberate further about what to do; in contrast, when the protagonist is told that the policy conforms to some norm, he acquires no such reason. Participants tend to think of the protagonist as deliberating about what to do when and only when a norm would be violated. Since deliberation leads to the formation of other mental states such as beliefs, desires, and intentions, this basal difference between participants’ models of what happens in the story flows through the rest of their interpretation and leads to the attribution asymmetry. On the deliberation model, then, the attribution asymmetry originates earlier than other experimental philosophers suppose, and is due to rational processes.

2.4 Another Example: The Linguistic Analogy

Another positive program investigates the structure and form of moral intuitions, in addition to their content, with the aim of using these features to inform a theory of the cognitive structures underlying moral judgment and their etiology.

Rawls (1971), drawing on Chomsky’s (1965) theory of generative linguistics, suggested that moral cognition might be usefully modeled on our language faculty, a parallel endorsed by Chomsky himself:

I don’t doubt that we have a natural moral sense….That is, just as people somehow can construct an extraordinarily rich system of knowledge of language on the basis of rather limited and degenerate experience, similarly, people develop implicit systems of moral evaluation, which are more or less uniform from person to person. There are differences, and the differences are interesting, but over quite a substantial range we tend to make comparable judgments, and we do it, it would appear, in quite intricate and delicate ways involving new cases and agreement often about new cases… and we do this on the basis of a very limited environmental context available to us. The child or the adult doesn’t have much information that enables the mature person to construct a moral system that will in fact apply to a rich range of cases, and yet that happens.…whenever we see a very rich, intricate system developing in a more or less uniform way on the basis of rather restricted stimulus conditions, we have to assume that there is a very powerful, very rich, highly structured innate component that is operating in such a way as to create that highly specific system on the basis of the limited data available to it. (Chomsky, quoted in Mikhail 2005).

Here Chomsky points to four similarities between the development of linguistic knowledge and the development of moral knowledge:

L1: A child raised in a particular linguistic community almost inevitably ends up speaking an idiolect of the local language despite lack of sufficient explicit instruction, lack of extensive negative feedback for mistakes, and grammatical mistakes by caretakers.

M1: A child raised in a particular moral community almost inevitably ends up judging in accordance with an idiolect of the local moral code despite lack of sufficient explicit instruction, lack of sufficient negative feedback for moral mistakes, and moral mistakes by caretakers.

L2: While there is great diversity among natural languages, there are systematic constraints on possible natural languages.

M2: While there is great diversity among natural moralities, there are systematic constraints on possible natural moralities.

L3: Language-speakers obey many esoteric rules that they themselves typically cannot articulate or explain, and which some would not even recognize.

M3: Moral agents judge according to esoteric rules (such as the doctrine of double effect) that they themselves typically cannot articulate or explain, and which some would not even recognize.

L4: Drawing on a limited vocabulary, a speaker can both produce and comprehend a potential infinity of linguistic expressions.

M4: Drawing on a limited moral vocabulary, an agent can produce and evaluate a very large (though perhaps not infinite) class of action-plans, which are ripe for moral judgment.

We will now explain and evaluate each of these pairs of claims in turn.

L1/M1 refer to Chomsky’s poverty of the stimulus argument: despite receiving little explicit linguistic and grammatical instruction, children develop language rapidly and at a young age, and quickly come to display competence applying complex grammatical rules. Similarly, Mikhail and other proponents of the analogy argue that children acquire moral knowledge at a young age based on relatively little explicit instruction. However, critics of the analogy point out several points of difference. First, children actually receive quite a bit of explicit moral instruction and correction, and in contrast with the linguistic case, this often takes the form of explicit statements of moral rules: ‘don’t hit,’ ‘share,’ and so on. Secondly, there is debate over the age at which children actually display moral competence. Paul Bloom (2013; see also Blake, McAuliffe, and Warneken 2014) has argued that babies display moral tendencies as early as 3–6 months, but others (most famously Kohlberg (1969) and Piaget (1970); see also Turiel (1983) and Nucci (2002)) have argued that children are not fully competent with moral judgment until as late as 8–12 years, by which time they have received quite a bit of moral instruction, both implicit and explicit. A related point concerns the ability to receive instructions: in the case of language, a child requires some linguistic knowledge or understanding in order even to receive instruction: a child who has no understanding of language will not understand instructions given to her. But in the moral case, a child need not understand moral rules in order to be instructed in them, so there is less need to posit some innate knowledge or understanding. Nichols et al. (2016) have conducted a series of experiments using statistical learning and Bayesian modeling to show how children might learn complex rules with scant input. 
In addition, while children may initially acquire the moral values present in their environment, they sometimes change their values or develop their own values later in life, and such changes can arise spontaneously and with little conscious effort. This is in marked contrast with language; as any second-language learner will recognize, acquiring a new language later in life is effortful and, even if one succeeds in achieving fluency, the first language is rarely lost. Finally, as Prinz (2008) points out, children are punished for moral violations, which may explain why they are quicker to learn moral than grammatical rules.

L2/M2 refer to the existence of so-called linguistic universals: structures present in all known languages. Whether this is true in the moral case is controversial, for reasons we’ll discuss further below. Prinz (2008) and Sripada (2004) have argued that there are no exceptionless moral universals, unless one phrases or describes the norms in question in such a way as to render them vacuous. Sripada uses the example ‘murder is wrong’ as a vacuous rule: while this might seem like a plausible candidate for a moral universal, when we consider that ‘murder’ refers to a wrongful act of killing, we can see that the norm is not informative. But Mikhail might respond by claiming that the fact that all cultures recognize a subset of intentional killings as morally wrong, even if they differ in how they circumscribe this subset, is itself significant, and the relevant analogy here would be with the existence of categories like ‘subject’, ‘verb’, and ‘object’ in all languages, or with features like recursion, which are present in the grammars of all natural languages.

L3/M3 refer to the patterns displayed by grammatical and moral intuitions. In the case of language, native speakers can recognize grammatical and ungrammatical constructions relatively easily, but typically cannot articulate the rules underlying these intuitions. In the case of moral grammar, the analogy claims, the same is true: we produce moral intuitions effortlessly without being able to explain the rules underlying them. Indeed, in both cases, the rules underlying the judgments might be quite esoteric and difficult for native speakers to learn and understand—as anyone who has taught the doctrine of double effect to introductory students can likely attest.

L4/M4 refer to the fact that we can embed linguistic phrases within other linguistic phrases to create novel and increasingly complex phrases and sentences. This property is known as recursion, and is present in all known natural languages (indeed, in a 2002 paper, Chomsky suggests that perhaps recursion is the only linguistic universal; see Hauser, Chomsky, and Fitch 2002). For instance, just as phrases can be embedded in other phrases to form more complex phrases:

the calico cat \(\rightarrow\) the calico cat (that the dog chased) \(\rightarrow\) the calico cat (that the dog [that the breeding conglomerate wanted] chased) \(\rightarrow\) the calico cat (that the dog [that the breeding conglomerate {that was bankrupt} wanted] chased)

so moral judgments can be embedded in other moral judgments to produce novel moral judgments (Harman 2008, 346). For example:

It’s wrong to \(x \rightarrow\) It’s wrong to coerce someone to \(x \rightarrow\) It’s wrong to persuade someone to coerce someone to \(x\)
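The embedding pattern in the example above can be made concrete with a minimal sketch. The function names `embed_action` and `wrongness_judgment` are hypothetical and introduced only for illustration; the sketch shows how a small stock of verbs can be nested recursively to generate ever more complex action descriptions, and it makes no claim about how moral cognition is actually implemented.

```python
from typing import List


def embed_action(base: str, wrappers: List[str]) -> str:
    """Recursively embed a base action inside a sequence of wrapper verbs.

    The last wrapper in the list ends up outermost, mirroring how a phrase
    can be embedded inside another phrase.
    """
    if not wrappers:
        # Base case: nothing left to embed, so return the bare action.
        return base
    *inner, outer = wrappers
    # Recursive case: wrap the already-embedded inner description.
    return f"{outer} someone to {embed_action(base, inner)}"


def wrongness_judgment(base: str, wrappers: List[str]) -> str:
    """Form the judgment sentence for the (possibly embedded) action."""
    return f"It's wrong to {embed_action(base, wrappers)}"


if __name__ == "__main__":
    for verbs in ([], ["coerce"], ["coerce", "persuade"]):
        print(wrongness_judgment("x", verbs))
```

Each additional verb in the list yields a new, longer judgment sentence, so a finite vocabulary supports an open-ended set of evaluable action descriptions.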

The point is twofold: first, we can create complex action descriptions; second, we can evaluate novel and complex actions and respond with relatively automatic intuitions of grammatical or moral permissibility. Mikhail (2011: 43–48) uses experimental evidence of judgments about trolley problems to argue that our moral judgments are generated by imposing a deontic structure on our representation of the causal and evaluative features of the action under consideration. Mikhail points to a variation on the poverty of the stimulus argument, which he calls the poverty of the perceptual stimulus (Mikhail 2009: 37): when faced with a particular moral situation, we draw complex inferences about both act and actor based on relatively little data. Mikhail (2010) uses this argument against models of moral judgment as affect-driven intuitions:

Although each of these rapid, intuitive, and highly automatic moral judgments is occasioned by an identifiable stimulus, how the brain goes about interpreting these complex action descriptions and assigning a deontic status to each of them is not something revealed in any obvious way by the surface structure of the stimulus itself. Instead, an intervening step must be postulated: an intuitive appraisal of some sort that is imposed on the stimulus prior to any deontic response to it. Hence, a simple perceptual model, such as the one implicit in Haidt’s (2001) influential model of moral judgment, appears inadequate for explaining these intuitions, a point that can be illustrated by calling attention to the unanalyzed link between eliciting situation and intuitive response in Haidt’s model

Unlike the traditional poverty of the stimulus argument, this version does not rely on developmental evidence but on our ability to quickly and effortlessly appraise complicated scenarios, such as variations on trolley problems, and then issue normative judgments. The postulated intervening step is like the unconscious appeal to rules of grammar, and the evidence for such a step must come from experiments showing that we do, in fact, have such intuitions, and that they conform to predictable patterns.

The linguistic analogy relies on experimental evidence about the nature and pattern of our moral intuitions, and it outlines an important role for experiments in moral theory. If the analogy holds, then an adequate moral theory must be based on the judgments of competent moral judges, just as grammatical rules are constructed based on the judgments of competent speakers. A pressing task will be the systematic collection of such judgments. The analogy also suggests a kind of rationalism about moral judgment, since it posits that our judgments result from the unconscious application of rules to cases. Finally, the analogy has metaethical implications. Just as we can only evaluate grammaticality of utterances relative to a language, it may be that we can only evaluate the morality of an action relative to a specific moral system. How to individuate moralities, and how many (assuming there are multiple ones) there are, is a question for further empirical investigation, and here again experimental evidence will play a crucial role.

3. Character, Wellbeing, and Emotion

Until the 1950s, modern moral philosophy had largely focused on either consequentialism or deontology. The revitalization of virtue ethics led to a renewed interest in virtues and vices (e.g., honesty, generosity, fairness, dishonesty, stinginess, unfairness), in eudaimonia (often translated as ‘happiness’ or ‘flourishing’), and in the emotions. In recent decades, experimental work in psychology, sociology, and neuroscience has been brought to bear on the empirical grounding of philosophical views in these areas.

3.1 Character and Virtue

A virtue is a complex disposition comprising sub-dispositions to notice, construe, think, desire, and act in characteristic ways. To be generous, for instance, is (among other things) to be disposed to notice occasions for giving, to construe ambiguous social cues charitably, to desire to give people things they want, need, or would appreciate, to deliberate well about what they want, need, or would appreciate, and to act on the basis of such deliberation. Manifestations of such a disposition are observable and hence ripe for empirical investigation. Virtue ethicists of the last several decades have sometimes been optimistic about the distribution of virtue in the population. Alasdair MacIntyre claims, for example, that “without allusion to the place that justice and injustice, courage and cowardice play in human life very little will be genuinely explicable” (1984, 199). Julia Annas (2011, 8–10) claims that “by the time we reflect about virtues, we already have some.” Linda Zagzebski (2010) provides an “exemplarist” semantics for virtue terms that only gets off the ground if there are in fact many virtuous people.

Starting with Owen Flanagan’s Varieties of Moral Personality (1993), philosophers began to worry that empirical results from social psychology were inconsistent with the structure of human agency presupposed by virtue theory. In this framework, people are conceived as having more or less fixed traits of character that systematically order their perception, cognition, emotion, reasoning, decision-making, and behavior. For example, a generous person is inclined to notice and seek out opportunities to give supererogatorily to others. The generous person is also inclined to think about what would (and wouldn’t) be appreciated by potential recipients, to feel the urge to give and the glow of satisfaction after giving, to deliberate effectively about when, where, and how to give to whom, to come to firm decisions based on such deliberation, and to follow through on those decisions once they’ve been made. Other traits are meant to fit the same pattern, structuring perception, cognition, motivation, and action of their bearers. Famous results in social psychology, such as Darley and Batson’s (1973) Good Samaritan experiment, seem to tell against this view of human moral conduct. When someone helps another in need, they may do so simply because they are not in a rush, rather than because they are expressing a fixed trait like generosity or compassion.

In the virtue theoretic framework, people are not necessarily assumed to already be virtuous. However, they are assumed to be at least potentially responsive to the considerations that a virtuous person would ordinarily notice and take into account. Flanagan (1993), followed by Doris (1998, 2002), Harman (1999, 2000), and Alfano (2013), made trouble for this framework by pointing to social psychological evidence suggesting that much of people’s thinking, feeling, and acting is instead predicted by (and hence responsive to) situational factors that don’t seem to count as reasons at all, not even bad reasons or temptations to vice. These include influences such as ambient sensibilia (sounds, smells, light levels, etc.), seemingly trivial and normatively irrelevant inducers of positive and negative moods, order of presentation of stimuli, and a variety of framing and priming effects, many of which are reviewed in Alfano (2013: 40–50).[7] It’s worth emphasizing the depth of the problem these studies pose. It’s not that they suggest that most people aren’t virtuous (although they do suggest that as well). It’s that they undermine the entire framework in which people are conceived as cognitively sensitive and motivationally responsive to reasons. Someone who fails to act virtuously because they gave in to temptation can be understood in the virtue theoretic framework. Someone who fails to act virtuously because they’d just been subliminally primed with physical coldness, which in turn is metaphorically associated with social coldness, finds no place in the virtue theoretic framework. These sorts of effects push us to revamp our whole notion of agency and personhood (Doris 2009).

Early estimates suggested that individual difference variables typically explain less than 10% of the variance in people’s behavior (Mischel 1968)—though, as Funder & Ozer (1983) pointed out, situational factors may explain less than 16%.[8] More recent aggregated evidence indicates that situational factors explain approximately twice as much of the variance in human behavior as the five main trait factors (Rauthmann et al. 2014). Convergent evidence from both lexicographical and survey studies indicates that there are at least five dimensions of situations that reliably predict thought, feeling, and behavior: (1) negative valence, (2) adversity, (3) duty, (4) complexity, and (5) positive valence (Rauthmann and Sherman 2018).
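Figures for variance explained are conventionally obtained by squaring a correlation coefficient. As a rough worked illustration, assume correlations of about 0.30 between trait measures and behavior and about 0.40 between situational manipulations and behavior. These two values are assumptions chosen because they reproduce the percentages quoted above; they are not stated in this entry.

\[
r_{\text{trait}}^{2} = (0.30)^{2} = 0.09 \; (< 10\%), \qquad r_{\text{situation}}^{2} = (0.40)^{2} = 0.16 \; (= 16\%)
\]

On these assumed values, the gap between the two kinds of predictor is modest, which is the point of the comparison.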

According to Doris (2002), the best explanation of this lack of cross-situational consistency is that the great majority of people have local, rather than global, traits: they are not honest, courageous, or greedy, but they may be honest-while-in-a-good-mood, courageous-while-sailing-in-rough-weather-with-friends, and greedy-unless-watched-by-fellow-parishioners. In contrast, Christian Miller (2013, 2014) thinks the evidence is best explained by a theory of mixed global traits, such as the disposition to (among other things) help because it improves one’s mood. Such traits are global, in the sense that they explain and predict behavior across situations (someone with such a disposition will, other things being equal, typically help so long as it will maintain her mood), but normatively mixed, in the sense that they are neither virtues nor vices. Mark Alfano (2013) goes in a third direction, arguing that virtue and vice attributions tend to function as self-fulfilling prophecies. People tend to act in accordance with the traits that are attributed to them, whether the traits are minor virtues such as tidiness (Miller, Brickman, & Bolen 1975) and ecology-mindedness (Cornelissen et al. 2006, 2007), major virtues such as charity (Jensen & Moore 1977), cooperativeness (Grusec, Kuczynski, Simutis & Rushton 1978), and generosity (Grusec & Redler 1980), or vices such as cutthroat competitiveness (Grusec, Kuczynski, Simutis & Rushton 1978). On Alfano’s view, when people act in accordance with a virtue, they often do so not because they possess the trait in question, but because they think they do or because they know that other people think they do. He calls such simulations of moral character factitious virtues, and even suggests that the notion of a virtue should be revised to include reflexive and social expectations.[9]

It might seem that the criticisms that motivate these novel approaches to virtue miss their mark. After all, virtue ethicists needn’t (and often don’t) commit themselves to the claim that almost everyone is virtuous. Instead, many argue that virtue is the normative goal of moral development, and that people mostly fail in various ways to reach that goal. The argument from the fact that most people’s dispositions are not virtues to a rejection of orthodox virtue ethics, then, might be thought a non sequitur, at least for such views. But empirically-minded critics of virtue ethics do not stop there. They all have positive views about what sorts of dispositions people have instead of virtues. These dispositions are alleged to be so structurally dissimilar from virtues (as traditionally understood) that it may be psychologically unrealistic to treat (traditional) virtue as a regulative ideal. What matters, then, is the width of the gap between the descriptive and the normative, between the (structure of the) dispositions most people have and the (structure of the) dispositions that count as virtues.

Three leading defenses against this criticism have been offered. Some virtue ethicists (Kupperman 2009) have conceded that virtue is extremely rare, but argued that it may still be a useful regulative ideal. Others (Hurka 2006, Merritt 2000) have attempted to weaken the concept of virtue in such a way as to enable more people, or at least more behaviors, to count as virtuous. Still others (Kamtekar 2004, Russell 2009, Snow 2010, Sreenivasan 2002) have challenged the situationist evidence or its interpretation. While it remains unclear whether these defenses succeed, grappling with the situationist challenge has led both defenders and challengers of virtue ethics to develop more nuanced and empirically informed views.[10]

3.2 Wellbeing, Happiness, and the Good Life

Philosophers have always been interested in what makes a human life go well, but recent decades have seen a dramatic increase in both psychological and philosophical research into happiness, well-being, and what makes for a good life. Two distinctions are important here. First, we need to distinguish between a ‘good life’ in the sense of a life that goes well for the one who lives it, and a morally good life, since philosophers have long debated whether a morally bad person could enjoy a good life. The empirical study of wellbeing focuses primarily on lives that are good in the former sense: good for the person whose life it is. Second, we need to distinguish between a hedonically good life and an overall good life. A life that is hedonically good is one that the subject experiences as pleasant; an overall good life might not contain much pleasure but might be good for other reasons, such as what it accomplishes. We might decide, after investigation, that an overall good life must be a hedonically good life, but the two concepts are distinct.

With these distinctions in mind, we can see the relevance of experimental evidence to investigations in this area. First and perhaps most obviously, experiments can investigate our intuitions about what constitutes a good life, thereby giving us insight into the ordinary concepts of happiness, well-being, and flourishing. To this end, Phillips, Nyholm, & Liao (2014) investigated intuitions about the relationship between morality and happiness. Their results suggest that the ordinary conception of happiness involves both descriptive and normative components: specifically, we judge that people are happy if they are experiencing positive feelings that they ought to experience. So, to use their examples, a Nazi doctor who gets positive feelings from conducting his experiments is not happy. By contrast, a nurse who gets positive feelings from helping sick children is happy, though a nurse who is made miserable by the same actions is not happy.

Another set of experimental findings bearing on this question involves Nozick’s (1974: 44–45) experience machine thought experiment. In response to the hedonist’s claim that pleasure is the only intrinsic good, Nozick asks us to consider the following:

Suppose there was an experience machine that would give you any experience you desired. Super-duper neuropsychologists could stimulate your brain so that you would think and feel you were writing a great novel, or making a friend, or reading an interesting book. All the time you would be floating in a tank, with electrodes attached to your brain. Should you plug into this machine for life, preprogramming your life experiences? […] Of course, while in the tank you won’t know that you’re there; you’ll think that it’s all actually happening […] Would you plug in?

Nozick argues that our response to the experience machine reveals that well-being is not purely subjective: “We learn that something matters to us in addition to experience by imagining an experience machine and then realizing that we would not use it.” De Brigard (2010) reports finding that subjects’ intuitions about the experience machine were different if they were told they were already in such a machine, in which case they would not choose to unplug; he explains this in terms of the status quo bias. Weijers (2014) goes further, asking subjects about Nozick’s original scenario while also asking them to justify their response; he found that many of the justifications implied a kind of imaginative resistance to the scenario or cited irrelevant factors. He also found that respondents were more likely to say that a ‘plugged in life’ would be better when they were choosing for someone else, rather than for themselves.

A second type of study involves investigating the causes and correlates of happiness, well-being, and life-satisfaction. These experiments look at the conditions under which people report positive affect or life-satisfaction, as well as the conditions under which they judge that their lives are going well. This is distinct from the first type of research, since the fact that someone reports an experience as being pleasurable does not necessarily tell us whether they would judge that experience to be pleasurable for someone else; there may be asymmetries in first- and third-person evaluations. Furthermore, this type of research can tell us how various candidates contribute to well-being from a first-person perspective, but that doesn’t settle the question of the concept of a good life. I might judge that my life is going well, yet fail to notice that I am doing so because I am in a very good mood, and that in fact I am not accomplishing any of my goals; if confronted with another person in a similar situation, I might not make the same judgment. Which of these judgments best represents our concept of well-being is a tricky question, and a normative one since experimental evidence alone may not settle it. As it turns out, experiments have uncovered a number of factors that influence our own reports and assessments of pleasure and well-being. We will discuss two areas of research in particular: reports of pleasures and pains, and judgments of life satisfaction.

First, in the realm of hedonic evaluation, there are marked divergences between the aggregate sums of in-the-moment pleasures and pains and ex post memories of pleasures and pains. For example, the remembered level of pain of a colonoscopy is well-predicted by the average of the worst momentary level of pain and the final level of pain; furthermore, the duration of the procedure has no measurable effect on ex post pain ratings (Redelmeier & Kahneman 1996). What this means is that people’s after-the-fact summaries of their hedonic experiences are not simple integrals with respect to time of momentary hedonic tone. If the colonoscopy were functionally completed after minute 5, but arbitrarily prolonged for another 5 minutes so that the final level of pain was less at the end of minute 10 than at the end of minute 5, the patient would retrospectively evaluate the experience as less painful. This complicates accounts of wellbeing in terms of pleasure (for example, Bentham’s 1789/1961 hedonism) insofar as it raises the question whether the pleasure being measured is pleasure as experienced in the moment, or retrospectively: if, in the very act of aggregating pleasure, we change the way we evaluate it, this is a complication for hedonism and for some versions of utilitarianism. Since well-being is supposed to be a normative notion capable of guiding both individual actions and social policy, findings like these also call into question what, exactly, we ought to be seeking to maximize: pleasurable moments, or the overall retrospective evaluation of pleasure.
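The contrast between summing momentary pain and the retrospective rule described above can be illustrated with a minimal sketch. The minute-by-minute ratings are hypothetical numbers invented for illustration, not data from Redelmeier & Kahneman, and the function names `total_pain` and `peak_end_pain` are likewise introduced here as assumptions.

```python
from typing import Sequence


def total_pain(ratings: Sequence[float]) -> float:
    """Time-aggregated pain: the sum of the momentary ratings."""
    return sum(ratings)


def peak_end_pain(ratings: Sequence[float]) -> float:
    """Retrospective estimate: average of the worst and the final rating."""
    return (max(ratings) + ratings[-1]) / 2


# Hypothetical pain ratings (0-10 scale), one per minute.
short_procedure = [2, 4, 6, 7, 8]                      # ends at its worst moment
prolonged_procedure = short_procedure + [6, 5, 4, 3, 2]  # milder extra minutes

if __name__ == "__main__":
    for name, ratings in [("short", short_procedure),
                          ("prolonged", prolonged_procedure)]:
        print(name, total_pain(ratings), peak_end_pain(ratings))
```

In this toy case the prolonged procedure contains strictly more total pain, yet the peak-end average rates it as less painful, because the added minutes lower the final rating without changing the peak.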

Such findings have led some philosophers to seek out alternatives to hedonism in hopes of establishing a more empirically stable underpinning for well-being: in particular, the idea that well-being consists in life satisfaction. The most prominent psychologist in this field is Ed Diener,[11] whose Satisfaction with Life Scale asks participants to agree or disagree with statements such as, “I am satisfied with my life” and “If I could live my life over, I would change almost nothing.” These questions seem to get at more stable and significant features of life than hedonic assessments in terms of pleasure and pain, and one might expect the responses to be more consistent and robust. However, two problems arise. The first is that participants’ responses to life satisfaction questionnaires may not be accurate reports of standing attitudes. Fox & Kahneman (1992), for instance, showed that, especially in personal domains people seem to value (friends and love life), what predicts participants’ responses is not recent intrapersonal factors but social comparison. Someone who has just lost a friend but still thinks of herself as having more friends than her peers will tend to report higher life satisfaction than someone who has just gained a friend but who still thinks of himself as having fewer friends than his peers.

The second problem is that life satisfaction surveys seem to be subject to order effects. For instance, if a participant is asked a global life satisfaction question and then asked about his romantic life, the correlation between these questions tends to be near zero, but if the participant is asked the dating question first, the correlation tends to be high and positive (Strack, Martin, & Schwarz 1988).[12] This suggests that life-satisfaction judgments might be unduly influenced by the topics and questions considered just before making a life-satisfaction judgment. Another example is Schwarz and Clore’s oft-cited 1983 study, in which the authors reported a correlation between weather and life-satisfaction when subjects were asked about weather first, but not when the weather question followed the life-satisfaction query. We note, however, that there is significant controversy about this example. Some recent papers claim to find a correlation between the two (e.g. Connolly 2013) while others claim there is no evidence for an effect of weather on life-satisfaction judgments (e.g. Lucas & Lawless 2013).

Findings like the above have led some researchers (e.g., Haybron 2008) to argue that life-satisfaction judgments are too arbitrary ever to satisfy the requirements of a theory of well-being. In response, Tiberius and Plakias (2011) argue for an idealized life-satisfaction theory they call value-based life satisfaction. They suggest that asking subjects to consider their life-satisfaction while attending to the domains they most value removes much of the instability plaguing the studies described above, a claim they support with research showing that judgments made after priming subjects to think about their values demonstrate higher levels of retest stability (Schimmack & Oishi 2005).

3.3 Emotion and Affect

As much of the previous discussion reveals, the relationship between emotion and moral judgment is one of the central concerns of both traditional and experimental moral philosophy. Our discussion of this topic will focus on two types of research: the role of emotion in moral reasoning generally, and the role of one specific emotion—disgust—in moral judgments.

One debate concerns whether hot, emotion-driven reasoning is necessarily better or worse than reasoning based only on cooler, more reflective thinking—a distinction sometimes referred to using Kahneman’s terminology of system 1/system 2 thinking. The terminology is not perfect, though, because Kahneman’s terms map onto a quick, automatic, unconscious system of judgments (system 1) and a slow, effortful, deliberative decision-making process (system 2), and as we saw above, this is not a distinction between emotion and reason, since rule-based judgments can be automatic and unconscious while emotional judgments might be effortful and conscious. The debate between Mikhail and Haidt is a debate over the extent to which emotions rather than rules explain our moral judgments; Singer and Greene’s arguments against deontology rest on the claim that emotion-backed judgments are less justified than their utilitarian counterparts.

One reason for thinking that moral judgments essentially involve some emotional component is that they tend to motivate us. Internalism is the view that there is a necessary connection between moral judgment and motivation. This contrasts with externalism, which doesn’t deny that moral judgments are usually motivating, but does deny that they are necessarily so, as the link between judgment and motivation is only contingent. Since emotions are intrinsically motivational, showing that moral judgments consist, even in part, of emotions would vindicate internalism. One route to this conclusion has involved surveying people’s intuitions about moral judgment. Nichols (2002: 289) asked subjects whether an agent who “had no emotional reaction to hurting other people” but claims to know that hurting others is wrong really understands that hurting others is wrong. He found that most subjects did attribute understanding in such cases, suggesting that the ordinary concept of moral judgment is externalist, a claim that is further supported by Strandberg and Björklund (2013). An additional source of evidence comes from psychopaths and patients with traumatic brain injuries, both of whom show evidence of impaired moral functioning. How one regards this evidence, though, depends on which perspective one begins with: while externalists (Nichols 2002, Roskies 2003) claim that the existence of psychopaths provides a counterexample to the claim that moral judgment is necessarily motivating (since psychopaths lack empathy and an aversion to harming others), internalists (Smith 1994, Maibom 2005, Kennett and Fine 2008) argue that psychopaths don’t actually make full-fledged moral judgments. Psychologist Robert Hare (1993: 129) quotes a researcher as saying that they “know the words but not the music.” Psychopaths also display other cognitive and affective deficiencies, as evidenced by their poor decision-making skills in other areas. This may mean that they should not be taken as evidence against internalism.

A reason for thinking that moral judgments ought not involve emotion is that emotions sometimes seem to lead to judgments that are distorted or off-track, or that seem otherwise unjustified. One emotion in particular is often mentioned in this context: disgust. This emotion, which seems to be unique to human animals and emerges relatively late in development (from the ages of about 5–8), involves a characteristic gaping facial expression, a tendency to withdraw from the object of disgust, a slight reduction in body temperature and heart rate, and a sense of nausea and the need to cleanse oneself. In addition, the disgusted subject is typically motivated to avoid and even expunge the offending object, experiences it as contaminating and repugnant, becomes more attuned to other disgusting objects in the immediate environment, and is inclined to treat anything that the object comes in contact with (whether physically or symbolically) as also disgusting. This last characteristic is often referred to as ‘contamination potency’ and it is one of the features that make disgust so potent and, according to its critics, so problematic. The disgust reaction can be difficult to repress, is easily recognized, and empathically induces disgust in those who do recognize it.[13] There are certain objects that almost all normal adults are disgusted by (feces, decaying corpses, rotting food, spiders, maggots, gross physical deformities). But there is also considerable intercultural and interpersonal variation beyond these core objects of disgust, where the emotion extends into food choices, sexual behaviors, out-group members, and violations of social norms. Many studies have claimed to show that disgust is implicated in harsher moral judgments (Schnall, Haidt, & Clore 2008), citing experiments in which subjects filling out questionnaires in smelly or dirty rooms evaluated moral transgressions more harshly. Others have gone further and argued that disgust might itself cause or comprise a part of moral judgment (Haidt 2001; Wheatley & Haidt 2005). If this is true, critics argue, we ought to be wary of those judgments, because disgust has a checkered past in multiple senses. First, it has historically been (and still is) associated with racism, sexism, homophobia and xenophobia; the language of disgust is often used in campaigns of discrimination and even genocide. Second, disgust’s evolutionary history gives us reason to doubt it. Kelly (2011) argues that the universal bodily manifestations of disgust evolved to help humans avoid ingesting toxins and other harmful substances, while the more cognitive or symbolic sense of offensiveness and contamination associated with disgust evolved to help humans avoid diseases and parasites. This system was later recruited for an entirely distinct purpose: to help mark the boundaries between in-group and out-group, and thus to motivate cooperation with in-group members, punishment of in-group defectors, and exclusion of out-group members.

If Kelly’s account of disgust is on the right track, it seems to have a number of important moral upshots. One consequence, he argues, is “disgust skepticism” (139), according to which the combination of disgust’s hair trigger and its ballistic trajectory means that it is especially prone to incorrigible false positives that involve unwarranted feelings of contamination and even dehumanization. Hence, “the fact that something is disgusting is not even remotely a reliable indicator of moral foul play” but is instead “irrelevant to moral justification” (148).

It is important to note that the skeptical considerations Kelly raises are specific to disgust and its particular evolutionary history, so they aren’t intended to undermine the role of all emotions in moral judgment. Still, if Kelly is correct, and if disgust is implicated in lots of moral judgments, we may have a reason to be skeptical of many of our judgments. Plakias (2011, 2017) argues against the first conjunct of this antecedent, claiming that Kelly and other ‘disgust skeptics’ are wrong to claim that the purposes of moral and physical disgust are totally distinct; she suggests that disgust is sometimes a fitting response to moral violations that protects against social contagion. May (2014) argues against the second conjunct, claiming that disgust’s role in moral judgment has been significantly overblown; at most, we have evidence that disgust can, in certain cases, slightly amplify moral judgments that are already in place. More recent empirical work lends some support to this assessment: it indicates that incidental disgust has little effect on the harshness of moral judgments, though dispositional disgust-sensitivity does (Landy and Goodwin 2015).

4. Metaethics and Experimental Moral Philosophy

Metaethics steps back from moral theorizing to ask about the nature and function of morality. While first-order ethics seeks to explain what we should do, metaethics seeks to explain the status of first-order moral theories themselves: what are their metaphysical commitments, and what evidence do we have for or against them? Which epistemology best characterizes our moral practices? What is the correct semantics for moral language? These questions might not seem obviously empirical, but insofar as it attempts to give an account of moral semantics, epistemology, and ontology, metaethics aims, in part, to capture or explain what we do when we engage in moral talk, judgment, and evaluation. To the extent that metaethics sees itself as characterizing our ordinary practice of morality, it is therefore answerable to empirical data about that practice. To the extent that a theory claims that we are mistaken or in widespread error about the meaning of moral language, or that we lack justification for our core moral beliefs, this is taken to be a strike against that theory. For example, relativism is often criticized on the grounds that it requires us to give up the (putatively) widespread belief that moral claims concern objective matters of fact and are true or false independently of our beliefs or attitudes. We have already seen several ways that experimental data bear on theories about moral reasons (the debate between internalists and externalists) and the epistemology of moral judgment (the debate over the role of intuitions). In this section we will examine experimental contributions to the debate over moral realism, arguments about moral disagreement, and moral language.

4.1 Folk Metaethics and Moral Realism

Much contemporary metaethics relies on assumptions about the nature of ordinary moral thought, discourse, and practice; metaethicists tend to see their project as essentially conservative. For example, Michael Smith (1994) writes that the first step in metaethical theorizing is to “identify features that are manifest in ordinary moral practice” and the second step is to “make sense of a practice having these features.” This assumption has had a major impact on the debate between realists and anti-realists, with realists claiming to best capture the nature of ordinary moral discourse: “We begin as (tacit) cognitivists and realists about ethics,” says Brink (1989), and then “we are led to some form of antirealism (if we are) only because we come to regard the moral realist’s commitments as untenable… Moral realism should be our metaethical starting point, and we should give it up only if it does involve unacceptable metaphysical and epistemological commitments.”

But experimental work has cast doubt on this claim, beginning with Goodwin and Darley (2008), and continued by James Beebe (2014) and others (Wright et al. 2013; Campbell and Kumar 2012; Goodwin and Darley 2010; and Sarkissian et al. 2011). Goodwin and Darley presented subjects with statements drawn from the moral, ethical, and aesthetic domains and asked whether they agreed with each statement (for example, “before the 3rd month of pregnancy, abortion for any reason (of the mother’s) is morally permissible”), whether they thought it represented a fact or an opinion or attitude, and whether, if someone were to disagree with them about the statement, at least one of the disputants would have to be mistaken. (We will say more about the authors’ use of disagreement as a proxy for realism in the following section.) In general, subjects rated moral statements as less factual than obviously factual statements (e.g. “the earth is at the center of the universe”) but more factual than statements about matters of taste or etiquette. What is striking about these findings is not just that people are not straightforwardly realist, but that they seem to treat moral questions variably: some are treated as matters of fact, others as matters of opinion. This pattern has been replicated in several studies, and persists even when subjects are allowed to determine for themselves which issues to assign to the moral domain, suggesting that subjects do not think moral claims are uniformly objective