Truthlikeness

Truth is widely held to be the constitutive aim of inquiry. Even those who think the aim of inquiry is something more accessible than the truth (such as the empirically discernible truth), as well as those who think the aim is something more robust than possessing truth (such as the possession of knowledge) still affirm truth as a necessary component of the end of inquiry. And, other things being equal, it seems better to end an inquiry by endorsing truths rather than falsehoods.

Even if there is something to the thought that inquiry aims at truth, it has to be admitted that truth is a rather coarse-grained property of propositions. Some falsehoods seem to realize the aim of getting at the truth better than others. Some truths better realize the aim than other truths. And perhaps some falsehoods even realize the aim better than some truths do. The dichotomy of the class of propositions into truths and falsehoods needs to be supplemented with a more fine-grained ordering — one which classifies propositions according to their closeness to the truth, their degree of truthlikeness, or their verisimilitude.

We begin with the logical problem of truthlikeness: the problem of giving an adequate account of the concept and determining its logical properties. In §1 we lay out the logical problem and various possible solutions to it. In §1.1 we examine the basic assumptions which generate the logical problem, which in part explain why the problem emerged when it did. Attempted solutions to the problem quickly proliferated, but they can be gathered together under three broad lines of attack. The first two, the content approach (§1.2) and the consequence approach (§1.3), were both initiated by Popper in his ground-breaking work and both deliver what he regarded as an essential desideratum for any theory of truthlikeness: what we will call the value of content for truths. Although Popper's specific proposals did not capture the intuitive concept, the content and consequence approaches are still being actively developed and refined. The third, the likeness approach (§1.4), takes the likeness in truthlikeness seriously. Assuming likeness relations among worlds, the likeness of a proposition to the truth would seem to be some function of the likeness of worlds that make the proposition true to the actual world. The main problems facing the likeness approach are outlined in §1.4.1–§1.4.4.

Given that there are at least three different approaches to the logical problem, a natural question is whether there might be a way of combining the different desiderata that motivate them (§1), thereby incorporating the most desirable features of each. Recent results suggest that unfortunately the three approaches, while not logically incompatible, cannot be fruitfully combined. Any attempt to unify these approaches will have to jettison or radically modify at least one of the motivating desiderata.

There are two further problems of truthlikeness, both of which presuppose the solution of the logical problem. One is the epistemological problem of truthlikeness (§2). Even given a suitable solution to the logical problem, there remains a nagging question about our epistemic access to truthlikeness. The other is the axiological problem. Truth and truthlikeness are interesting, at least in part because they appear to be cognitive values of some sort. Even if they are not cognitively valuable themselves, they are closely connected to what is of cognitive value. The relations between truth, truthlikeness and cognitive value are explored in §3.

- 1. The Logical Problem

- 2. The Epistemological Problem

- 3. The Axiological Problem

- 4. Conclusion

- Bibliography

- Academic Tools

- Other Internet Resources

- Related Entries

1. The Logical Problem

Truth, perhaps even more than beauty and goodness, has been the target of an extraordinary amount of philosophical dissection and speculation. This is unsurprising. After all, truth is the constitutive aim of all inquiry and a necessary condition of knowledge. And yet (as the redundancy theorists of truth have emphasized) there is something disarmingly simple about truth. That the number of planets is 8 is true just in case, well, … the number of planets is 8. By comparison with truth, the more complex and much more interesting concept of truthlikeness has only recently become the subject of serious investigation. The logical problem of truthlikeness is the problem of giving a consistent and materially adequate account of the concept. But before embarking on the project, we have to make it plausible that there is a coherent concept in the offing to be investigated.

1.1 What's the problem?

The proposition that the number of planets in our solar system is 9 may be false, but it is quite a bit closer to the truth than the proposition that the number of planets in our solar system is 9 billion. (One falsehood may be closer to the truth than another falsehood.) The true proposition that the number of the planets is between 7 and 9 inclusive is closer to the truth than the true proposition that the number of the planets is greater than or equal to 0. (So a truth may be closer to the truth than another truth.) Finally, with the demotion of Pluto to planetoid status, the proposition that the number of the planets is either less than or greater than 9 may be true but it is arguably not as close to the whole truth of this matter (namely, that there are precisely 8 planets) as its highly accurate but strictly false negation: that there are 9 planets.

This particular numerical example is admittedly extremely simple, but a wide variety of judgments of relative likeness to truth crop up both in everyday parlance as well as in scientific discourse. While some involve the relative accuracy of claims concerning the value of numerical magnitudes, others involve the sharing of properties, structural similarity, or closeness among putative laws.

Consider a non-numerical example, also highly simplified but quite topical in the light of the recent rise in status of the concept of fundamentality. Suppose you are interested in the truth about which particles are fundamental. At the outset of your inquiry all you know are various logical truths, like the tautology either electrons are fundamental or they are not. Tautologies like these are pretty much useless in helping you locate the truth about fundamental particles. Now, suppose that the standard model is actually on the right track. Then learning that electrons are fundamental (which we suppose, for the sake of the example, is true) edges you a little bit closer to your goal. It is by no means the complete truth about fundamental particles, but surely it is a piece of it. If you go on to learn that electrons, along with muons and tau particles, are a kind of lepton and that all leptons are fundamental, you have presumably edged a little closer.

If this is right, then some truths are closer to the truth about fundamental particles than others.

The discovery that atoms are not fundamental, that they are in fact composite objects, displaced the earlier hypothesis that atoms are fundamental. For a while the proposition that protons, neutrons and electrons are the fundamental components of atoms was embraced, but unfortunately it too turned out to be false. Still, this latter falsehood seems quite a bit closer to the truth than its predecessor (assuming, again, that the standard model is true). And even if the standard model contains errors, as surely it does, it is presumably closer to the truth about fundamental particles than these other falsehoods. At least, it makes sense to suppose that it might be.

So again, some falsehoods may be closer to the truth about fundamental particles than other falsehoods.

As we have seen, a tautology is not a terrific truth locator, but if you moved from the tautology that electrons either are or are not fundamental to embrace the false proposition that electrons are not fundamental you would have moved further from your goal.

So, some truths are closer to the truth than some falsehoods.

But it is by no means obvious that all truths about fundamental particles are closer to the whole truth than any falsehood. If you move from the tautology to the false proposition that electrons, protons and neutrons are the fundamental components of atoms, you may well have taken a step towards the truth.

If this is right, certain falsehoods may be closer to the truth than some truths.

Investigations into the concept of truthlikeness began in earnest with a tiny trickle of activity in the early nineteen sixties, became something of a torrent from the mid-seventies until the late eighties, and are now a relatively steady stream. Why is truthlikeness such a latecomer to the philosophical scene? The reason is simple. It wasn't until the latter half of the twentieth century that mainstream philosophers gave up on the Cartesian goal of infallible knowledge. The idea that we are quite possibly, even probably, mistaken in our most cherished beliefs, that they might well be just false, was mostly considered tantamount to capitulation to the skeptic. By the middle of the twentieth century, however, it was clear that natural science postulated a very odd world behind the phenomena, one rather remote from our everyday experience, one which renders many of our commonsense beliefs, as well as previous scientific theories, strictly speaking, false. Further, the increasingly rapid turnover of scientific theories suggested that, far from being established as certain, they are ever vulnerable to refutation, and typically are eventually refuted, to be replaced by some new theory. Taking the dismal view, the history of inquiry is a history of theories shown to be false, replaced by other theories awaiting their turn at the guillotine. (This is the “dismal induction”.)

Realism holds that the constitutive aim of inquiry is the truth of some matter. Optimism holds that the history of inquiry is one of progress with respect to its constitutive aim. But fallibilism holds that, typically, our theories are false or very likely to be false, and when shown to be false they are replaced by other false theories. To combine all three ideas, we must affirm that some false propositions better realize the goal of truth — are closer to the truth — than others. So the optimistic realist who has discarded infallibilism has a problem — the logical problem of truthlikeness.

Before exploring possible solutions to the logical problem a couple of common confusions should be cleared away. Truthlikeness should not be conflated with either epistemic probability or with vagueness.

One common mistake is to conflate truthlikeness with vagueness. Suppose vagueness is not an epistemic phenomenon, and that it can be explained by treating truth and falsehood as extreme points on a scale of distinct truth values. Even if there are vagueness-related “degrees of truth”, ranging from clearly true through to clearly false, they should not be confused with degrees of closeness to the truth. To see this, suppose Alan is exactly 179 cm tall. Then the proposition that Alan is exactly 178.5 cm tall should turn out to be clearly false on any good theory of vagueness. Nevertheless it is pretty close to the truth. That Alan is tall, on the other hand, is a vague claim, one that in the circumstances is neither clearly true nor clearly false. However, since it is closer to the clearly true end of the spectrum, it has a high (vagueness-related) degree of truth. Still, the claim that Alan is tall is not as close to the truth as the quite precise, but nevertheless clearly false, proposition that Alan is exactly 178.5 cm tall. So closeness to the truth and vagueness-related degrees of truth (if there are such) can pull in different directions.

Neither does truthlikeness — likeness to the whole truth of some matter — have much to do with high probability. The probability that the number of planets is greater than or equal to 0 is maximal but not terribly close to the whole truth. Suppose some non-tautological true propositions can be known for certain — call their conjunction the evidence. Then any truth that goes beyond the evidence will be less probable than the evidence. However truths that go beyond the evidence might well be closer to the whole truth than the evidence is. The true proposition which goes most beyond the evidence is the strongest possible truth — it is the truth, the whole truth and nothing but the truth. And that true proposition is clearly the one that is closest to the whole truth. So the truth with the least probability on the evidence is the proposition that is closest to the whole truth.

What, then, is the source of the widespread conflation of truthlikeness with probability? Probability — at least of the epistemic variety — measures the degree of seeming to be true, while truthlikeness measures the degree of being similar to the truth. Seeming and being similar might at first strike one as closely related, but of course they are very different. Seeming concerns the appearances whereas being similar concerns the objective facts, facts about similarity or likeness. Even more important, there is a difference between being true and being the truth. The truth, of course, has the property of being true, but not every proposition that is true is the truth in the sense of the aim of inquiry. The truth of a matter at which an inquiry aims is ideally the complete, true answer to its central query. Thus there are two dimensions along which probability (seeming to be true) and truthlikeness (being similar to the truth) differ radically.

While a multitude of apparently different solutions to the problem have been proposed, they can be classified into three main approaches, each with its own heuristic: the content approach, the consequence approach and the likeness approach.

1.2 The content approach

Karl Popper was the first philosopher to take the logical problem of truthlikeness seriously enough to make an assay on it. This is not surprising, since Popper was also the first prominent realist to embrace a very radical fallibilism about science while also trumpeting the epistemic superiority of the enterprise.

According to Popper, Hume had shown not only that we can't verify any interesting theory, we can't even render it more probable. Luckily, there is an asymmetry between verification and falsification. While no finite amount of data can verify or probabilify any interesting scientific theory, they can falsify the theory. According to Popper, it is the falsifiability of a theory which makes it scientific, the more falsifiable the better. In his early work, he implied that the only kind of progress an inquiry can make consists in falsification of theories. This is a little depressing, to say the least. What it lacks is the idea that a succession of falsehoods can constitute genuine cognitive progress. Perhaps this is why, for many years after first publishing these ideas in his 1934 Logik der Forschung, Popper received pretty short shrift from the philosophers. If all we can ever say with confidence is “Missed again!” and “A miss is as good as a mile!”, and the history of inquiry is a sequence of such misses, then epistemic pessimism pretty much follows. Popper eventually realized that this naive falsificationism is compatible with optimism provided we have an acceptable notion of verisimilitude (or truthlikeness). If some false hypotheses are closer to the truth than others, if verisimilitude admits of degrees, then the history of inquiry may well turn out to be one of progress towards the goal of truth. Moreover, it may be reasonable, on the basis of the evidence, to conjecture that our theories are indeed making such progress even though we know they are all false, or highly likely to be false.

Popper saw clearly that the concept of truthlikeness should not be confused with the concept of epistemic probability, and that it has often been so confused. (See Popper 1963 for a history of the confusion.) Popper's insight here was undoubtedly facilitated by his deep but largely unjustified antipathy to epistemic probability. He thought his starkly falsificationist account favored bold, contentful theories. Degree of informative content varies inversely with probability — the greater the content the less likely a theory is to be true. So if you are after theories which seem, on the evidence, to be true, then you will eschew those which make bold — that is, highly improbable — predictions. On this picture, the quest for theories with high probability is simply wrongheaded.

To see this distinction clearly, and to articulate it, was one of Popper's most significant contributions, not only to the debate about truthlikeness, but to philosophy of science and logic in general. As we will see, however, his deep antagonism to probability combined with his passionate love affair with boldness was both a blessing and a curse. The blessing: it led him to produce not only the first interesting and important account of truthlikeness, but to initiate a whole approach to the problem — the content approach (Zwart 2001). The curse: content alone is insufficient to characterize truthlikeness.

Popper made the first real assay on the logical problem of truthlikeness in his famous collection Conjectures and Refutations. Since he was a great admirer of Tarski's assay on the concept of truth, he strove to model his theory of truthlikeness on Tarski's theory. First, let a matter for investigation be circumscribed by a formalized language L adequate for discussing it. Tarski showed us how the actual world induces a partition of sentences of L into those that are true and those that are false. The set of all true sentences is thus a complete true account of the world, as far as that investigation goes. It is aptly called the Truth, T. T is the target of the investigation couched in L. It is the theory that we are seeking, and, if truthlikeness is to make sense, theories other than T, even false theories, come more or less close to capturing T.

T, the Truth, is a theory only in the technical Tarskian sense, not in the ordinary everyday sense of that term. It is a set of sentences closed under the consequence relation: a consequence of some sentences in the set is also a sentence in the set. T may not be finitely axiomatizable, or even axiomatizable at all. Where the language involves elementary arithmetic it follows (from Gödel's incompleteness theorem) that T won't be axiomatizable. However, it is a perfectly good set of sentences all the same. In general we will follow the Tarski-Popper usage here and call any set of sentences closed under consequence a theory, and we will assume that each proposition we deal with is identified with the theory it generates in this sense. (Note that when theories are classes of sentences, theory A logically entails theory B just in case B is a subset of A.)

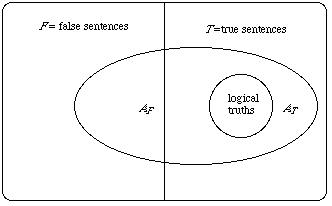

The complement of T, the set of false sentences F, is not a theory even in this technical sense. Since falsehoods always entail truths, F is not closed under the consequence relation. (This is part of the reason we have no complementary expression like the Falsth. The set of false sentences does not describe a possible alternative to the actual world.) But F too is a perfectly good set of sentences. Any theory A that can be formulated in L will thus divide its consequences between T and F. Popper called the intersection of A and T the truth content of A (AT), and the intersection of A and F the falsity content of A (AF). Any theory A is thus the union of its non-overlapping truth content and falsity content. Note that since every theory entails all logical truths, these will constitute a special set, at the center of T, which will be included in every theory, whether true or false.

Diagram 1: Truth and falsity contents of false theory A

A false theory will cover some of F, but because every false theory has true consequences, it will also overlap with some of T (Diagram 1).

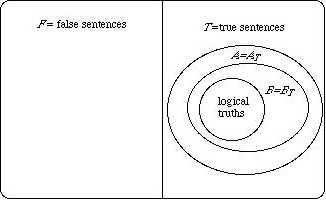

A true theory, however, will only cover T (Diagram 2):

Diagram 2: True theory A is identical to its own truth content

Amongst true theories, then, it seems that the more true sentences that are entailed, the closer we get to T, hence the more truthlike. Set theoretically that simply means that, where A and B are both true, A will be more truthlike than B just in case B is a proper subset of A (which for true theories means that BT is a proper subset of AT). Call this principle: the value of content for truths.

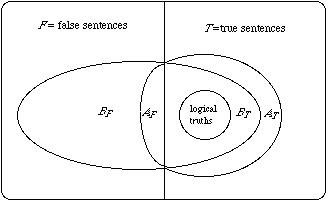

Diagram 3: True theory A has more truth content than true theory B

This essentially syntactic account of truthlikeness has some nice features. It induces a partial ordering of truths, with the whole Truth T at the top of the ordering: T is closer to the Truth than any other true theory. The set of logical truths is at the bottom: further from the Truth than any other true theory. In between these two extremes, true theories are ordered simply by logical strength: the more logical content, the closer to the Truth. Since probability varies inversely with logical strength, amongst truths the theory with the greatest truthlikeness (T) must have the smallest probability, and the theory with the largest probability (the logical truth) is the furthest from the Truth. Popper made a bold and simple generalization of this. Just as truth content (coverage of T) counts in favor of truthlikeness, falsity content (coverage of F) counts against. In general then, a theory A is closer to the truth if it has more truth content without engendering more falsity content, or has less falsity content without sacrificing truth content (diagram 4):

Diagram 4: False theory A closer to the Truth than false theory B

The generalization of the truth content comparison also has some nice features. It preserves the comparisons of true theories mentioned above. The truth content AT of a false theory A (itself a theory in the Tarskian sense) will clearly be closer to the truth than A (diagram 1). More generally, a true theory A will be closer to the truth than a false theory B provided A's truth content exceeds B's.
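The comparison just described can be checked mechanically in a toy setting. The following is a minimal sketch, not Popper's own formalism: it assumes a language with two atoms (so four possible worlds), identifies each proposition with the set of worlds in which it is true, and counts consequences up to logical equivalence, so that the consequences of A are the supersets of A. The names `popper_closer`, `truth_content` and `falsity_content`, and the choice of actual world, are illustrative assumptions.

```python
from itertools import combinations, product

# Assumption: a toy language with two atoms, hence four possible worlds.
WORLDS = list(product([True, False], repeat=2))
ACTUAL = (True, True)  # hypothetical choice of the actual world

# Every consistent proposition, represented as a non-empty set of worlds.
PROPS = [frozenset(c) for n in range(1, len(WORLDS) + 1)
         for c in combinations(WORLDS, n)]

def consequences(a):
    """Consequences of a, up to logical equivalence: all supersets of a."""
    return {c for c in PROPS if a <= c}

def truth_content(a):
    """The true consequences of a (those that hold in the actual world)."""
    return {c for c in consequences(a) if ACTUAL in c}

def falsity_content(a):
    """The false consequences of a."""
    return {c for c in consequences(a) if ACTUAL not in c}

def popper_closer(a, b):
    """a has at least b's truth content and at most b's falsity content,
    with at least one of the two inclusions being proper."""
    at, af = truth_content(a), falsity_content(a)
    bt, bf = truth_content(b), falsity_content(b)
    return bt <= at and af <= bf and (bt < at or af < bf)

whole_truth = frozenset([ACTUAL])
tautology = frozenset(WORLDS)
print(popper_closer(whole_truth, tautology))  # True

# The truth content of a false theory, taken as a theory in its own right,
# is closer to the truth than the false theory itself.
false_theory = frozenset([(False, False)])
print(popper_closer(false_theory | {ACTUAL}, false_theory))  # True
```

In this representation a stronger proposition is a smaller set of worlds, so among true theories `popper_closer(a, b)` holds exactly when `a` is a proper subset of `b`.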

Despite these nice features the account has a couple of disastrous consequences. Firstly, since a falsehood has some false consequences, and no truth has any, it follows that no falsehood can be as close to the truth as a logical truth, the weakest of all truths. A logical truth leaves the location of the truth wide open, so it is practically worthless as an approximation to the whole truth. So on Popper's account a falsehood is never more worthwhile than a worthless logical truth. (We could call this result the absolute worthlessness of falsehoods.)

Furthermore, it is impossible to add a true consequence to a false theory without thereby adding additional false consequences (or subtract a false consequence without subtracting true consequences). So the account entails that no false theory is closer to the truth than any other. We could call this result the relative worthlessness of all falsehoods. These worthlessness results were proved independently by Pavel Tichý and David Miller (Miller 1974, and Tichý 1974).

It is instructive to see why this latter result holds. Let us suppose that A and B are both false, and that A's truth content exceeds B's. Let a be a true sentence entailed by A but not by B. Let f be any falsehood entailed by A. Since A entails both a and f, the conjunction a&f is a falsehood entailed by A, and so part of A's falsity content. If a&f were also part of B's falsity content, B would entail both a and f. But then it would entail a, contrary to the assumption. Hence a&f is in A's falsity content and not in B's. So A's truth content cannot exceed B's without A's falsity content also exceeding B's. Suppose now that B's falsity content exceeds A's. Let g be some falsehood entailed by B but not by A, and let f, as before, be some falsehood entailed by A. The sentence f→g is a truth, and since it is entailed by g, is in B's truth content. If it were also in A's then both f and f→g would be consequences of A and hence so would g, contrary to the assumption. Thus A's truth content lacks a sentence, f→g, which is in B's. So B's falsity content cannot exceed A's without B's truth content also exceeding A's. The relationship depicted in diagram 4 simply cannot obtain.
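The two worthlessness results can be confirmed by brute force in a small finite setting. This is a sketch under stated assumptions rather than a proof: it assumes three atoms (eight worlds), treats propositions as sets of worlds, counts consequences up to logical equivalence, and leaves the inconsistent theory out. The helper names and the choice of actual world are hypothetical.

```python
from itertools import combinations, product

WORLDS = list(product([True, False], repeat=3))  # assumption: three atoms
ACTUAL = WORLDS[0]                               # hypothetical actual world

# All consistent propositions, as non-empty sets of worlds.
PROPS = [frozenset(c) for n in range(1, len(WORLDS) + 1)
         for c in combinations(WORLDS, n)]

def contents(a):
    """Return (truth content, falsity content) of a as sets of consequences."""
    cons = [c for c in PROPS if a <= c]
    return (frozenset(c for c in cons if ACTUAL in c),
            frozenset(c for c in cons if ACTUAL not in c))

CONTENTS = {a: contents(a) for a in PROPS}

def popper_closer(a, b):
    """Popper's comparison: no less truth content, no more falsity content,
    and a strict improvement on at least one side."""
    (at, af), (bt, bf) = CONTENTS[a], CONTENTS[b]
    return bt <= at and af <= bf and (bt < at or af < bf)

FALSEHOODS = [a for a in PROPS if ACTUAL not in a]
TAUTOLOGY = frozenset(WORLDS)

# Relative worthlessness: no falsehood is closer to the truth than another.
relative = [(a, b) for a in FALSEHOODS for b in FALSEHOODS if popper_closer(a, b)]
# Absolute worthlessness: no falsehood is closer than the logical truth.
absolute = [a for a in FALSEHOODS if popper_closer(a, TAUTOLOGY)]

print(len(relative), len(absolute))  # 0 0
```

The empty lists reflect the argument in the text: within this toy framework, any gain in truth content for a false theory brings a gain in falsity content with it.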

It is tempting at this point (and Popper was so tempted) to retreat to something like the comparison of truth contents alone. That is to say, A is at least as close to the truth as B if A entails all of B's truth content, and A is closer to the truth than B just in case A is at least as close as B, and B is not at least as close as A. Call this the Simple Truth Content account.

This Simple Truth Content account preserves Popper's ordering of true propositions. However, it also deems a false proposition the closer to the truth the stronger it is. (Call this principle: the value of content for falsehoods.) According to this principle, since the false proposition that there are seven planets, and all of them are made of green cheese is logically stronger than the false proposition that there are seven planets, the former is closer to the truth than the latter. So, once we know a theory is false we can be confident that tacking on any old arbitrary proposition, no matter how misleading it is, will lead us inexorably closer to the truth. Amongst false theories, brute logical strength becomes the sole criterion of a theory's likeness to truth. This is the brute strength objection.
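The brute strength objection can be illustrated with a small sketch. It assumes a toy space of worlds that fix the number of planets (6 to 10) and whether the planets are made of green cheese, and it relies on the fact that, when propositions are sets of worlds, the truth content of A is generated by its strongest true consequence, namely A together with the actual world. The function names and the world space are illustrative assumptions.

```python
from itertools import product

# Assumption: a world fixes a planet count (6..10) and a green-cheese flag.
WORLDS = set(product(range(6, 11), [True, False]))
ACTUAL = (8, False)  # eight planets, none made of green cheese

def prop(pred):
    """The proposition (set of worlds) picked out by a predicate."""
    return frozenset(w for w in WORLDS if pred(*w))

def truth_content(a):
    """Strongest true consequence of a: a's worlds plus the actual world."""
    return a | {ACTUAL}

def at_least_as_close(a, b):
    """Simple Truth Content account: a entails all of b's truth content."""
    return truth_content(a) <= truth_content(b)

def closer(a, b):
    return at_least_as_close(a, b) and not at_least_as_close(b, a)

seven = prop(lambda n, cheese: n == 7)
seven_cheese = prop(lambda n, cheese: n == 7 and cheese)

# Tacking the green-cheese claim onto a falsehood counts as an improvement.
print(closer(seven_cheese, seven))  # True
print(closer(seven, seven_cheese))  # False
```

On this account the verdict depends only on the inclusion between the two sets of worlds, which is why any strengthening of a falsehood registers as progress.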

Popper also dabbled in some measures of verisimilitude, based on measures of content, which in turn he derived from measures of logical probability (somewhat ironically, given his dim view of logical probability). Unfortunately his measures suffered from defects very similar to his purely qualitative proposals.

After the failure of Popper's attempts to capture the notion of truthlikeness, a number of variations on the content approach have been explored. Some stay within Popper's essentially syntactic paradigm, comparing classes of true and false sentences (e.g. Newton-Smith 1981). Others make the switch to a more semantic paradigm, searching for a plausible theory of distance between the semantic content of sentences, construing these semantic contents as classes of possibilities. One variant of this approach takes the class of models of a language as a surrogate for possible states of affairs (Miller 1978a). The other utilizes a semantics of incomplete possible states like those favored by structuralist accounts of scientific theories (Kuipers 1987b). The idea which these share is that the distance between two propositions is measured by the symmetric difference of the two sets of possibilities. Roughly speaking, the larger the symmetric difference, the greater the distance between the two propositions. Symmetric differences might be compared qualitatively (by means of set-theoretic inclusion) or quantitatively (using some kind of probability measure). Both can be shown to have the general features of a measure of distance.
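A minimal sketch of the symmetric difference idea follows. It assumes that possibilities are candidate planet counts from 0 to 12, that the truth is the single possibility 8, and that the quantitative comparison uses a uniform measure over possibilities. None of these choices comes from the cited authors; they serve only to make the two styles of comparison concrete.

```python
from fractions import Fraction

# Assumption: possibilities are candidate planet counts 0..12.
WORLDS = frozenset(range(0, 13))
TRUTH = frozenset({8})  # the truth taken as a single complete possibility

def sym_diff(a, b):
    """Possibilities admitted by exactly one of the two propositions."""
    return a ^ b

def closer_qualitative(a, b, target=TRUTH):
    """a is closer than b if its symmetric difference with the target is a
    proper subset of b's (a partial ordering: many pairs are incomparable)."""
    return sym_diff(a, target) < sym_diff(b, target)

def distance(a, target=TRUTH):
    """Quantitative version, assuming a uniform measure on WORLDS."""
    return Fraction(len(sym_diff(a, target)), len(WORLDS))

between_7_and_9 = frozenset({7, 8, 9})  # true
at_least_0 = WORLDS                     # true, but very weak
nine = frozenset({9})                   # false
nine_or_ten = frozenset({9, 10})        # false and weaker

print(closer_qualitative(between_7_and_9, at_least_0))  # True
print(closer_qualitative(nine, nine_or_ten))            # True
print(distance(nine), distance(nine_or_ten))            # 2/13 3/13
```

With a single possibility as the target, both comparisons reward logical strength among truths and among falsehoods alike, as the second and third lines of output show.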

If the truth is taken to be given by a complete possible world (or perhaps represented by a unique model) then we end up with results rather close to the truncated version of Popper's account, comparing by truth contents alone (Oddie 1978). In particular, amongst both truths and falsehoods, one proposition is closer to the truth than another the stronger it is. However, if we take the structuralist approach then we will take the relevant possibilities to be “small” states of affairs — small chunks of the world, rather than an entire world — and then the possibility of more fine-grained distinctions between theories opens up. A rather promising exploration of this idea can be found in Volpe 1995.

The fundamental problem with the original content approach lies not in the way it has been articulated, but rather in the basic underlying assumption: that truthlikeness is a function of just two variables — content and truth value. This assumption has a number of rather problematic consequences.

Two things follow if truthlikeness is a function just of the logical content of a proposition and of its truth value. Firstly, any given proposition A can have only two degrees of verisimilitude: one in case it is false and the other in case it is true. This is obviously wrong. A theory can be false in very many different ways. The proposition that there are eight planets is false whether there are nine planets or a thousand planets, but its degree of truthlikeness is much higher in the first case than in the latter. As we will see below, the degree of truthlikeness of a true theory may also vary according to where the truth lies. Secondly, if we combine the value of content for truths and the value of content for falsehoods, then if we fix truth value, verisimilitude will vary only according to amount of content. So, for example, two equally strong false theories will have to have the same degree of verisimilitude. That's pretty far-fetched. That there are ten planets and that there are ten billion planets are (roughly) equally strong, and both are false in fact, but the latter seems much further from the truth than the former.

Finally, how might strength determine verisimilitude amongst false theories? There seem to be just two plausible candidates: that verisimilitude increases with increasing strength (the principle of the value of content for falsehoods) or that it decreases with increasing strength (the principle of the disvalue of content for falsehoods). Both proposals are at odds with attractive judgements and principles. One does not necessarily make a step toward the truth by reducing the content of a false proposition. The proposition that the moon is made of green cheese is logically stronger than the proposition that either the moon is made of green cheese or it is made of Dutch gouda, but the latter hardly seems a step towards the truth. Nor does one necessarily make a step toward the truth by increasing the content of a false theory. The false proposition that all heavenly bodies are made of green cheese is logically stronger than the false proposition that all heavenly bodies orbiting the earth are made of green cheese, but it doesn't seem to be an improvement.

1.3 The consequence approach

Popper crafted his initial proposal in terms of the true and false consequences of a theory. Any sentence at all that follows from a theory is counted as a consequence that, if true, contributes to its overall truthlikeness, and if false, detracts from it. But it has struck many both that this involves an enormous amount of double counting, and that it is the indiscriminate counting of arbitrary consequences that lies behind the Tichý-Miller trivialization result.

Consider a very simple framework with three primitive sentences: h (for the state hot), r (for rainy) and w (for windy). This framework generates a very small space of eight possibilities. The eight maximal conjunctions of the three primitive sentences express those possibilities.

Suppose that in fact it is hot, rainy and windy (expressed by the maximal conjunction h&r&w). Then the claim that it is cold, dry and still (expressed by the sentence ~h&~r&~w) is further from the truth than the claim that it is cold, rainy and windy (expressed by the sentence ~h&r&w). And the claim that it is cold, dry and windy (expressed by the sentence ~h&~r&w) is somewhere between the two. These kinds of judgements, which seem both innocent and intuitively correct, Popper's theory cannot accommodate. And if they are to be accommodated we cannot treat all true and false consequences alike. For the three false claims mentioned here have exactly the same number of true and false consequences.
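The claim that the three false descriptions have exactly the same number of true and false consequences can be verified by enumeration. The sketch below assumes that consequences are counted up to logical equivalence, so each consequence corresponds to a set of the eight possibilities. The function name `consequence_counts` is a hypothetical helper.

```python
from itertools import combinations, product

# Worlds are triples (hot, rainy, windy); the actual world is h&r&w.
WORLDS = list(product([True, False], repeat=3))
ACTUAL = (True, True, True)

# Assumption: one proposition per logical equivalence class of sentences,
# i.e. per non-empty set of worlds.
PROPS = [frozenset(c) for n in range(1, len(WORLDS) + 1)
         for c in combinations(WORLDS, n)]

def consequence_counts(world):
    """Count the true and false consequences of the maximal conjunction
    that describes the given world."""
    claim = frozenset([world])
    cons = [c for c in PROPS if claim <= c]
    n_true = sum(1 for c in cons if ACTUAL in c)
    return n_true, len(cons) - n_true

cold_rainy_windy = (False, True, True)   # ~h&r&w
cold_dry_windy = (False, False, True)    # ~h&~r&w
cold_dry_still = (False, False, False)   # ~h&~r&~w

for w in (cold_rainy_windy, cold_dry_windy, cold_dry_still):
    print(consequence_counts(w))  # (64, 64) each time
```

Each false maximal conjunction has 128 consequences in this framework, half of which contain the actual world, so raw counts cannot separate the three claims.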

Clearly, if we are going to measure closeness to truth by counting true and false consequences, some true consequences have to count more than others if we are to discriminate amongst them. For example, h and r are both true, and ~h and ~r are false. The former should surely count in favor of a claim, and the latter against. But ~h→~r is true and h→~r is false. After we have counted the truth h in favor of a claim's truthlikeness and the falsehood ~r against it, should we also count the true consequence ~h→~r in favor, and the falsehood h→~r against? Surely this is both unnecessary and misleading. And it is precisely counting sentences like these that renders Popper's account, in terms of all true and false consequences, susceptible to the Tichý-Miller argument.

According to the consequence approach, Popper was right in thinking that truthlikeness depends on the relative sizes of classes of true and false consequences, but erred in thinking that all consequences of a theory count the same. Some consequences are relevant, some aren't. Let R be some criterion of relevance of consequences; let AR be the set of relevant consequences of A. Whatever the criterion R is, it has to satisfy the constraint that A be recoverable from (and hence equivalent to) AR. Popper's account is the limiting one: all consequences are relevant. (Popper's relevance criterion is the empty one, P, according to which AP is just A itself.) The relevant truth content of A (abbreviated ART) can be defined as AR∩T (or A∩TR), and similarly the relevant falsity content of A can be defined as AR∩F. Since AR = (AR∩T)∪(AR∩F) it follows that the union of true and false relevant consequences of A is equivalent to A. And where A is true AR∩F is empty, so that A is equivalent to AR∩T alone.

With this restriction to relevant consequences we can basically apply Popper's definitions: one theory is more truthlike than another if its relevant truth content is larger and its relevant falsity content no larger; or its relevant falsity content is smaller, and its relevant truth content is no smaller.

Although this basic idea was first explored by Mortensen in his 1983, explicitly using a notion of relevant entailment, by the end of his paper Mortensen had abandoned the basic idea as unworkable, at least within the framework of standard relevant logics. Others, however, have used the relevant consequence approach to avoid the trivialization results that plague the content approach, and capture quite a few of the basic intuitive judgments of truthlikeness. Subsequent proposals within the broad program have been offered by Burger and Heidema 1994, Schurz and Weingartner 1987 and 2010, and Gemes 2007. (Gerla also uses the notion of the relevance of a “test” or factor, but his account is best located more squarely within the likeness approach.)

One possible relevance criterion that the h-r-w framework might suggest is atomicity. (See Cevolani, Festa and Kuipers 2013 for an approach along these lines.) But even if we could avoid the problem of saying what it is for a sentence to be atomic, since many distinct propositions imply the same atomic sentences, this criterion would not satisfy the requirement that A be equivalent to AR. For example, (h∨r) and (~h∨~r), like tautologies, imply no atomic sentences at all.

Burger and Heidema 1994 compare theories by positive and negative sentences. A positive sentence is one that can be constructed out of &, ∨ and any true basic sentence (where a basic sentence is either an atomic sentence or its negation). A negative sentence is one that can be constructed out of &, ∨ and any false basic sentence. Call a sentence pure if it is either positive or negative. If we take the relevance criterion to be purity, and combine that with the relevant consequence schema above, we have Burger and Heidema's proposal, which yields a reasonable set of intuitive judgments like those above. Unfortunately purity (like atomicity) does not quite satisfy the constraint that A be equivalent to the class of its relevant consequences. For example, if h and r are both true then (~h∨r) and (h∨~r) both have the same pure consequences (namely, none).

Schurz and Weingartner 2010 use the following notion of relevance S: being equivalent to a disjunction of atomic propositions or their negations. With this criterion they can accommodate a range of intuitive judgments in the simple weather framework that Popper's account cannot.

For example, where >S is the relation of greater S-truthlikeness we capture the following relations among false claims, which, on Popper's account, are mostly incommensurable:

(h&~r) >S (~r) >S (~h&~r).

and

(h∨r) >S (~r) >S (~h∨~r) >S (~h&~r).

The relevant consequence approach faces three major hurdles.

The first is an extension problem: the approach does produce some intuitively acceptable results in a finite propositional framework, but it needs to be extended to more realistic frameworks — for example, first-order and higher-order frameworks. Gemes's recent proposal in his 2007 is promising in this regard. More research is required to demonstrate its adequacy.

The second is that, like Popper's original proposal, it judges no false proposition to be closer to the truth than any truth, including logical truths. Schurz and Weingartner have answered this objection by extending their qualitative account to a quantitative account, by assigning weights to relevant consequences and summing. The problem with this is that it assumes finite consequence classes.

The third involves the language-dependence of any adequate relevance criterion. This problem will be outlined and discussed below in connection with the likeness approach (§1.4.4).

1.4 The Likeness Approach

In the wake of the collapse of Popper's articulation of the content approach two philosophers, working quite independently, suggested a radically different approach: one which takes the likeness in truthlikeness seriously (Tichý 1974, Hilpinen 1976). This shift from content to likeness was also marked by an immediate shift from Popper's essentially syntactic approach (something it shares with the consequence program) to a semantic approach, one which traffics in the contents of sentences.

Traditionally the semantic contents of sentences have been taken to be non-linguistic, or rather non-syntactic, items — propositions. What propositions are is, of course, highly contested, but most agree that a proposition carves the class of possibilities into two sub-classes — those in which the proposition is true and those in which it is false. Call the class of worlds in which the proposition is true its range. Some have proposed that propositions be identified with their ranges (for example, David Lewis, in his 1986). This identification is implausible since, for example, the informative content of 7+5=12 seems distinct from the informative content of 12=12, which in turn seems distinct from the informative content of Gödel's first incompleteness theorem – and yet all three have the same range. They are all true in all possible worlds. Clearly if semantic content is supposed to be sensitive to informative content, classes of possible worlds will not be discriminating enough. We need something more fine-grained for a full theory of semantic content.

Despite this, the range of a proposition is certainly an important aspect of informative content, and it is not immediately obvious why truthlikeness should be sensitive to differences in the way a proposition picks out its range. (Perhaps there are cases of logical falsehoods some of which seem further from the truth than others. For example 7+5=113 might be considered further from the truth than 7+5=13 though both have the same range, namely the empty set of worlds. See Sorenson 2007.) But as a first approximation to the concept, we will assume that it is not hyperintensional and that logically equivalent propositions have the same degree of truthlikeness. The proposition that the number of planets is eight, for example, should have the same degree of truthlikeness as the proposition that the square of the number of the planets is sixty-four.

There is also not a little controversy over the nature of possible worlds. One view — perhaps Leibniz's and more recently David Lewis's — is that worlds are maximal collections of possible things. Another — perhaps the early Wittgenstein's — is that possible worlds are complete possible ways for things to be. On this latter state-conception, a world is a complete distribution of properties, relations and magnitudes over the appropriate kinds of entities. Since invoking “all” properties, relations and so on will certainly land us in paradox, these distributions, or possibilities, are going to have to be relativized to some circumscribed array of properties and relations. Call the complete collection of possibilities, given some array of features, the logical space, and call the array of properties and relations which underlie that logical space, the framework of the space.

Familiar logical relations and operations correspond to well-understood set-theoretic relations and operations on ranges. The range of the conjunction of two propositions is the intersection of the ranges of the two conjuncts. Entailment corresponds to the subset relation on ranges. The actual world is a single point in logical space, a complete specification of every matter of fact (with respect to the framework of features), and a proposition is true if its range contains the actual world, false otherwise. The whole Truth is a true proposition that is also complete: it entails all true propositions. The range of the Truth is none other than the singleton of the actual world. That singleton is the target, the bullseye, the thing at which the most comprehensive inquiry is aiming.
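These correspondences can be made concrete with ordinary set operations. The sketch below assumes the three-factor weather framework introduced earlier, with hot, rainy and windy as the actual state; the helper names `range_of`, `entails` and `is_true` are hypothetical.

```python
from itertools import product

# Logical space of the weather framework: worlds are (h, r, w) triples.
WORLDS = set(product([True, False], repeat=3))
ACTUAL = (True, True, True)


def range_of(pred):
    """The range of a proposition: the worlds in which pred holds."""
    return frozenset(w for w in WORLDS if pred(*w))


def entails(a, b):
    """Entailment is the subset relation on ranges."""
    return a <= b


def is_true(a):
    """A proposition is true if its range contains the actual world."""
    return ACTUAL in a


h = range_of(lambda h, r, w: h)
r = range_of(lambda h, r, w: r)
h_and_r = range_of(lambda h, r, w: h and r)
the_truth = frozenset({ACTUAL})  # singleton of the actual world

# Conjunction corresponds to intersection of ranges.
conjunction_is_intersection = (h_and_r == h & r)

# The Truth entails every true proposition in this sample.
truth_is_complete = all(entails(the_truth, p)
                        for p in (h, r, h_and_r) if is_true(p))
```

Identifying propositions with ranges in this way builds in the assumption, adopted above, that logically equivalent propositions are not distinguished.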

Without additional structure on the logical space we have just three factors for a theorist of truthlikeness to work with — the size of a proposition (content factor), whether it contains the actual world (truth factor), and which propositions it implies (consequence factor). The likeness approach requires some additional structure to the logical space. For example, worlds might be more or less like other worlds. There might be a betweenness relation amongst worlds, or even a fully-fledged distance metric. If that's the case we can start to see how one proposition might be closer to the Truth — the proposition whose range singles out the actual world — than another. The core of the likeness approach is that the truthlikeness of a proposition supervenes on the likeness between worlds, or the distance between worlds.

The likeness theorist has two initial tasks: firstly, making it plausible that there is an appropriate likeness or distance function on worlds; and secondly, extending likeness between individual worlds to likeness of propositions (i.e. sets of worlds) to the actual world.

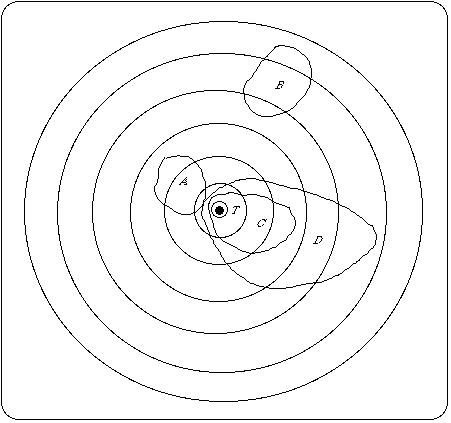

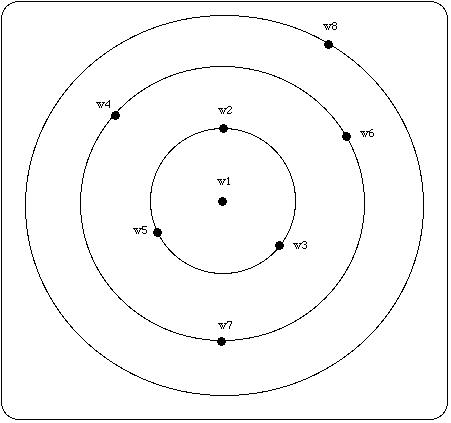

Diagram 5: Verisimilitude by similarity circles

Suppose, for example, that worlds are arranged in similarity spheres nested around the actual world, familiar from the Stalnaker-Lewis approach to counterfactuals. Consider Diagram 5.

The bullseye is the actual world and the small sphere which includes it is T, the Truth. The nested spheres represent likeness to the actual world. A world is less like the actual world the larger the first sphere of which it is a member. Propositions A and B are false, C and D are true. A carves out a class of worlds which are rather close to the actual world — all within spheres two to four — whereas B carves out a class rather far from the actual world — all within spheres five to seven. Intuitively A is closer to the bullseye than is B.

The largest sphere which does not overlap at all with a proposition is plausibly a measure of how close the proposition is to being true. Call that the truth factor. A proposition X is closer to being true than Y if the truth factor of X is included in the truth factor of Y. The truth factor of A, for example, is the smallest non-empty sphere, T itself, whereas the truth factor of B is the fourth sphere, of which T is a proper subset.

If a proposition includes the bullseye then of course it is true simpliciter, and it has the maximal truth factor (the empty set). So all true propositions are equally close to being true. But truthlikeness is not just a matter of being close to being true. The tautology, D, C and the Truth itself are equally true, but in that order they increase in their closeness to the whole truth.

Taking a leaf out of Popper's book, Hilpinen argued that closeness to the whole truth is in part a matter of degree of informativeness of a proposition. In the case of the true propositions, this correlates roughly with the smallest sphere which totally includes the proposition. The further out the outermost sphere, the less informative the proposition is, because the larger the area of the logical space which it covers. So, in a way which echoes Popper's account, we could take truthlikeness to be a combination of a truth factor (given by the likeness of that world in the range of a proposition that is closest to the actual world) and a content factor (given by the likeness of that world in the range of a proposition that is furthest from the actual world):

A is closer to the truth than B if and only if A does as well as B on both truth factor and content factor, and better on at least one of those.

Applying Hilpinen's definition we capture two more particular judgements, in addition to those already mentioned, that seem intuitively acceptable: that C is closer to the truth than A, and that D is closer than B. (Note, however, that we have here a partial ordering: A and D, for example, are not ranked.) We can derive from this various apparently desirable features of the relation closer to the truth: for example, that the relation is transitive, asymmetric and irreflexive; that the Truth is closer to the Truth than any other theory; that the tautology is at least as far from the Truth as any other truth; that one cannot make a true theory worse by strengthening it by a truth (a weak version of the value of content for truths); that a falsehood is not necessarily improved by adding another falsehood, or even by adding another truth (a repudiation of the value of content for falsehoods).

But there are also some worrying features here. While it avoids the relative worthlessness of falsehoods, Hilpinen's account, just like Popper's, entails the absolute worthlessness of all falsehoods: no falsehood is closer to the truth than any truth. So, for example, Newton's theory is deemed to be no more truthlike, no closer to the whole truth, than the tautology.
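Hilpinen's comparison can be sketched as a dominance test on two numbers per proposition. In the sketch below each proposition is summarized by the index of the innermost and of the outermost sphere it reaches, with sphere 1 being T itself. The indices for A and B follow the description of Diagram 5 given above; those for C, D and the tautology are assumptions chosen only to be consistent with the judgements reported in the text.

```python
# (innermost sphere reached, outermost sphere reached); smaller is better.
# A and B follow the text; the other index pairs are assumed for illustration.
PROPS = {
    "Truth": (1, 1),
    "C": (1, 3),
    "D": (1, 6),
    "tautology": (1, 7),
    "A": (2, 4),
    "B": (5, 7),
}
TRUTHS = ["Truth", "C", "D", "tautology"]
FALSEHOODS = ["A", "B"]


def closer(x, y):
    """x does at least as well as y on both factors and better on one."""
    (x_in, x_out), (y_in, y_out) = PROPS[x], PROPS[y]
    return x_in <= y_in and x_out <= y_out and PROPS[x] != PROPS[y]


# The ordering is partial: A and D are not ranked either way.
a_d_unranked = not closer("A", "D") and not closer("D", "A")

# Absolute worthlessness of falsehoods: no falsehood beats any truth.
no_falsehood_beats_a_truth = not any(
    closer(f, t) for f in FALSEHOODS for t in TRUTHS)
```

Because every true proposition reaches sphere 1 and no false one does, a falsehood can never dominate a truth on this definition, whatever outer indices are chosen.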

Characterizing Hilpinen's account as a combination of a truth factor and an information factor seems to mask its quite radical departure from Popper's account. The incorporation of similarity spheres signals a fundamental break with the pure content approach, and opens up a range of possible new accounts: what such accounts have in common is that the truthlikeness of a proposition is a non-trivial function of the likeness to the actual world of worlds in the range of the proposition.

There are three main problems for any concrete proposal within the likeness approach. The first concerns an account of likeness between states of affairs – in what does this consist and how can it be analyzed or defined? The second concerns the dependence of the truthlikeness of a proposition on the likeness of worlds in its range to the actual world: what is the correct function? (This can be called “the extension problem”.) And finally, there is the famous problem of “translation variance” or “framework dependence” of judgements of likeness and of truthlikeness. This last problem will be taken up in §1.4.4.

1.4.1 Likeness of worlds in a simple propositional framework

One objection to Hilpinen's proposal (like Lewis's proposal for counterfactuals) is that it assumes the similarity relation on worlds as a primitive, there for the taking. At the end of his 1974 paper Tichý not only suggested the use of similarity rankings on worlds, but also provided a ranking in propositional frameworks and indicated how to generalize this to more complex frameworks.

Examples and counterexamples in Tichý 1974 are exceedingly simple, utilizing the little propositional framework introduced above, with three primitives — h (for the state hot), r (for rainy) and w (for windy).

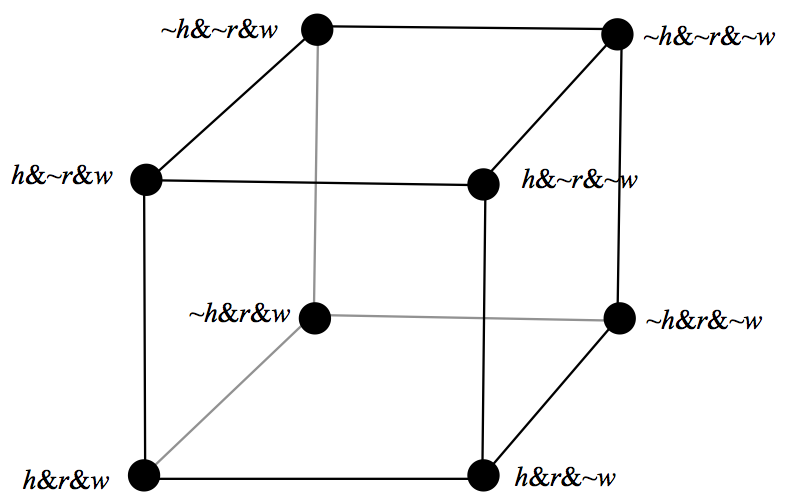

Corresponding to the eight members of the logical space generated by distributions of truth values through the three basic conditions, there are eight maximal conjunctions (or constituents):

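| World | Constituent | World | Constituent |
|---|---|---|---|
| w1 | h&r&w | w5 | ~h&r&w |
| w2 | h&r&~w | w6 | ~h&r&~w |
| w3 | h&~r&w | w7 | ~h&~r&w |
| w4 | h&~r&~w | w8 | ~h&~r&~w |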

Worlds differ in the distributions of these traits, and a natural, albeit simple, suggestion is to measure the likeness between two worlds by the number of agreements on traits. This is tantamount to taking distance to be measured by the size of the symmetric difference of generating states (the so-called city-block measure). As is well known, this will generate a genuine metric, in particular satisfying the triangle inequality. If w1 is the actual world this immediately induces a system of nested spheres, but one in which the spheres come with numbers attached:

Diagram 6: Similarity circles for the weather space

Those worlds orbiting on the sphere n are of distance n from the actual world.
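The distance just described is easy to compute directly. The following minimal sketch counts disagreements on the three traits, groups the eight worlds by distance from w1, and checks the triangle inequality by exhaustion; the function and variable names are illustrative.

```python
from itertools import product

# Worlds are (h, r, w) triples; w1 = (True, True, True) is taken as actual.
WORLDS = list(product([True, False], repeat=3))
ACTUAL = (True, True, True)


def distance(u, v):
    """City-block distance: the number of traits on which u and v differ."""
    return sum(a != b for a, b in zip(u, v))


# Worlds grouped by their distance from the actual world.
spheres = {}
for w in WORLDS:
    spheres.setdefault(distance(w, ACTUAL), []).append(w)

# Exhaustive check of the triangle inequality over all triples of worlds.
triangle_ok = all(
    distance(u, w) <= distance(u, v) + distance(v, w)
    for u in WORLDS for v in WORLDS for w in WORLDS)

for n in sorted(spheres):
    print(n, len(spheres[n]))
```

Counting disagreements rather than agreements gives a distance instead of a likeness score; with three traits the two always sum to three.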

In fact the structure of the space is better represented not by similarity circles, but rather by a three-dimensional cube:

Diagram 7: The three-dimensional weather space

This way of representing the space makes a clearer connection between distances between worlds and the role of the atomic propositions in generating those distances through the city-block metric. It also eliminates inaccuracies in the relations between the worlds that are not at the center that the similarity circle diagram suggests.

1.4.2 The likeness of a proposition to the truth

Now that we have numerical distances between worlds, numerical measures of propositional likeness to, and distance from, the truth can be defined as some function of the distances, from the actual world, of worlds in the range of a proposition. But which function is the right one? This is the extension problem.

Suppose, once more, that h&r&w is the whole truth about the matter of the weather. Following Hilpinen's lead, we might consider the overall distance of a proposition from the truth to be some function of the distances from actuality of two extreme worlds. Let truth(A) be the truth value of A in the actual world. Let min(A) be the distance from actuality of that world in A closest to the actual world, and max(A) be the distance from actuality of that world in A furthest from the actual world.

Table 1: The min and max functions.

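| A | truth(A) | min(A) | max(A) |
|---|---|---|---|
| h&r&w | true | 0 | 0 |
| h&r | true | 0 | 1 |
| h&r&~w | false | 1 | 1 |
| h | true | 0 | 2 |
| h&~r | false | 1 | 2 |
| ~h | false | 1 | 3 |
| ~h&~r&w | false | 2 | 2 |
| ~h&~r | false | 2 | 3 |
| ~h&~r&~w | false | 3 | 3 |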

The simplest proposal (made first in Niiniluoto 1977) would be to take the average of these two quantities (call this measure min-max-average). This would remedy a rather glaring shortcoming which Hilpinen's qualitative proposal shares with Popper's original proposal, namely that no falsehood is closer to the truth than any truth (even the worthless tautology). This numerical equivalent of Hilpinen's proposal renders all propositions comparable for truthlikeness, and some falsehoods it deems more truthlike than some truths.
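The min and max functions, and their average, can be computed mechanically from the city-block distances. The sketch below assumes h&r&w as the actual world and encodes each proposition as a Python predicate over (h, r, w); the dictionary `PROPS` and the function `row` are illustrative names.

```python
from itertools import product

WORLDS = list(product([True, False], repeat=3))  # (h, r, w)
ACTUAL = (True, True, True)


def distance(u, v):
    """City-block distance between two worlds."""
    return sum(a != b for a, b in zip(u, v))


PROPS = {
    "h&r&w": lambda h, r, w: h and r and w,
    "h&r": lambda h, r, w: h and r,
    "h&r&~w": lambda h, r, w: h and r and not w,
    "h": lambda h, r, w: h,
    "h&~r": lambda h, r, w: h and not r,
    "~h": lambda h, r, w: not h,
    "~h&~r&w": lambda h, r, w: not h and not r and w,
    "~h&~r": lambda h, r, w: not h and not r,
    "~h&~r&~w": lambda h, r, w: not h and not r and not w,
    "tautology": lambda h, r, w: True,
}


def row(name):
    """(truth value, min, max, min-max-average) for the named proposition."""
    pred = PROPS[name]
    dists = [distance(w, ACTUAL) for w in WORLDS if pred(*w)]
    lo, hi = min(dists), max(dists)
    return pred(*ACTUAL), lo, hi, (lo + hi) / 2


table = {name: row(name) for name in PROPS}
for name, values in table.items():
    print(name, *values)
```

The tautology is included to illustrate the closing remark: its min-max-average exceeds that of the false h&r&~w, so the falsehood comes out closer to the truth.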

But now that we have distances between all worlds, why take only the extreme worlds in a proposition into account? Why shouldn't every world in a proposition potentially count towards its overall distance from the actual world?

A simple measure which does count all worlds is average distance from the actual world. Average delivers all of the particular judgements we used above to motivate Hilpinen's proposal in the first place, and in conjunction with the simple metric on worlds it delivers the following ordering of propositions in our simple framework:

Table 2: the average function.

| A | truth(A) | average(A) |
|---|---|---|
| h&r&w | true | 0 |
| h&r | true | 0.5 |
| h&r&~w | false | 1.0 |
| h | true | 1.0 |
| h&~r | false | 1.5 |
| ~h | false | 2.0 |
| ~h&~r&w | false | 2.0 |
| ~h&~r | false | 2.5 |
| ~h&~r&~w | false | 3.0 |

This ordering looks quite promising. Propositions are closer to the truth the more they get the basic weather traits right, further away the more mistakes they make. A false proposition may be made either worse or better by strengthening (~w is the same distance from the Truth as ~h; h&r&~w is better than ~w while ~h&~r&~w is worse). A false proposition (like h&r&~w) can be closer to the truth than some true propositions (like the tautology h∨~h, whose average distance is 1.5).

These judgments may be sufficient to show that average is superior to min-max-average, at least on this group of propositions, but they are clearly not sufficient to show that averaging is the right procedure. What we need are some straightforward and compelling general desiderata which jointly yield a single correct function. In the absence of such a proof, we can only resort to case by case comparisons.

Table 3: average violates the value of content for truths.

| A | truth(A) | average(A) |
|---|---|---|
| h ∨ ~r ∨ w | true | 1.4 |
| h ∨ ~r | true | 1.5 |
| h ∨ ~h | true | 1.5 |

Furthermore average has by no means found universal favor on the score of particular judgments either. Notably, there are pairs of true propositions such that the average measure deems the stronger of the two to be the further from the truth. According to the average measure, the tautology, for example, is not the true proposition furthest from the truth. Averaging thus violates the Popperian principle of the value of content for truths (Popper 1976).
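The violation can be reproduced by computing average distance for the three truths of Table 3. This is a minimal sketch under the same assumptions as before (city-block distance, h&r&w actual); the names are illustrative.

```python
from itertools import product

WORLDS = list(product([True, False], repeat=3))  # (h, r, w)
ACTUAL = (True, True, True)


def distance(u, v):
    """City-block distance between two worlds."""
    return sum(a != b for a, b in zip(u, v))


def average(pred):
    """Mean distance from the actual world of the worlds in pred's range."""
    dists = [distance(w, ACTUAL) for w in WORLDS if pred(*w)]
    return sum(dists) / len(dists)


avg = {
    "h∨~r∨w": average(lambda h, r, w: h or not r or w),
    "h∨~r": average(lambda h, r, w: h or not r),
    "h∨~h": average(lambda h, r, w: True),
    "h&r&~w": average(lambda h, r, w: h and r and not w),
}

# h∨~r entails h∨~r∨w, so it is the stronger truth, yet its average
# distance is the larger of the two.
stronger_truth_is_further = avg["h∨~r"] > avg["h∨~r∨w"]
```

The same computation shows that the tautology ties with h∨~r rather than coming last among truths, which is the second point made above.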

Table 4: the sum function.

| A | truth(A) | sum(A) |
|---|---|---|
| h&r&w | true | 0 |
| h&r | true | 1 |
| h&r&~w | false | 1 |
| h | true | 4 |
| h&~r | false | 3 |
| ~h | false | 8 |
| ~h&~r&w | false | 2 |
| ~h&~r | false | 5 |
| ~h&~r&~w | false | 3 |

Consider the sum function — the sum of the distances of worlds in the range of a proposition from the actual world.

The sum function is an interesting measure in its own right, though no one has proposed it as a stand-alone account of closeness to truth. Although sum, like average, is sensitive to the distances of all worlds in a proposition from the actual world, it is not plausible as a measure of distance from the truth. What sum does measure is a special kind of logical weakness. In general the weaker a proposition is, the larger its sum value. But adding worlds far from the actual world makes the sum value larger than adding worlds closer in. This guarantees, for example, that of two truths the sum of the logically weaker is always greater than the sum of the stronger. Thus sum might play a role in capturing the value of content for truths. But it also delivers the implausible value of content for falsehoods. If you think that there is anything to the likeness program it is hardly plausible that the falsehood ~h&~r&~w is closer to the truth than its consequence ~h. Niiniluoto argues that sum is a good likeness-based candidate for measuring Hilpinen's “information factor”. It is obviously much more sensitive than is max to the proposition's informativeness about the location of the truth.
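Both properties of sum mentioned here can be verified by enumeration in the weather space. The sketch below works with ranges (sets of worlds) directly and checks every pair of true propositions; `total` is a hypothetical name for the sum function, chosen to avoid shadowing Python's built-in `sum`.

```python
from itertools import combinations, product

WORLDS = list(product([True, False], repeat=3))  # (h, r, w)
ACTUAL = (True, True, True)


def distance(u, v):
    """City-block distance between two worlds."""
    return sum(a != b for a, b in zip(u, v))


def total(rng):
    """Sum of the distances from the actual world of the worlds in rng."""
    return sum(distance(w, ACTUAL) for w in rng)


def range_of(pred):
    return frozenset(w for w in WORLDS if pred(*w))


h = range_of(lambda h, r, w: h)
not_h = range_of(lambda h, r, w: not h)
cold_dry_still = range_of(lambda h, r, w: not (h or r or w))

# Every true proposition: the actual world plus any set of other worlds.
others = [w for w in WORLDS if w != ACTUAL]
true_props = [frozenset((ACTUAL,) + extra)
              for k in range(len(others) + 1)
              for extra in combinations(others, k)]

# Of two truths, the strictly weaker always has the strictly larger sum.
weaker_truth_has_larger_sum = all(
    total(a) < total(b)
    for a in true_props for b in true_props if a < b)

# Value of content for falsehoods: ~h&~r&~w scores better than ~h.
stronger_falsehood_scores_better = total(cold_dry_still) < total(not_h)
```

The first check succeeds because every world added to a true proposition lies at distance at least 1; the second is the implausible verdict noted in the text.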

Table 5: The min-sum-average function.

| A | truth(A) | min-sum-average(A) |
|---|---|---|
| h&r&w | true | 0 |
| h&r | true | 0.5 |
| h&r&~w | false | 1 |
| h | true | 2 |
| h&~r | false | 2 |
| ~h | false | 4.5 |
| ~h&~r&w | false | 2 |
| ~h&~r | false | 3.5 |
| ~h&~r&~w | false | 3 |

Niiniluoto thus proposes, as a measure of distance from the truth, the average of this information factor and Hilpinen's truth factor: min-sum-average. Averaging the more sensitive information factor (sum) and the closeness-to-being-true factor (min) yields some interesting results. For example, this measure deems h&r&w more truthlike than h&r, and the latter more truthlike than h. And in general min-sum-average delivers the value of content for truths. For any two truths the min factor is the same (0), and the sum factor increases as content decreases. Furthermore, unlike the symmetric difference measures, min-sum-average doesn't deliver the objectionable value of content for falsehoods. For example, ~h&~r&~w is deemed further from the truth than ~h. But min-sum-average is not quite home free from an intuitive point of view. For example, ~h&~r&~w is deemed closer to the truth than ~h&~r. This is because what ~h&~r&~w loses in closeness to the actual world (min) it makes up for by an increase in strength (sum).
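The figures in Table 5 can be recomputed with a few lines. This sketch follows the unweighted, unnormalized arithmetic of the table (the plain mean of min and sum) and makes no attempt to reproduce any weighting or normalization that a fuller statement of the measure might use; the names are illustrative.

```python
from itertools import product

WORLDS = list(product([True, False], repeat=3))  # (h, r, w)
ACTUAL = (True, True, True)


def distance(u, v):
    """City-block distance between two worlds."""
    return sum(a != b for a, b in zip(u, v))


def min_sum_average(pred):
    """Mean of the min factor and the sum factor, as in Table 5."""
    dists = [distance(w, ACTUAL) for w in WORLDS if pred(*w)]
    return (min(dists) + sum(dists)) / 2


msa = {
    "h&r&w": min_sum_average(lambda h, r, w: h and r and w),
    "h&r": min_sum_average(lambda h, r, w: h and r),
    "h": min_sum_average(lambda h, r, w: h),
    "~h": min_sum_average(lambda h, r, w: not h),
    "~h&~r": min_sum_average(lambda h, r, w: not h and not r),
    "~h&~r&~w": min_sum_average(lambda h, r, w: not (h or r or w)),
}
for name, value in msa.items():
    print(name, value)
```

Running it confirms the ordering of the three truths and the comparison between ~h&~r&~w and ~h&~r discussed above.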

In deciding how to proceed here we confront a methodological problem. The methodology favored by Tichý is very much bottom-up. For the purposes of deciding between rival accounts it takes the intuitive data very seriously. Popper (along with Popperians like Miller) favors a more top-down approach. They are deeply suspicious of folk intuitions, and sometimes appear to be in the business of constructing a new concept rather than explicating an existing one. They place enormous weight on certain plausible general principles, largely those that fit in with other principles of their overall theory of science: for example, the principle that strength is a virtue and that the stronger of two true theories (and maybe even of two false theories) is the closer to the truth. A third approach, one which lies between these two extremes, is that of reflective equilibrium. This recognizes the claims of both intuitive judgements on low-level cases, and plausible high-level principles, and enjoins us to bring principle and judgement into equilibrium, possibly by tinkering with both. Neither intuitive low-level judgements nor plausible high-level principles are given advance priority. The protagonist in the truthlikeness debate who has argued most consistently for this approach is Niiniluoto.

How might reflective equilibrium be employed to help resolve the current dispute? Consider a different space of possibilities, generated by a single magnitude like the number of the planets (N). Suppose that N is in fact 8 and that the further n is from 8, the further the proposition that N=n from the Truth. Consider three sets of propositions. In the left-hand column we have a sequence of false propositions which, intuitively, decrease in truthlikeness while increasing in strength. In the middle column we have a sequence of corresponding true propositions, in each case the strongest true consequence of its false counterpart on the left (Popper's “truth content”). Again members of this sequence steadily increase in strength. Finally on the right we have another column of falsehoods. These are also steadily increasing in strength, and like the left-hand falsehoods, seem (intuitively) to be decreasing in truthlikeness as well.

Table 6

| Falsehood (1) | Strongest True Consequence | Falsehood (2) |
|---|---|---|
| 10 ≤ N ≤ 20 | N=8 or 10 ≤ N ≤ 20 | N=9 or 10 ≤ N ≤ 20 |
| 11 ≤ N ≤ 20 | N=8 or 11 ≤ N ≤ 20 | N=9 or 11 ≤ N ≤ 20 |
| …… | …… | …… |
| 19 ≤ N ≤ 20 | N=8 or 19 ≤ N ≤ 20 | N=9 or 19 ≤ N ≤ 20 |
| N = 20 | N=8 or N = 20 | N=9 or N = 20 |

Judgements about the closeness of the true propositions in the center column to the truth may be less intuitively clear than are judgments about their left-hand counterparts. However, it would seem highly incongruous to judge the truths in Table 6 to be steadily increasing in truthlikeness, while the falsehoods both to the left and the right, both marginally different in their overall likeness relations to truth, steadily decrease in truthlikeness. This suggests that all three are sequences of steadily increasing strength combined with steadily decreasing truthlikeness. And if that's right, it might be enough to overturn Popper's principle that amongst true theories strength and truthlikeness must covary (even while granting that this is not so for falsehoods).

If this argument is sound, it removes an objection to averaging distances, but it does not settle the issue in its favor, for there may still be other more plausible counterexamples to averaging that we have not considered.
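The structural facts about Table 6 on which the argument relies (truth values and steadily increasing strength) can be checked mechanically. The sketch below represents each proposition by the set of values of N it allows and takes N=8 as the truth, as supposed in the text. It does not compute truthlikeness, which is left to intuitive judgement here; the helper names are hypothetical.

```python
ACTUAL_N = 8  # the text supposes that N is in fact 8


def interval(lo, hi=20):
    """Range of the proposition lo <= N <= hi, as a set of values of N."""
    return frozenset(range(lo, hi + 1))


left = [interval(k) for k in range(10, 21)]   # Falsehood (1)
middle = [a | {ACTUAL_N} for a in left]       # strongest true consequence
right = [a | {9} for a in left]               # Falsehood (2)


def steadily_stronger(seq):
    """Each range is a proper subset of its predecessor's range."""
    return all(later < earlier for earlier, later in zip(seq, seq[1:]))


def nearest(rng):
    """Distance from 8 of the closest value of N that rng allows."""
    return min(abs(n - ACTUAL_N) for n in rng)


all_stronger = all(steadily_stronger(col) for col in (left, middle, right))
middle_all_true = all(ACTUAL_N in m for m in middle)
others_all_false = not any(ACTUAL_N in p for p in left + right)
```

In the left-hand column the nearest allowed value recedes from 8 by one at every step, which is one way of making the intuitive loss of truthlikeness visible.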

Schurz and Weingartner argue that this extension problem is the main defect of the likeness approach:

“the problem of extending truthlikeness from possible worlds to propositions is intuitively underdetermined. Even if we are granted an ordering or a measure of distance on worlds, there are many very different ways of extending that to propositional distance, and apparently no objective way to decide between them.” (Schurz and Weingartner 2010, 423)

One way of answering this objection head on is to identify principles that, given a distance function on worlds, constrain the distances between worlds and sets of worlds, principles perhaps powerful enough to identify a unique extension. (An argument of this kind is considered in §1.5.)

1.4.3 Likeness in more complex frameworks

Simple propositional examples are convenient for the purposes of illustration, but what the likeness approach needs is some evidence that it can transcend such simple examples. (Popper's content approach, whatever else its shortcomings, can be applied in principle to theories expressible in any language, no matter how sophisticated.) Can the likeness program be generalized to arbitrarily complex frameworks? For example, does the idea extend even to first-order frameworks the possible worlds of which may well be infinitely complex?

There is no straightforward, natural or obvious way to construct distance or likeness measures on worlds considered as non-denumerably infinite collections of basic or “atomic” states. But a fruitful way of implementing the likeness approach in frameworks that are more interesting and realistic than the toy propositional weather framework involves cutting the space of possibilities down into manageable chunks. This is not just an ad hoc response to the difficulties imposed by infinite states, but is based on a principled view of the nature of inquiry: of what constitutes an inquiry, a question, a query, a cognitive problem, or a subject matter. Although these terms might seem to signify quite different notions they all have something deep in common.

Consider the notion of a query or a question. Each well-formed question Q receives an answer in each complete possible state of the world. Two worlds are equivalent with respect to the question Q if Q receives the same answer in both. Given that Q induces an equivalence relation on worlds, the question partitions the logical space into a set of mutually exclusive and jointly exhaustive cells: {C1, C2, ... , Ci, ...}. Each cell Ci is a complete possible answer to the question Q. And each incomplete answer to Q is tantamount to the union, or disjunction, of some collection of the complete answers. For example, the question what is the number of the planets? partitions the set of worlds into those in which N=0, those in which N=1, ..., and so on. The question what is the state of the weather? (relative to our three toy factors) partitions the set of worlds into those in which it is hot, rainy and windy, those in which it is hot, rainy and still, ... and so on. (See Oddie 1986a.) In other words, the so-called “worlds” in the simple weather framework are in fact surrogates for large classes of worlds, and in each such class the answer to the coarse-grained weather question is the same. The elements of this partition are all complete answers to the weather question, and distances between the elements of the partition can be handled rather easily as we have seen. Essentially the same idea can be applied to much more complex questions.
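The way a question carves logical space into cells can be sketched by grouping worlds according to the answer they give. The example below assumes a slightly richer space with a hypothetical fourth factor c (cloudy), which is not part of the text's framework, so that each complete answer to the three-factor weather question covers more than one world.

```python
from collections import defaultdict
from itertools import product

# Fine-grained worlds: (h, r, w, c), where c is an assumed extra factor.
FINE_WORLDS = list(product([True, False], repeat=4))


def partition(worlds, question):
    """Group worlds into cells according to the answer each one gives."""
    cells = defaultdict(set)
    for w in worlds:
        cells[question(w)].add(w)
    return dict(cells)


# "What is the state of the weather?" relative to h, r and w only.
weather = partition(FINE_WORLDS, lambda w: w[:3])

# A coarser question: "Is it hot?"
is_hot = partition(FINE_WORLDS, lambda w: w[0])

# An incomplete answer such as "hot and rainy" is a union of complete answers.
hot_and_rainy = weather[(True, True, True)] | weather[(True, True, False)]
```

Each key of `weather` plays the role of one of the eight "worlds" of the toy framework, standing in for the class of finer-grained worlds that agree on the weather.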

Niiniluoto characterizes the notion of a cognitive problem in the same way, as a partition of a space of possibilities. And this same explication has been remarkably fruitful in clarifying the notions of aboutness and of a subject matter (Oddie 1986a, Lewis 1988).

Each inquiry is based on some question that admits of a range of possible complete answers, which effect a partition {C1, C2, ... , Cn, ...} of the space of worlds. Incomplete answers to the question are equivalent to disjunctions of those complete answers which entail the incomplete answer. Now, often there is a rather obvious measure of distance between the elements of such partitions, which can be extended to a measure of distance of an arbitrary answer (whether complete or partial) from the true answer.

One rather promising source of natural partitions (Tichý 1976, Niiniluoto 1977) is Hintikka's distributive normal forms (Hintikka 1963). These are smooth generalizations of the familiar maximal conjunctions of propositional frameworks used above. What corresponds to the maximal conjunctions are known as constituents, which, like maximal conjunctions in the propositional case, are jointly exhaustive and mutually exclusive. Constituents lay out, in a very perspicuous manner, all the different ways individuals can be related to each other, relative, of course, to some collection of basic attributes. (These correspond to the collection of atomic propositions in the propositional case.) The basic attributes can be combined by the usual boolean operations into complex attributes which are also like the maximal conjunctions, in that they form a partition of the class of individuals. These complex predicates are called Q-predicates in the simple monadic case, and attributive constituents in the more general case. Constituents specify, for each member in this set of mutually exclusive and jointly exhaustive complex attributes, whether or not that complex attribute is exemplified.

In the simplest case a framework of three basic monadic attributes (F, G and H) gives rise to eight complex attributes, Carnap's Q-predicates:

Q1 F(x)&G(x)&H(x)

Q2 ~F(x)&G(x)&H(x)

…

Q8 ~F(x)&~G(x)&~H(x)

The simplest constituents run through these Q-predicates (or attributive constituents) specifying for each one whether or not it is instantiated:

C1 ∃xQ1(x) & ∃xQ2(x) & ... & ∃xQ7(x) & ∃xQ8(x)

C2 ∃xQ1(x) & ∃xQ2(x) & ... & ∃xQ7(x) & ~∃xQ8(x)

…

C255 ~∃xQ1(x) & ~∃xQ2(x) & ... & ~∃xQ7(x) & ∃xQ8(x)

C256 ~∃xQ1(x) & ~∃xQ2(x) & ... & ~ ∃xQ7(x) & ~∃xQ8(x)

In effect the partition induced by the set of constituents corresponds to the question: Which Q-predicates are instantiated? A complete answer to that question will specify one of the constituents; an incomplete answer will specify a disjunction of such.

Note that the last constituent in this listing, C256, is logically inconsistent. Given that the set of Q-predicates is jointly exhaustive, each individual has to satisfy one of them, so at least one of them has to be instantiated. Once such inconsistent constituents are omitted, every sentence in a monadic language (without identity or constants) is equivalent to a disjunction of a unique set of these constituents. (In the simple monadic case here there is only one inconsistent constituent, but in the general case there are many inconsistent constituents. Identifying inconsistent constituents is equivalent to the decision problem for first-order logic.)
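
The counting here can be checked with a small sketch. Representing a Q-predicate as a tuple of truth values and a constituent as the set of Q-predicates it declares instantiated is an assumption made for illustration, as is the particular enumeration order.

```python
from itertools import product

ATTRIBUTES = ("F", "G", "H")

# A Q-predicate fixes True/False for each basic attribute: 2**3 = 8 of them.
Q_PREDICATES = list(product([True, False], repeat=len(ATTRIBUTES)))

def all_constituents(q_predicates):
    """Enumerate every constituent as the frozenset of instantiated Q-predicates."""
    result = []
    for flags in product([True, False], repeat=len(q_predicates)):
        result.append(frozenset(q for q, on in zip(q_predicates, flags) if on))
    return result

constituents = all_constituents(Q_PREDICATES)

# The constituent that instantiates no Q-predicate at all is inconsistent,
# because every individual must satisfy exactly one Q-predicate.
consistent = [c for c in constituents if c]

print(len(Q_PREDICATES), len(constituents), len(consistent))  # 8 256 255
```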

For example, the sentence ∀x(F(x)&G(x)&H(x)) is logically equivalent to:

C64 ∃xQ1(x) & ~ ∃xQ2(x) & ... & ~∃xQ7(x) & ~ ∃xQ8(x).

The sentence ∀x(~F(x)&G(x)&H(x)) is logically equivalent to:

C128 ~∃xQ1(x) & ∃xQ2(x) & ~∃xQ3(x) & ... & ~∃xQ7(x) & ~∃xQ8(x).

The sentence ∀x(~F(x)&~G(x)&~H(x)) is logically equivalent to:

C255 ~∃xQ1(x) & ~∃xQ2(x) & ... &~ ∃xQ7(x) & ∃xQ8(x).
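
Equivalences of this kind can be checked mechanically, at least for simple quantified sentences. The sketch below rests on the observation that a sentence of the form ∀xφ(x) holds according to a constituent just in case every Q-predicate that the constituent instantiates satisfies φ, and ∃xφ(x) holds just in case at least one does. The representation and the function names are assumptions made for illustration.

```python
from itertools import combinations, product

# Q-predicates as (F, G, H) truth-value profiles.
Q_PREDICATES = list(product([True, False], repeat=3))

def consistent_constituents():
    """Yield every non-empty set of instantiated Q-predicates."""
    for size in range(1, len(Q_PREDICATES) + 1):
        for combo in combinations(Q_PREDICATES, size):
            yield frozenset(combo)

def normal_form_universal(phi):
    """Constituents in the normal form of 'for all x, phi(x)'."""
    return [c for c in consistent_constituents() if all(phi(*q) for q in c)]

def normal_form_existential(phi):
    """Constituents in the normal form of 'there is an x such that phi(x)'."""
    return [c for c in consistent_constituents() if any(phi(*q) for q in c)]

all_fgh = normal_form_universal(lambda f, g, h: f and g and h)
none_fgh = normal_form_universal(lambda f, g, h: not (f or g or h))
some_fgh = normal_form_existential(lambda f, g, h: f and g and h)

print(all_fgh)        # a single constituent: only F&G&H is instantiated
print(len(some_fgh))  # every consistent constituent that instantiates F&G&H
```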

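More typically, a sentence is equivalent to a disjunction of constituents. For example, the sentence ∀x(G(x)&H(x)) allows each individual to satisfy either F(x)&G(x)&H(x) or ~F(x)&G(x)&H(x), so it is logically equivalent to:

C64 ∨ C128 ∨ (∃xQ1(x) & ∃xQ2(x) & ~∃xQ3(x) & ... & ~∃xQ8(x)).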

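And the sentence ∀x(F(x)∨G(x)∨H(x)), which says just that Q8 is not instantiated, is logically equivalent to the disjunction of the 127 consistent constituents that contain the conjunct ~∃xQ8(x), among them C2, C64 and C128.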

Before considering whether distributive normal forms (disjunctions of constituents) can be generalized to more complex and interesting frameworks (involving relations and greater quantificational complexity) it is worth thinking about how to define distances between these constituents, since they are the simplest of all.

If we had a good measure of distance between constituents then presumably the distance of some disjunction of constituents from one particular constituent could be obtained using the right extension function (§1.4.2) whatever that happens to be. And then the distance of an arbitrary expressible proposition from the truth could be defined as the distance of its normal form from the true constituent.

As far as distances between constituents go, there is an obvious generalization of the city-block (or symmetric difference) measure on the corresponding maximal conjunctions (or propositional constituents): namely, the size of the symmetric difference of the sets of Q-predicates associated with each constituent. So, for example, since C1 and C2 differ on the instantiation of just one Q-predicate (namely Q8) the distance between them would be 1. At the other extreme, since C2 and C255 disagree on the instantiation of every Q-predicate, the distance between them is 8 (maximal).

Niiniluoto first proposed this as the appropriate distance measure for these constituents in his 1977, and since he found a very similar proposal in Clifford, he called it the Clifford measure. Since it is based on symmetric differences it yields a function that satisfies the triangular inequality (the distance between X and Z is no greater than the sum of the distances between X and Y and Y and Z).
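
A minimal sketch of this measure, assuming that Q-predicates are labelled 1 to 8 and that a constituent is represented by the set of labels it declares instantiated (both conventions are introduced here only for illustration):

```python
def clifford_distance(c1, c2):
    """Size of the symmetric difference of two sets of instantiated Q-predicates."""
    return len(set(c1) ^ set(c2))

C1 = frozenset(range(1, 9))   # every Q-predicate instantiated
C2 = frozenset(range(1, 8))   # every Q-predicate except Q8
C255 = frozenset({8})         # only Q8 instantiated
only_q1 = frozenset({1})      # only Q1 instantiated
only_q2 = frozenset({2})      # only Q2 instantiated

print(clifford_distance(C1, C2))            # 1
print(clifford_distance(C2, C255))          # 8
print(clifford_distance(only_q1, only_q2))  # 2
print(clifford_distance(only_q1, C255))     # 2
```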

Note that on the Clifford measure the distance between C64 (which says that Q1 is the only instantiated Q-predicate) and C128 (which says that Q2 is the only instantiated Q-predicate) is the same as the distance between C64 and C255 (which says that Q8 is the only instantiated Q-predicate).

C64 says that everything has F, G and H; C128, that everything has ~F, G and H; C255, that everything has ~F, ~G and ~H. If the likeness intuitions in the weather framework have anything to them, then C128 is closer to C64 than C255 is. Tichý (1976 and 1978) captured this intuition by first measuring the distances between Q-predicates using the city-block measure. Then the basic idea is to extend these distances to distances between sets of Q-predicates by identifying the “minimal routes” from one set of such Q-predicates to another. The distance between two sets of Q-predicates is the distance along some minimal route.

Suppose that the two sets of Q-predicates associated with two constituents are the same size. Let a linkage be any 1-1 mapping from one set to the other. Let the breadth of the linkage be the average distance between linked items. Then according to Tichý the distance between two constituents (or their associated sets of Q-predicates) is the breadth of the narrowest linkage between them. If one constituent contains more Q-predicates than the other, then a linkage is defined as a surjection from the larger set to the smaller set, and the rest of the definition is the same.
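
The linkage definition can be sketched by brute force. The code below enumerates every surjection from the larger set onto the smaller one (for sets of equal size these are exactly the 1-1 mappings) and returns the smallest average distance. It assumes an unnormalized city-block distance between Q-predicates and non-empty sets; the names are hypothetical, and the exhaustive search is only practical for small sets.

```python
from itertools import product

def city_block(q1, q2):
    """Number of basic attributes on which two Q-predicates differ."""
    return sum(a != b for a, b in zip(q1, q2))

def linkage_distance(set_a, set_b):
    """Breadth of the narrowest linkage between two non-empty sets of Q-predicates."""
    if not set_a or not set_b:
        raise ValueError("both sets must be non-empty")
    larger, smaller = sorted((list(set_a), list(set_b)), key=len, reverse=True)
    best = None
    # Each tuple assigns an image in `smaller` to every member of `larger`.
    for images in product(smaller, repeat=len(larger)):
        if set(images) != set(smaller):
            continue  # not a surjection, so not a linkage
        breadth = sum(city_block(q, img) for q, img in zip(larger, images)) / len(larger)
        if best is None or breadth < best:
            best = breadth
    return best

FGH = (True, True, True)
nFGH = (False, True, True)
nFnGnH = (False, False, False)

print(linkage_distance({FGH}, {nFGH}))    # 1.0
print(linkage_distance({FGH}, {nFnGnH}))  # 3.0
```

On this sketch the constituent that instantiates only ~F&G&H comes out closer to the one that instantiates only F&G&H than the constituent that instantiates only ~F&~G&~H does.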

This idea certainly captures the intuition concerning the relative distances between C128, C64 and C255. But it suffers the following defect: it does not satisfy the triangular inequality. This is because in a linkage between sets with different numbers of members, some Q-predicates will be unfairly represented, thereby possibly distorting the intuitive distance between the sets. This can be remedied, however, by altering the account of a linkage so that linkages are fair. The details need not detain us here (for those see Oddie 1986a) but in a fair linkage each Q-predicate in a constituent receives the same weight in the linkage as it possesses in the constituent itself.

The simple Clifford measure ignores the distances between the Q-predicates, but it can be modified and refined in various ways to take account of such distances (see Niiniluoto 1987).

Hintikka showed that the distributive normal form theorem can be generalized to first-order sentences of any degree of relational or quantificational complexity. Any sentence of a first-order language comes with a certain quantificational depth: the number of its overlapping quantifiers. So, for example, analysis in the first-order idiom would reveal that (1) is a depth-1 sentence; (2) is a depth-2 sentence; and (3) is a depth-3 sentence.

(1) Everyone loves himself.

(2) Everyone who loves another is loved by the other.

(3) Everyone who loves another loves the other's lovers.
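
Quantificational depth, understood as the maximum nesting of quantifiers, is easy to compute once a sentence is given as a syntax tree. The tuple encoding and the particular first-order renderings of (1) to (3) below are assumptions made for illustration; other renderings are possible, and the function measures the depth of a given formula rather than of the proposition it expresses.

```python
def depth(formula):
    """Maximum number of nested quantifiers in a formula given as nested tuples."""
    op = formula[0]
    if op == "atom":
        return 0
    if op in ("forall", "exists"):
        # ("forall", variable, body): one more layer than the body.
        return 1 + depth(formula[2])
    # Connectives: ("not", a), ("and", a, b), ("or", a, b), ("implies", a, b).
    return max(depth(sub) for sub in formula[1:])

def loves(a, b):
    return ("atom", "L", a, b)

def distinct(a, b):
    return ("not", ("atom", "=", a, b))

# (1) Everyone loves himself.
s1 = ("forall", "x", loves("x", "x"))

# (2) Everyone who loves another is loved by the other.
s2 = ("forall", "x", ("forall", "y",
      ("implies", ("and", distinct("x", "y"), loves("x", "y")), loves("y", "x"))))

# (3) Everyone who loves another loves the other's lovers.
s3 = ("forall", "x", ("forall", "y",
      ("implies", ("and", distinct("x", "y"), loves("x", "y")),
       ("forall", "z", ("implies", loves("z", "y"), loves("x", "z"))))))

print(depth(s1), depth(s2), depth(s3))  # 1 2 3
```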

We could call a proposition depth-d if the shallowest depth at which it can be expressed is d. Hintikka showed how to define both attributive constituents and constituents of depth-d recursively, so that every depth-d proposition can be expressed by a disjunction of a unique set of (consistent) depth-d constituents.

Constituents can be represented as finite tree-structures, the nodes of which are all like Q-predicates – that is, strings of atomic formulas either negated or unnegated. Consequently, if we can measure distance between such trees we will be well down the path of measuring the truthlikeness of depth-d propositions – it will be some function of the distance of constituents in its normal form from the true depth-d constituent. And we can measure the distance between such trees using the same idea of a minimal path from one to the other. This too can be spelt out in terms of fair linkages between trees, thus guaranteeing the triangular inequality.

This program for defining distance between expressible propositions has proved quite flexible and fruitful, delivering a wide range of intuitively appealing results, at least in simple first-order cases. And the approach can be further refined – for example, by means of Carnap's concept of a family of properties.

There are yet more extension problems for the likeness program. First-order theories can be infinitely deep; that is to say, there is no finite depth at which such a theory can be expressed. The very feature of first-order constituents that makes them manageable, their finite structure, ensures that they cannot express propositions that are not finitely axiomatizable in a first-order language. Clearly it would be desirable for an account of truthlikeness to be able, in principle, to rank propositions that go beyond the expressive power of first-order sentences. First-order sentences lack the expressive power of first-order theories in general, and first-order languages lack the expressive resources of higher-order languages. A theory of truthlikeness that applies only to first-order sentences, while not a negligible achievement, would still have limited application.

There are plausible ways of extending the normal-form approach to these more complex cases. The relative truthlikeness of infinitely deep first-order propositions can be captured by taking limits as depth increases to infinity (Oddie 1978, Niiniluoto 1987). And Hintikka's normal form theorem, along with suitable measures of distance between higher-order constituents, can be extended to embrace higher-order languages. (These higher-order “permutative constituents” are defined in Oddie 1986a, where a normal form theorem is also proved.) Since there are propositions that are expressible in first-order logic only by a non-finitely axiomatizable theory, but that can be expressed by a single sentence of higher-order logic, this greatly expands the reach of the normal-form approach.

Although scientific progress sometimes seems to consist in the accumulation of facts about the existence of certain kinds of individuals (witness the recent excitement evoked by the discovery of the Higgs boson, for example), mostly the scientific enterprise is concerned with causation and laws of nature. L. J. Cohen has accused the truthlikeness program of completely ignoring this aspect of the aim of scientific inquiry.

… the truth or likeness to truth that much of science pursues is of a rather special kind – we might call it ‘physically necessary truth’ (Cohen 1980, 500)

The discovery of instances of new kinds (as well as the inability to find them when a theory predicts that they will appear under certain circumstances) is generally welcomed because of the light such discoveries throw on the structure of the laws of nature. The trouble with first-order languages is that they lack adequate resources to represent genuine laws. Extensional first-order logic embodies the Humean thesis that there are no necessary connections between distinct existences. At best first-order theories can capture pervasive, but essentially accidental, regularities.

There are various ways of modeling laws. According to the theory of laws favored by Tooley 1977 and Armstrong 1983, laws of nature involve higher-order relations between first-order universals. These, of course, can be represented easily in a higher-order language (Oddie 1982). But one might also capture lawlikeness in a first-order modal language supplemented with sentential operators for natural necessity and natural possibility (Niiniluoto 1983). And an even simpler representation in terms of nomic conjunctions in a propositional framework, which utilizes the simple city-block measure on partial constituents, has recently been developed in Cevolani, Festa and Kuipers 2012.

Another problem for the normal-form approach involves the fact that most interesting theories in science are not expressible in frameworks generated by basic properties and relations alone, but rather require continuous magnitudes, that is, world-dependent functions from various entities to numbers. All the normal-form based accounts (even those developed for higher-order frameworks) are for systems that involve basic properties and relations, but exclude basic functions. Of course, magnitudes can be finitely approximated by Carnap's families of properties, and relations between those can be represented in higher-order frameworks, but if those families are infinite then we lose the finitude characteristic of regular constituents. Once we lose those finite representations of states, the problem of defining distances becomes intractable again.