Agent-Based Modeling in the Philosophy of Science

Agent-based models (ABMs) are computational models that simulate the behavior of individual agents in order to study emergent phenomena at the level of the community. Depending on the application, agents may represent humans, institutions, microorganisms, and so forth. The agents’ actions are based on autonomous decision-making and other behavioral traits, implemented through formal rules. By simulating decentralized local interactions among agents, as well as interactions between agents and their environment, ABMs enable us to observe complex population-level phenomena in a controlled and gradual manner.

This entry focuses on the applications of agent-based modeling in the philosophy of science, specifically within the realm of formal social epistemology of science. The questions examined through these models are typically of direct relevance to philosophical discussions concerning social aspects of scientific inquiry. Yet, many of these questions are not easily addressed using other methods since they concern complex dynamics of social phenomena. After providing a brief background on the origins of agent-based modeling in philosophy of science (Section 1), the entry introduces the method and its applications as follows. We begin by surveying the central research questions that have been explored using ABMs, aiming to show why this method has been of interest in philosophy of science (Section 2). Since each research question can be approached through various modeling frameworks, we next delve into some of the common frameworks utilized in philosophy of science to show how ABMs tackle philosophical problems (Section 3). Subsequently, we revisit the previously surveyed questions and examine the insights gained through ABMs, addressing what has been found to answer each question (Section 4). Finally, we turn to the epistemology of agent-based modeling and the underlying epistemic function of ABMs (Section 5). Given the often highly idealized nature of ABMs, we examine which epistemic roles support the models’ capacity to engage with philosophical issues, whether for exploratory or explanatory goals. The entry concludes by offering an outlook on future research directions in the field (Section 6).

Since the literature on agent-based modeling of science is vast and growing, it is impossible to give an exhaustive survey of models developed on this topic. Instead, this entry aims to provide a systematic overview by focusing on paradigmatic examples of ABMs developed in philosophy of science, with an eye to their relevance beyond the confines of formal social epistemology.

- 1. Origins

- 2. Central Research Questions

- 3. Common Modeling Frameworks

- 4. Central Results

- 5. Epistemology of Agent-Based Modeling

- 6. Conclusion and Outlook

- Bibliography

1. Origins

The method of agent-based modeling was originally developed in the 1970s and ’80s with models of social segregation (Schelling 1971; Sakoda 1971; see also Hegselmann 2017) and cooperation (Axelrod & Hamilton 1981) in social sciences, and under the name of “individual-based modeling” in ecology (for an overview see Grimm & Railsback 2005). Following this tradition, ABMs drew the interest of scholars studying social aspects of scientific inquiry. By representing scientists as agents equipped with rules for reasoning and decision-making, agent-based modeling could be used to study the social dynamics of scientific research. As a result, ABMs of science have been developed across various disciplines that include science in their subject domain: from sociology of science, organizational sciences, cultural evolution theory, the interdisciplinary field of meta-science (or “science of science”), to social epistemology and philosophy of science. While ABMs developed in philosophy of science often tackle themes that are similar or related to those examined by ABMs of science in other domains, they are motivated by philosophical questions—issues embedded in the broader literature in philosophy of science. Their introduction was influenced by several parallel research lines: analytical modeling in philosophy of science, computational modeling in related philosophical domains, and agent-based modeling in social sciences. In the following, we take a brief look at each of these precursors.

One of the central ideas behind the development of formal social epistemology of science is succinctly expressed by Philip Kitcher in his The Advancement of Science:

The general problem of social epistemology, as I conceive it, is to identify the properties of epistemically well-designed social systems, that is, to specify the conditions under which a group of individuals, operating according to various rules for modifying their individual practices, succeed, through their interactions, in generating a progressive sequence of consensus practices. (Kitcher 1993: 303)

Such a perspective on social epistemology of science highlighted the need for a better understanding of the relationship between individual and group inquiry. Following the tradition of formal modeling in economics, philosophers introduced analytical models to study tensions inherent to this relationship, such as the tension between individual and group rationality. In this way, they sought to answer the question: how can individual scientists, who may be driven by non-epistemic incentives, jointly form a community that achieves epistemic goals? Most prominently, Goldman and Shaked (1991) developed a model that examines the relationship between the goal of promoting one’s professional success and the promotion of truth-acquisition, whereas Kitcher (1990, 1993) proposed a model of the division of cognitive labor, showing that a community consisting of scientists driven by non-epistemic interests may achieve an optimal distribution of research efforts. This work was followed by a number of other contributions (e.g., Zamora Bonilla 1999; Strevens 2003). Analytic models developed in this tradition endorsed economic approaches to the study of science, rooted in the idea of a “generous invisible hand”, according to which individuals interacting in a given community can bring about consequences that are beneficial for the goals of the community without necessarily aiming at those consequences (Mäki 2005).

Around the same time, computational methods entered the philosophical study of rational deliberation and cooperation in the context of game theory (Skyrms 1990, 1996; Grim, Mar, & St. Denis 1998), theory evaluation in philosophy of science (Thagard 1988) and the study of opinion dynamics in social epistemology (Hegselmann & Krause 2002, 2005; Deffuant, Amblard, Weisbuch, & Faure 2002). Computational models introduced in this literature included ABMs: for instance, a cellular automata model of the Prisoner’s Dilemma, or models examining how opinions change within a group of agents.

Agent-based modeling was first applied to the study of science in sociology of science, with the model developed by Nigel Gilbert (1997) (cf. Payette 2011). Gilbert’s ABM aimed at reproducing regularities that had previously been identified in quantitative sociological research on indicators of scientific growth (such as the growth rate of publications and the distribution of citations per paper). The model followed an already established tradition of simulations of artificial societies in social sciences (cf. Epstein & Axtell 1996). In contrast to abstract and highly-idealized models developed in other social sciences (such as economics and archaeology), ABMs in sociology of science tended towards an integration of simulations and empirical studies used for their validation (cf. Gilbert & Troitzsch 2005).

Soon after, ABMs were introduced to the philosophy of science through pioneering works by Zollman (2007), Weisberg and Muldoon (2009), Grim (2009), Douven (2010)—to mention some of the most prominent examples. In contrast to ABMs developed in sociology of science, these ABMs followed the tradition of abstract and highly-idealized modeling. Similar to analytical models, they were introduced to study how various properties of individual scientists—such as their reasoning, decision-making, actions and relations—bring about phenomena characterizing the scientific community—such as a success or a failure to acquire knowledge. By representing inquiry in an abstract and idealized way, they facilitated insights into the relationship between some aspects of individual inquiry and its impact on the community while abstracting away from numerous factors that occur in actual scientific practice. But in contrast to analytical models, ABMs proved to be suitable for scenarios often too complex for analytical approaches. These scenarios include heterogeneous scientific communities, with individual scientists differing in their beliefs, research heuristics, social networks, goals of inquiry, and so forth. Each of these properties can change over time, depending on the agents’ local interactions. In this way ABMs can show how certain features characterizing individual inquiry suffice to generate population-level phenomena under a variety of initial conditions. Indeed, the introduction of ABMs to philosophy of science largely followed the central idea of generative social science: to explain the emergence of a macroscopic regularity we need to show how decentralized local interactions of heterogeneous autonomous agents can generate it. As Joshua Epstein summed it up: “If you didn’t grow it, you didn’t explain its emergence” (2006).

2. Central Research Questions

ABMs of science typically model the impact of certain aspects of individual inquiry on some measure of epistemic performance of the scientific community. This section surveys some of the central research questions investigated in this way. Its aim is to explain why ABMs were introduced to study philosophical questions, and how their introduction relates to the broader literature in philosophy of science.

2.1 Theoretical diversity and the incentive structure of science

How does a community of scientists make sure to hedge its bets on fruitful lines of inquiry, instead of only pursuing suboptimal ones? Answering this question is a matter of coordination and organization of cognitive labor which can generate an optimal diversity of pursued theories. The importance of a synchronous pursuit of a plurality of theories in a given domain has long been recognized in the philosophical literature (Mill 1859; Kuhn 1977; Feyerabend 1975; Kitcher 1993; Longino 2002; Chang 2012). But how does a scientific community achieve an optimal distribution of research efforts? Which factors influence scientists to divide and coordinate their labor in a way that stimulates theoretical diversity? In short, how is theoretical diversity achieved and maintained?

One way to address this question is by examining how different incentives of scientists impact their division of labor. To see the relevance of this question, consider a community of scientists all of whom are driven by the same epistemic incentives. As Kitcher (1990) argued, in such a community everyone might end up pursuing the same, initially most promising line of inquiry, resulting in little to no diversity. Traditionally, philosophers of science tried to address this worry by arguing that a diversity in theory choice may result from diverse methodological approaches (Feyerabend 1975), diverse applications of epistemic values (Kuhn 1977), or from different cognitive attitudes towards theories, such as acceptance and pursuit-worthiness (Laudan 1977). Kitcher, however, wondered whether non-epistemic incentives, such as fame and fortune—usually thought of as interfering with the epistemic goals of science—might actually be beneficial for the community by encouraging scientists to deviate from dominant lines of inquiry.

The idea that scientists are rewarded for their achievements through credit, which impacts their research choices, had previously been recognized by Merton (1973) and Hull (1978, 1988). For example, a scientist may receive recognition for being the first to make a discovery (known as the “priority rule”), which may incentivize a specific approach to research. Yet, such non-epistemic incentives could also fail to promote an optimal kind of diversity. For instance, they may result in too much research being spent on futile hypotheses and/or in too few scientists investigating the best theories. Moreover, an incentive structure will have undesirable effects if rewards are misallocated to scientists that are well-networked rather than assigned to those who are actually first to make discoveries, or if credit-driven science lowers the quality of scientific output. This raises the question: which incentive structures promote an optimal division of labor, without having epistemically or morally harmful effects?

ABMs provide an apt ground for studying these issues: by modeling individual scientists as driven by specific incentives, we can examine their division of labor and the resulting communal inquiry. We will look at the models studying these issues in Section 4.1.

2.2 Theoretical diversity and the communication structure of science

Another way to study theoretical diversity is by focusing on the communication structure of scientific communities. In this case we are interested in how the information flow among scientists impacts their distribution of research across different rival hypotheses. The importance of scientific interaction for the production of scientific knowledge has traditionally been emphasized in social epistemology (Goldman 1999). But how exactly does the structure of communication impact scientists’ generation of knowledge? Are scientists better off communicating within strongly connected social networks, or rather within less connected ones, and under which conditions of inquiry? These and related questions belong to the field of network epistemology, which studies the impact of communication networks on the process of knowledge acquisition. Network epistemology has its origin in economics, sociology and organizational sciences (e.g., Granovetter 1973; Burt 1992; Jackson & Wolinsky 1996; Bala & Goyal 1998) and it was first combined with agent-based modeling in the philosophical literature by Zollman (2007) (see also Zollman 2013).

Simulations of scientific interaction originated in the idea that different communication networks among scientists, characterized by varying degrees of connectedness (see Figure 1), may have a different impact on the balance between “exploration” and “exploitation” of scientific ideas. Suppose a scientist is trying to find an optimal treatment for a certain disease, since the existing one is insufficiently effective. On the one hand, she could pursue the currently dominant hypothesis concerning the causes of the disease, hoping that it will eventually lead to better results. On the other hand, she could explore novel ideas hoping to have a breakthrough leading to a more successful cure for the disease. The scientist thus faces a trade-off between exploitation as the use of existing ideas and exploration as the search for new possibilities, long studied in theories of formal learning and in organizational sciences (March 1991). The information flow among scientists could impact this trade-off in the following way: if an initially misleading idea is shared too quickly throughout the community, scientists may lock in on researching it, prematurely abandoning the search for better solutions. Alternatively, if the information flow is slow and sparse, important insights gained by some scientists, which could lead to an optimal solution, may remain undetected by the rest of the community for a lengthy period of time. ABMs were introduced to investigate whether and in which circumstances the information flow could have either of these effects. For instance, if scientists are assumed to be rational agents, could a tightly connected community end up exploring too little and miss out on significant lines of inquiry?

Besides studying communities consisting of “epistemically uncompromised” scientists—that is, agents whose inquiry and actions are directed at discovering and promoting the truth—similar questions can be posed about communities in which epistemic interests have been overridden. For instance, the impact of industrial interest groups on science may lead to biased or deceptive practices, which may sway the scientific community away from its epistemic goals (Holman & Elliott 2018). While recent philosophical discussions on this problem have largely focused on the role of non-epistemic values in science (Douglas 2009; Holman & Wilholt 2022; Bueter 2022; Peters 2021), ABMs were introduced to examine how epistemically pernicious strategies can impact the process of inquiry, as well as to identify interventions that can be used to mitigate their harmful effects.

In addition to the problem of theoretical diversity, network epistemology has been applied to a number of other themes, such as optimal forms of collaboration, factors leading to scientific polarization, effects of conformity on the collective inquiry, effects of demographic diversity, the position of minorities, optimal regulations of dual-use research, argumentation dynamics, and so forth. We will look at the models studying theoretical diversity and the communication structure of science in Section 4.2 and Section 4.6.

Figure 1: Three types of idealized communication networks, representing an increasing degree of connectedness: (a) a cycle, (b) a wheel, and (c) a complete graph. The nodes in each graph stand for scientists, while edges between the nodes stand for information channels between two scientists.
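The three network types in Figure 1 can be written down as adjacency maps. The following Python sketch is only an illustration: the function names, the choice of node 0 as the hub of the wheel, and the use of edge density as a rough proxy for "connectedness" are assumptions made here, not part of any particular published model.

```python
def cycle(n):
    """Each scientist i is linked to the two neighbours i-1 and i+1 (mod n)."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def wheel(n):
    """A cycle of n-1 scientists plus a hub (node 0, by assumption) linked to all."""
    rim = list(range(1, n))
    graph = {0: set(rim)}
    for k, node in enumerate(rim):
        left = rim[(k - 1) % len(rim)]
        right = rim[(k + 1) % len(rim)]
        graph[node] = {0, left, right}
    return graph


def complete(n):
    """Every scientist is linked to every other scientist."""
    return {i: set(range(n)) - {i} for i in range(n)}


def density(graph):
    """Share of all possible information channels that actually exist."""
    n = len(graph)
    edges = sum(len(neighbours) for neighbours in graph.values()) / 2
    return edges / (n * (n - 1) / 2)


for build in (cycle, wheel, complete):
    print(build.__name__, round(density(build(6)), 3))
```

For a community of six scientists the density rises from the cycle through the wheel to the complete graph, which matches the ordering described in the caption.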

2.3 Cognitive diversity

A diversity of cognitive features of individuals can be beneficial in various problem-solving situations, including business and policy-making (Page 2017). But how does the diversity of cognitive features of scientists, including their background beliefs, reasoning styles, research preferences, heuristics and strategies, impact the inquiry of the scientific community? In philosophy of science, this issue gained traction with Kuhn’s distinction between normal and revolutionary science (Kuhn 1962), suggesting that different propensities may push scientists towards one type of research rather than another (see also Hull 1988). This raises the question: how does the distribution of risk-seeking, maverick scientists and risk-averse ones impact the inquiry of the community? Put more generally: do some ways of dividing labor across different research heuristics result in a more successful collective inquiry than others?

By equipping agents with different cognitive features we can use ABMs to represent different cognitively diverse (or uniform) populations, and to study their impact on some measure of success of the communal inquiry. We will look at the models studying these issues in Section 4.3.

2.4 Social diversity

A scientific community is socially diverse when its members endorse different non-epistemic values, such as moral and political ones, or when they have different social locations, such as gender, race and other aspects of demography (Rolin 2019). The importance of social diversity has long been emphasized in feminist epistemology, both for ethical and epistemic reasons. For instance, many have pointed out that social diversity is an important catalyst for cognitive diversity, which in turn is vital for the diversity of perspectives, and therefore for scientific objectivity (Longino 1990, 2002; Haraway 1989; Wylie 1992, 2002; Grasswick 2011; Anderson 1995; for a discussion on different notions of diversity in the context of scientific inquiry see Steel et al. 2018).

Moreover, in the fields of social psychology and organizational sciences, it has been argued that social diversity is epistemically beneficial even if it doesn’t promote cognitive diversity. Instead, it may counteract epistemically pernicious tendencies of homogeneous groups, such as unwarranted trust in each other’s testimony or unwillingness to share dissenting opinions (for an overview of the literature see Page 2017; Fazelpour & Steel 2022). While these hypotheses have received support from empirical studies, ABMs have proved a complementary testing ground, allowing for an investigation of minimal sets of conditions which need to hold for social diversity to be epistemically beneficial.

Another problem tackled by means of ABMs concerns factors that can undermine social diversity or disadvantage members of minorities in science. For instance, how does one’s minority status impact one’s position in a collaborative environment, given the norms of collaboration that can emerge in scientific communities? Or how does one’s social identity impact the uptake of one’s ideas? We will look at the models studying these issues in Section 4.4 and Section 4.7.

2.5 Peer-disagreement in science

Scientific disagreements are commonly considered vital for scientific progress (Kuhn 1977; Longino 2002; Solomon 2006). They typically go hand in hand with theoretical diversity (see Sections 2.1 and 2.2) and stimulate critical interaction among scientists, important for the achievement of scientific objectivity. Nevertheless, an inadequate response to disagreements may lead to premature rejections of fruitful inquiries, to fragmentation of scientific domains and hence to consequences that are counterproductive for the progress of science. This raises the question: how should scientists respond to disagreements with their peers, to lower the chance of a hindered inquiry? Which epistemic and methodological norms should they follow in such situations?

This issue has been discussed in the context of the more general debate on peer-disagreement in social epistemology. The problem of peer disagreement concerns the question: what is an adequate doxastic attitude towards p, upon recognizing that one’s peer disagrees on p? Should one follow a “Conciliatory Norm”, demanding, for instance, to lower the confidence in p, split the difference by taking the middle ground between the opponent’s belief and one’s own on the issue, or to suspend one’s judgment on p? Or should one rather follow a “Steadfast Norm” demanding to stick to one’s guns and keep the same belief with the same confidence as before encountering a disagreeing peer? (For initial arguments in favor of the Conciliatory Norm see, e.g., Elga 2007, Christensen 2010, Feldman 2006; for arguments in favor of the Steadfast Norm see, e.g., De Cruz & De Smedt 2013, Kelp & Douven 2012; for reasons why norms are context-dependent see, e.g., Kelly 2010 [2011], Konigsberg 2013, Christensen 2010, Douven 2010; for a recent critical review of the debate as applied to scientific practice see Longino 2022.)

Similarly, in case of scientific disagreements and controversies we can ask: should a scientist who is involved in a peer disagreement strive towards weakening her stance by means of a conciliatory norm, or should she remain steadfast? What makes this issue particularly challenging in the context of scientific inquiry is that we are not only interested in the epistemic question of an adequate doxastic response to a disagreement, but also in the methodological (or inquisitive) question of how the norms impact the success of collective inquiry as a process. In particular, if scientists encounter multiple disagreements throughout their research on a certain topic, will their collective inquiry benefit more from individuals adopting conciliatory attitudes or steadfast ones? ABMs naturally lend themselves as a method for investigating these issues: by modeling scientists as guided by different normative responses to a disagreement, we can study the impact of the norms on the communal inquiry. We will look at the models studying these issues in Section 4.5.
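The contrast between the two norms can be made concrete with a toy sketch in Python. It assumes, purely for illustration, that a scientist's attitude towards p is a single credence between 0 and 1, that the Conciliatory Norm is read as splitting the difference, and that each agent meets one disagreeing neighbour per round. The function names and the meeting scheme are hypothetical and do not reproduce any of the models surveyed in Section 4.5.

```python
def conciliate(own, peer):
    """Split the difference: move to the midpoint of the two credences."""
    return (own + peer) / 2


def stay_steadfast(own, peer):
    """Keep one's credence unchanged, whatever the peer believes."""
    return own


def meet(credences, norm):
    """One round in which agent i meets agent i+1 (mod n); updates are simultaneous."""
    n = len(credences)
    return [norm(credences[i], credences[(i + 1) % n]) for i in range(n)]


community = [0.25, 0.75]
print(meet(community, conciliate))      # both agents move to the midpoint
print(meet(community, stay_steadfast))  # the disagreement persists
```

A sketch of this kind only captures the doxastic side of the question. The methodological question raised above, namely how each norm affects the success of inquiry over time, requires in addition some representation of evidence gathering.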

2.6 Scientific polarization

Closely related to the issue of scientific disagreements is the problem of scientific polarization. While scientific controversies typically resolve over time, they may include periods of polarization, with different parts of the community maintaining mutually conflicting attitudes even after an extensive debate. But how and why does polarization emerge? Do scientific communities polarize only if scientists are too dogmatic or biased towards their viewpoints, or can polarization emerge even among rational agents?

Following a range of formal models in social and political sciences addressing a similar issue in society at large (for a review see Bramson et al. 2017), ABMs have been used to examine the emergence of polarization in the context of science. What makes agent-based modeling particularly apt for this task is not only that we can model different aspects of individual inquiry that may contribute to the emergence of polarization (such as different background beliefs, different communication networks, different trust relationships, and so on), but we can also observe the formation of polarized states, their duration (as stable or temporary states throughout the inquiry) and their features (such as the distribution of scientists across the opposing views). We will look at the models studying these issues in Section 4.6.

2.7 Scientific collaboration

As acquiring and analyzing scientific evidence can be highly resource-demanding for any individual scientist, scientific collaboration is a widespread form of group inquiry. Of course, this is not the only reason why scientists collaborate: incentives leading to collaborations range from epistemic ones (such as increasing the quality of research) to non-epistemic ones (such as striving for recognition). This raises the question: when is collaborating beneficial, and which challenges may occur in collaborative research? Inspired by these questions, philosophers of science have investigated why collaborations are beneficial (Wray 2002), which challenges they pose for epistemic trust and accountability (Kukla 2012; Wagenknecht 2015), what kind of knowledge emerges through collaborative research, such as collective beliefs or acceptances (M. Gilbert 2000; Wray 2007; Andersen 2010), which values are at stake in collaborations (Rolin 2015), what an optimal structure of collaborations is (Perović, Radovanović, Sikimić, & Berber 2016), and so on.

ABMs of collaboration were introduced to study the above and related questions, focusing on how collaborating can impact inquiry. While collaborations can indeed be beneficial, determining conditions under which they are such is not straightforward. For instance, depending on how scientists engage in collaborations, minorities in the community may end up disadvantaged. We will look at the models studying these issues in Section 4.7.

2.8 Summing up

Besides the above themes, ABMs have been applied to the study of numerous other topics in philosophy of science: from the allocation of research funding, testimonial norms, strategic behavior of scientists, all the way to different procedures for theory-choice (see Section 4.8 where we list models studying additional themes). Moreover, one ABM can simultaneously address multiple questions (for example, social diversity and scientific collaboration are often studied together).

To study the questions presented in this section, philosophers have utilized different representational frameworks. Even if models are aimed at the same research question, they are often based on different modeling approaches. For instance, individual scientists may be represented as Bayesian reasoners, as agents with limited memory, as agents searching for peaks on an epistemic landscape, as agents that form their opinions by averaging information they receive from other scientists and from their own inquiry, or as agents that are equipped with argumentative reasoning. Similarly, the process of evidence gathering may be represented in terms of pulls from probability distributions, as foraging on an epistemic or an argumentation landscape, or as receiving signals from others and from the world. We now look into some of the common modeling frameworks employed in the study of the above questions.

3. Common Modeling Frameworks

When developing a model examining certain aspects of scientific inquiry, one first has to decide on a number of relevant representational assumptions, such as:

- How to represent the process of inquiry and evidence gathering?

- What do agents in the simulation stand for (e.g., individual scientists, research groups, scientific labs, etc.)?

- What are the units of appraisal in scientific inquiry (e.g., hypotheses, theories, research programs, methods, etc.)?

- How do scientists reason and evaluate their units of appraisal?

- How do scientists exchange information?

Similarly, if we wish to model a scenario in which scientists bargain about the division of tasks or resources, we will have to decide how to represent their interactions and rewards they get out of them. These modeling choices are guided by the research question the model aims to tackle, as well as the epistemic aim of the model.

The majority of ABMs developed in philosophy of science are built as simple and highly idealized models. The simpler a model is, the easier it is to understand and analyze mechanisms behind the results of simulations (we return to this issue in Section 5). In this section, we delve into several common modeling frameworks that have been used in this way. Each framework offers a different take on the above representational choices and has served as the basis for a variety of ABMs. Particular models will not be discussed just yet—we leave this for Section 4.

3.1 Epistemic landscape models

Modeling the process of inquiry as an exploration of an epistemic landscape has its roots in models of fitness landscapes in evolutionary biology, first introduced by Sewall Wright (1932). By representing a genotype as a point on a multidimensional landscape, where the “height” of the landscape at that point corresponds to the genotype’s fitness, the model has been used to study evolutionary paths of populations.

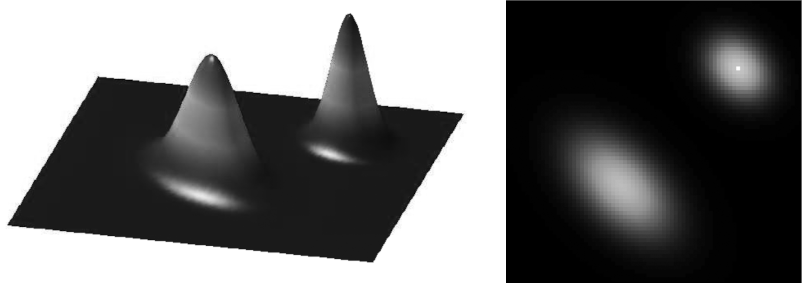

The idea of epistemic landscapes entered philosophy of science with the work of Weisberg and Muldoon (2009) and Grim (2009). In this reinterpretation of the model, the landscape represents a research topic, consisting of multiple projects or multiple hypotheses. A research topic can be understood either in a narrow sense (e.g., the study of treatments for a certain disease) or in a broader sense (e.g., the field of astrophysics). A point on the landscape stands for a certain hypothesis or a specific approach to investigating the topic. Approaches can vary in terms of different background assumptions, methods of inquiry, research questions, etc. Accordingly, the landscape can be modeled in terms of \(n\) dimensions, where \(n-1\) dimensions represent different aspects of scientific approaches, while the \(n\)th dimension (visualized as the “height” of the landscape) stands for some measure of epistemic value an agent gets by pursuing the corresponding approach. For instance, in the case of a three-dimensional landscape, the x and y coordinates form a two-dimensional disciplinary matrix in which approaches are situated, while the z coordinate measures their “epistemic significance” (see Figure 2). The latter can be understood in line with Kitcher’s idea that significant approaches are those that enable the conceptual and explanatory progress of science (Kitcher 1993: 95).

ABMs of science have utilized two-dimensional and three-dimensional landscapes, as well as the generalized framework of NK-landscapes, in which the number of dimensions and the ruggedness of the landscape are parameters of the model.[1] Scientists are modeled as agents who explore the landscape, trying to find its peak(s), that is, the epistemically most significant points. The framework allows for different ways of measuring the success and efficiency of inquiry: in terms of the success of the community in discovering the peak(s) of the landscape (rather than getting stuck in local maxima), the success in discovering any of the areas of non-zero significance, the time required for such discoveries, and so forth.

Figure 2: Two representations of Weisberg and Muldoon’s epistemic landscape: a three-dimensional representation on the left and a two-dimensional representation of the same landscape on the right, where the height of the landscape is represented by different shades of gray: the lighter the shade, the more significant the point on the landscape (adapted from Weisberg & Muldoon 2009).

3.1.1 Application to the problem of cognitive diversity

The framework of epistemic landscapes has been applied to a variety of research questions in philosophy of science. Weisberg and Muldoon (2009) introduced it to examine the impact of cognitive diversity on the performance of scientific communities. What makes the epistemic landscape framework particularly attractive for the study of cognitive diversity and the division of labor is its capacity to represent various research strategies (as different heuristics of exploring the landscape), as well as a coordinated distribution of research efforts (cf. Pöyhönen 2017).

Weisberg and Muldoon’s ABM employs a three-dimensional landscape (see Figure 2), built on a discrete toroidal grid, with two peaks representing the highest points of epistemic significance. To study cognitive diversity, the model examines three research strategies, implemented as three types of agents:

- The “controls” who aim to find a higher point on the landscape than their current location, while ignoring the exploration of other agents.

- The “followers” who aim to find already explored approaches in their direct neighborhood, which have a higher significance than their current location.

- The “mavericks” who also aim to find points of higher significance given previously explored points, but rather than following in the footsteps of other scientists, they prioritize the discovery of new, so far unvisited, points.

The control strategy represents individual learning that disregards social information, while the follower and the maverick strategies represent different ways of taking the latter into account. The model examines how homogeneous populations of each type of explorers, or heterogeneous populations consisting of diverse groups of explorers, impact the efficiency of the community in discovering the highest points on the landscape, and in covering all points of non-zero significance.
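The three strategies can be illustrated with a minimal sketch. It is not a reimplementation of Weisberg and Muldoon’s model: the grid size, the shape and position of the two peaks, and the movement rules (move to the best eligible neighboring point, wander randomly on zero-significance ground, otherwise stay put) are simplifying assumptions introduced here, and all function names are hypothetical.

```python
import random

SIZE = 20  # side length of the toroidal grid (assumed value)
PEAKS = [((5, 5), 10.0), ((14, 14), 6.0)]  # hypothetical peak positions and heights

def torus_gap(a, b):
    """Distance between two coordinates on a ring of length SIZE."""
    d = abs(a - b)
    return min(d, SIZE - d)

def significance(x, y):
    """Height of the landscape: two pyramid-shaped hills, zero elsewhere."""
    heights = [h - 2.0 * max(torus_gap(x, px), torus_gap(y, py))
               for (px, py), h in PEAKS]
    return max(0.0, max(heights))

def neighbors(x, y):
    """The eight surrounding cells, wrapping around the grid edges."""
    return [((x + dx) % SIZE, (y + dy) % SIZE)
            for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def step(pos, visited, strategy, rng):
    """One simplified move; `visited` holds points explored by anyone so far."""
    cells = neighbors(*pos)
    if strategy == "control":      # ignores what others have explored
        candidates = cells
    elif strategy == "follower":   # considers only already explored points
        candidates = [c for c in cells if c in visited]
    elif strategy == "maverick":   # considers only unexplored points
        candidates = [c for c in cells if c not in visited]
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    here = significance(*pos)
    better = [c for c in candidates if significance(*c) > here]
    if better:
        return max(better, key=lambda c: significance(*c))
    if here == 0.0:                # nothing to climb: wander (assumed rule)
        return rng.choice(candidates or cells)
    return pos                     # stay on the current point

def run(strategy, n_agents=10, steps=200, seed=0):
    """Homogeneous community; returns final positions and all visited points."""
    rng = random.Random(seed)
    positions = [(rng.randrange(SIZE), rng.randrange(SIZE)) for _ in range(n_agents)]
    visited = set(positions)
    for _ in range(steps):
        for i, pos in enumerate(positions):
            positions[i] = step(pos, visited, strategy, rng)
            visited.add(positions[i])
    return positions, visited
```

Comparing, for each strategy, how many final positions coincide with a peak and how many points of non-zero significance end up in `visited` gives rough analogues of the two success measures mentioned above.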

Following Weisberg and Muldoon’s contribution, the framework of epistemic landscapes became highly influential, resulting in various refinements and extensions of the model. For instance, Alexander, Himmelreich, and Thompson (2015) introduced the “swarm” strategy, which describes scientists who can with certain probability identify points of higher significance in their surrounding and adjust their approach so that it is similar, but different from approaches pursued by others who are close to them. Thoma (2015) introduced “explorer” and “extractor” strategies, the former of which describes a scientist seeking approaches very different from those pursued by others, while the latter (similar to Alexander and colleagues’ swarm researcher) seeks approaches that are similar to, yet distinct from those pursued by others. Moreover, Fernández Pinto and Fernández Pinto (2018) examined alternative rules for the follower strategy. Finally, Pöyhönen (2017) introduced a “dynamic” landscape, such that the exploration of patches “depletes” their significance.

3.1.2 Applications to network epistemology

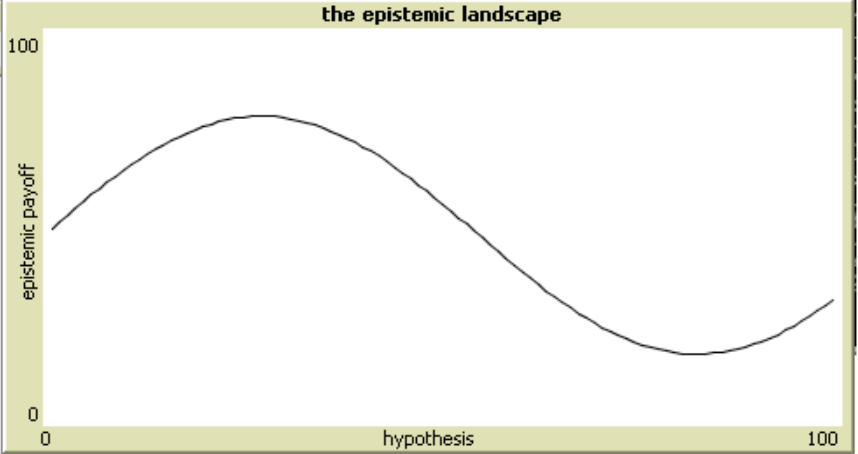

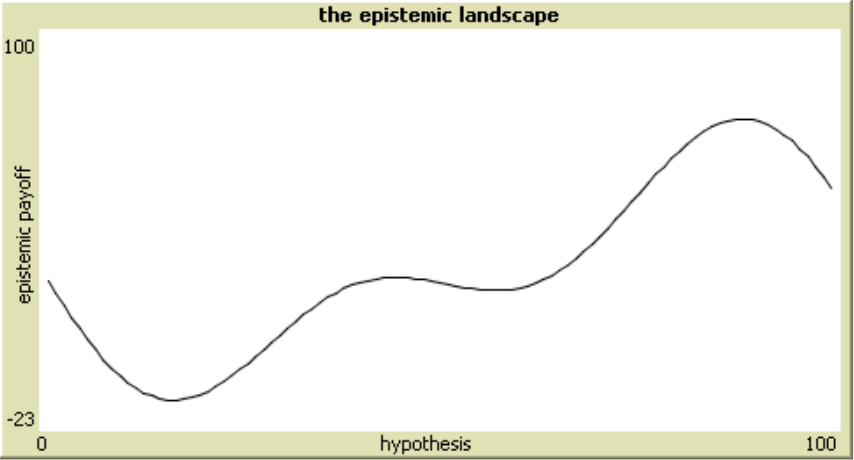

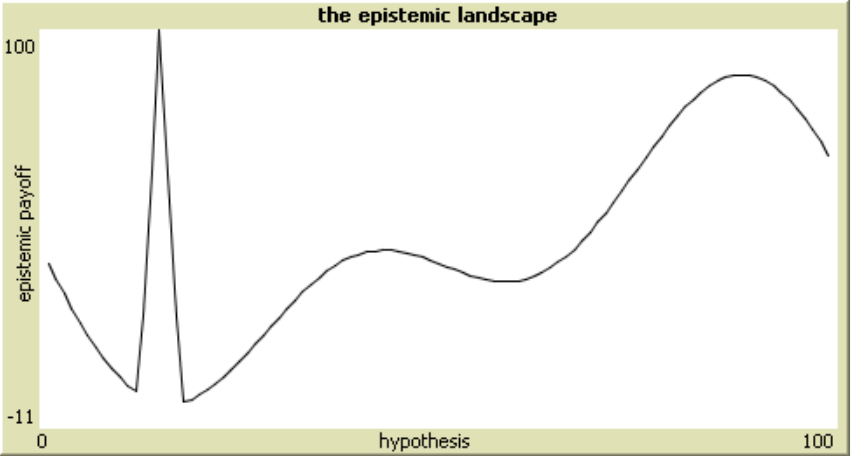

Another application domain of the epistemic landscape framework is the communication structure of science and its impact on communal inquiry. To study these issues, Grim and Singer (Grim 2009; Grim, Singer, Fisher, et al. 2013) developed ABMs employing a two-dimensional landscape, where points on the x-axis represent alternative hypotheses for a given domain of phenomena, while the y-axis indicates the “epistemic goodness” of each hypothesis or the epistemic payoff an agent gets by pursuing it. Depending on how well the best hypothesis is hidden, the shape of the epistemic terrain will represent a more or less “fiendish” research problem (see Figure 3). For instance, if the best hypothesis is a narrow peak in the landscape (as in Figure 3c), finding it will resemble a search for a needle in a haystack and therefore a fiendish research problem.[2] An inquiry is considered successful if any scientist (eventually followed by the rest of the community) discovers the highest peak on the landscape, while it is unsuccessful if the community converges on a local maximum. The model is used to study how different social networks with varying degrees of connectedness (such as those in Figure 1), and different epistemic landscapes with different degrees of fiendishness, impact the success of inquiry.

Figure 3: Epistemic landscapes with an increasing degree of fiendishness, from panel (a) to panel (c) (adapted from Grim, Singer, Fisher, et al. 2013: 443)

3.1.3 Other applications

Besides the above themes, the framework of epistemic landscapes has appeared in many other variants. For instance, De Langhe (2014a) proposed an ABM aimed at studying different notions of scientific progress, which makes use of a “moving epistemic landscape”: a landscape in which the significance of approaches can change as a result of exploration of other approaches. Balietti, Mäs, and Helbing (2015) developed an epistemic landscape model to study the relationship between fragmentation in scientific communities and scientific progress, in which agents explore a two-dimensional landscape, representing a space of possible answers to a certain research question, with the correct answer located at the center of the landscape. Currie and Avin (2019) proposed a reinterpretation of the three-dimensional landscape aimed at studying the diversity of scientific methods, in which the x and y axes stand for different investigative techniques or methods of acquiring evidence, and the z axis for the “sharpness” of the obtained evidence. Sharpness here refers to the relationship between research results and a hypothesis, where the more the evidence increases one’s credence in the hypothesis, the sharper it is. The model also represents the “independence” of evidence as the distance between two points on the landscape according to the x and y coordinates: the further apart two methods are, the less overlapping their background theories are, and the more independent the evidence obtained by them is.

Finally, epistemic landscape modeling has inspired related landscape models. The argumentation-based model of scientific inquiry by Borg, Frey, Šešelja, and Straßer (2018, 2019) is one such example. The model, inspired by abstract argumentation frameworks (Dung 1995), employs an “argumentation landscape”. The landscape is composed of “argumentation trees” that represent rival research programs (see Figure 4). Each program is a rooted tree, with nodes as arguments and edges as a “discovery relation”, representing a path agents take to move from one argument to another. Arguments in one theory may attack arguments in another theory. In contrast to epistemic landscapes, argumentation landscapes aim to capture the dialectic dimension of inquiry where some points on the landscape, assumed to be acceptable arguments, may subsequently be rejected as undefendable. This allows for an explicit representation of false positives (accepting a false hypothesis) as an argument on the landscape an agent accepts without knowing there exists an attack on it, and false negatives (rejecting a true hypothesis) as an argument an agent rejects, without knowing that it can be defended. The model has been used to study how argumentative dynamics among scientists, pursuing rival research programs, impacts their efficiency in acquiring knowledge under various conditions of inquiry (such as different social networks, different degrees of cautiousness in decision making, and so on).

Figure 4: Argumentation-based ABM by Borg, Frey, Šešelja, and Straßer (2019) employing an argumentative landscape. The landscape represents two rival research programs (RP), with darker shaded nodes standing for arguments that have been explored by agents and are thus visible to them; brighter shaded nodes stand for arguments that are not visible to agents. The biggest node in each RP is the root argument, from which agents start their exploration via the discovery relation, connecting arguments within one RP. Arrows stand for attacks from an argument in one RP to an argument in another RP.

3.2 Bandit models

In the previous section we saw how epistemic landscape models have been used to represent inquiries involving mutually compatible research projects (as in Weisberg and Muldoon’s model) as well as inquiries involving rival hypotheses (as in Grim and Singer’s model). Another framework used to study the latter scenario is based on “bandit problems”.

The name of bandit models comes from the term “one-armed bandit”—a colloquial name for a slot machine. Multi-armed bandit problems, introduced in statistics (Robbins 1952; Berry & Fristedt 1985) and studied in economics (Bala & Goyal 1998; Bolton & Harris 1999), are decision-making problems that concern the following kind of scenario: suppose a gambler is about to play several slot machines. Each machine gives a random payoff according to a probability distribution unknown to the gambler. To determine which machine will give a higher reward in the long run, the gambler has to experiment by pulling arms of the machines. This will allow her to learn from the obtained results. But which strategy should she use? For instance, should she alternate between the machines for the first couple of pulls, and then play only the machine that has given the highest reward during the initial test run? Or should she rather have a lengthy test phase before deciding which machine is better? While in the first case she might start exploiting her current knowledge before she has sufficiently explored, in the second case she might explore for too long. In this way the gambler faces a trade-off between exploitation (playing the machine that has so far given the best payoff) and exploration (continued testing of different machines). The challenge is thus to come up with the strategy that provides an optimal balance between exploration and exploitation, so as to maximize one’s total winnings.
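The first of the strategies just described (a test phase followed by playing only the apparently best machine) can be written down in a few lines. The sketch below assumes machines that pay out 1 with a fixed probability and 0 otherwise; the function name `explore_then_commit` and its parameters are introduced here for illustration only.

```python
import random

def explore_then_commit(probs, test_pulls, total_pulls, rng):
    """Pull every machine `test_pulls` times, then commit to the best one.

    `probs` holds the true success probabilities, which the gambler does
    not know; she only sees the 0/1 payoffs. Returns the index of the
    machine she commits to and her total winnings.
    """
    wins = [0] * len(probs)
    winnings = 0
    pulls_used = 0
    # Exploration: test each machine the same number of times.
    for arm, p in enumerate(probs):
        for _ in range(test_pulls):
            payoff = 1 if rng.random() < p else 0
            wins[arm] += payoff
            winnings += payoff
            pulls_used += 1
    # Exploitation: play only the machine with the best test record.
    best = max(range(len(probs)), key=lambda arm: wins[arm])
    for _ in range(total_pulls - pulls_used):
        winnings += 1 if rng.random() < probs[best] else 0
    return best, winnings

def average_winnings(probs, test_pulls, total_pulls, runs=2000, seed=0):
    """Average winnings over many independent gamblers."""
    rng = random.Random(seed)
    total = sum(explore_then_commit(probs, test_pulls, total_pulls, rng)[1]
                for _ in range(runs))
    return total / runs
```

Calling `average_winnings` with the same machines and the same `total_pulls` but different values of `test_pulls` makes the trade-off visible: a very short test phase often commits to the wrong machine, while a very long one spends many pulls on the worse machine.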

As we have seen above (see Section 2.2), the exploration/exploitation trade-off may also occur in the context of scientific research. Whether scientists are attempting to determine if a novel treatment for a disease is superior to an existing one, or selecting between two novel methods of evidence gathering with varying success rates, they may encounter the exploration/exploitation trade-off. In other words, they may have to decide when to cease exploring alternatives and begin exploiting the one that appears most suitable for the task.

ABMs based on bandit problems were first introduced to philosophy of science by Zollman (2007, 2010). A bandit ABM usually looks as follows. In analogy to slot machines, each scientific theory (or hypothesis, or method) is represented as having a designated probability of success. Scientists are typically modeled as “myopic” agents in the sense that they always pursue a theory they believe to give a higher payoff. They gather evidence for a theory by making a random draw from a probability distribution. Subsequently, they update their beliefs in a Bayesian way, on the basis of results of their own research (the gathered evidence) as well as results of neighboring scientists in their social network (such as those in Figure 1). Scientists are thus not modeled as passive observers of evidence for all the available theories, but rather as agents who actively determine the type of evidence they gather by choosing which theory to pursue. The inquiry of the community is considered successful if, for example, scientists reach a consensus on the better of the two theories.
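A minimal sketch of such a model is given below. It is loosely in the spirit of the models described above rather than a reproduction of any of them. It assumes that the success rate of the established theory (`p_old`) is known to everyone, that agents hold a Beta distribution over the success rate of the new theory, and that initial beliefs are drawn at random; the function names, parameter values and the two example networks are likewise assumptions made for illustration.

```python
import random

def make_cycle(n):
    """Each agent is linked to its two neighbors on a ring."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}

def make_complete(n):
    """Every agent is linked to every other agent."""
    return {i: set(range(n)) - {i} for i in range(n)}

def run_bandit(network, p_new=0.55, p_old=0.5, trials=10, rounds=500, seed=0):
    """Return each agent's final expectation of the new theory's success rate."""
    rng = random.Random(seed)
    n = len(network)
    # Beta(alpha, beta) beliefs about the new theory, random at the start.
    alpha = [rng.uniform(0.1, 4.0) for _ in range(n)]
    beta = [rng.uniform(0.1, 4.0) for _ in range(n)]
    for _ in range(rounds):
        results = {}
        for i in range(n):
            expected = alpha[i] / (alpha[i] + beta[i])
            # Myopic choice: test the new theory only if it looks better.
            if expected > p_old:
                results[i] = sum(rng.random() < p_new for _ in range(trials))
        if not results:
            break  # nobody tests the new theory, so beliefs no longer change
        for i in range(n):
            # Bayesian update on one's own and one's neighbors' results.
            for j in network[i] | {i}:
                if j in results:
                    alpha[i] += results[j]
                    beta[i] += trials - results[j]
    return [a / (a + b) for a, b in zip(alpha, beta)]

def consensus_on_better(beliefs, p_old=0.5):
    """True if every agent ends up favoring the (in fact better) new theory."""
    return all(b > p_old for b in beliefs)
```

Running `run_bandit` over many seeds on `make_cycle(n)` and on `make_complete(n)` and counting how often `consensus_on_better` holds is one way to compare communication structures within this toy setup.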

Bandit models of this kind build on the analytical framework developed by economists (Bala & Goyal 1998) who examined the relationship between different communication networks in infinite populations and the process of social learning. Applied to the context of science, this variation of bandit problems concerns the puzzle mentioned above in Section 2.2: assuming a scientific community is trying to determine which of the two available hypotheses offers a better treatment for a given disease, could the structure of the information flow among the scientists impact their chances of converging on the better hypothesis? By applying Bala and Goyal’s framework to the context of science and scenarios involving finite populations, Zollman initiated the field of network epistemology (see Zollman 2013), which studies the impact of social networks on the process of knowledge acquisition.

Besides the aim of examining network effects on the formation of scientific consensus, bandit models of scientific interaction have been applied to a number of other topics, such as the impact of preferential attachment on social learning (Alexander 2013), bias and deception in science (Holman & Bruner 2015, 2017; Weatherall, O’Connor, & Bruner 2020), optimal forms of collaboration (Zollman 2017), factors leading to scientific polarization (O’Connor & Weatherall 2018; Weatherall & O’Connor 2021b), effects of conformity (Weatherall & O’Connor 2021a), effects of demographic diversity (Fazelpour & Steel 2022), regulations of dual-use research (Wagner & Herington 2021), social learning in which a dominant group ignores or devalues testimony from a marginalized group (Wu 2023), or disagreements on the diagnosticity of evidence for a certain hypothesis (Michelini & Osorio et al. forthcoming).

3.3 Bounded confidence models of opinion dynamics

As we have seen above, the performance of a scientific community is sometimes assessed in terms of its success in achieving consensus on the true hypothesis. The question of how consensus is formed and which factors benefit or hinder its emergence is also studied within the theme of opinion dynamics in epistemic communities. Models of opinion dynamics aim at investigating how opinions form and change in a group of agents who adjust their views (or beliefs) over a number of rounds or time-steps, resulting in the formation of consensus, polarization, or a plurality of views. A modeling framework that has been particularly influential in this context originates from the work of Hegselmann and Krause (2002, 2005, 2006), drawing its roots from analytical models of consensus formation (French 1956; DeGroot 1974; Lehrer & Wagner 1981).[3]

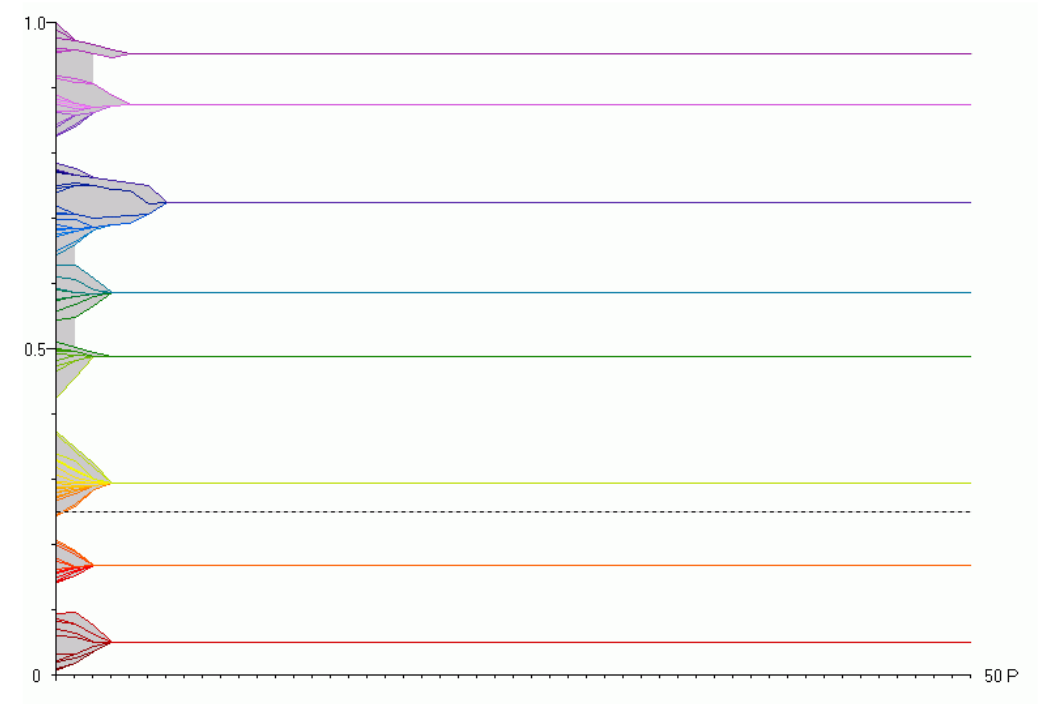

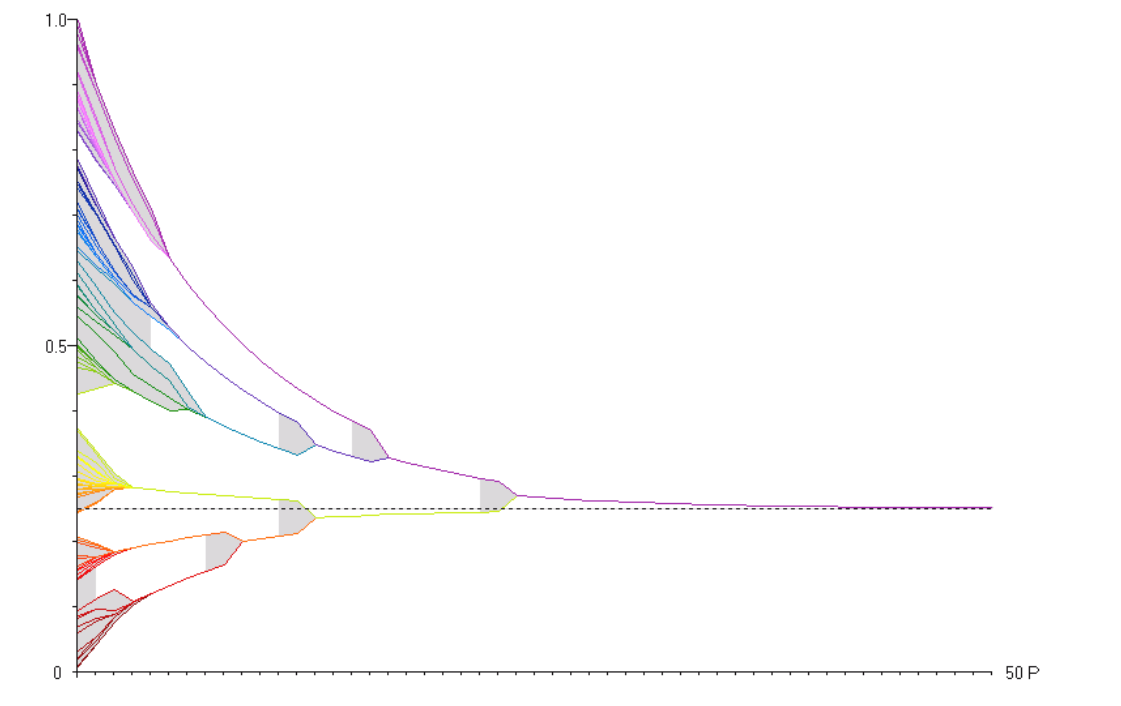

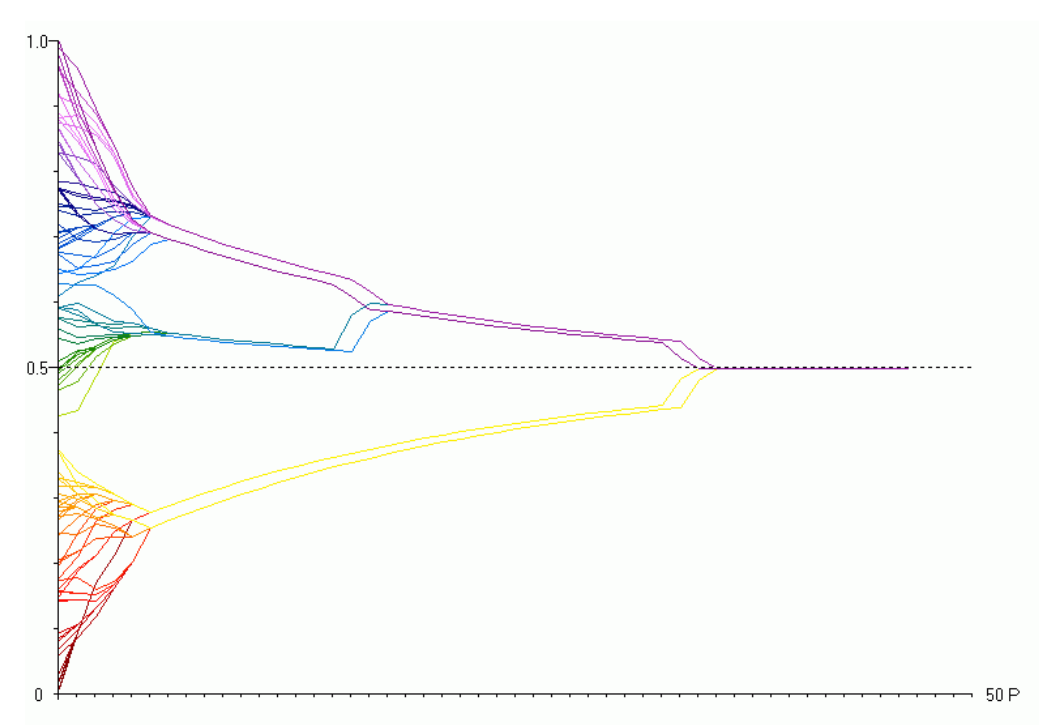

The basic model functions as follows. At the start of the simulation (that is, at time \(t=0\)) each agent \(x_i\) is assigned an opinion on a certain issue, expressed by a real number \(x_i(0) \in {(0,1]}\). Agents then exchange their opinions (or beliefs) with others and adjust them by taking the average of those beliefs that are “not too far away” from their own. These are opinions that fall within a “confidence interval” of size \(\epsilon\), which is a parameter of the model. In this way, agents have bounded confidence in the opinions of others. By iterating this process, the model simulates the social dynamics of opinion formation (see Figure 5a).

Applied to the context of scientific inquiry, scientists are usually represented as truth seeking agents who are trying to determine the value of a certain parameter \(\tau\) for which they only know that it lies in the interval \((0,1]\) (Hegselmann & Krause 2006). At the start of the simulation each agent is assigned a random initial belief. As the model runs, agents adjust their beliefs by receiving a (noisy) signal about \(\tau\) and by learning opinions of others who fall within their confidence interval. For instance, an agent’s belief can be updated in terms of the weighted average of others’ opinions and the signal from the world.[4] Such dynamics represent scientists as agents who are able to generate evidence that points in the direction of \(\tau\), though their updates are also influenced by their prior beliefs and the beliefs of their peers (see Figure 5b & Figure 5c).
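The update rule can be sketched as follows. The weighting parameter `alpha`, the noise-free signal, and the function names are assumptions made for this illustration. For a truth-seeking agent the sketch uses \(x_i(t+1) = \alpha\tau + (1-\alpha)\,\bar{x}_i(t)\), where \(\bar{x}_i(t)\) is the average of the opinions within distance \(\epsilon\) of \(x_i(t)\) (the agent’s own opinion included); agents who are not truth-seekers simply adopt \(\bar{x}_i(t)\).

```python
import random

def hk_step(opinions, eps, tau=None, alpha=0.0, truth_seekers=None):
    """One synchronous round of bounded confidence updating.

    opinions      -- current opinions, one number per agent
    eps           -- size of the confidence interval
    tau           -- position of the truth (None: purely social updating)
    alpha         -- weight a truth-seeker gives to the signal (assumed name)
    truth_seekers -- indices of truth-seeking agents (None: all agents)
    """
    updated = []
    for i, x in enumerate(opinions):
        # Opinions within the confidence interval, the agent's own included.
        peers = [y for y in opinions if abs(x - y) <= eps]
        social = sum(peers) / len(peers)
        seeks_truth = tau is not None and (truth_seekers is None or i in truth_seekers)
        if seeks_truth:
            updated.append(alpha * tau + (1 - alpha) * social)
        else:
            updated.append(social)
    return updated

def run_hk(n=100, eps=0.1, steps=30, seed=0, **kwargs):
    """Random initial opinions in (0, 1], then `steps` rounds of updating."""
    rng = random.Random(seed)
    opinions = [1.0 - rng.random() for _ in range(n)]
    history = [opinions]
    for _ in range(steps):
        opinions = hk_step(opinions, eps, **kwargs)
        history.append(opinions)
    return history
```

For example, `run_hk(eps=0.1)` simulates purely social updating, while `run_hk(eps=0.1, tau=0.7, alpha=0.1, truth_seekers=set(range(50)))` makes half of the agents truth-seekers; the value 0.7 for the truth is an arbitrary choice.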

Figure 5: Examples of runs of Hegselmann and Krause’s model, with the x-axis representing time steps in the simulation and the y-axis opinions of 100 agents. The change of each agent’s opinion is represented with a colored line. The value of \(\tau\) (the assumed position of the truth) is represented as a black dotted line. Figure (a) shows opinion dynamics in a community without any truth-seeking agents, resulting in a plurality of diverging views. Figure (b) shows the opinion dynamics in a community in which all agents are truth-seekers, which achieves a consensus close to the truth. Figure (c) shows the opinion dynamics in a community in which only half of the population are truth-seekers, which also achieves a consensus close to the truth (adapted from Hegselmann & Krause 2006; for other parameters used in the simulations see the original article).

An early application of the bounded confidence model was the problem of the division of cognitive labor (Hegselmann & Krause 2006). Since agents can be modeled as updating their beliefs only on the basis of social information (that is, by averaging over opinions of others who fall in one’s confidence interval) or on the basis of both social information and the signal from the world, the model can be used to study the opinion dynamics in a community in which not everyone is a “truth-seeker”. The model then examines what kind of division of labor between truth-seekers and agents who only receive social information allows the community to converge to the truth.

Subsequent applications of the framework in social epistemology and philosophy of science focused on the study of scientific disagreements and the question: what is the impact of different norms guiding disagreeing scientists on the efficiency of collective inquiry (Douven 2010; De Langhe 2013)? If agents update their beliefs both in view of their own research and in view of other agents’ opinions they represent scientists that follow a Conciliatory Norm by “splitting the difference” between their own view and the views of their peers (see above Section 2.5). In contrast, if they update their beliefs only in view of their own research they represent scientists that follow a Steadfast Norm. Other application themes of this framework include the impact of noisy data on opinion dynamics (Douven & Riegler 2010), opinion dynamics concerning complex belief states, such as beliefs about scientific theories (Riegler & Douven 2009), updating via an inference to the best explanation in a social setting (Douven & Wenmackers 2017), deceit and spread of disinformation (Douven & Hegselmann 2021), network effects and theoretical diversity in scientific communities (Douven & Hegselmann 2022), and so forth.

3.4 Evolutionary game-theoretic models of bargaining

Game theory studies situations in which the outcome of one’s action depends not only on one’s choice of an action, but also on actions of others. A “game” in this sense is a model of a strategic interaction between agents (“players”), each of whom has a set of available actions or strategies. Each combination of the players’ strategic responses has a designated outcome or a “payoff”. In contrast to traditional game theory, which focuses on agents’ rational decision-making aimed at maximizing their payoff in one-off interactions, the evolutionary approach focuses on repeated interactions in a population. A game is assumed to be played over and over again by players who are randomly drawn from a large population. While agents start with a certain strategic behavior, they learn and gradually adjust their responses according to specific rules called “dynamics” (for example, by imitating other players or by considering their own past interactions). As a result, successful strategies will diffuse across the community. In this way, evolutionary game-theoretic models can be used to explain how a distribution of strategies across the population changes over time as an outcome of long-term population-level processes. While the standard approach to game theory has primarily focused on combinations of players’ strategies that lead to a “stable” state, such as the Nash equilibrium—a state in which no player can improve their payoff by unilaterally changing their strategy—the evolutionary approach has been used to study how equilibria emerge in a community (see the entries on game theory and on evolutionary game theory).

Evolutionary game theory was originally introduced in biology (Lewontin 1961; Maynard Smith 1982). It subsequently gained the interest of social scientists and philosophers as a tool for studying cultural evolution, that is, for investigations into how beliefs and norms change over time (Axelrod 1984; Skyrms 1996). The models can be implemented using a mathematical treatment based on differential equations, or using agent-based modeling. While the former approach employs certain idealizations, such as an infinite population size or perfect mixing of populations, ABMs were introduced to study scenarios in which such assumptions are relaxed (see, e.g., Adami, Schossau, & Hintze 2016).

Applications of evolutionary game theory to social epistemology of science were especially inspired by models of bargaining, studying how different bargaining norms emerge from local interactions of individuals (Skyrms 1996; Axtell, Epstein, & Young 2001). The framework was introduced to the study of epistemic communities by O’Connor and Bruner (2019), building on Bruner’s (2019) model of cultural interactions.[5]

The basic idea of bargaining models is as follows: agents bargain over shares of available resources, where their demands and expectations about others’ demands evolve endogenously in view of their previous interactions. Applied to the context of science, bargaining concerns not only explicit negotiations over financial resources, but also situations in which scientists need to agree how to divide their workload in joint projects (O’Connor & Bruner 2019). For example, if two scientists are working on a joint paper or if they are organizing a conference together they will have to agree on how much time and effort each of them will devote to it. The norms determining such a division of labor may not be fair. For example, if scientist A puts much less effort into the project than scientist B, but they both get the same recognition for its success, B will be disadvantaged. Similarly, if they agree on A being the first author in a collaborative paper, while B ends up working much more on it, the outcome will again be unfair. Such norms may become entrenched in the scientific community, especially in the context of interactions between members of majority and minority groups in academia. But how do such norms emerge? Are biases favoring members of certain groups over others necessary for the emergence of such discriminatory patterns, or can they become entrenched due to other, perhaps more surprising factors?

Evolutionary game-theoretic models have been used to study these and related questions. Bargaining is represented as a strategic interaction between two agents, each of whom makes a demand concerning the issue at hand (for instance, a certain amount of workload, the order of authors’ names in a joint publication, and so on). Depending on each agent’s demand, each gets a certain payoff. For instance, suppose A and B wish to organize a conference and they start by negotiating who will cover which tasks. If they both make a high demand in the sense that each is willing to put only a minimal effort into the project while expecting the other to cover the rest of the tasks, they will fail to organize the event. If B, on the other hand, makes a low demand (by taking on a larger portion of the work) while A makes a high demand, they will be able to organize the conference, though the division of labor will be unfair (assuming they both get equal credit for successfully realizing the project).

The game, originating in the work of John Nash (1950), is called the “Nash demand game” (or the “mini-Nash demand game”). Each player in the game makes their demand (Low, Med or High). If the demands do not jointly exceed a given resource, each player gets what they asked for. If they do exceed the available resource, no one gets anything. In the example above, High can be interpreted as demanding to work less than the other on the organization of the conference, or demanding the first authorship in a joint paper while putting relatively lower effort into it. Similarly, Low corresponds to the willingness to take on a larger portion of the work (relative to the order of authors in case of the joint paper), while Med corresponds to demanding a fair distribution of labor. Table 1 displays the payoffs in such a game. Any combination of demands that gives a joint payoff of 10 is a Nash equilibrium, which means that each player’s strategy is a best response to the other player’s strategy. While a Nash equilibrium may correspond to a fair distribution of resources (if both players demand Med), it may also correspond to an unfair one (if one player demands Low and the other one High). This raises the question: which equilibrium will the community achieve if agents learn from their previous interactions? In particular, if the individuals are divided into sub-groups (which may be of different sizes), where their membership can be identified by means of markers visible to other agents, they can develop strategies conditional on the group membership of their co-players. Which equilibrium state will such a community evolve to? To study such questions, evolutionary models employ rules or dynamics that determine how players update their strategies and how the distribution of strategies across the community changes over time.[6]

| | Low | Med | High |
|---|---|---|---|
| Low | L,L | L,5 | L,H |
| Med | 5,L | 5,5 | 0,0 |
| High | H,L | 0,0 | 0,0 |

Table 1: A payoff table in the Nash demand game. The rows show the strategic options of Player 1 and the columns the options of Player 2. Each cell shows the payoff Player 1 gets for the given combination of options, followed by the payoff for Player 2. Players can make three demands: Low, Med and High for the total resource of 10. The payoffs are represented as L, M and H, where \(\mathrm{M}= 5\) (written as 5 in the table), \(\mathrm{L} < 5 < \mathrm{H}\), and \(\mathrm{L} + \mathrm{H} = 10\) (cf. O’Connor & Bruner 2019; Rubin & O’Connor 2018).
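The payoff structure in Table 1, and the claim that exactly the demand pairs with a joint payoff of 10 are Nash equilibria, can be checked mechanically. The sketch below picks the illustrative values L = 4 and H = 6, which are an assumption (any values with L < 5 < H and L + H = 10 behave the same way); the function names are hypothetical.

```python
from itertools import product

TOTAL = 10                      # the resource being divided
DEMANDS = {"Low": 4, "Med": 5, "High": 6}  # assumed values for L, M, H

def payoff(d1, d2):
    """Payoffs (Player 1, Player 2) for a pair of demands, as in Table 1."""
    a, b = DEMANDS[d1], DEMANDS[d2]
    # Compatible demands are met; incompatible demands leave both with nothing.
    return (a, b) if a + b <= TOTAL else (0, 0)

def is_nash(d1, d2):
    """True if neither player gains by unilaterally changing their demand."""
    p1, p2 = payoff(d1, d2)
    no_gain_1 = all(payoff(alt, d2)[0] <= p1 for alt in DEMANDS)
    no_gain_2 = all(payoff(d1, alt)[1] <= p2 for alt in DEMANDS)
    return no_gain_1 and no_gain_2

# All pure-strategy Nash equilibria of the game.
equilibria = [pair for pair in product(DEMANDS, repeat=2) if is_nash(*pair)]
print(equilibria)
# [('Low', 'High'), ('Med', 'Med'), ('High', 'Low')]
```

The list contains the fair outcome (Med, Med) together with the two unfair ones, which is why the question of which equilibrium a learning community ends up in is not settled by the payoff table alone.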

The modeling framework based on bargaining has been used to study norms in scientific collaborations and inequalities that may emerge through them. For example, O’Connor and Bruner (2019) investigate the emergence of discriminatory norms in academic interactions between minority and majority members. Rubin and O’Connor (2018) study the emergence of discriminatory patterns and their effects on diversity in collaboration networks, while Ventura (2023) examines the impact of the structure of collaborative networks on the emergence of discriminatory norms even if there are no visible markers of an agent’s membership in a sub-group. Moreover, Klein, Marx, and Scheller (2020) use a similar framework to study the relationship between rationality and inequality, that is, the success of different strategies for bargaining (such as maximizing expected utility) and their impact on the emergence of inequality.

3.5 Summing up

Besides the above frameworks, numerous additional approaches have been used to build simulations in social epistemology and philosophy of science. Some prominent frameworks not mentioned above include the Bayesian framework “Laputa” developed by Angere (2010—in Other Internet Resources) and Olsson (2011), aimed at studying social networks of information and trust, the model of argumentation dynamics by Betz (2013), or the influential framework by Hong and Page (2004) utilized in the study of cognitive diversity (for more on these frameworks see the entry on computational philosophy). Another evolutionary framework used in philosophy of science was proposed by Smaldino and McElreath (2016). The model represents a scientific community as a population consisting of scientific labs that employ culturally transmitted methodological practices, where the practices undergo natural selection from one generation of scientists to the next, and it has been employed, for example, to study the selection of conservative and risk-taking science (O’Connor 2019). In the next section we take a look at some of the central results obtained by ABMs in philosophy of science, based on the above and some other frameworks.

4. Central Results

To provide an overview of the main findings obtained by means of ABMs in philosophy of science, we will revisit the research questions discussed in Section 2 and look at how they have been answered through specific models.

4.1 Theoretical diversity and the incentive structure of science

Before we survey ABMs that study the incentive structure of science, we first look into the results of some analytical models which inspired the development of simulations. To get a more precise grip on how individual incentives shape epistemic coordination and theoretical diversity, philosophers introduced formal analytical models, inspired by research in economics. One of the central results from this body of literature is that the optimal distribution of labor can be achieved when scientists act according to their self-interest rather than following epistemic ends (e.g., Kitcher 1990; Brock & Durlauf 1999; Strevens 2003). More precisely, the models show that if we assume scientists aim at maximizing rewards from making discoveries, they will succeed in optimally distributing their research efforts if they take into account the probability of success of each research line and how many other scientists currently pursue it. Assuming that all scientists evaluate theories in the same way, their interest in fame and fortune, rather than epistemic goals alone, will lead some of them to select avenues that initially appear less promising.[7]

ABMs were introduced to address similar questions, but assuming more complex scenarios. For example, Muldoon and Weisberg (2011) developed an epistemic landscape model (see Section 3.1) to examine the robustness of Kitcher’s and Strevens’ results under the assumption that scientists have varying access to information about the pursued research projects in their community and the future success of those projects. Their results indicate that once scientists have limited information about what others are working on, or about the degree to which projects are likely to succeed, their self-organized division of labor fails to be optimal. Another example is the model by De Langhe & Greiff (2010) who generalize Kitcher’s model to a situation with multiple epistemic standards determining the background assumptions of scientists, acceptable methods, acceptable puzzles, and so on. The simulations show that once scientific practice is modeled as based on multiple standards, the incentive to compete fails to provide an optimal division of labor.

A closely related question concerns the “priority rule”—a norm that allocates credit for a scientific discovery to the first one to make it (Strevens 2003, 2011)—and its impact on the division of labor. While Kitcher’s and Strevens’s models suggested that the priority rule incentivizes the optimal distribution of scientists across rival research programs, a range of formal models, including ABMs, were developed to reexamine these results and shed additional light on this norm. For instance, Rubin and Schneider (2021) examine what happens if credit is assigned by individuals, rather than by the scientific community as a whole, as in Strevens’ model. They further suppose that news about simultaneous discoveries by two scientists spreads through a networked community. The simulations show that more connected scientists are more likely to gain credit than the less connected ones, which may, on the one hand, disadvantage minority members in the community, and on the other hand, undermine the role of the priority rule as an incentive resulting in the optimal division of labor. Besides the question of how the priority rule impacts the division of labor, ABMs have also been used to study other effects of the priority rule. For example, Tiokhin, Yan, and Morgan (2021) develop an evolutionary ABM showing that the priority rule leads the scientific community to evolve towards research based on smaller sample sizes, which in turn reduces the reliability of published findings.

The impact of incentives on the division of labor in science has also been analyzed in terms of incentives to “exploit” existing projects in contrast to incentives to “explore” novel ideas. For instance, De Langhe (2014b) developed a generalized version of Kitcher and Strevens’ model in which agents achieve the optimal division of labor by weighing up relative costs and benefits of exploiting available theories and exploring new ones. Within the framework of bandit models and network epistemology (see Section 3.2), Kummerfeld and Zollman (2016) proposed an ABM that examines a scenario in which scientists face two rival hypotheses, one of which is better though the agents don’t know which one. While agents always choose to pursue (or exploit) a hypothesis that seems more promising, they may also occasionally research (and thereby explore) the alternative one. The simulations show that if the community is left to be self-organized in the sense that each scientist explores to the extent that they consider individually optimal, agents will be incentivized to leave exploration to others. As a result, scientists will fail to develop a sufficiently high incentive for exploring novel ideas, that is, an incentive which would be optimal from the perspective of their community at large.

4.2 Theoretical diversity and the communication structure of science

4.2.1 The “Zollman effect”

The study of theoretical diversity in terms of network epistemology led to a novel hypothesis: that the communication structure of a scientific community can promote or hinder the emergence of theoretical diversity and thereby impact the division of cognitive labor. The idea was first demonstrated by bandit models developed by Zollman (2007, 2010; see Section 3.2) and came to be known as the “Zollman effect” (Rosenstock, Bruner, & O’Connor 2017). ABMs by Grim (2009), Grim, Singer, Fisher, and colleagues (2013), and Angere & Olsson (2017) produced similar findings based on different modeling frameworks.[8] These models show that in highly connected communities early erroneous results may spread quickly among scientists, leading them to investigate a sub-optimal line of inquiry. As a result, scientists may prematurely abandon the exploration of different hypotheses, and instead exploit the inferior ones. In light of these findings, Zollman (2010) emphasized that for an inquiry to be successful it needs a property of “transient diversity”: a process in which a community engages in a parallel exploration of different theories, which lasts sufficiently long to prevent a premature abandonment of the best theory, but which eventually gets replaced by a consensus on it. Besides the result that connectivity can be harmful, it has also been shown that learning in less connected networks is slower, which indicates a trade-off between accuracy and speed in the context of social learning (Zollman 2007; Grim, Singer, Fisher, et al. 2013).
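The mechanism behind this effect can be illustrated with a minimal Python sketch of a two-armed bandit on a network. It is not Zollman’s implementation: the function names (`cycle`, `complete`, `run`, `success_rate`), the Beta-distributed priors, and all parameter values are assumptions chosen for illustration. Agents who currently believe that the uncertain hypothesis is better test it and share their results with their neighbors, while agents who believe otherwise stop producing evidence about it.

```python
import random


def cycle(n):
    """Sparse network: each agent is linked to two neighbors on a ring."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def complete(n):
    """Fully connected network: each agent is linked to everyone else."""
    return {i: set(range(n)) - {i} for i in range(n)}


def run(network, p_good=0.55, trials=10, rounds=200, rng=None):
    """Return True if all agents end up favoring the objectively better hypothesis.

    The known hypothesis succeeds with probability 0.5; the uncertain one
    succeeds with probability p_good (assumed values, for illustration only).
    """
    if rng is None:
        rng = random.Random()
    n = len(network)
    # Each agent holds a Beta(a, b) belief about the uncertain hypothesis.
    beliefs = [[rng.uniform(0.01, 4), rng.uniform(0.01, 4)] for _ in range(n)]
    for _ in range(rounds):
        results = {}
        for i, (a, b) in enumerate(beliefs):
            # Only agents who expect the uncertain hypothesis to be better test it.
            if a / (a + b) > 0.5:
                results[i] = sum(rng.random() < p_good for _ in range(trials))
        if not results:
            break  # nobody explores any more: the community is locked in
        for i in range(n):
            # Agents update on their own results and on their neighbors' results.
            for j in network[i] | {i}:
                if j in results:
                    beliefs[i][0] += results[j]
                    beliefs[i][1] += trials - results[j]
    return all(a / (a + b) > 0.5 for a, b in beliefs)


def success_rate(make_network, n_agents=10, runs=200, seed=0):
    """Fraction of simulation runs that converge on the better hypothesis."""
    rng = random.Random(seed)
    wins = sum(run(make_network(n_agents), rng=rng) for _ in range(runs))
    return wins / runs
```

Comparing `success_rate(cycle)` with `success_rate(complete)` across different values of `p_good`, `trials`, and `n_agents` gives a rough sense of how network density interacts with the difficulty of the inquiry. Whether this particular sketch reproduces the reported pattern for a given parameter setting is something to check by running it, not something it guarantees.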

Subsequent studies showed, however, that the Zollman effect is not very robust within the parameter space of the original model (Rosenstock et al. 2017). In particular, the result holds for those parameters that can be considered characteristic of difficult inquiry: scenarios in which there is a relatively small number of scientists, the evidence is gathered in relatively small batches, and the difference between the objective success of the rival hypotheses is relatively small. Moreover, additional models showed that if the diversity (and hence, exploration) of pursued hypotheses is generated in some other way, more connected communities may outperform less connected ones. For instance, Kummerfeld and Zollman (2016) showed that relaxing the trade-off between exploration and exploitation by allowing agents to occasionally gain information about the hypothesis they are not currently pursuing is a way to generate diversity, leading a fully connected community to perform better than less connected ones. Another way of generating diversity was examined by Frey and Šešelja (2020): they show that if scientists have a dose of caution or “rational inertia” when deciding whether to abandon their current theory and start pursuing the rival, a fully connected community gets a sufficient degree of exploration to outperform less connected groups. Similar points have been made with ABMs based on other modeling frameworks, such as the bounded confidence model by Douven and Hegselmann (2022), or an argumentation-based ABM by Borg, Frey, Šešelja, and Straßer (2018), each of which shows a different way of preserving transient diversity in spite of a high degree of connectivity.

4.2.2 The spread of disinformation

ABMs studying epistemically pernicious strategies in scientific communities have largely employed network epistemology bandit models (see Section 3.2). For instance, a model by Holman and Bruner (2015) examines how an interference of industry-sponsored agents may impact the information flow in the medical community, and which strategies scientists could employ to protect themselves from such a pernicious influence. For this purpose, they consider a scenario in which medical doctors regularly communicate with industry-sponsored agents about the efficacy of a certain pharmaceutical product as a treatment for a given disease. Since the industry-sponsored agents are motivated by financial rather than epistemic interests and they are unlikely to change their minds no matter how much opposing evidence they receive, they are not merely biased, but “intransigently biased”. Their simulations indicate two ways in which a scientific community can protect itself from the pernicious influence of the intransigently biased agent: first, by increasing their connectivity, and second, by learning how to reorganize their social network on the basis of trustworthiness, which leads them to eventually ignore the biased agent. In a follow-up model, Holman and Bruner (2017) also show how the industry can bias a scientific community without corrupting any of the individual scientists that compose it, but by simply helping industry-friendly scientists to have successful careers.

Using a similar network-epistemology approach, Weatherall, O’Connor and Bruner (2020) developed a bandit model to study the “Tobacco strategy”, employed by the tobacco industry in the second half of the twentieth century to mislead the public about the negative health effects of smoking (analyzed in detail by Oreskes & Conway 2010). In particular, the model examines how certain deceptive practices can mislead public opinion without even interfering with (epistemically driven) scientific research. The authors look into two such propagandist strategies: a “selective sharing” of research results that fit the industry’s preferred position, and a “biased production” of research results where additional research gets funded, but only suitable findings get published. The results show that both strategies are effective in misleading policymakers about the scientific output under various examined parameters since in both cases the policymakers update their beliefs on the basis of a biased sample of results, skewed towards the worse theory. The authors also look into strategies employed by journalists reporting on scientific findings and show that incautiously aiming to be “fair” by reporting an equal number of results from both sides of the controversy may result in the spread of misleading information.
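The logic of “selective sharing” can be sketched in a few lines of Python. This is a simplified illustration, not the authors’ model: the function names, the Beta-style belief update, the uniform prior, and the threshold for what counts as a result favoring the worse theory are all assumptions. Each study reports the number of successes in a fixed number of trials of the better theory, and the propagandist forwards only those studies in which fewer than half of the trials succeeded.

```python
import random


def run_studies(n_studies, n_trials, p_true, rng):
    """Simulate honest studies: each returns the number of successes in n_trials."""
    return [sum(rng.random() < p_true for _ in range(n_trials))
            for _ in range(n_studies)]


def selective_sharing(studies, n_trials):
    """Propagandist's filter (assumed rule): keep only studies in which
    fewer than half of the trials succeeded."""
    return [k for k in studies if k < n_trials / 2]


def posterior_mean(studies, n_trials, prior=(1.0, 1.0)):
    """Observer's expected success rate after a Beta-style update on the studies."""
    a, b = prior
    for k in studies:
        a += k
        b += n_trials - k
    return a / (a + b)
```

For example, with `studies = run_studies(200, 10, 0.55, random.Random(0))`, one can compare `posterior_mean(studies, 10)` with `posterior_mean(selective_sharing(studies, 10), 10)`. Every forwarded study is genuine, yet an observer who relies on the forwarded sample alone updates on evidence skewed towards the worse theory.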

Another example of ABMs developed to examine deception in science is an argumentation-based model by Borg, Frey, Šešelja, and Straßer (2017, 2018; see Section 3.1.3). Assuming the context of rival research programs where scientists have to identify the best out of three available ones, deceptive communication is represented in terms of agents sharing only positive findings about their theory, while withholding news about potential anomalies. The underlying idea is that deception consists in providing some (true) information while at the same time withholding other information, which leads the receiver to make a wrong inference (Caminada 2009). Unlike the previous two models discussed in this section, where not all agents display biased or deceptive behavior, Borg et al. study network effects in a population consisting entirely of deceptive scientists. Such a scenario represents a community that is driven, for instance, by confirmation bias and an incentive to shield one’s research line from critical scrutiny. The simulations show that, first, reliable communities (consisting of no deceivers) are significantly more successful than the deceptive ones, and second, increasing the connectivity makes it more likely that deceptive populations converge on the best theory.

4.3 Cognitive diversity

As we have seen in Section 2.1, the problem of cognitive diversity concerns the relation between the diversity of cognitive features of scientists (including their background beliefs, reasoning styles, research preferences, heuristics and strategies) and the inquiry of the scientific community. Philosophers of science have especially been interested in how the division of labor across different research heuristics impacts the performance of the community.

A particularly influential ABM tackling this issue is the epistemic landscape model by Weisberg and Muldoon (2009). The model examines the division of labor catalyzed by different research strategies, where scientists can act as “controls”, “followers”, or “mavericks” (see Section 3.1 above). In view of the simulations, Weisberg and Muldoon argue that, first, mavericks outperform other research strategies. Second, if we consider the maverick strategy to be costly in terms of the necessary resources, then introducing mavericks to populations of followers can lead to an optimal division of labor. While Weisberg and Muldoon’s ABM eventually turned out to include a coding error (Alexander et al. 2015), their claim that cognitive diversity improves the productivity of scientists received support from adjusted versions of the model, albeit with some qualifications.

First, Thoma’s (2015) model showed that cognitively diverse groups outperform homogeneous ones if scientists are sufficiently flexible to change their current approach and sufficiently informed about the research conducted by others in the community. Second, Pöyhönen (2017) confirmed that if we consider the maverick strategy to be slightly more time-consuming, mixed populations of mavericks and followers may outperform communities consisting only of mavericks in terms of the average epistemic significance of the obtained results. According to Pöyhönen, another condition that needs to be satisfied if cognitive diversity is to be beneficial concerns the topology of the landscape: diverse populations outperform homogeneous ones only in the case of more challenging inquiries (represented in terms of rugged epistemic landscapes), but not in the case of easy research problems (represented by landscapes such as Weisberg and Muldoon’s; see Figure 2). The importance of the topology of the landscape was also emphasized by Alexander and colleagues (2015), who use NK-landscapes to show that whether social learning is beneficial or not crucially depends on the landscape’s topology. Finally, there are other research strategies (such as the “swarm” strategy of Alexander and colleagues; see Section 3.1.1) that outperform Weisberg and Muldoon’s mavericks, while small changes in the follower strategy may significantly improve its performance (Fernández Pinto & Fernández Pinto 2018).

Another aspect of cognitive diversity that received attention in the modeling literature concerns the relationship between diversity and expertise. This issue was first studied in economics by Hong and Page (2004). The model examined how heuristically diverse groups, consisting of agents with diverse problem-solving approaches, compare with groups consisting solely of experts with respect to finding a solution to a particular problem. Hong and Page’s original result suggested that “diversity trumps ability” in the sense that groups consisting of individuals employing diverse heuristic approaches outperform groups consisting solely of experts, that is, agents who are the best “problem-solvers”. While this finding became quite influential, subsequent studies showed that it does not hold robustly once more realistic assumptions about expertise are added to the model (Grim, Singer, Bramson, et al. 2019; Reijula & Kuorikoski 2021; see also Singer 2019).

The problem of cognitive diversity and the division of labor in scientific communities was also studied with Hegselmann and Krause’s bounded-confidence model (Hegselmann & Krause 2006; see Section 3.3 above). The ABM examines opinion dynamics in a community that is diverse in the sense that only some individuals are active truth seekers, while others adjust their beliefs by exchanging opinions with those agents who have sufficiently similar beliefs to their own. Hegselmann and Krause investigate conditions under which such a community can reach consensus on the truth, combining agent-based modeling and analytical methods. They show that, on the one hand, if all agents in the model are truth seekers, they achieve a consensus on the truth. On the other hand, if the community divides labor, its ability to reach a consensus on the truth will depend on the number of truth-seeking agents, the position of the truth relative to the agents’ opinions, the degree of confidence determining the scope of opinion exchange, and the relative weight of the truth signal (in contrast to the weight of social information). For instance, under certain parameter settings even a single truth-seeking agent will lead the rest of the community to the truth.
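The dynamics described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions rather than the authors’ code: the names `hk_step` and `simulate`, the synchronous updating, and the example parameter values are hypothetical. Each agent averages the opinions of all agents within a confidence interval `eps` of its own opinion (including itself) and then mixes that social average with the truth according to its individual weight `alpha`, where `alpha = 0` stands for an agent who is not a truth seeker.

```python
def hk_step(opinions, alphas, truth, eps):
    """One synchronous update of a bounded-confidence model with a truth signal."""
    new_opinions = []
    for x, alpha in zip(opinions, alphas):
        # Peers are all agents (including oneself) whose opinion lies within eps.
        peers = [y for y in opinions if abs(x - y) <= eps]
        social = sum(peers) / len(peers)
        # alpha weighs the truth signal against the social information.
        new_opinions.append(alpha * truth + (1 - alpha) * social)
    return new_opinions


def simulate(opinions, alphas, truth, eps, steps=200):
    """Iterate the update rule for a fixed number of steps."""
    for _ in range(steps):
        opinions = hk_step(opinions, alphas, truth, eps)
    return opinions
```

Calling `simulate([0.4, 0.5, 0.6], [0.1, 0.0, 0.0], truth=0.7, eps=0.3, steps=1000)` gives a case with a single truth seeker whose peers all remain within its confidence interval, so that the whole group is gradually drawn towards the truth. Setting every `alpha` to zero and choosing a small `eps` instead leaves distant opinions unchanged, since such agents never exchange opinions with one another.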

4.4 Social diversity